I’ve been working with footage shot in the Panoptic Studio at CMU, a markerless motion capture system developed by CMU CS and Robotics PhD students. I’m interested in volumetric capture of the human body. I don’t mean rigging a model to a skeleton, as in traditional motion capture. I mean capturing the actual photographic situation in 3D, which in my case is the human form. I am collaborating with the Pittsburgh dance and music duo Slowdanger, made up of Anna Thompson and Taylor Knight. My aim is to capture actual video of real people volumetrically, and to create situations in which viewers can experience and interact with them.

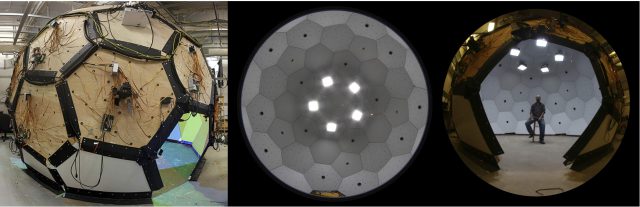

The research question of how to work with and display this data is challenging from multiple perspectives. First, a capture system must exist to generate the data. The Panoptic Studio uses 480 cameras and 10 Kinects to capture video and depth data in 360 degrees. Second, the material is extremely expensive to process in terms of a computer’s RAM, CPU, and GPU (graphics card).

I worked for multiple weeks to convert the resulting point clouds into textured meshes. A point cloud is a set of points that together make up a three-dimensional form, and each point has an x, y, z position and an r, g, b color. I exported the meshes as OBJ sequences so they could be manipulated in a 3D program such as Maya or Unity. This had minimal success. I was able to get a few meshes to load and animate in Unity, but without textures. I then decided to work with the point clouds themselves, to see what could be done with them. The resulting tests load the PLY files (in this case 900 of them, or 900 frames) and display them (draw them to the screen) one after the other, creating an animation. I experimented with skipping points to create a less dense point cloud. I also tried displaying nearly every point, to see how close I could get to photographic representation.
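The playback approach described above (load each frame’s PLY file, optionally skip points, then draw the frames in sequence) can be sketched in Python roughly as follows. This is a minimal illustration under stated assumptions, not the code used for these tests. It assumes ASCII PLY files whose vertex lines begin with x, y, z, red, green, blue. It also assumes zero-padded frame numbers in the filenames, so that sorting gives playback order. The helper names (`parse_ascii_ply`, `decimate`, `load_sequence`, `frame_at`) are hypothetical, and drawing is left to whatever renderer is in use.

```python
import glob
import os
from typing import Iterator, List, Tuple

# One point: position (x, y, z) and color (r, g, b).
Point = Tuple[float, float, float, int, int, int]


def parse_ascii_ply(text: str) -> List[Point]:
    """Parse the vertex list of an ASCII PLY file.

    Assumption: 'format ascii 1.0', and each vertex line starts with
    x y z red green blue in that order. Check your own files' headers.
    """
    lines = text.splitlines()
    if not lines or lines[0].strip() != "ply":
        raise ValueError("not a PLY file")
    vertex_count = 0
    body_start = None
    for i in range(1, len(lines)):
        tokens = lines[i].split()
        if len(tokens) >= 2 and tokens[0] == "format" and tokens[1] != "ascii":
            raise ValueError("binary PLY is not handled in this sketch")
        if len(tokens) >= 3 and tokens[0] == "element" and tokens[1] == "vertex":
            vertex_count = int(tokens[2])
        if tokens == ["end_header"]:
            body_start = i + 1
            break
    if body_start is None:
        raise ValueError("header has no end_header line")
    points: List[Point] = []
    for line in lines[body_start:body_start + vertex_count]:
        v = line.split()
        points.append((float(v[0]), float(v[1]), float(v[2]),
                       int(v[3]), int(v[4]), int(v[5])))
    return points


def decimate(points: List[Point], step: int) -> List[Point]:
    """Keep every `step`-th point; step=1 keeps them all (densest cloud)."""
    if step < 1:
        raise ValueError("step must be at least 1")
    return points[::step]


def load_sequence(folder: str, step: int = 1) -> Iterator[List[Point]]:
    """Yield one (decimated) frame per PLY file, in filename order.

    Frames are read lazily, one at a time, rather than all held in RAM.
    """
    for path in sorted(glob.glob(os.path.join(folder, "*.ply"))):
        with open(path, "r") as f:
            yield decimate(parse_ascii_ply(f.read()), step)


def frame_at(time_s: float, fps: float, n_frames: int) -> int:
    """Index of the frame to draw at time_s, looping over the sequence."""
    return int(time_s * fps) % n_frames
```

At a hypothetical 30 fps, 900 frames play for 30 seconds, and `frame_at(31.0, 30, 900)` returns 30, so playback loops. A larger `step` in `decimate` trades photographic density for memory and draw time, which is the trade-off explored in these tests.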

The resulting artifacts are proofs of concept rather than artworks in and of themselves. I was not originally thinking of this, but the footage has been likened to the early film tests of Edison, Muybridge, and Cocteau. It’s interesting to me that such a new technology generates material that feels very old. At the same time, it is oddly appropriate. With this medium, we are at roughly the same point in creating visual content as those makers were with their early film tests in the late 1800s and early 1900s. It is such a challenge simply to process and display this content that we are experimenting with the form in similar ways.

https://www.youtube.com/watch?v=-CM9W6pYSEo

In a further iteration of this material, I would like to bring this content into an Oculus headset to emphasize its volumetric qualities, giving the viewer the ability to move around the forms in 360 degrees.

Here are the tests:

Creating volumetric films, or “4D holograms,” is attracting investor and industry attention because it takes virtual, augmented, and mixed reality into a new domain beyond CGI. There is a race to see who will do it best, first, or most convincingly. 8i and 4D Views are two such companies. I feel that many assumptions and exaggerated claims are currently being made about this technology. It’s interesting to look at the types of content that come out of a very nascent technology, and to draw parallels between the early filmmaking and photography community and this industry and research. Who is making what, who is capturing whom, and why? For whom?

The Panoptic Studio at CMU, however, does not come from a filmmaking or VFX motivation. It comes from a machine learning research question: detecting skeletons in order to interpret social interactions through body language. Thus, the question of how to reconstruct these captures has not been heavily researched.

Anonymous feedback from group crit, 4/13/2017:

Panoptic Studio Dome at CMU and Slowdanger (dancers). Veronica Scanner

There has to be more to using/making with volumetric capture than just the ability to look around the video from multiple viewpoints (or that ability has to be exploited in more interesting ways). I don’t think simply putting it into VR is significantly more interesting than the 2D GIFs presented.

Sudden pivot into virtual reality. Any examples of volumetric capture in virtual reality?

Bill Gates jumping over a chair – https://www.youtube.com/watch?v=8TCxE0bWQeQ

Related work… Veronica Scanner in London (accurate volumetric 3D scanning for high fidelity 3D printing):

https://www.royalacademy.org.uk/exhibition/projects-the-veronica-scanner

Lots of hard work went into this project; it’s kind of hard to tell from your presentation, though. ++ (We know how hard this was! You should make sure you get credit for it from outside viewers.)

An idea for removing the floor: use keying (as in chroma key) to remove that specific color (tan?).

The costumes/outfits are curious, what is the choice there?

I’d like to see clips with more dramatic movement… what do those look like?

What are the people doing? What’s the broader context for this project?

^ the final music video would be helpful in clearing up the context
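The floor-removal suggestion in the crit notes could be applied to the point colors directly, rather than to 2D video. Here is a minimal Python sketch. It assumes points stored as (x, y, z, r, g, b) tuples and measures plain Euclidean distance in RGB. The key color and threshold are hypothetical values to tune by eye. (210, 180, 140) is the CSS “tan,” not a measured floor color, and `color_key` is a hypothetical helper name.

```python
import math
from typing import List, Tuple

# One point: position (x, y, z) and color (r, g, b).
Point = Tuple[float, float, float, int, int, int]

# Hypothetical key color (CSS "tan"); sample the real floor color instead.
TAN = (210, 180, 140)


def color_key(points: List[Point], key_rgb: Tuple[int, int, int],
              threshold: float) -> List[Point]:
    """Drop points whose color lies within `threshold` of key_rgb.

    Distance is plain Euclidean distance in RGB space (0-255 per channel);
    a point exactly at `threshold` is dropped.
    """
    kept: List[Point] = []
    for p in points:
        if math.dist(p[3:6], key_rgb) > threshold:
            kept.append(p)
    return kept
```

Skin tones can sit close to tan in RGB. A threshold loose enough to remove the whole floor may therefore also remove points on the dancers, so it needs care.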