Audience – rAndom International from Chris O’Shea on Vimeo.

A few weeks back, Mike sent me a link to this webcomic about the process of coming up with new and off-the-wall ideas. It made me pretty happy — as did the protagonist’s almost manic enthusiasm about the possibility of letting the stars see us.

This project doesn’t quite make it to the stars, but it’s a powerful, whimsical and ‘reversive’ installation that makes us consider the purpose of objects and the purpose of ourselves.

The idea of having mirrors turn their faces to follow a person isn’t all that extreme — we see similar types of motion with solar panels following the sun. In my opinion the success of this installation is all in the details: the decision to give each mirror a set of ‘feet’ instead of a tripod or a stalk, or the fact that each mirror has an ‘ambient’ state as well as a reactive state (see video). They are capable of seeing you, but they weren’t made to see you — they seem to pay attention to you because they decide they want to, and so their purpose transcends their task, in a way.

There is a strange subtlety in their positioning too: clumped, but random, like commuters in a train station or traders on Wall Street. Everything comes together to give the mirrors an eerie humanlike quality, and makes the participant want to engage, because maybe something really is looking back.

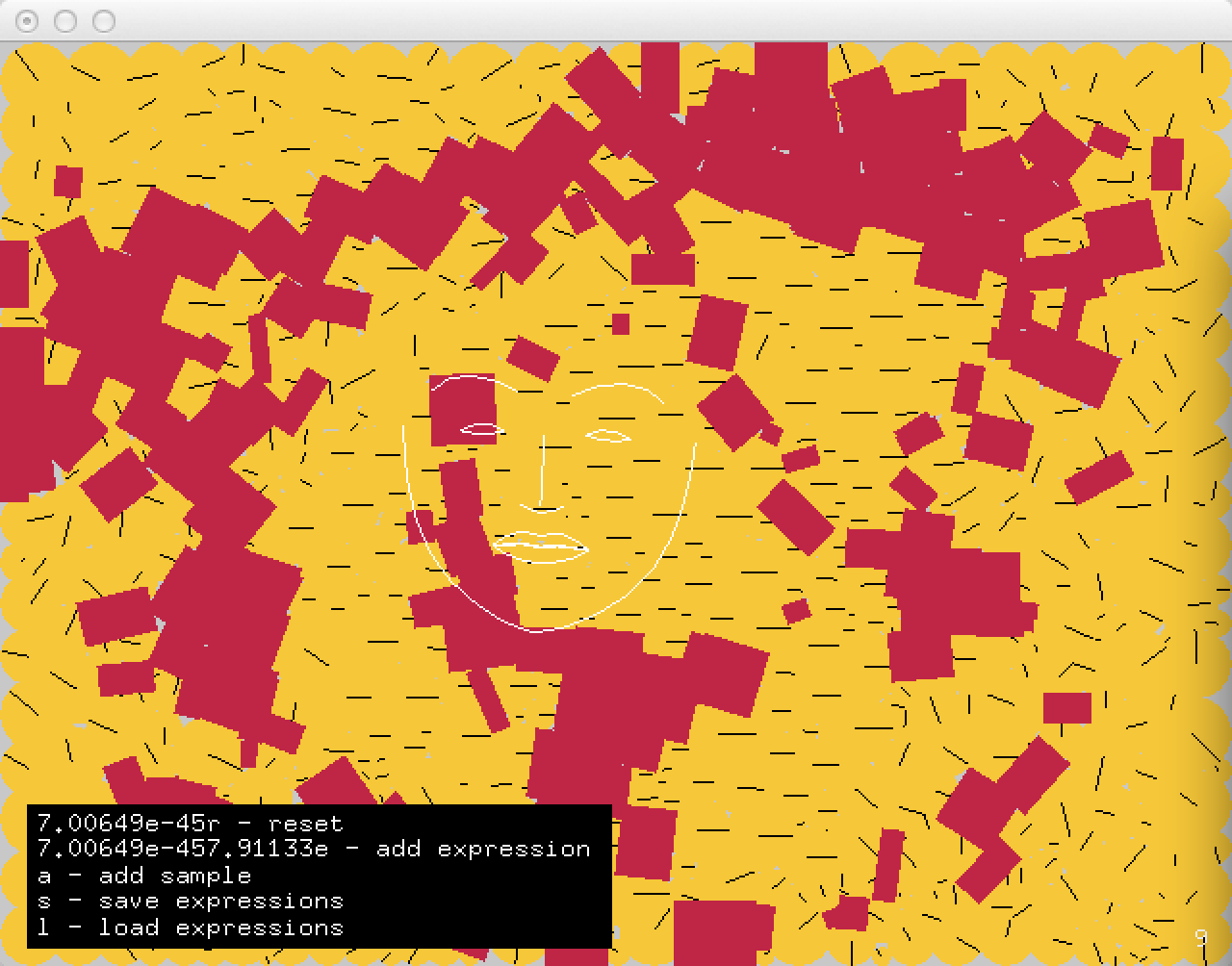

The Treachery of Sanctuary by Chris Milk

I’m probably being really obvious about my tastes, posting about this installation right after gushing about how much I loved the spider dress. Even though at face value the idea of giving someone’s silhouette a pair of wings seems (I don’t know, adolescent and clichéd, maybe?), there’s something elegant, bleak and haunting about this piece. Think Hitchcock, or Poe. I’m less drawn to the final panel (the one where the participant gets wings) than I am to the first two. I really enjoy Milk’s commentary (see video) about how inspiration can feel like disintegrating and taking flight. And there’s something powerful about watching what appears to be your own shadow, something constant and predictable, if not immutable, fragment and disappear before your eyes. I think the trick gets its power from the way Milk has built the exhibit to fool the audience into thinking they are under bright light, rather than under scrutiny from digital imaging technology.

All in all, the story Milk tells about the creative process works, and puts the ‘wing-granting’ in the final panel into a context where it makes poetic sense, instead of just turning people into archangels because ‘it looks cool’. (It does.)

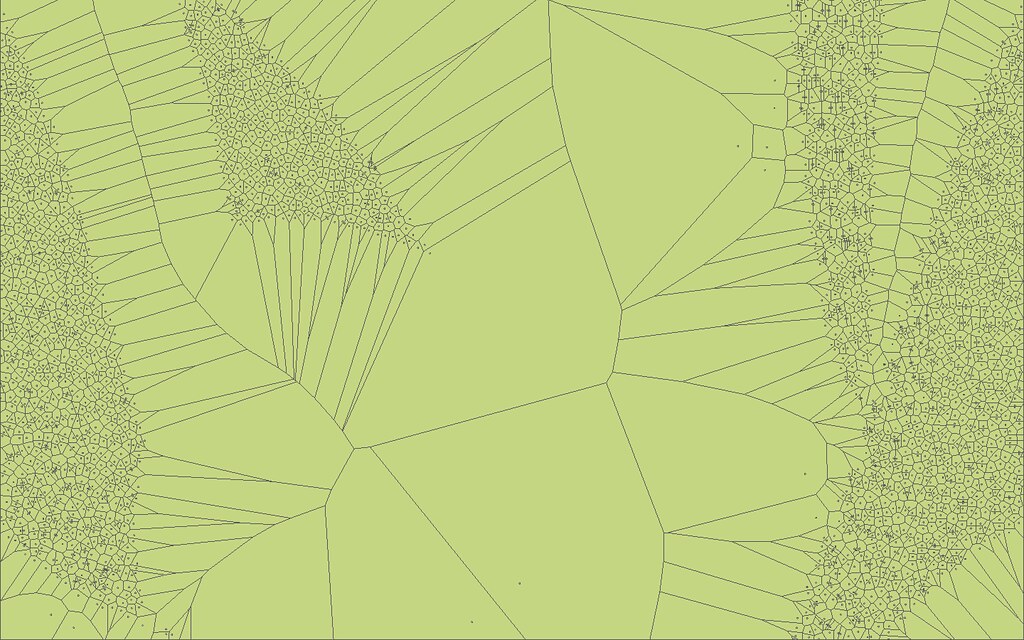

Sentence Tree from Andy Wallace on Vimeo.

This is a quirky little experiment that organizes sentences you type into trees, based on punctuation and basic grammar structures. The creator, Andy Wallace, described the piece as ‘a grammar exercise gone wrong’, but I wonder if the opposite isn’t true. Even as a lover of words, I find it hard to think of something more boring than diagramming sentences the traditional way: teacher at a whiteboard drawing chicken-scratch while students sleep. I like the potential of this program to inject some life into language and linguistics. Think of the possibilities: color-code subject, object, verb, participle, gerund. Make subordinate clauses into subordinate branches. Structure paragraphs by transitional phrases, evidence, quotations, counterarguments. Brainstorm entire novels or essays instead of single sentences! This feels like the tip of an iceberg.