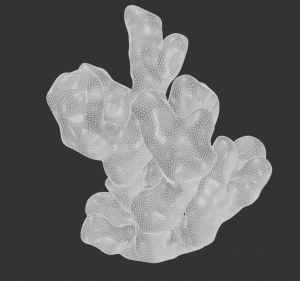

Looking to 3D print and then investment cast.

Still got a lot of work to do. Turns out flocking is still hard.

Also, modeling. Repetitive modeling. More hours are needed.

I still think I have some hurdles. I’ve gotten a lot done, though.

Here’s what I have: clouds, basic flocking, some models for fish and the ship, and a couple miscellaneous other items and scripts. I’ve been focusing mostly on new modeling techniques, researching clouds and shaders, and animation.

Here’s what I still need to do: more complex flocking, finished models, a few spawning scripts, and more complex animations. Mostly bulk work I think; I need to put in the hours and it’ll get done.

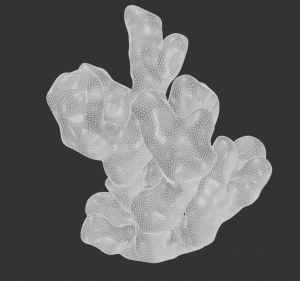

Most of the last week’s work on GraphLambda has been spent porting from the Processing environment to Eclipse and implementing various under-the-hood optimizations. Accordingly, the visible parts of the application look very similar to the last incarnation. The main exception here is the text-editing panel, which now provides an indication that it is active, supports cursor-based insertion editing, and turns red when an invalid string is entered.

The biggest remaining issue is distributing the various elements of the drawing so that the logical flow of the expression is clear. Once this is done, the drawing interface must be implemented, including a method of selection highlighting.

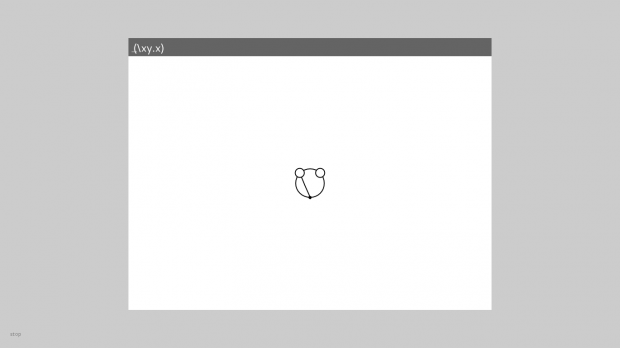

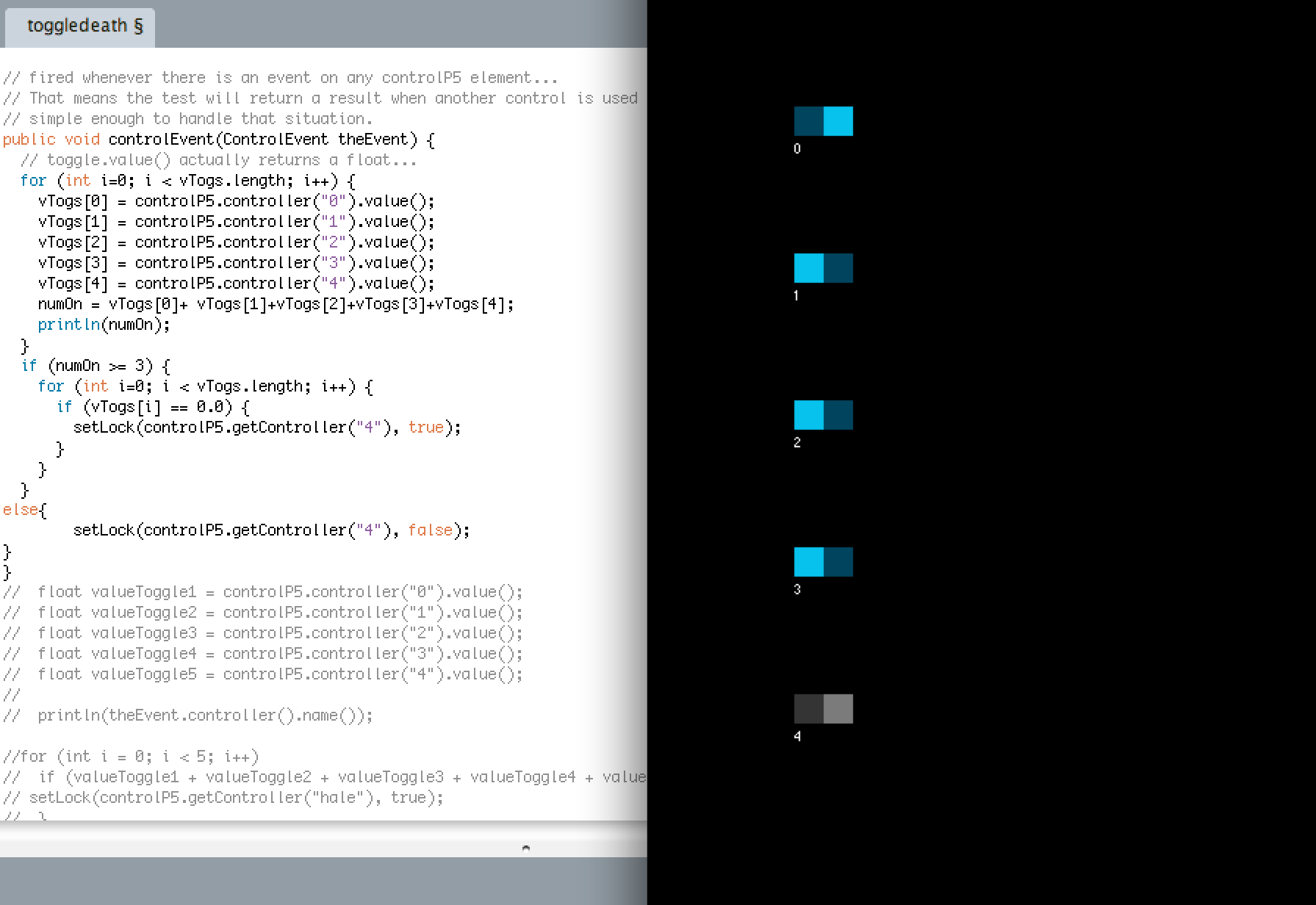

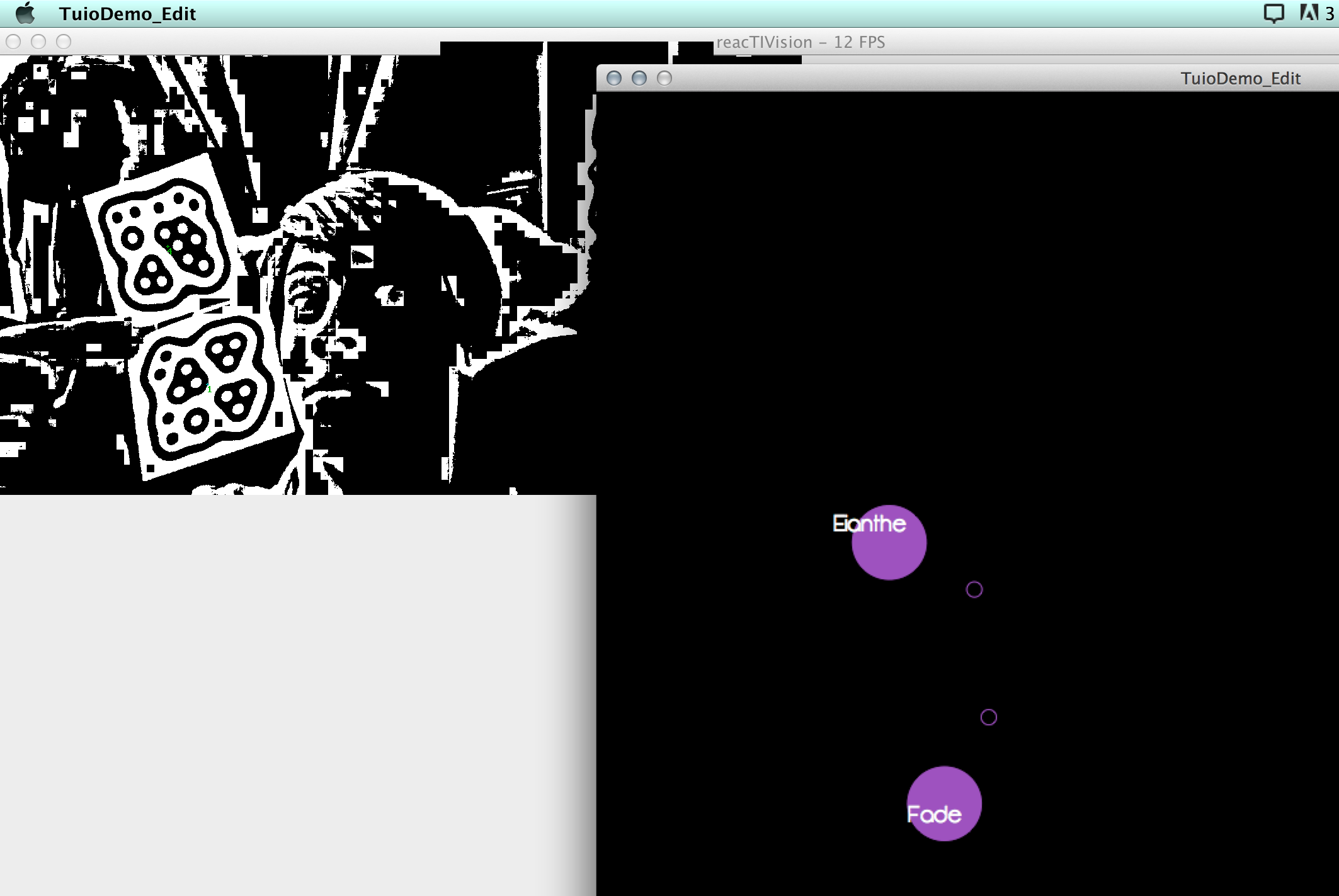

Since you last encountered me, I’ve been working on figuring out TUIO and ControlP5, and have a few basic things working, but I still haven’t gotten two basic issues out of the way: 1) How do I make a construct that reliably holds the object IDs for the active fiducials? 2) How do I prevent more than 3 characters from being selected at the same time? Both of these seem like they should be simple, solved problems, but I can’t find anything useful on the internet.
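One possible way to approach both questions, sketched in plain Java so it can sit alongside a Processing sketch: keep the active IDs in an insertion-ordered set, and treat only the first three still on the table as selected. The class name `ActiveFiducials` and its methods are hypothetical, not part of TUIO or ControlP5. The assumption is that `add` and `remove` would be called from the TUIO client's `addTuioObject` and `removeTuioObject` callbacks with the object's symbol ID. Promoting the next waiting fiducial when a selected one leaves is a design choice, not a requirement.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

/** Hypothetical helper: tracks fiducial IDs on the table and caps the selection. */
class ActiveFiducials {
    private final int maxSelected;
    // LinkedHashSet keeps arrival order and silently ignores duplicate IDs.
    private final Set<Integer> active = new LinkedHashSet<>();

    ActiveFiducials(int maxSelected) {
        this.maxSelected = maxSelected;
    }

    /** Call when a fiducial appears. Returns true if it counts as selected. */
    synchronized boolean add(int id) {
        active.add(id);
        return isSelected(id);
    }

    /** Call when a fiducial is lifted off the table. */
    synchronized void remove(int id) {
        active.remove(id);
    }

    /** The first maxSelected IDs still on the table, in arrival order. */
    synchronized List<Integer> selected() {
        List<Integer> out = new ArrayList<>();
        for (int id : active) {
            if (out.size() == maxSelected) {
                break;
            }
            out.add(id);
        }
        return out;
    }

    synchronized boolean isSelected(int id) {
        return selected().contains(id);
    }
}
```

The methods are synchronized on the assumption that the TUIO callbacks may arrive on a different thread than the draw loop. With `new ActiveFiducials(3)`, a fourth fiducial is still remembered but stays unselected until one of the first three is removed.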

In any case, check out some awesome screenshots from my recent tinkering!

There are now many Kinect and computer vision systems. However, these systems offer a very virtual experience. I would like to give their users something that feels more real. There are already some feedback devices for Kinect/CV systems, such as the Phantom haptic device. Here is a video:

But I think it is a little limiting for users: they must hold a pen to feel things in the virtual world. So I am thinking of making the feedback more natural. The basic idea is a wearable device on the back of the hand that gives you some feedback when you push against something. It may not stop your movement. It may just tell you: hey, there’s something in front of you. I’m thinking of using a motor to drive the feedback and a Kinect to detect people’s movement. It would start as a simple haptic add-on for the Kinect, and later maybe become a more complicated exoskeleton, which Golan told me about.

See Kyna’s post.

Here’s another post on my capstone project, and hopefully this time it will give a better idea of what I am trying to accomplish.

So I am planning on laser-cutting a map of Beijing on acrylic. Size-wise, right now I am thinking about 12 in x 24 in because that’s the biggest size a laser cutter can cut in one piece. I can also laser cut the map in separate pieces and attach them together. I got the map from www.openstreetmap.org, and found all the main tourist attractions that I visited during my trip. I am going to use Illustrator to do a vector drawing, and laser cut it on opaque black acrylic. I am hoping to make a very detailed map, but that depends on how well I can do the vector drawing and on the accuracy of the laser cutter.

Here are a couple of maps I got from www.openstreetmap.org with tourist places marked. I will combine them to make one big map.

This is how I am going to draw out the map in Illustrator:

Since I have never used a laser cutter before, I didn’t know that it needs hairline strokes, not filled shapes, to be able to cut. I am planning on having this cut to see how accurate the map will look, and whether acrylic is an appropriate material for me to use. (Or should I just use paper? Would it burn the paper? I guess I have to test it out.)

I found this project after researching how to laser cut a map, and I hope to make a map as accurate as this. We’ll see how close I can get.

After the map is cut, I am thinking about either projecting it on a screen or having someone interact with it on a table or something. Since the map is going to be rather big, I only want to project a smaller segment of it at a time, either 3 by 6 inches or 3 by 3 inches.

You can interact with it by moving the map around physically to see different segments. And that’s where the interaction part comes into play. I really like this idea because I get to integrate the hardware and the software together to make a data visualization piece. I will somehow track which part of the map is being projected, and connect it with the data on different places in Beijing (that’s the main technical part I have to figure out). You can click on the tourist attractions located in the segment being projected. Once a location is clicked, details, photos, and information about that specific place will come up. It will also be a way for me to document the trip and for other people to learn about this city.
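As a rough illustration of the lookup step only (not the tracking itself), here is a minimal Java sketch. It assumes the tracker already reports which rectangle of the map is being projected, and that each attraction is stored with a position in inches from the map’s top-left corner. `MapWindow`, `Place`, and the click radius are all hypothetical names chosen for this example.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical model of the segment of the map currently being projected. */
class MapWindow {
    /** A marked attraction, positioned in inches from the map's top-left corner. */
    static class Place {
        final String name;
        final double x;
        final double y;

        Place(String name, double x, double y) {
            this.name = name;
            this.x = x;
            this.y = y;
        }
    }

    final double left;
    final double top;
    final double width;
    final double height;

    MapWindow(double left, double top, double width, double height) {
        this.left = left;
        this.top = top;
        this.width = width;
        this.height = height;
    }

    /** True if the place falls inside the projected segment. */
    boolean contains(Place p) {
        return p.x >= left && p.x < left + width
            && p.y >= top && p.y < top + height;
    }

    /** All places inside the projected segment. */
    List<Place> visible(List<Place> all) {
        List<Place> out = new ArrayList<>();
        for (Place p : all) {
            if (contains(p)) {
                out.add(p);
            }
        }
        return out;
    }

    /** Nearest visible place within radius of a click (map inches), or null. */
    Place pick(List<Place> all, double clickX, double clickY, double radius) {
        Place best = null;
        double bestDist = radius;
        for (Place p : visible(all)) {
            double d = Math.hypot(p.x - clickX, p.y - clickY);
            if (d <= bestDist) {
                best = p;
                bestDist = d;
            }
        }
        return best;
    }
}
```

For a 3 by 3 inch segment at the top-left of the map, `new MapWindow(0, 0, 3, 3).pick(places, x, y, 0.25)` would return the attraction nearest the click, and the details and photos for that place could then be looked up by its name.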

1. ExR3 by Kyle McDonald and Elliot Woods

ExR3 is an installation involving a number of mirrors that reflect and refract geometric shapes found on the various walls of the room. As users move through the space, they explore and discover the shapes and the interrelation between them. This project is really interesting because the placement of the mirrors and the shapes was carefully calculated using computer vision, reminding me of the way an architect would plan out the experience of a space.

2. IllumiRoom by Microsoft

This is a really interesting project that allows the experience of a movie or video game to expand beyond the boundaries of a television screen and interact with the other objects in the room in a number of ways. The system uses a Kinect to gauge the room and find the location and outlines of objects within it. It definitely changes the experience of both the space and the game or movie being enjoyed, but I would like to see some more interesting augmentations. The ones shown in the videos are somewhat predictable, and I think that there is much more potential in this new system.

3. Smart Light by Google Creative Lab

This project is a series of explorations involving projecting (literally projecting) digital functionality onto analog objects in the real world. It is inspired by the idea of extending the knowledge base of the web outside of the computer and into the everyday world. I find this idea intriguing, but it raises a few questions that I hope will be explored in the future. Firstly, the documentation gives no indication of what is making the projection, and I’m wondering how plausible it is to take this technology out of the lab and into the everyday world. If that is not possible, the project is somewhat limited and does not address the question as well as it could. Secondly, in the second documentation video, they are using objects that seem to have been built for the sole purpose of these experiments. I prefer the ideas presented in the first video, which suggest using this technology in conjunction with everyday objects.