The world’s foremost computational poet is probably Allison Parrish. Here is an astoundingly good lecture of hers from 2015; let’s watch a few minutes of this video, from 1:30 to 6:00, where she discusses how she creates programs to explore literature, making robot probes to bring back surprises from unknown poetic territories:

Seeing Like a Computer

Here’s a video by Timo Arnall, “Robot Readable World”, which is made entirely of poetically repurposed demonstration videos from computer-vision research laboratories:

In related work, artist Trevor Paglen collaborated with the Kronos Quartet on Sight Machine (2018; video), a performance in which the musicians were analyzed by (projected) computer vision systems. “Sight Machine’s premise is simple and strange. The Kronos Quartet performs on stage, and Paglen runs a series of computer vision algorithms on the live video of this performance; behind the musicians, he then projects a video that these algorithms generate. Paglen is an artist best known for his photography of spy satellites, military drones, and secret intelligence facilities, and in the past couple of years, he has begun exploring the social ramifications of artificial intelligence.”

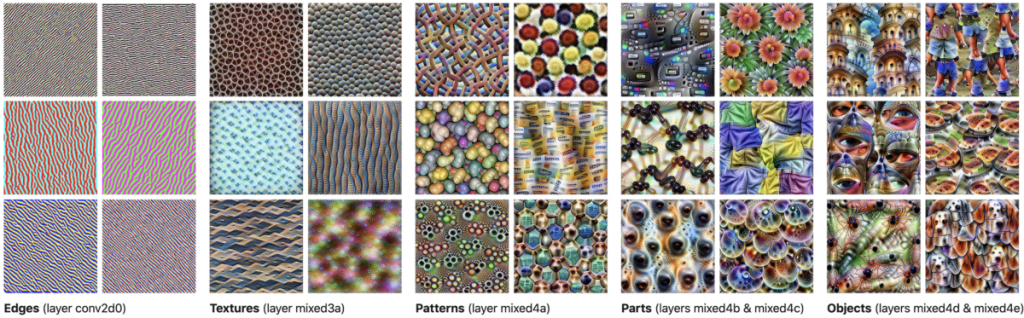

Neural networks build up understandings of images, beginning with simple visual phenomena (edges, spots), then textures, patterns, constituent parts (like wheels or noses), and objects (like cars or dogs). To understand more, this is a great article: “Feature Visualization: How neural networks build up their understanding of images”, by Chris Olah, Alexander Mordvintsev, and Ludwig Schubert.

Here’s Jason Yosinski’s video, Understanding Neural Networks Through Deep Visualization:

“DeepDream”, by Alex Mordvintsev, is a kind of iterative feedback algorithm in which a network whose neurons detect specific things (say, dogs) is asked: what small changes would need to be made to regions within this image, so that any part that already resembled something you recognized (like a dog) looked even more like a dog? Then those changes are made, and the process repeats.
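The feedback loop described above can be sketched in a few lines. This is only a toy, under loud assumptions: the "image" is a 1-D list of brightness values, and the "neuron" is a hand-written three-tap kernel (a hypothetical bright-spot detector) rather than a layer of a trained network. The real DeepDream runs the same kind of gradient ascent on the activations of a deep convolutional network.

```python
def activations(image, kernel):
    """Slide a tiny feature detector over a 1-D image.
    The ReLU (max with 0) keeps only the places that already match."""
    k = len(kernel)
    return [max(0.0, sum(kernel[i] * image[j + i] for i in range(k)))
            for j in range(len(image) - k + 1)]


def dream_step(image, kernel, step=0.1):
    """One round of feedback: nudge pixels so existing matches get stronger.
    The gradient of 0.5 * sum(activation^2) with respect to each pixel is
    the sum of (activation * kernel weight) over the windows covering it."""
    acts = activations(image, kernel)
    grad = [0.0] * len(image)
    for j, a in enumerate(acts):
        for i, w in enumerate(kernel):
            grad[j + i] += a * w
    scale = max(abs(g) for g in grad) or 1.0  # normalize the step size
    return [x + step * g / scale for x, g in zip(image, grad)]


def deep_dream(image, kernel, steps=20, step=0.1):
    """Repeat the small change many times (gradient ascent on the input)."""
    for _ in range(steps):
        image = dream_step(image, kernel, step)
    return image


# Hypothetical detector: responds to a bright spot with darker neighbors.
kernel = [-1.0, 2.0, -1.0]
# A faint spot at index 2; the rest of the image is nearly blank.
image = [0.0, 0.1, 0.6, 0.1, 0.0, 0.0, 0.0, 0.0]
dreamed = deep_dream(image, kernel)
```

Because each pixel's nudge is proportional to how strongly the detector already fired there, the faint spot is exaggerated while the blank regions start out with nothing to amplify.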

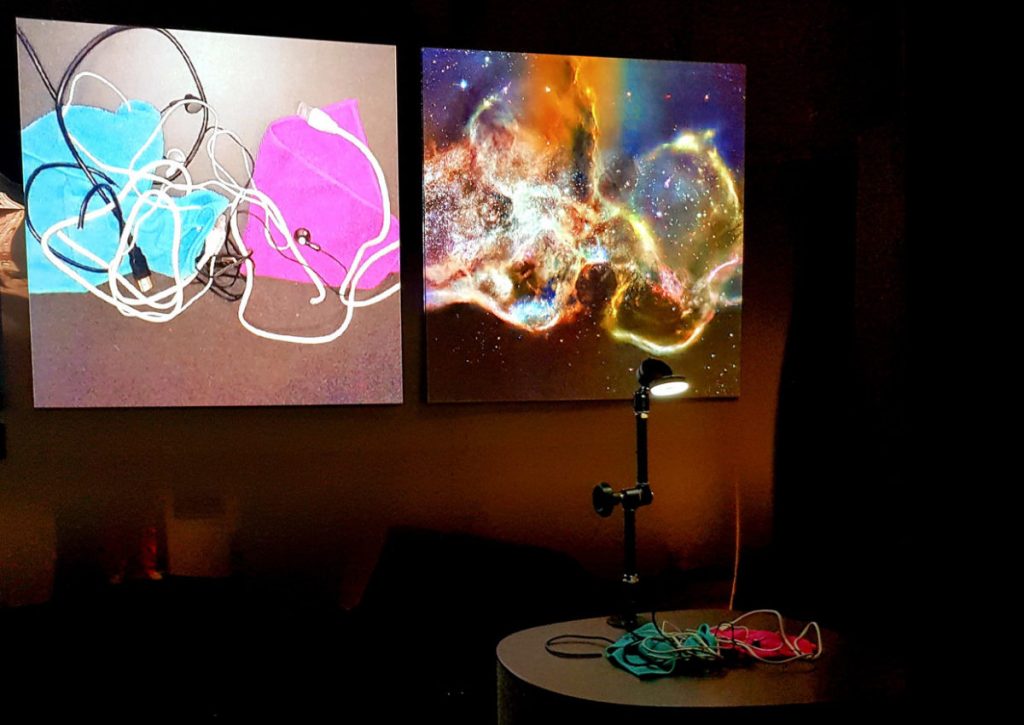

Detecting pornography is a major challenge for Internet companies. Artist Tom White has been working to invert the efforts of these organizations, by creating innocuous images which are designed to fool machine vision systems, in order to better understand how computers see. Below is work from his 2018 series, Synthetic Abstractions:

Tom White’s Synthetic Abstractions series consists of seemingly innocent images, generated from simple lines and shapes, which trick algorithms into classifying them as something else. Above is an arrangement of 10 lines which six widely-used classifiers perceive as a hammerhead shark, or an iron. Below is a silkscreen print, “Mustard Dream”, which White explains is flagged as “Explicit Nudity” by Amazon Web Services, “Racy” by Google SafeSearch, and “NSFW” by Yahoo. White’s work is “art by AI, for AI”, which helps us see the world through the eyes of a machine. He writes: “My artwork investigates the Algorithmic Gaze: how machines see, know, and articulate the world. As machine perception becomes more pervasive in our daily lives, the world as seen by computers becomes our dominant reality.” This print literally cannot be shown on the internet; taking a selfie in his gallery may get you banned from Instagram.

Style Transfer, Pix2Pix, & Related Methods

(Image: Alex Mordvintsev, 2019)

You may already be aware of “neural style transfer”, introduced in 2015 by Leon Gatys, Alexander Ecker, and Matthias Bethge at the University of Tübingen in Germany. Neural style transfer is an optimization technique that takes two images, a content image and a style reference image (such as an artwork by a famous painter), and blends them together so that the output image looks like the content image, but “painted” in the style of the style reference image. It is like saying, “I want more like (the details of) this, please, but resembling (the overall structure of) that.”

This is implemented by optimizing the output image to match the content statistics of the content image and the style statistics of the style reference image.
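To make “content statistics” and “style statistics” a bit more concrete, here is a minimal sketch of the two loss terms. It assumes that the feature maps have already been extracted from some pretrained network (here they are just small hand-typed lists), that style is summarized by a Gram matrix of channel correlations as in the original 2015 paper, and that the weights `content_weight` and `style_weight` are arbitrary illustrative values.

```python
def gram_matrix(features):
    """features: a list of C channels, each a flat list of N activations.
    Returns the C x C table of channel-to-channel correlations, averaged
    over positions. Where things are in the image is thrown away."""
    n = len(features[0])
    return [[sum(a * b for a, b in zip(ci, cj)) / n for cj in features]
            for ci in features]


def mean_squared_difference(rows_a, rows_b):
    diffs = [(a - b) ** 2
             for ra, rb in zip(rows_a, rows_b)
             for a, b in zip(ra, rb)]
    return sum(diffs) / len(diffs)


def content_loss(output_feats, content_feats):
    """Compare activations position by position (layout matters)."""
    return mean_squared_difference(output_feats, content_feats)


def style_loss(output_feats, style_feats):
    """Compare Gram matrices (only texture statistics matter)."""
    return mean_squared_difference(gram_matrix(output_feats),
                                   gram_matrix(style_feats))


def total_loss(output_feats, content_feats, style_feats,
               content_weight=1.0, style_weight=10.0):
    """The quantity an optimizer would minimize by editing the output image."""
    return (content_weight * content_loss(output_feats, content_feats)
            + style_weight * style_loss(output_feats, style_feats))


# Toy feature maps: 2 channels, 4 spatial positions each.
feats = [[1.0, 0.0, 2.0, 0.0],
         [0.0, 3.0, 0.0, 1.0]]
# The same activations with the positions rearranged.
shuffled = [[0.0, 2.0, 0.0, 1.0],
            [3.0, 0.0, 1.0, 0.0]]
```

Rearranging the positions changes the content loss but leaves the style loss at zero, which is roughly why a style image lends its textures and palette without lending its composition.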

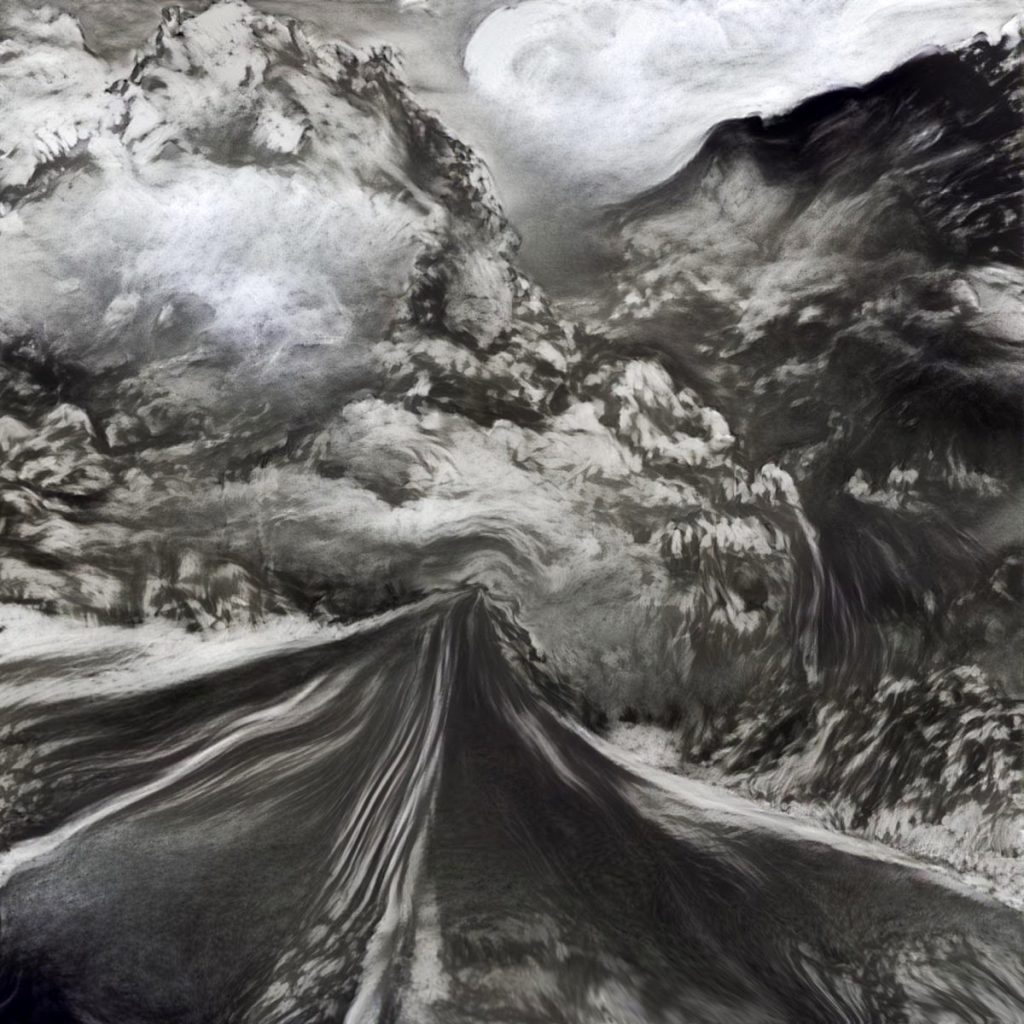

Various new media artists are now using style transfer code, and they’re not using it to make more Starry Night clones. Here’s a project by artist Anne Spalter, who has processed a photo of a highway with Style Transfer from a charcoal drawing:

Some particularly stunning work has been made by French new-media artist Lulu Ixix, who uses custom textures for style-transferred video artworks. She was originally a special effects designer:

More lovely work in the realm of style transfer is done by Dr. Nettrice R. Gaskins, a digital artist and professor of new-media art at Lesley University. Her recent works use a combination of traditional generative techniques and neural algorithms to explore what she terms “techno-vernacular creativity”.

Style transfer has also been used by artists in the context of interactive installations. Memo Akten’s Learning to See (2017; video) uses style transfer techniques to reinterpret imagery on a table from an overhead webcam:

A related interactive project is the whimsical Fingerplay (2018) by Mario Klingemann, which uses a model trained on images of portrait paintings:

Fingerplay (Take 1) pic.twitter.com/oyys84Al0e

— Mario Klingemann 🇺🇦 (@quasimondo) April 7, 2018

Conceptually related to style transfer is the Pix2Pix algorithm by Isola et al. In this way of working, the artist working with neural networks does not specify the rules; instead, she specifies pairs of inputs and outputs, and allows the network to learn the rules that characterize the transformation — whatever those rules may be. For example, a network might study the relationship between:

- color and grayscale versions of an image

- sharp and blurry versions of an image

- day and night versions of a scene

- satellite-photos and cartographic maps of terrain

- labeled versions and unlabeled versions of a photo

And then—remarkably—these networks can run these rules backwards: They can realistically colorize black-and-white images, or produce sharp, high-resolution images from low-resolution ones. Where they need to invent information to do this, they do so using inferences derived from thousands or millions of real examples.
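The idea of specifying pairs instead of rules can be shown with a deliberately tiny stand-in. This sketch is not Pix2Pix (which is a conditional GAN with a much larger network); it swaps in a three-weight linear model, and the `hidden_rule` values are used only to manufacture example pairs. The learner never sees the rule, only color pixels paired with their gray values.

```python
import random


def train_on_pairs(pairs, epochs=200, lr=0.1):
    """Fit weights w so that w . input is close to output, using nothing
    but example (input, output) pairs and plain gradient descent."""
    w = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        for x, y in pairs:
            pred = sum(wi * xi for wi, xi in zip(w, x))
            err = pred - y
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
    return w


random.seed(0)
# The "unknown" transformation. It is used only to generate training
# pairs; train_on_pairs() is never told what it is.
hidden_rule = (0.299, 0.587, 0.114)

pairs = []
for _ in range(50):
    rgb = [random.random() for _ in range(3)]
    gray = sum(h * c for h, c in zip(hidden_rule, rgb))
    pairs.append((rgb, gray))

learned = train_on_pairs(pairs)
```

After training, `learned` ends up very close to `hidden_rule`. Going in the other direction (gray to color) is the harder and more interesting case, since the missing information has to be inferred from many examples, which is where a large network earns its keep.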

For example, here is an image colorization I made last night with the im2go service.

Here are some other AI-powered tools you might find interesting:

Cleanup.pictures helps you remove things:

Deep Nostalgia reanimates faces in photos:

MetaDemo Sketch Animator – brings drawings to life

Waifu2x image super-resolution

Karen X. Cheng has been doing killer projects combining multiple tools, such as DAIN:

1/ Using AI to smooth my footage 😌 with @jperldev. We’re using DAIN, an AI technique that smoothly interpolates frames. #stopmotion #artificialintelligence pic.twitter.com/UxHfc3U6j8

— Karen X. Cheng (@karenxcheng) July 27, 2022

1/ Using AI to generate fashion

After a bunch of experimentation I finally got DALL-E to work for video by combining it with a few other AI tools

See below for my workflow –#dalle2 #dalle #AIart #ArtificialIntelligence #digitalfashion #virtualfashion pic.twitter.com/x3zP3fIp4G

— Karen X. Cheng (@karenxcheng) August 30, 2022

Latent Space Navigation

When a GAN is trained, it learns a “latent space”: a high-dimensional mathematical representation (often hundreds or thousands of dimensions) of how a given subject varies. Directions in this space correspond to the modes of variability in that dataset, some of which we have conventional names for. For example, for faces, these directions might encode visually salient continuums such as:

- looking left … looking right

- facing up … facing down

- smiling … frowning

- young … old

- “male” … “female”

- mouth open … mouth closed

- dark-skinned … light-skinned

- no facial hair … long beard
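Moving between two points in such a space is just arithmetic on lists of numbers. Here is a minimal sketch, assuming a 512-dimensional latent vector (an illustrative size) and plain linear interpolation. The generator itself is not included; in practice each vector in `frames` would be handed to a trained GAN to render one image.

```python
import random


def lerp(z0, z1, t):
    """Blend two latent vectors: t=0 gives z0, t=1 gives z1."""
    return [(1.0 - t) * a + t * b for a, b in zip(z0, z1)]


def latent_walk(z0, z1, num_frames):
    """Evenly spaced points on the straight line from z0 to z1."""
    return [lerp(z0, z1, i / (num_frames - 1)) for i in range(num_frames)]


random.seed(1)
LATENT_DIM = 512  # assumed size, for illustration only

# Two random points in the space (two different "faces", say).
z_start = [random.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]
z_end = [random.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]

# 30 in-between points: one per video frame of a morph.
frames = latent_walk(z_start, z_end, 30)
```

Some practitioners prefer spherical interpolation for Gaussian latent spaces; the straight line is used here only because it is the simplest thing that shows the idea.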

Some artists have created work which is centered on moving through this latent space. Here is a 2020 project by Mario Klingemann, in which he has linked some variables extracted from music analysis (such as amplitude), to various parameters in the StyleGAN2 latent space. Every frame in the video visualizes a different point in the latent space. Note that Klingemann has made artistic choices about these mappings, and it is possible to see uncanny or monstrous faces at extreme coordinates in this space: (play at slow speed)

It’s also possible to navigate these latent spaces interactively. Here’s Xoromancy (2019), an interactive installation by CMU students Aman Tiwari (Art) and Gray Crawford (Design), shown at the Frame Gallery:

The Xoromancy installation uses a LEAP sensor (which understands the structure of the visitors’ hands); the installation maps variables from the user’s hands to the latent space of the BigGAN network.
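A mapping of this kind, from a handful of live control values (hand measurements, audio amplitude) to a point in latent space, might be sketched as follows. This is not the code of either project; the function name, the `reach` parameter, and the idea of giving each control its own latent direction are all assumptions made for illustration.

```python
def controls_to_latent(controls, directions, origin, reach=2.0):
    """controls: live values, each normalized to the range 0..1.
    directions: one latent-space direction per control.
    A control at 0.5 leaves the point at the origin; 0 and 1 push it
    `reach` units backward or forward along that control's direction."""
    z = list(origin)
    for value, direction in zip(controls, directions):
        offset = (2.0 * value - 1.0) * reach
        z = [zi + offset * di for zi, di in zip(z, direction)]
    return z


# A toy 4-dimensional latent space with two controls, such as
# hand height and hand openness (hypothetical choices).
origin = [0.0, 0.0, 0.0, 0.0]
directions = [[1.0, 0.0, 0.0, 0.0],
              [0.0, 1.0, 0.0, 0.0]]

resting = controls_to_latent([0.5, 0.5], directions, origin)
extreme = controls_to_latent([0.0, 1.0], directions, origin)
```

Choosing which controls drive which directions, and how far they can reach, is where the artistic decisions come in; a large `reach` is one way to end up in the uncanny outskirts of the space.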

In a similar vein, here’s Anna Ridler’s Mosaic Virus (2019, video), a real-time video installation that shows a tulip blooming, whose appearance is governed by the trading price of Bitcoin. Ridler writes that “getting an AI to ‘imagine’ or ‘dream’ tulips echoes 17th century Dutch still life flower paintings which, despite their realism, are ‘botanical impossibilities’”.

We will watch 6 minutes of this presentation by Anna Ridler (0:48 – 7:22), where she discusses how GANs work, her tulip project, and some of the extremely problematic ways in which the images in datasets used to train GANs have been labeled.