phiaq – Final

Augmented Reality Sound and Objects

TWEET: Scan over household objects and hear the music the objects play

About the Final Project

This project builds on an earlier project of mine, the AR music cubes (shown at the bottom of this post), which played sounds. It also builds on my Augmented Photo Album project, where video and sound were overlaid on specific family moments. To iterate on those projects and make this one more interactive and interesting, I chose household products that contrast with each other and produce sounds that layer.

Each object is different from the others, and I thought the collection as a whole could also say something about the person who owns it. For this collection, I was imagining a newlywed, or a mom with kids. I think it is an interesting concept that you can look at an object objectively and it will give clues about the personality and role of its owner. Sometimes the objects may even reveal embarrassing secrets, such as the stool softener.

In terms of difficulties, I had trouble coming up with a collection of items and sounds that made sense and were interesting at the same time. I think the piece could be pushed further if I had embedded secrets and more surprises in the sounds. Technically, the build on the phone was a bit slow because I was detecting many objects at once, and the sounds didn't fade out the way I wanted them to. If I were to iterate, I would pay more attention to the narrative of these objects and experiment with more interesting sounds. I got stuck on the purpose of my AR object music, and I think I could have pushed the idea further.

I had more objects with me, but decided to cut them out because their image targets were hard to detect.

INDIVIDUAL SOUNDS FROM BBC SOUND LIBRARY

OBJECTS:

- Wedding Card

- Toy Dog and Piano

- Crayons

- Marbles

- Stool Softener

- Acne Cream

Process

AR MUSIC CUBES:

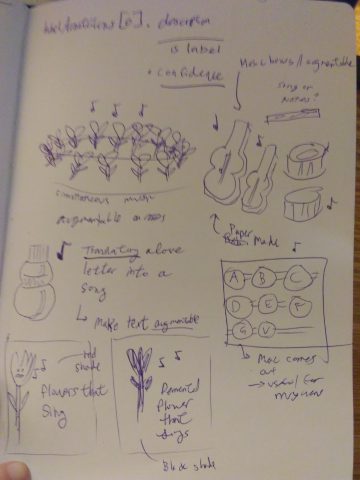

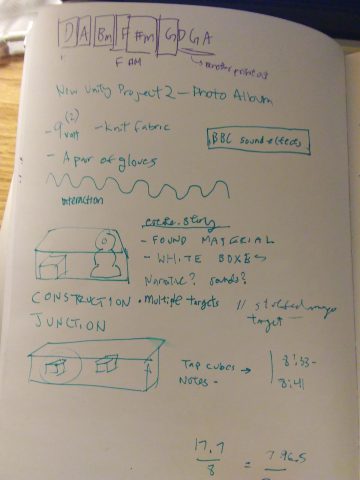

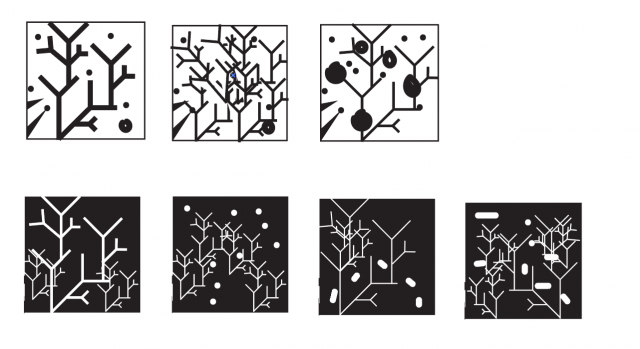

Before this project, I came up with the idea of linking sounds to physical music notes in origami boxes, as a way for the audience to create their own music (inspired by AR paper cubes). After talking to Golan, I agreed that the music notes might sound too similar and that I should look at more experimental sounds, since I can't say I'm an expert at music. I also agreed that the image targets could be more graphic. The final AR objects piece above builds on this idea, since it was a way for me to create music out of physical things.

I thought the AR music boxes were interesting because of the way the notes layered. I really wanted to push that in my final project, with layers of interesting sounds coming from seemingly "random objects."

SKETCHES:

For most of my final project, I thought the best way to detect the objects was with custom QR codes. I then realized the codes were too small to be detected reliably. Near the end, I finally realized that I just needed objects with logos that worked well as image targets, and as a result half the objects I had planned to augment were cut. Here are the QR codes I created but didn't use.

Acknowledgements

Thank you to Peter Sheehan for teaching me how to build to the phone, Josh Kery for helping me document the project and giving me conceptual advice, Alex Petrusca for helping me with the sound scripting, Golan Levin for giving me conceptual advice, and BBC sounds for the sound effects.