// Clair Sijing Sun

// Clock Project

// 60-212

// Sep 20, 2018

var prevSec;

var millisRolloverTime;

var frames = [];

var secondH ;

var counter = 0;

var weekdayNames = ["Sunday", "Monday", "Tuesday", "Wednesday",
"Thursday", "Friday", "Saturday"];

var monthNames = ['January', 'February', 'March', 'April', 'May', 'June', 'July', 'August', 'September', 'October', 'November', 'December'];

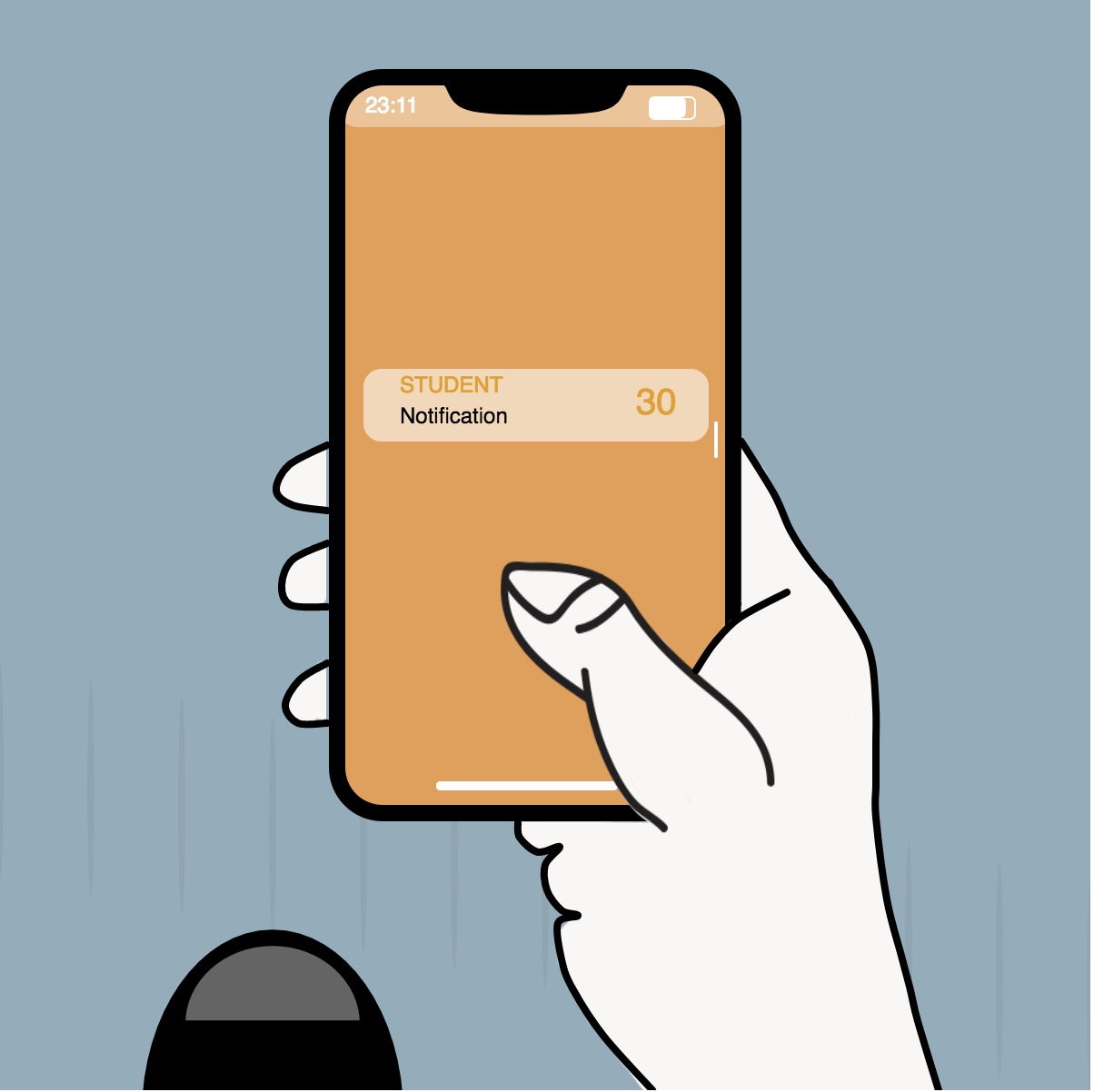

var listofApps = ['WEIBO', 'WECHAT', 'GROUPME', 'GMAIL', 'LINKEDIN', 'FACEBOOK', 'STUDENT', 'SOUNDCLOUD'];

var appL ;

var randomN ;

var batteryR ;

var batteryG ;

var batteryB ;

var batteryL = 25;

var backrockX = 50;

var backrockY = 0;

var barH = 72;

var handM1 = 383;

var handM2 = 369;

var finger = [];

var skin = [];

var feetH ;

var feetH2 ;

//--------------------------

function setup() {

createCanvas(600, 600);

millisRolloverTime = 0;

feetH = height;

feetH2 = height + 100;

}
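//--------------------------
// Illustrative sketch, not part of the original clock. It restates the
// rollover bookkeeping that draw() does with prevSec and millisRolloverTime
// as a small standalone helper. The names makeMillisTracker and update are
// hypothetical; in a p5.js sketch one would presumably pass second() and
// millis() into update() once per frame.
function makeMillisTracker() {
  var lastSec;        // second value seen on the previous call
  var rolloverAt = 0; // running-time reading when the second last changed
  return {
    // Returns whole milliseconds elapsed since the second last rolled over.
    update: function (sec, nowMs) {
      if (lastSec !== sec) {
        rolloverAt = nowMs;
      }
      lastSec = sec;
      return Math.floor(nowMs - rolloverAt);
    }
  };
}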

//--------------------------

function preload(){

frames.push(loadImage("https://i.imgur.com/6LcHHng.png"));

//frames.push(loadImage("https://i.imgur.com/od1uGbs.png"));

frames.push(loadImage("https://i.imgur.com/gHs88Af.png"));

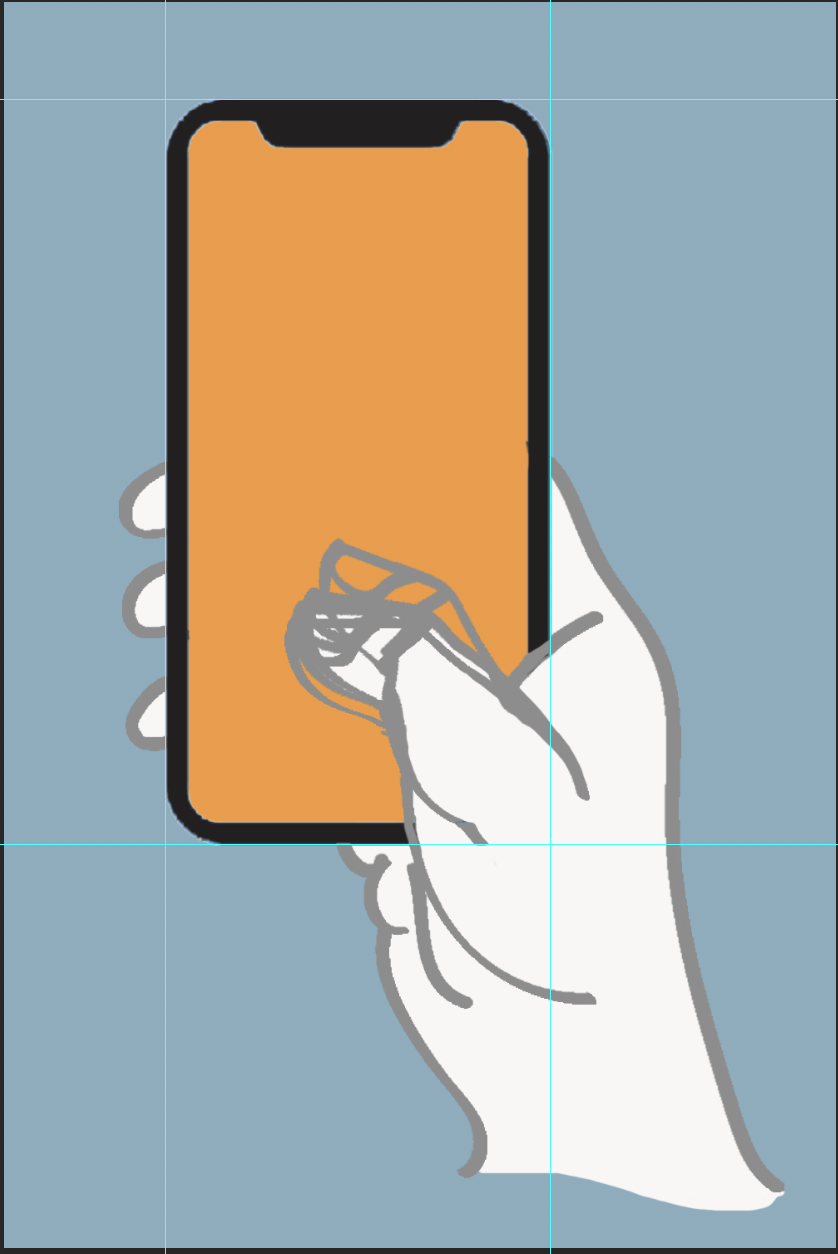

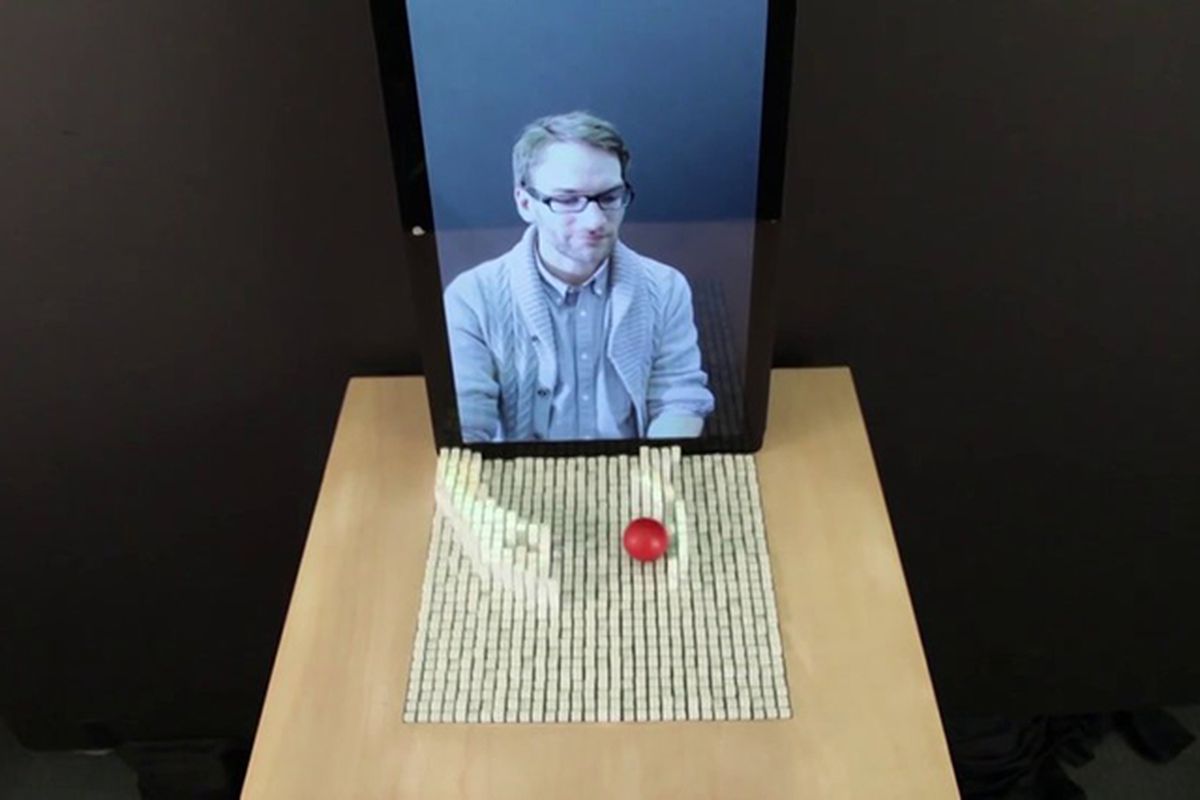

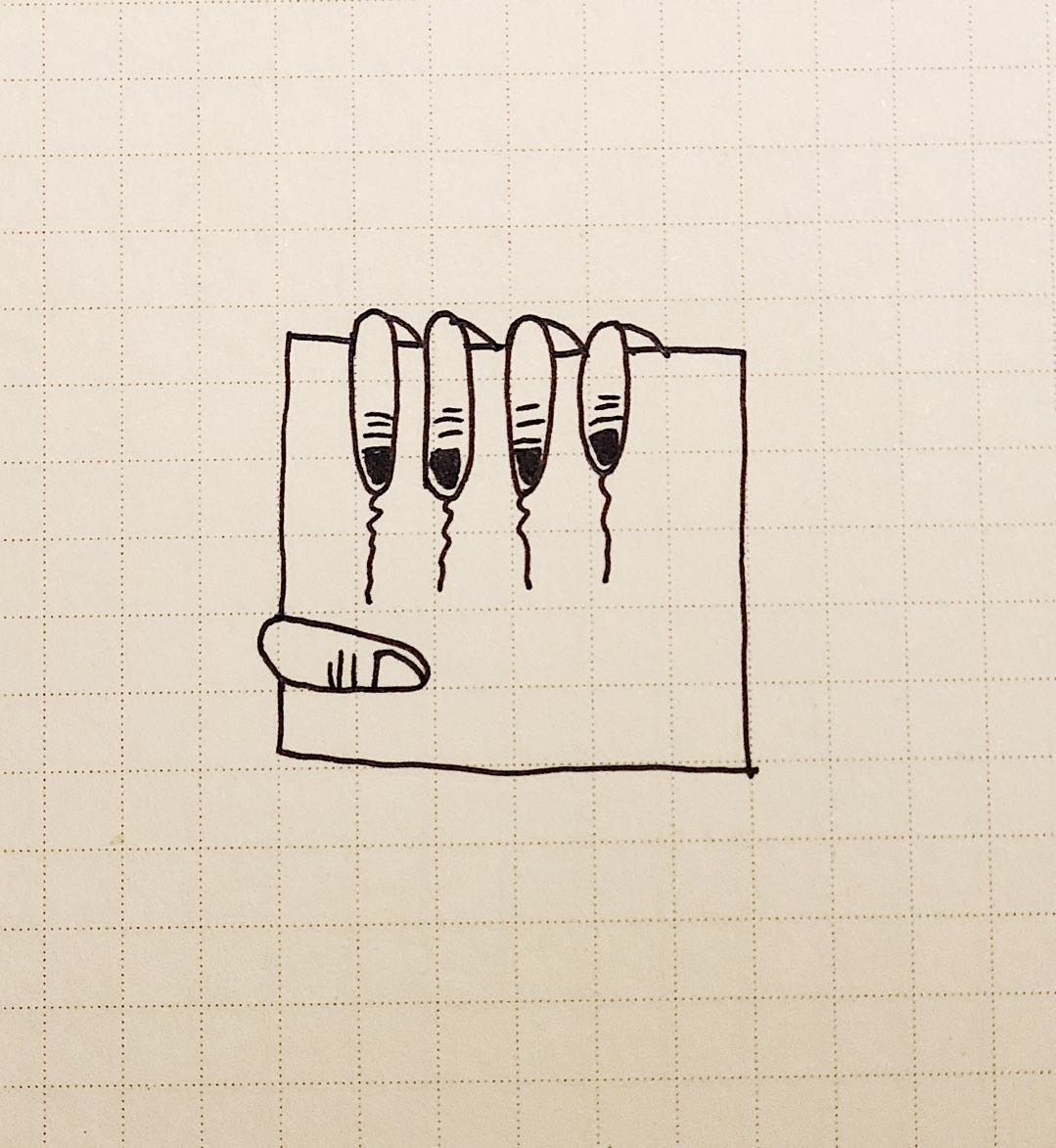

finger.push(loadImage("https://i.imgur.com/QfwFtyL.png"));

finger.push(loadImage("https://i.imgur.com/WFPnTt3.png"));

skin.push(loadImage("https://i.imgur.com/vXlzYE7.png"));

skin.push(loadImage("https://i.imgur.com/KFbFtYv.png"));

}
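//--------------------------
// Illustrative sketch, not part of the original clock. It shows one way
// to build a 12-hour time string like the one draw() assembles, assuming
// h is 0-23 and m, s are 0-59. The name formatClock12 is hypothetical.
// Midnight and noon are shown as 12, and noon counts as "pm".
function formatClock12(h, m, s) {
  // Zero-pad a value below 10 to two digits.
  var pad = function (n) { return (n < 10 ? "0" : "") + n; };
  var h12 = (h % 12 === 0) ? 12 : h % 12;
  return h12 + ":" + pad(m) + ":" + pad(s) + (h >= 12 ? "pm" : "am");
}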

//--------------------------

function draw() {

background(144, 173, 189);

push();

fill(0, 10);

if (backrockY <= height){

backrockY = backrockY + 1;

}else{

backrockY = 0;

}

for (var i = 0; i < 20; i++){
  ellipse(backrockX * i, backrockY+i*10, 4, 120);
}
pop();

appL = listofApps.length;

push();
fill(232,158,78);
rect(190,40,210,408, 20);
pop();

// Fetch the current time
var H = hour();
var Min = minute();
var S = second();
var D = day();
var M = month();
var week = new Date().getDay();

// Reckon the current millisecond,
// particularly if the second has rolled over.
// Note that this is more correct than using millis()%1000;
if (prevSec != S) {
  millisRolloverTime = millis();
}
prevSec = S;
var mils = floor(millis() - millisRolloverTime);

noStroke();
fill('black');

// Use the minute (Min) rather than the month (M), show 12 instead of 0
// at noon and midnight, and treat 12:xx as pm.
var currTimeString = "Time: " + ((H%12 === 0) ? 12 : H%12) + ":" + nf(Min,2) + ":" + nf(S,2) + ((H>=12) ? "pm":"am");

var hourBarWidth = map(H, 0, 23, 0, width);

var minuteBarWidth = map(Min, 0, 59, 0, width);

var secondBarWidth = map(S, 0, 59, 0, width);

var secondsWithFraction = S + (mils / 1000.0);

var secondsWithNoFraction = S;

var secondBarHeightChunky = map(secondsWithNoFraction, 0, 60, 0, width);

//for displaying the moving hand

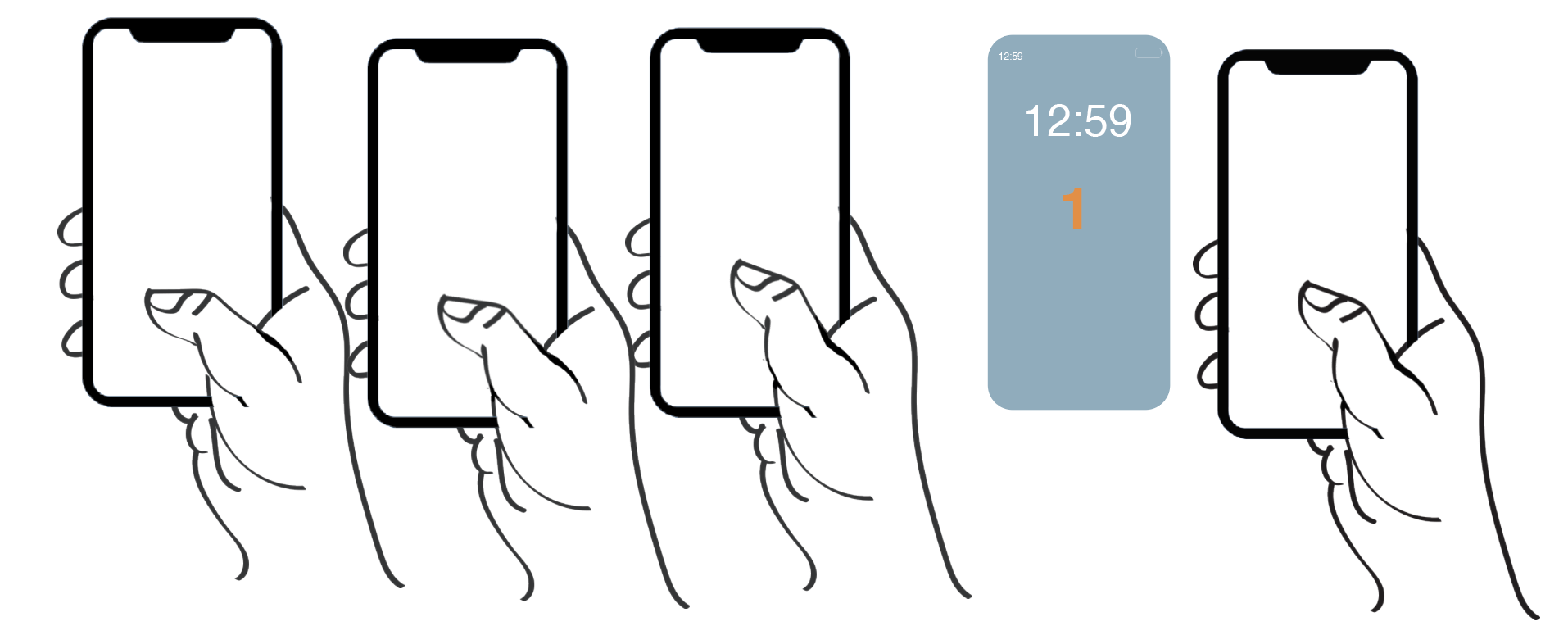

if (mils < 500){

counter = 0;

}else{

counter = 1;

}

//draw the feet

push();

fill(0);

strokeWeight(4);

stroke(0);

if (mils < 500){

feetH = map(mils, 0, 500, height+100, height);

feetH2= map(mils, 0, 500, height, height+100);

} else {

feetH = map(mils, 500, 1000, height, height+100);

feetH2 = map(mils, 500, 1000, height+100, height);

}

ellipse(width/4, feetH, 140, 200);

ellipse(width/4+300, feetH2, 140, 200);

fill(255, 100);

arc(width/4, feetH-50, 100, 90, PI, 2*PI, CHORD);

pop();

//draw the top bar

push();

fill(255, 100);

rect(185, 45, 220, 25, 20);

pop();

//controlling the movement of seconds

var secondBarHeightSmooth = height;

if (mils < 300){

secondBarHeightSmooth = map(mils, 0, 300, 420, 228);

}else if (mils < 700){

secondBarHeightSmooth = 228;

}else{

secondBarHeightSmooth = map(mils, 700, 1000, 228, 39);

}

//changing background for seconds

push();

textSize(20);

fill(255, 150);

if ((''+S).length == 1){

S = '0'+ S;

}

rect(width/3, secondBarHeightSmooth-25, 190, 40, 10);

fill(232,158,7);

text(S, 350, secondBarHeightSmooth);

textSize(12);

//if (S){

//randomN = random(S%8);

//}

text(listofApps[int(S%8)], 220, secondBarHeightSmooth-12);

fill(0);

text('Notification', 220, secondBarHeightSmooth+5);

pop();

fill(255);

barH = map(S, 0, 60, 72, 392);

rect(393, barH, 2, 20, 5);

//display of hour and minutes at the beginning

if (S < 1){

if (mils < 700){

secondH = 126;

}else{

secondH = map(mils, 700, 1000, 126, -65);

}

}else{

secondH = -10;

}

push();

textStyle(NORMAL);

textSize(40);

fill(255);

textFont('Helvetica');

if ((''+Min).length == 1){

Min = '0'+ Min;

}

text(H + ":" + Min, width/2.5, secondH);

textSize(12);

text(weekdayNames[week]+","+monthNames[M-1]+ " " + D, 224, secondH+(145-126));

pop();

//display of mins and hours on top left

push();

fill(255);

text(H+ ":" + Min, 201, 62);

pop();

//home bar

fill(255);

rect(240, height-170, 100, 5, 10);

//image(frames[1], 80, -20, 500, 700);

//image(frames[0], 80, -20, 500, 700);

push();

noFill();

stroke(0);

strokeWeight(9);

rect(185, 42, 218, 405, 25);

beginShape();

strokeWeight(1);

fill(0);

curveVertex(245, 47);

curveVertex(245, 47);

curveVertex(261, 61);

curveVertex(329, 61);

curveVertex(345, 47);

curveVertex(345, 47);

endShape();

pop();

image(skin[counter],100, -18, 430, 655);

image(finger[counter], 200, 220, 300, 300);

//image(finger[counter], 200, 220, 300, 300);

push();

noFill();

beginShape();

stroke(0);

strokeWeight(4);

//print(mouseX, mouseY); // debug aid for placing vertices; left off so the console is not flooded every frame

curveVertex(180, 245);

curveVertex(180, 245);

curveVertex(159, 256);

curveVertex(152, 270);

curveVertex(161, 278);

curveVertex(180, 281);

curveVertex(180, 281);

endShape();

beginShape();

curveVertex(180, 299);

curveVertex(180, 299);

curveVertex(161, 308);

curveVertex(155, 322);

curveVertex(160, 333);

curveVertex(180, 334);

curveVertex(180, 334);

endShape();

beginShape();

curveVertex(180, 365);

curveVertex(180, 365);

curveVertex(165, 374);

curveVertex(157, 388);

curveVertex(164, 398);

curveVertex(180, 398);

curveVertex(180, 398);

endShape();

beginShape();

curveVertex(408, 243);

curveVertex(408, 243);

curveVertex(426, 272);

curveVertex(435, 292);

curveVertex(446, 309);

curveVertex(457, 322);

curveVertex(457, 322);

curveVertex(474, 348);

curveVertex(480, 365);

curveVertex(482, 390);

curveVertex(482, 410);

curveVertex(482, 432);

curveVertex(482, 450);

curveVertex(488, 495);

curveVertex(496, 526);

curveVertex(501, 545);

curveVertex(505, 562);

curveVertex(518, 605);

curveVertex(518, 605);

endShape();

if (counter == 1 ){

handM1 = 383;

handM2 = 360;

}else{

handM1 = 383;

handM2 = 369;

}

beginShape();

curveVertex(433, 326);

curveVertex(433, 326);

curveVertex(407, 340);

curveVertex(393, 354);

curveVertex(handM1, handM2);

curveVertex(handM1, handM2);

endShape();

beginShape();

curveVertex(285, 453);

curveVertex(285, 453);

curveVertex(286, 461);

curveVertex(296, 469);

curveVertex(308, 466);

curveVertex(300, 476);

curveVertex(301, 490);

curveVertex(309, 501);

curveVertex(318, 504);

curveVertex(307, 508);

curveVertex(309, 528);

curveVertex(315, 544);

curveVertex(332, 570);

curveVertex(359, 600);

curveVertex(359, 600);

//curveVertex(handM1, handM2);

endShape();

pop();

//battery drawing

if (Min < 40){

batteryR = 255;

batteryG = 255;

batteryB = 255;

} else {

batteryR = 213;

batteryG = 73;

batteryB = 61;

}

batteryL = (60-Min)/60 * 25;

push();

fill(batteryR,batteryG,batteryB);

rect(width/2+57, 53, batteryL, 12, 4);

noFill();

stroke(255);

rect(width/2+57, 53, 25, 12, 3);

pop();

push();

fill(144, 173, 189);

rect(width/4,0, 400, 37);

pop();

}
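
//--------------------------
// Illustrative sketch, not part of the original clock. It isolates the
// three-phase motion that draw() gives the notification card within each
// second: slide in (0-300 ms), hold (300-700 ms), slide out (700-1000 ms).
// The names linearMap and notificationY are hypothetical. linearMap is a
// plain re-statement of linear range mapping so the helper can run
// without p5.js loaded.
function linearMap(v, inLo, inHi, outLo, outHi) {
  return outLo + (v - inLo) / (inHi - inLo) * (outHi - outLo);
}

// Returns the card's y position for a millisecond offset in [0, 1000].
function notificationY(mils) {
  if (mils < 300) {
    return linearMap(mils, 0, 300, 420, 228); // rising into view
  }
  if (mils < 700) {
    return 228; // resting at mid-screen
  }
  return linearMap(mils, 700, 1000, 228, 39); // leaving through the top
}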