Jonathan Ota + John Brieger — Final Project: Virtualized Reality

Virtual reality is the creation of an entirely digital world. Virtualized reality is the translation of the real world into a digital space. There, the real and virtual unify.

We have created an alternate reality in which participants explore their environment in third person. The physical environment is mapped by the Kinect and presented as an abstracted virtual environment. Forced to examine reality from a new perspective, participants must determine where the boundary lies between the perceived and the actual.

Project Overview

When a participant puts on the backpack and associated hardware, they are forced to view themselves in third person and reexamine their environments in new ways.

Virtualized Reality’s physical hardware is composed of:

- A handcrafted wooden backpack designed to hold a laptop, battery, scan converter, and a variety of cables.

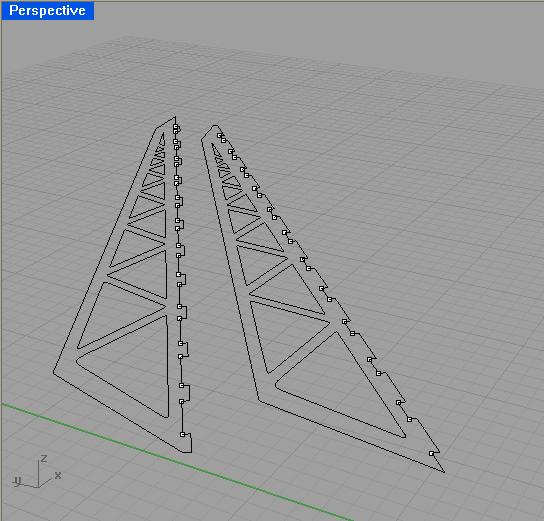

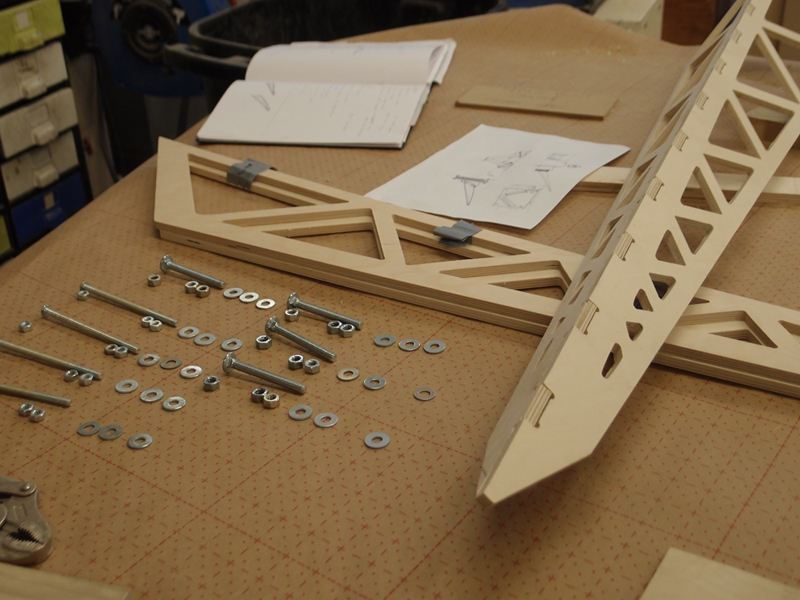

- A CNC-milled wooden truss, fastened to the back plate

- A Microsoft Xbox Kinect, modified to run on 12 V LiPo batteries

- A pair of i-glasses SVGA 3D personal display glasses

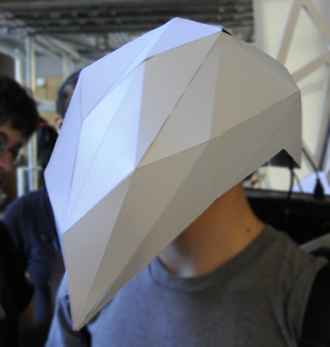

- A laser-cut Styrene helmet designed to fit over the glasses

- A laptop, carried in the backpack, running simple CV software to display Kinect data

Virtualized Reality, at its core, is about having a linear out-of-body experience.

First, participants put on the backpack and goggles, which mentally prepares them for a technological experience.

Then, they put on the helmet, a visual cue that separates the experience they have inside of the Virtualized Reality from the physical world.

At that point, we guide participants through the three stages of the experience we designed:

- Participants view themselves in 3rd person using the Kinect’s RGB camera. They begin to orient themselves to an out-of-body viewing experience, learning to navigate their environment in third person while retaining familiarity with normal perceptions of space.

- Participants view themselves in 3rd person using a combination of the Kinect’s depth sensing and the RGB camera, in which objects’ hues are brightened or darkened based on how far they are from the participant. This is also the first display that takes the depth of the environment into account.

- Participants view the point cloud constructed by the Kinect’s depth sensing in a shifting perspective that takes them not only outside of their own body, but actually rotates the perspective of the scene around them even if they remain stationary. This forces participants to navigate by orienting their body’s geometry to the geometry of space rather than standard visual navigation. While disorienting, the changing perspective takes participants even farther out of their own bodies.
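The second stage, where color is brightened or darkened by distance, can be sketched roughly as follows. This is a minimal illustration and not the software we ran: the function names, the near and far limits, the gain range, and the choice that closer objects appear brighter are all assumptions made for the example. It also assumes depth arrives in millimeters, with 0 meaning no reading.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]


def depth_gain(depth_mm: float,
               near_mm: float = 500.0,
               far_mm: float = 4000.0,
               min_gain: float = 0.2,
               max_gain: float = 1.0) -> float:
    """Map a depth reading to a brightness multiplier.

    Assumption: near objects are shown at full brightness and far
    objects are darkened. The limits are illustrative values only.
    """
    if depth_mm <= 0:
        # Assumption: a reading of 0 means the sensor saw nothing here.
        return min_gain
    t = (depth_mm - near_mm) / (far_mm - near_mm)
    t = min(1.0, max(0.0, t))  # clamp to the [near, far] range
    return max_gain - t * (max_gain - min_gain)


def shade_pixel(rgb: Pixel, depth_mm: float) -> Pixel:
    """Scale one RGB pixel by the gain for its depth."""
    gain = depth_gain(depth_mm)
    r, g, b = (min(255, round(c * gain)) for c in rgb)
    return (r, g, b)


def shade_frame(rgb_frame: Sequence[Sequence[Pixel]],
                depth_frame: Sequence[Sequence[float]]) -> List[List[Pixel]]:
    """Shade a whole frame; both frames must have the same dimensions."""
    return [
        [shade_pixel(p, d) for p, d in zip(rgb_row, depth_row)]
        for rgb_row, depth_row in zip(rgb_frame, depth_frame)
    ]
```

A real-time version would do the same arithmetic on whole image arrays at once rather than pixel by pixel, but the per-pixel form makes the mapping from distance to brightness easier to read.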

Design and Process Overview

We wanted to give our structure a strong, clean visual appeal, something that was both futuristic and functional. We ended up going with an aesthetic based on white Styrene and clean birch plywood.

Jonathan’s first iteration of the project had given us some good measurements as far as perspective goes, but we had a lot of work to do structurally and aesthetically.

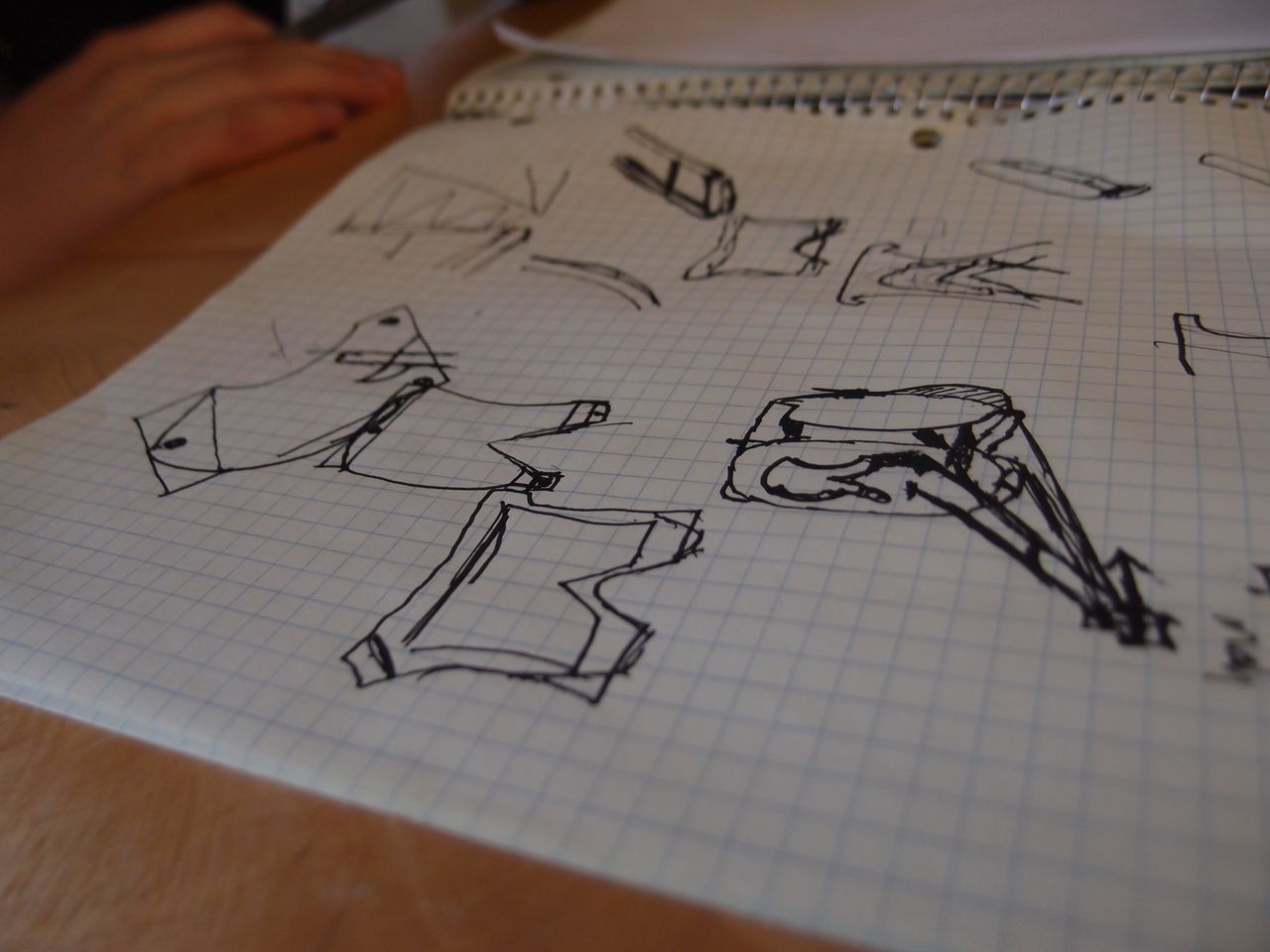

We started by sketching a variety of designs for helmets and backpack structures.

The Helmet:

We started with a few foam helmet models, one of which is pictured below:

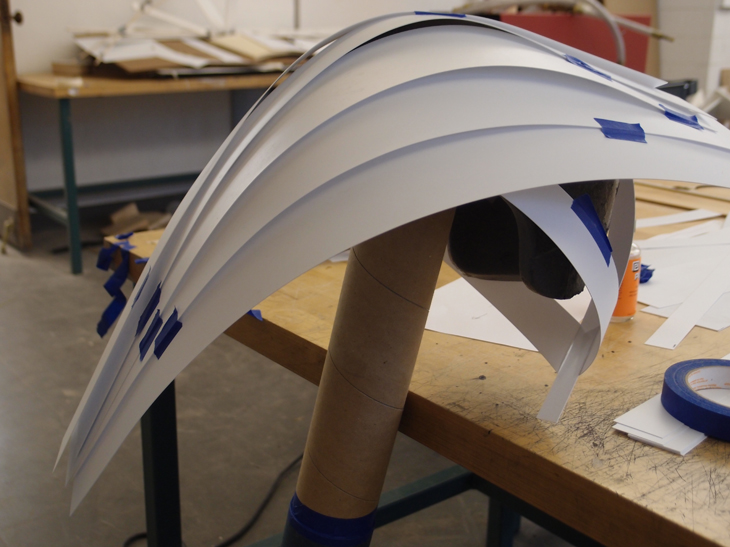

This was followed by a material exploration using layered strips of Styrene, but the results were a bit messy and didn’t hold form very well.

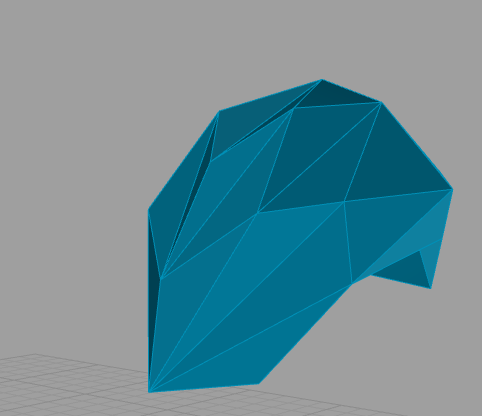

Then, Jonathan modeled a faceted design in RhinoCAD that we felt really evoked the overall look and feel of our project.

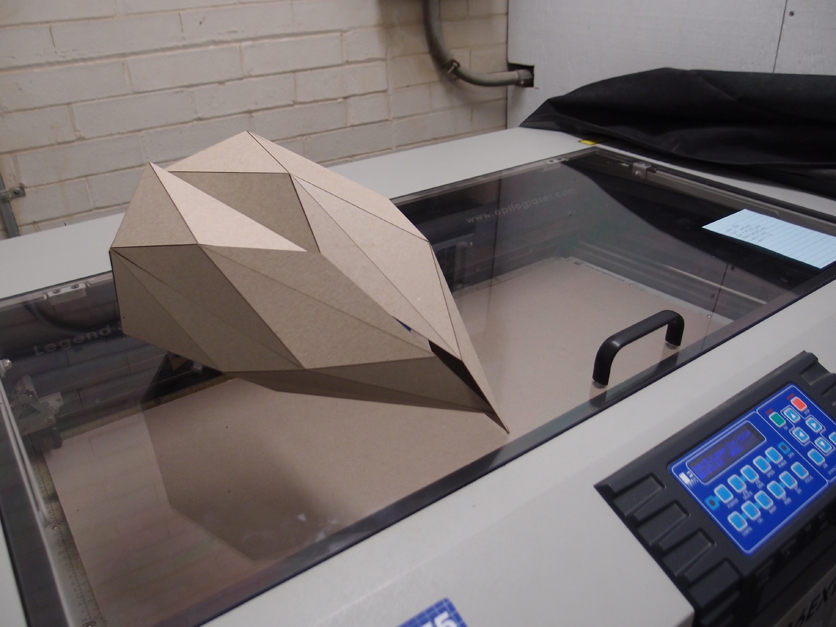

This was then laser cut into chipboard and reassembled into a 3D form:

Happy with this form, we recut it in white Styrene and bonded it together with acrylic cement.

The Truss:

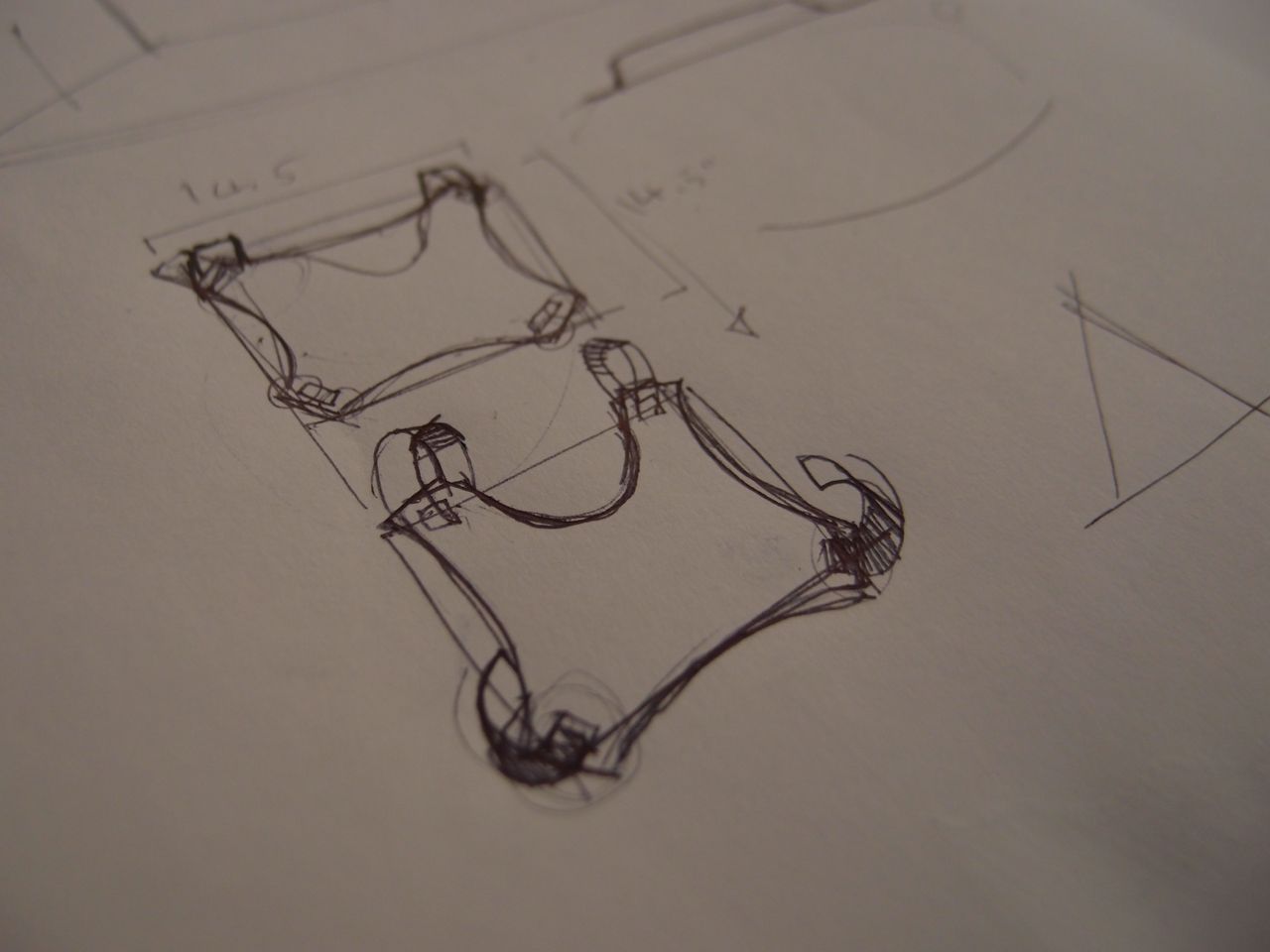

At the same time, we had also been designing the back truss that would hold the Kinect behind and above the participant’s head.

First we prototyped in Foamcore board:

Then we modeled them in RhinoCAD:

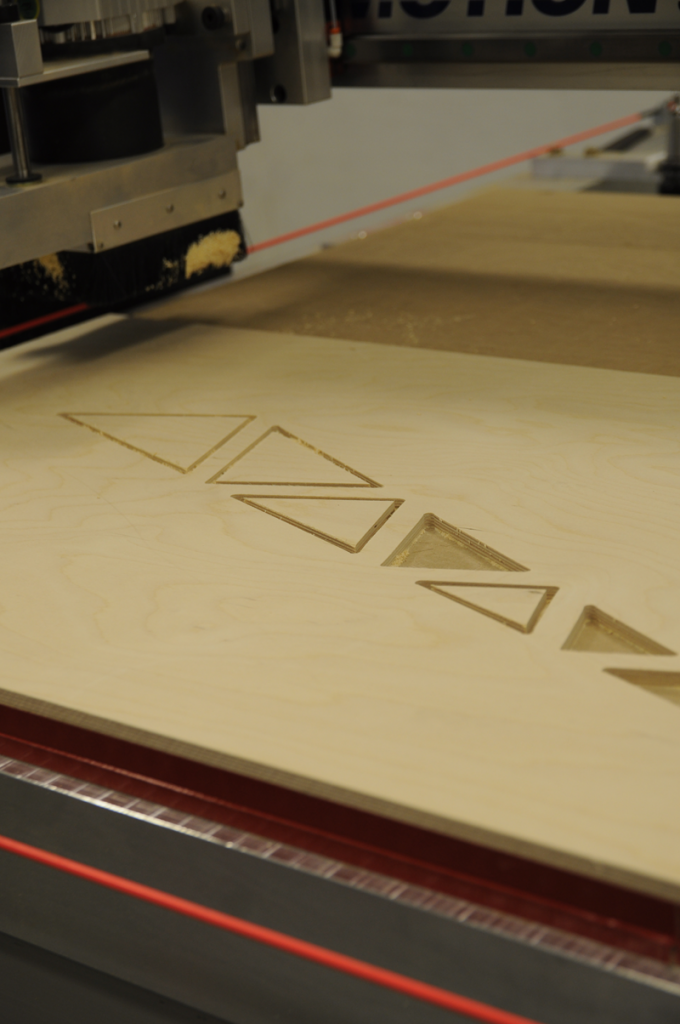

Finally, we used a CNC Mill to cut them out of birch plywood:

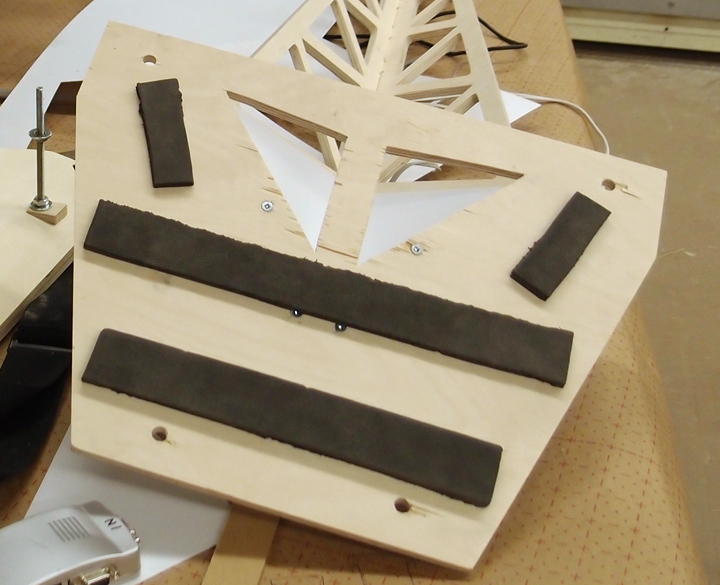

The Backpack

The curved rear shell of the backpack was made by laminating together thin sheets of wood with a resin-based epoxy, then vacuuming the wood to a mold as the epoxy cured.

We then cut a back plate and mounted the truss to it.

Jonathan tests out the backpack with a blue foam laptop:

Finally, we added a styrene pocket at the base of the truss to hold the scan converter, Kinect battery and voltage regulator, and extra cable length.

Expansion and Further Thoughts

While we had initially conceived the project around heavy algorithmic distortion of the participant’s 3D space, we found that it was both computationally infeasible (the awesomely powerful pointclouds.org library ran at about 4fps) and overly disorienting. The experience of viewing yourself in 3rd person is disorienting enough, and combined with the low resolution of the Kinect and the virtual reality goggles, distorting the environment loses its meaning. An interesting expansion for us would be real-time control over the suit, something like hand tracking to do the panning and tilting, or perhaps a wearable control in a glove or wristguard.
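To make the panning and tilting idea concrete, here is a small sketch of how a pair of control angles could re-orient a point cloud around the participant. It is only an illustration under stated assumptions: `rotate_view`, its parameters, and the axis conventions (y is up, pan turns about the vertical axis, tilt about the horizontal axis) are hypothetical, and where the angles come from (hand tracking, a glove, a wristguard) is left open.

```python
import math
from typing import Iterable, List, Tuple

Point = Tuple[float, float, float]


def rotate_view(points: Iterable[Point],
                pan_deg: float,
                tilt_deg: float,
                pivot: Point = (0.0, 0.0, 0.0)) -> List[Point]:
    """Rotate points about a pivot: pan first (y axis), then tilt (x axis).

    Assumption: y is up and the pivot is roughly the participant's
    position, so the scene appears to swing around them.
    """
    pan = math.radians(pan_deg)
    tilt = math.radians(tilt_deg)
    cp, sp = math.cos(pan), math.sin(pan)
    ct, st = math.cos(tilt), math.sin(tilt)
    px, py, pz = pivot

    rotated = []
    for x, y, z in points:
        # Work relative to the pivot so the rotation is centered on it.
        x, y, z = x - px, y - py, z - pz
        # Pan: rotation about the vertical (y) axis.
        x1 = cp * x + sp * z
        z1 = -sp * x + cp * z
        # Tilt: rotation about the horizontal (x) axis.
        y2 = ct * y - st * z1
        z2 = st * y + ct * z1
        rotated.append((x1 + px, y2 + py, z2 + pz))
    return rotated
```

Feeding a slowly increasing `pan_deg` into the same function each frame would also give a slowly orbiting view, similar in spirit to the shifting perspective of the third stage.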