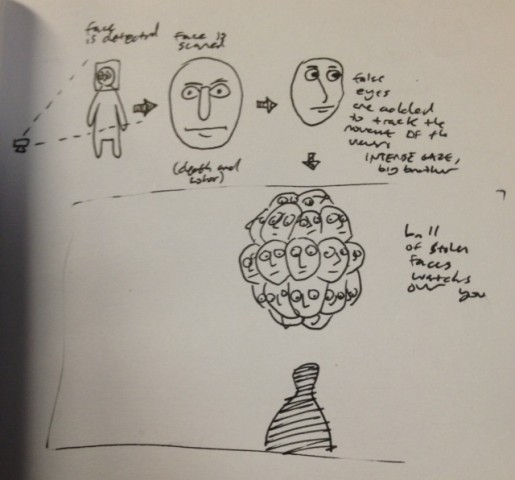

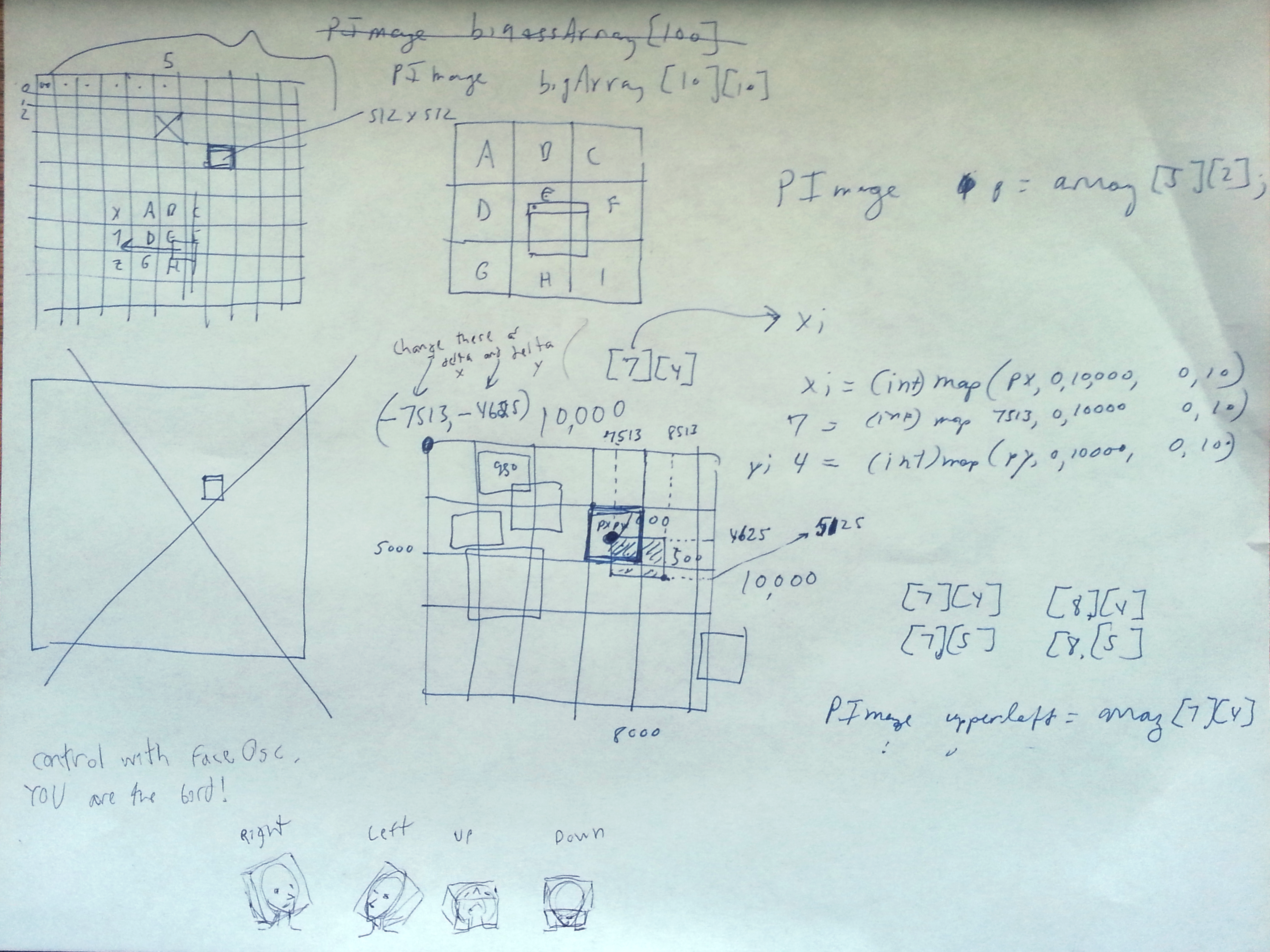

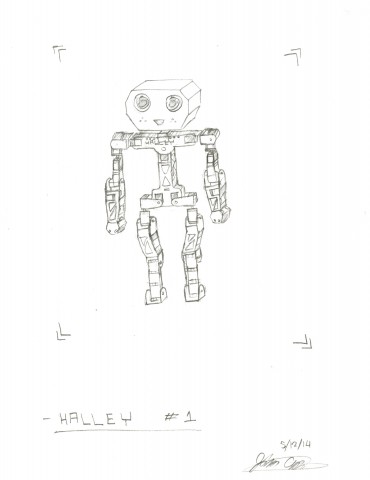

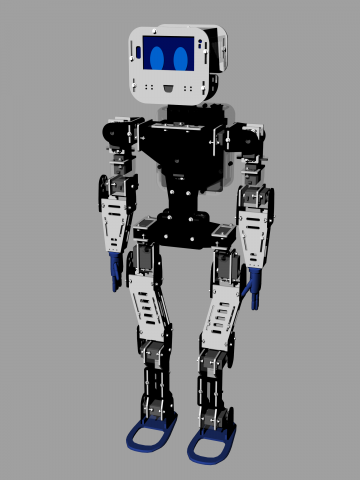

Final Project Sketches

There is a lot of great pseudoscience about what a person's body resistance or "frequency" can supposedly reveal or do, and how it can be changed. Some examples include:

http://www.templeofwellness.com/rife.html

http://www.lermanet.com/e-metershort.htm

http://www.highfrequencyhealing.org/

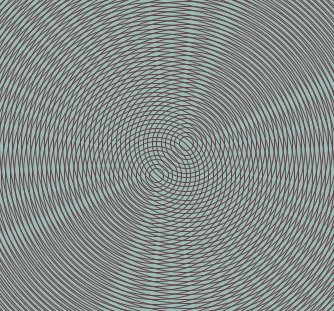

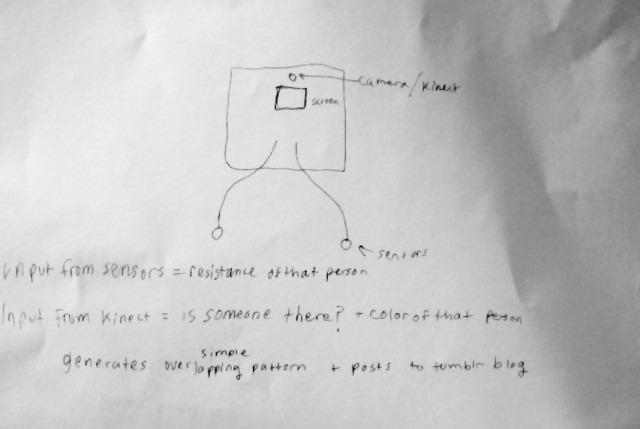

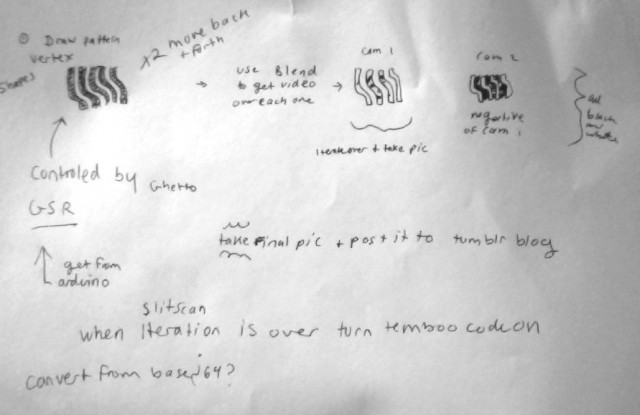

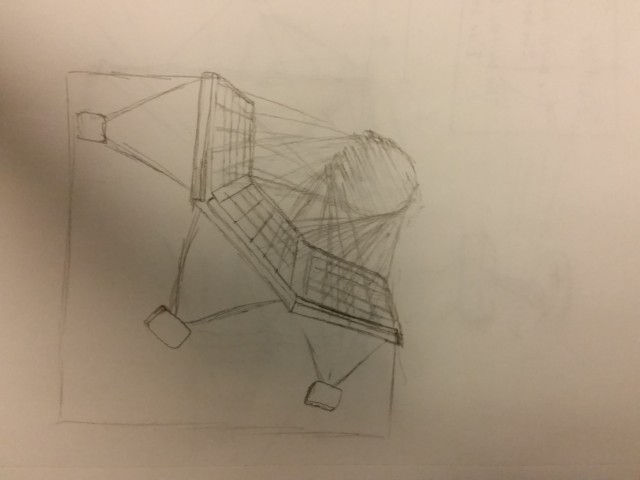

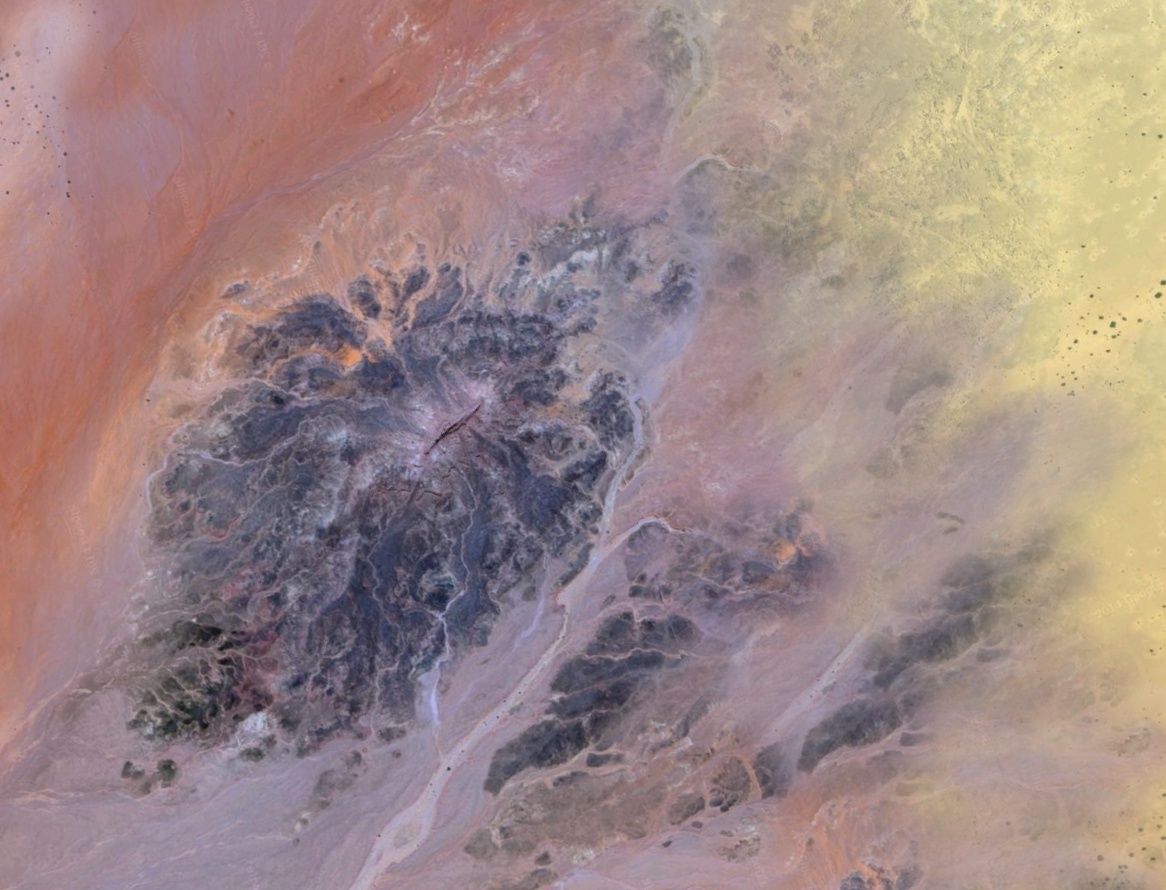

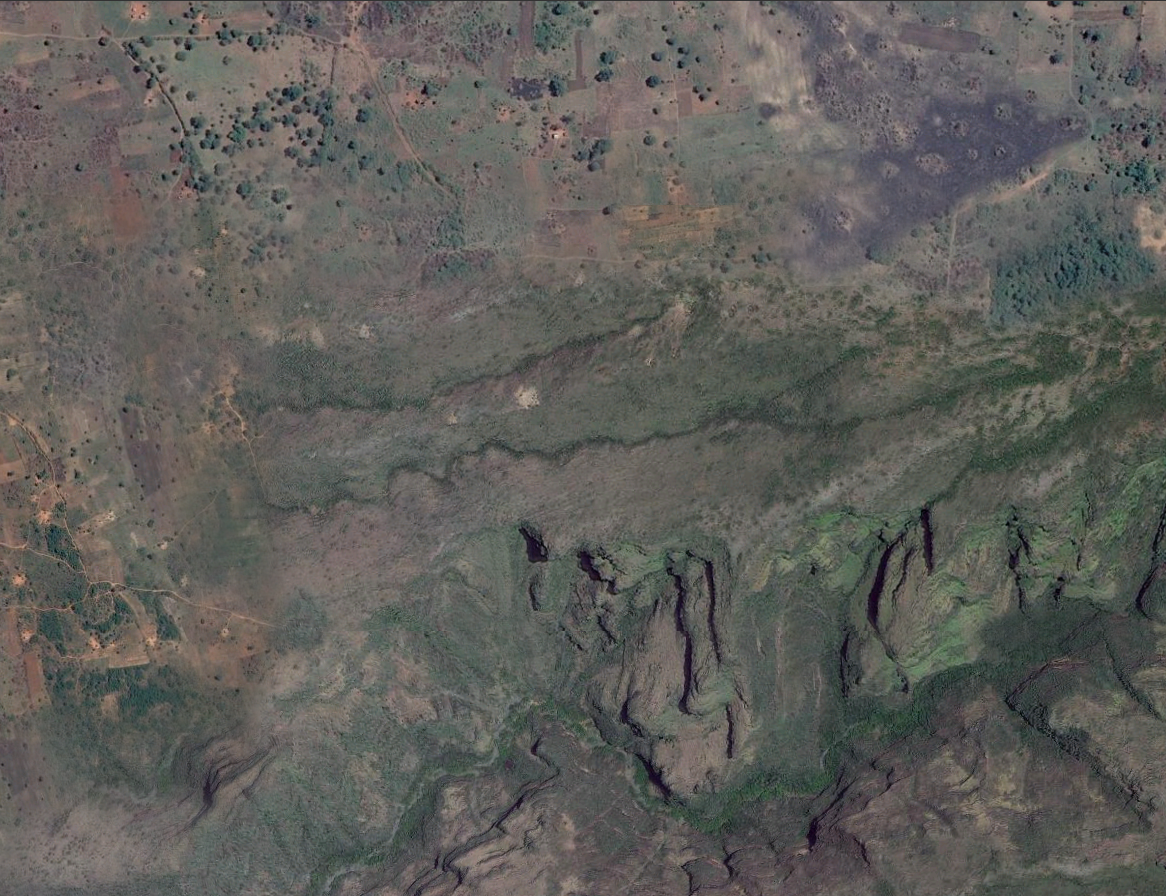

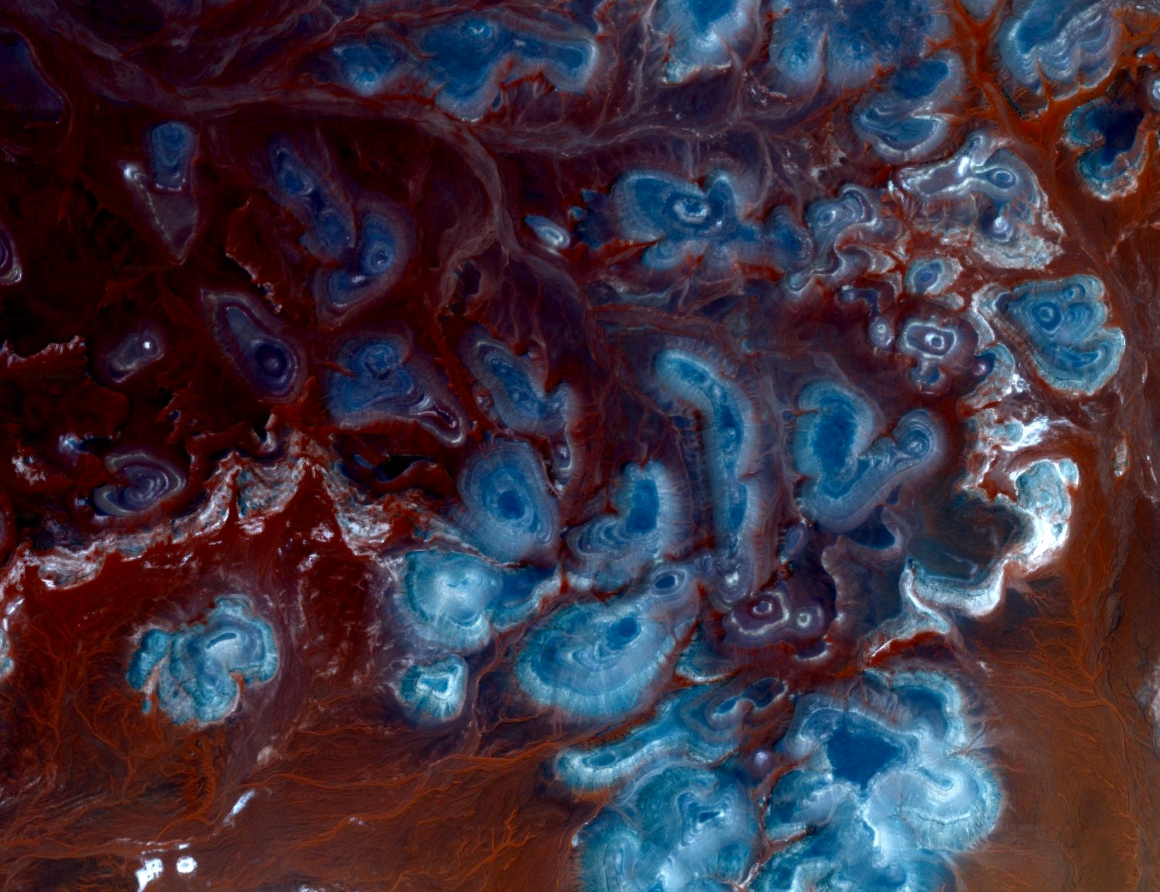

As with my automaton, I wanted to work with moiré patterns again. For now I simply want to make a visualization of the participant's body resistance. That's all I have right now, and it'll get more flesh to it as I work on it. Here's a prototype of what the sketch will generate:
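Below is a minimal sketch of how a resistance-driven moiré could be produced. It assumes body resistance arrives as a single number in ohms. Two sinusoidal line gratings are multiplied together, like overlaying two printed transparencies, and the angle between them is driven by the resistance. The `resistance_to_angle` mapping, its 10 kΩ to 1 MΩ range, and the 15° ceiling are hypothetical choices for illustration, not the project's actual parameters.

```python
import math


def resistance_to_angle(resistance_ohms, r_min=10_000.0, r_max=1_000_000.0,
                        max_angle_deg=15.0):
    """Hypothetical mapping: clamp resistance to [r_min, r_max], then map it
    on a log scale to a rotation angle between 0 and max_angle_deg degrees."""
    r = min(max(resistance_ohms, r_min), r_max)
    t = (math.log(r) - math.log(r_min)) / (math.log(r_max) - math.log(r_min))
    return t * max_angle_deg


def grating(x, y, period, angle_rad):
    """Intensity in [0, 1] of a sinusoidal line grating rotated by angle_rad."""
    u = x * math.cos(angle_rad) + y * math.sin(angle_rad)
    return 0.5 + 0.5 * math.cos(2 * math.pi * u / period)


def moire_image(width, height, resistance_ohms, period=6.0):
    """Grayscale image (rows of floats in [0, 1]): a fixed grating multiplied
    by a second grating rotated according to the (hypothetical) mapping."""
    angle = math.radians(resistance_to_angle(resistance_ohms))
    return [[grating(x, y, period, 0.0) * grating(x, y, period, angle)
             for x in range(width)]
            for y in range(height)]


if __name__ == "__main__":
    # Quick preview as ASCII art; a real sketch would draw pixels instead.
    shades = " .:-=+*#%@"
    for row in moire_image(60, 24, resistance_ohms=250_000):
        print("".join(shades[min(int(v * len(shades)), len(shades) - 1)]
                      for v in row))
```

Multiplying the gratings instead of adding them mimics physically stacking two line patterns. A small angle between them produces the broad beat bands that read as moiré, so a higher reading here would give tighter, more tilted bands.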

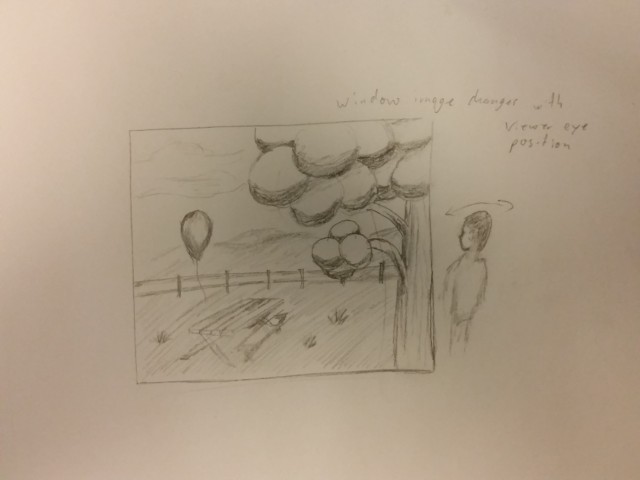

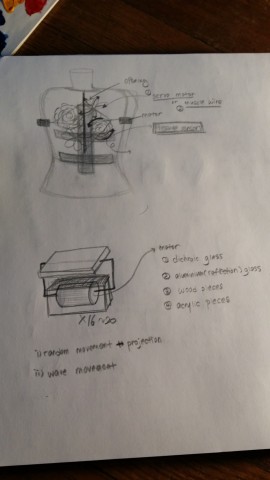

The color is generated by taking the average color of the participant as seen by the Kinect's camera. I'm also using the Kinect so the piece can stand by itself as an installation and will only activate when someone is standing riiight in front of it. While the person stands there, the Kinect takes in information about them. After the person walks away (as detected by the Kinect), the sketch will generate the picture from all the information it gathered and post it to a Tumblr blog with a number, so the participant can see which picture represents them.
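The activate, collect, and generate cycle described above could be sketched as a small state machine. This assumes the Kinect library already supplies, per frame, the participant's distance in millimetres (or `None` when nobody is tracked) and the camera pixels belonging to that person, which `average_color` reduces to one color per frame. `SessionTracker`, the 1000 mm threshold, and the per-frame resistance readings are hypothetical. Posting to Tumblr is deliberately left out rather than guessing at an API.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Sequence, Tuple

RGB = Tuple[int, int, int]


def average_color(pixels: Sequence[RGB]) -> RGB:
    """Mean (r, g, b) of a list of pixels, rounded to integers."""
    if not pixels:
        raise ValueError("no pixels to average")
    n = len(pixels)
    return (
        round(sum(p[0] for p in pixels) / n),
        round(sum(p[1] for p in pixels) / n),
        round(sum(p[2] for p in pixels) / n),
    )


@dataclass
class SessionTracker:
    near_mm: int = 1000      # hypothetical "standing right in front" distance
    next_number: int = 1     # number shown next to each posted picture
    active: bool = False
    colors: List[RGB] = field(default_factory=list)
    resistances: List[float] = field(default_factory=list)

    def update(self, depth_mm: Optional[int], frame_color: Optional[RGB],
               resistance: Optional[float]
               ) -> Optional[Tuple[int, RGB, Optional[float]]]:
        """Feed one frame. Returns (number, average color, mean resistance)
        on the frame where the participant leaves, otherwise None."""
        present = depth_mm is not None and depth_mm <= self.near_mm
        if present:
            # Someone is close enough: keep gathering readings.
            self.active = True
            if frame_color is not None:
                self.colors.append(frame_color)
            if resistance is not None:
                self.resistances.append(resistance)
            return None
        if not self.active:
            return None  # nobody was here; stay idle
        # The participant just walked away: summarise the session and reset.
        self.active = False
        result = None
        if self.colors:
            mean_r = (sum(self.resistances) / len(self.resistances)
                      if self.resistances else None)
            result = (self.next_number, average_color(self.colors), mean_r)
            self.next_number += 1
        self.colors.clear()
        self.resistances.clear()
        return result


if __name__ == "__main__":
    tracker = SessionTracker()
    frames = [
        (None, None, None),               # empty room
        (800, (10, 20, 30), 100_000.0),   # participant arrives
        (900, (30, 40, 50), 200_000.0),
        (2500, None, None),               # participant walks away
    ]
    for depth, color, r in frames:
        done = tracker.update(depth, color, r)
        if done:
            print(done)  # (1, (20, 30, 40), 150000.0)
```

The returned number is what would be attached to the generated picture, and the average color could tint the moiré before it is posted.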