Looking Outwards: Final Project

(apologies for posting this so late)

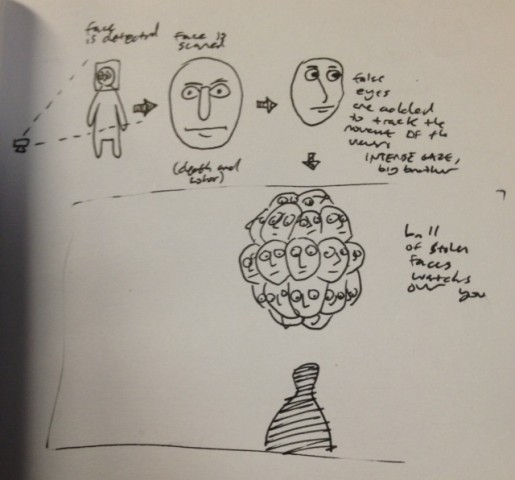

For the past year or so, I’ve been very interested in surveillance conducted by machines that rely on hidden, mysterious, or proprietary algorithms and giant databases. The three projects below all deal with this idea and gave me a lot of inspiration for my final ‘panopticon’ project.

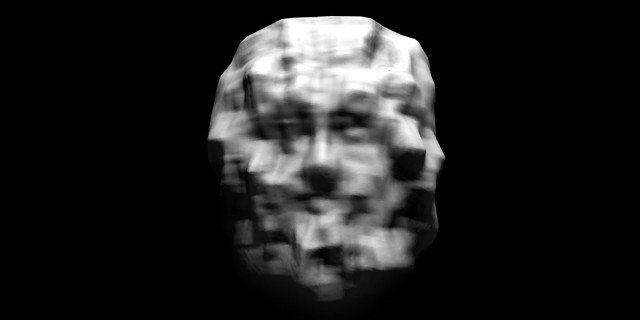

“Data Masks” by Sterling Crispin, 2013-present

“Sterling Crispin’s ‘Data Masks’ use raw data to show how technology perceives humanity… Reverse-engineered from surveillance face-recognition algorithms and then fed through Facebook’s face-detection software, the Data Masks confront viewers with the realization that they’re being seen and watched basically all the time.”

“Stranger Visions” by Heather Dewey-Hagborg, 2012-2013

“In ‘Stranger Visions’, Heather Dewey-Hagborg analyses DNA from found cigarette butts, chewed gum and stray hairs to generate portraits of each subject based on their genetic data… While not so exact as to readily identify an individual, the portraits demonstrate the disquieting amount of information that can be derived from a single strand of a stranger’s hair and the disturbing potential for surveillance of our most personal information.”

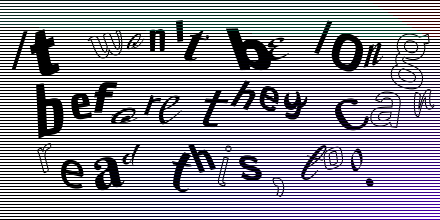

“CAPTCHA Tweet” by Shin Seung Back and Kim Yong Hun, 2013

“‘CAPTCHA Tweet’ is an application that users can post tweets as CAPTCHA. Since computers can hardly read it, humans can communicate behind their sight.”