Finger Launchpad

Tweet:

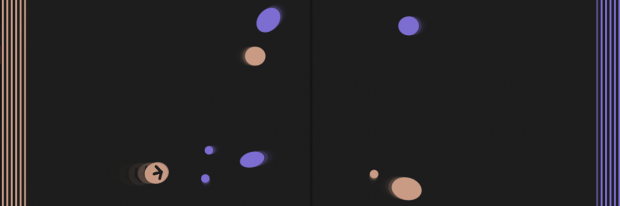

Launch your fingertips at your opponent. Think Multi-Touch Air Hockey. A game for MacBooks.

________________________________________________________________________________

Blurb:

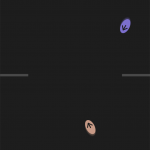

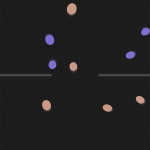

Launch your fingertips at your opponent. Think Multi-Touch Air Hockey. On the MacBook’s touchpad (which is the same as that of an iPad), use up to 11 fingers to try to lower your opponent’s health to 0. Hold a finger on the touchpad for a second; when you lift it, the fingertip is launched in the direction the finger was pointing. When fingertips collide, the bigger one wins. Large fingertips do more damage than small ones. Skinny fingertips go faster than wide ones. Engage in the ultimate one-on-one multi-touch battle.
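For readers who want to see the rules spelled out, here is a minimal Python sketch of them. It is not the game's actual code: the `Fingertip` class, the ellipse-area measure of size, the inverse-width speed formula, the tie rule, and every constant are assumptions made purely for illustration.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

# All constants are illustrative assumptions, not values taken from the game.
MIN_HOLD_SECONDS = 1.0   # "hold a finger on the touchpad for a second"
BASE_SPEED = 300.0       # hypothetical speed scale
DAMAGE_PER_AREA = 0.01   # hypothetical damage per unit of contact area


@dataclass
class Fingertip:
    width: float   # minor axis of the touch ellipse
    length: float  # major axis of the touch ellipse
    angle: float   # direction the finger was pointing, in radians

    @property
    def area(self) -> float:
        # Area of an ellipse whose full axes are width and length.
        return math.pi * (self.width / 2) * (self.length / 2)

    @property
    def speed(self) -> float:
        # Skinny fingertips go faster: speed falls as width grows (assumed formula).
        return BASE_SPEED / self.width

    @property
    def damage(self) -> float:
        # Large fingertips do more damage: damage grows with area (assumed formula).
        return DAMAGE_PER_AREA * self.area

    def velocity(self) -> Tuple[float, float]:
        # Launch along the direction the finger was pointing.
        return (self.speed * math.cos(self.angle),
                self.speed * math.sin(self.angle))


def can_launch(hold_seconds: float) -> bool:
    # A fingertip launches only after being held long enough.
    return hold_seconds >= MIN_HOLD_SECONDS


def collide(a: Fingertip, b: Fingertip) -> Optional[Fingertip]:
    # The bigger fingertip wins; on an exact tie neither survives (assumption).
    if a.area > b.area:
        return a
    if b.area > a.area:
        return b
    return None
```

With these assumed formulas, a narrow touch such as `Fingertip(width=10, length=20, angle=0.0)` moves faster than a wide one such as `Fingertip(width=20, length=20, angle=0.0)`, but the wide one does more damage and survives a collision between the two.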

________________________________________________________________________________

Gameplay Video:

________________________________________________________________________________

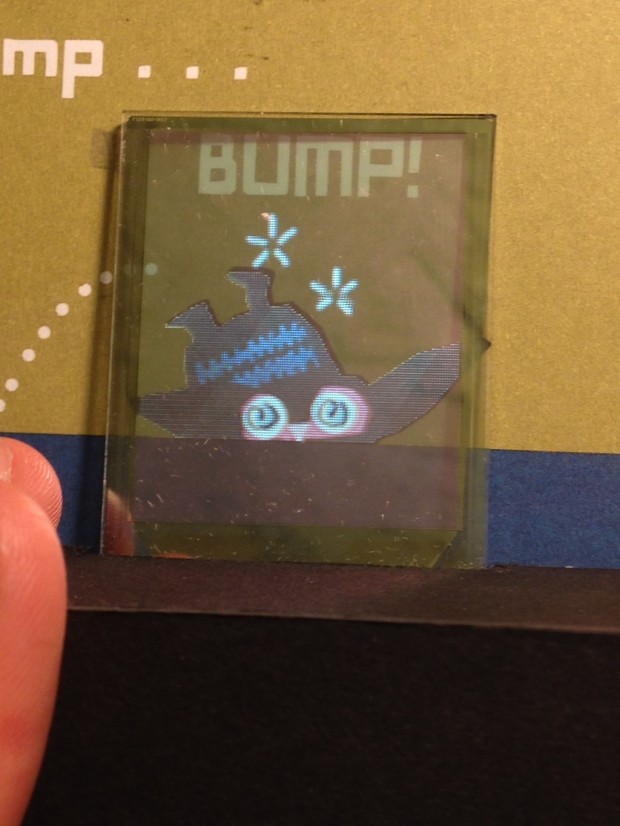

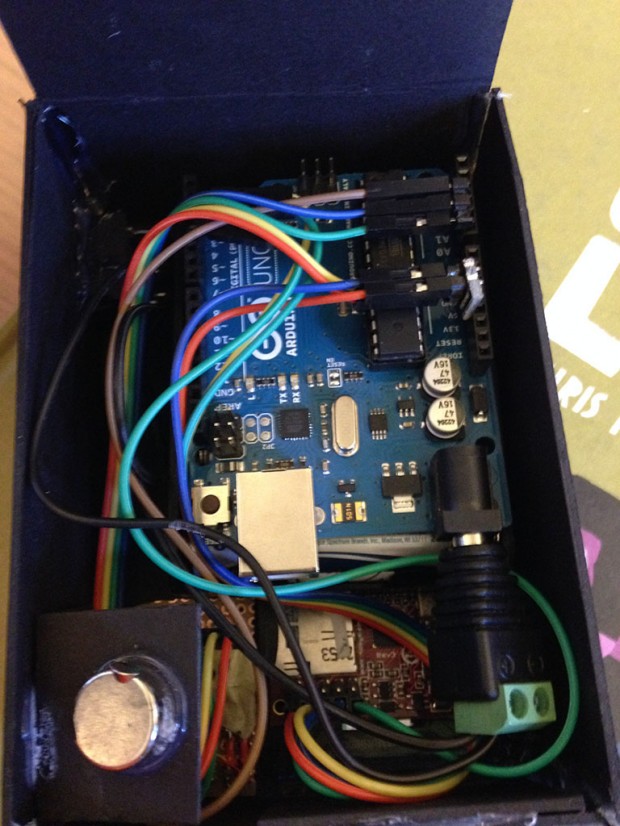

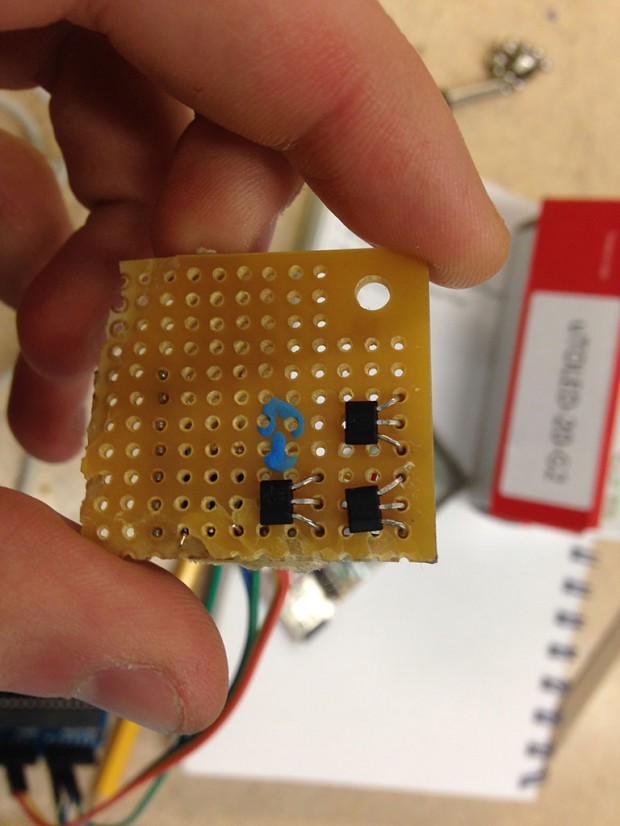

Photos:

________________________________________________________________________________

Narrative:

All semester in his IACD studio, Golan told me that I would get to make a game. Then the final project came around, and it was time to make one. But what game to make? I was paralyzed by the possibilities and by the fear that, after a semester of anticipation, I wouldn’t make a game that lived up to my expectations or Golan’s.

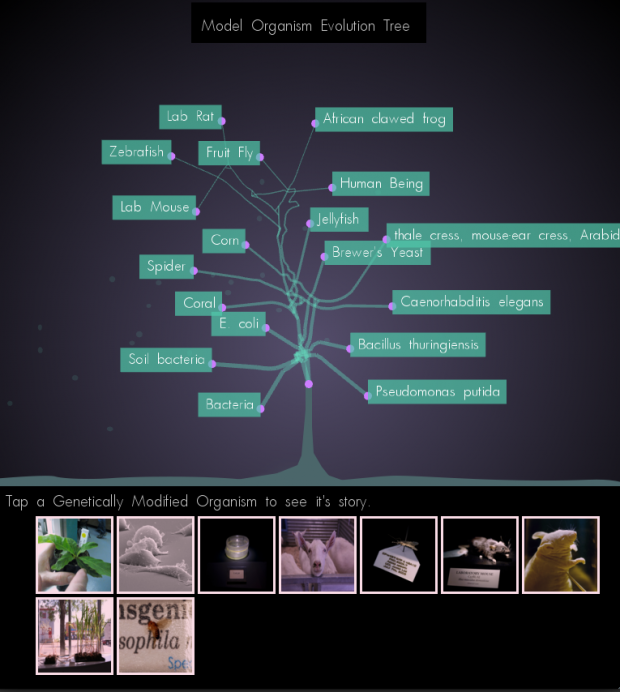

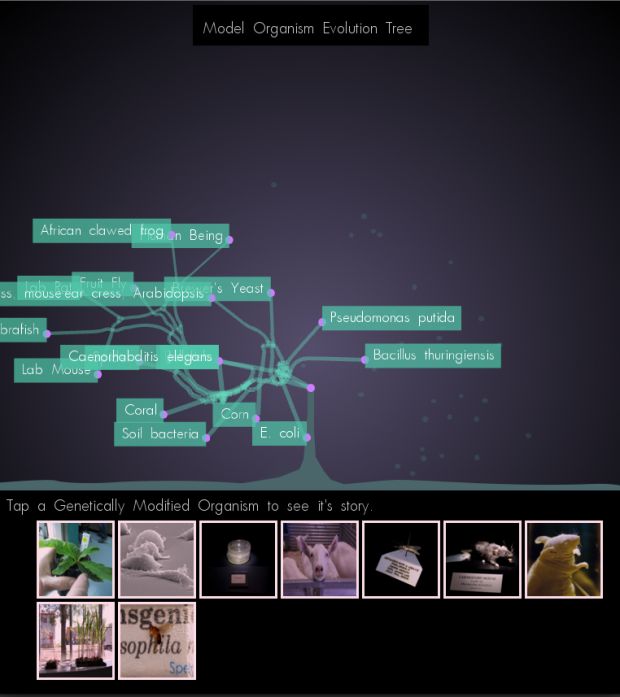

After we talked about what kind of game I should make, Golan gave me a tool, made by a previous IACD student, that makes it easy to read the multi-touch interactions that occur on a MacBook trackpad. That meant I could make a multi-touch game without having to jump through hoops to build it for a mobile device.

So I sat there pondering what game to make with this technology, and the ideas instilled in me by Paolo’s Experimental Game Design – Alternative Interfaces popped into my mind. If a game is multi-touch, then it should be truly multi-touch at its core (using multiple fingers at once should be central to gameplay). The visuals should be simple and minimalist (there is no need for some random theme that masks the game). This is the game that I came up with, and it serves as a combination of what I have learned from Paolo and Golan. I think this might be the best-designed game I have made yet, and so far it is certainly the one I am most proud of having made.

________________________________________________________________________________

Links:

View/Download Code @: GitHub

Download Game @: MacKenzie Bates’ Website

Download SendMultiTouches @: Duncan Boehle’s Website

Read More About Game @: MacKenzie Bates’ Website