tl;dr

– this class is awesome, you should take it

– nothing is impossible/just try it regardless

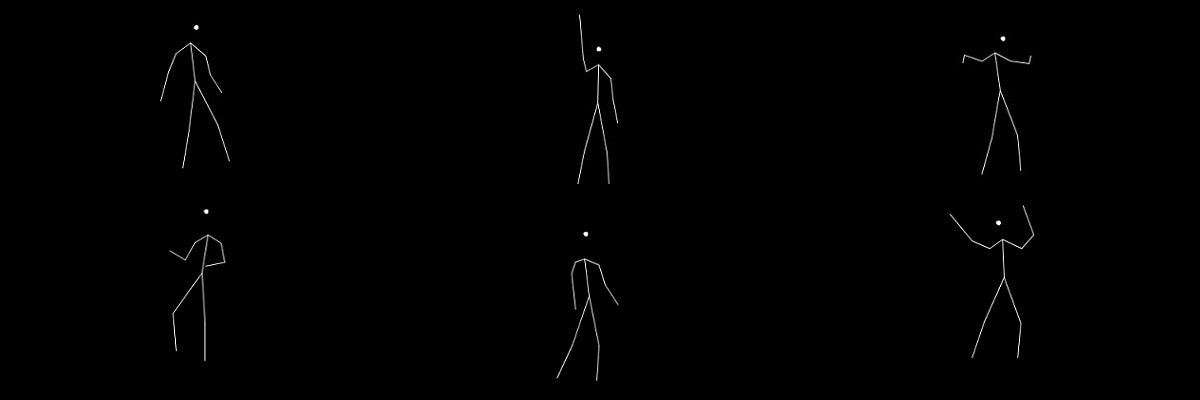

– be proud of your projects

As my junior year draws to a close, I look back on my semester with sadness. Not because I hated it, but because I am sorry to see it end. I never would have thought that I could come so far in the three years I’ve been in college. I thought my “genius” in high school was my peak. I thought it was good enough, or as good as it was going to get. This course has raised that potential far beyond what I could have ever imagined I was capable of. And I don’t mean this in terms of intelligence. The experiences I’ve had in the past three years have brought my potential for perseverance light years beyond what it would have been if I hadn’t gone to this school and pushed my way onto this class’s roster.

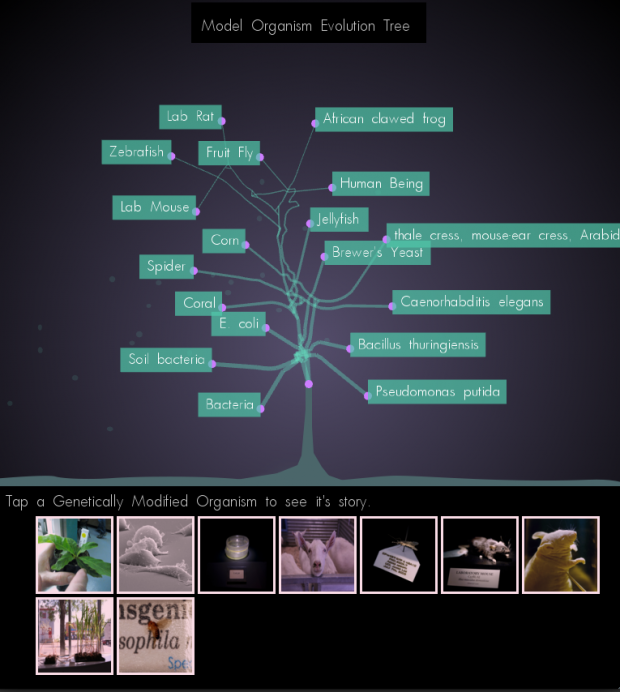

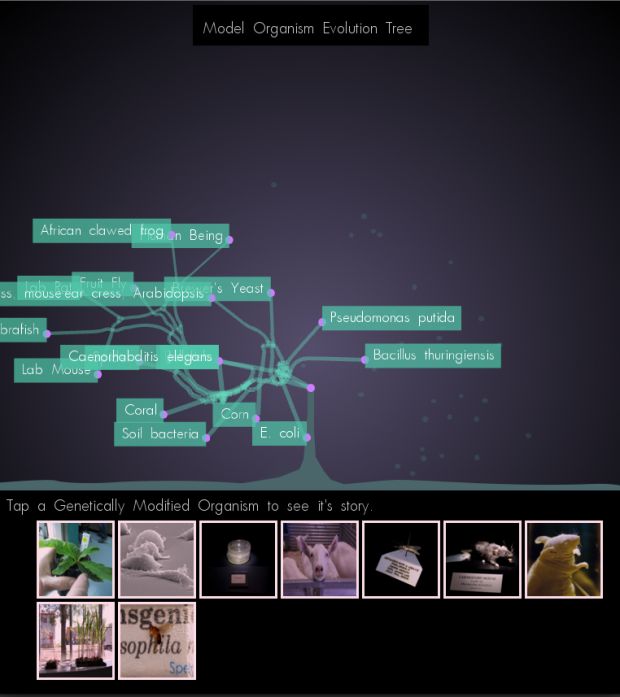

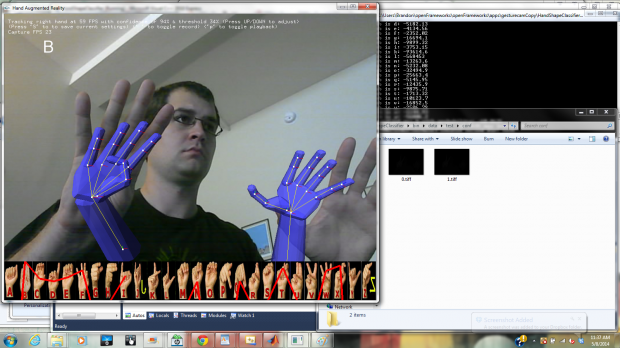

What have I learned from this course, you may ask? The main takeaway, which I’m sure was intentionally bludgeoned into all of us, is that whether or not something seems impossible, you should just try it. The possibility of achieving your goals, believe it or not, increases when you actually reach for them. This mentality has produced amazing projects from the course’s students, projects I never thought I would witness right before my eyes. I always felt that those amazing tech or design projects I saw online were like celebrities: you knew they grew from regular people, but you never thought you’d see one in the same room as you.

I will also forever take with me the idea that how you talk about your project is just as important as the project itself. This seems obvious, and you’d think that you’ve talked up a project enough, but as some of my peers’ projects this semester showed, the right documentation and the public potential you give your project can make a world of difference. How you present a project will determine how it is perceived. That self-deprecating thing that most people do when they talk about themselves to garner “oh psh, you’re great”s and “what are you talking about, it’s wonderful”s doesn’t work too well for most projects. Looking down on your own project will make others look down on it too, instead of seeing it for what it is at that moment. Sure, oftentimes your project might actually stink, but you don’t want others to know that.

You also have to be careful about how much you reveal about your project. You may think that technical details, like how many programs are running in the backend or how many wires you needed to solder together, are interesting, but they’re really not. If someone looking at your project cares in that much detail, they’ll ask. You have to present your project for what it is at the moment someone sees it, not what it was a couple of hours ago while you were booting it up. It’ll be important to say how you created the project (especially if it was in a new and interesting way), but the extra details might be better left out. But I digress.

I value this course in more ways than I can describe. Let’s just say that I’m very thankful to have been scrolling through CMU’s schedule of classes on the day just before Golan picked his students. Luck could not have been more on my side. Of course, after the class started, luck was no longer a factor; only hard work was. And I’m glad to have needed to put in all that hard work this semester. Without it, I would not have realized what great potential there is inside of me and, for that matter, inside of everyone. You always feel like there’s a limit within you, so when you think you’ve hit it, you never dare to push past it for fear of breaking. This course has annihilated that fear because I’ve realized that the limit only exists within our minds. Okay, maybe I’m getting a little carried away here, but even so, limitless or not, if there is anything you want to try, just try it.