Tweet:

Witness THE ENCOUNTER of two complex creatures. Teaching and learning cause a relationship to bloom.

Abstract:

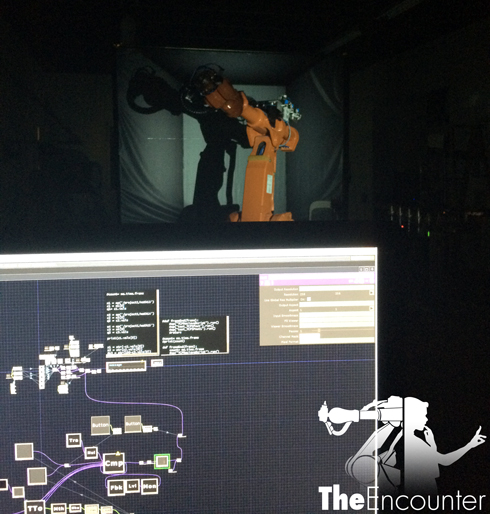

“The Encounter” tells the tale of two complex creatures. A human and an industrial robotic arm meet for the first time and engage in an unexpectedly playful interaction, teaching and learning from each other. This story represents how we, as a society, have grown to learn from and challenge technology as we move forward in time. Using TouchDesigner, RAPID, and the Microsoft Kinect, we derived a simple theatrical narrative through experimentation and collaboration.

Narrative:

During the planning stages of the capstone project, Golan Levin introduced us, the authors of the piece, to one another, saying that we could benefit from working together. Mauricio, from Tangible Interaction Design, wanted to explore the use of the robotic arm combined with mobile projections, while Kevan, from the School of Drama VMD, wanted to explore interactive theatre. This pairing led the two of us to explore and devise a performative piece from the ground up. In the beginning, we had no idea what story we wanted to tell or what we wanted it to look like. We just knew a few things we wanted to explore. We ultimately decided to let our tests and experiments drive the piece and let the story form naturally from the play between us, the robot, the tools, and the actress, Olivia Brown.

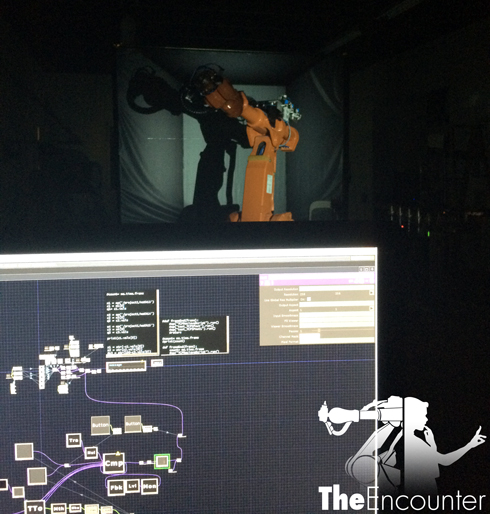

We tried out many different things and built several testing tools. One was a way to send Kinect data from a custom TouchDesigner script through a TCP/IP connection so that RAPID could read it and allow the robot to move in sync with an actor. This experiment proved to be a valuable tool and research experience. We also used a Processing sketch during the testing phases to evaluate some of the robot’s movement capabilities and responsiveness. Although we ended up dropping the live tracking from the final performance in favor of cue-based triggering, the idea of the robot moving and responding to the human’s movement ultimately drove the narrative of the final piece.
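To give a rough idea of what the sending side of such a bridge could look like, here is a minimal Python sketch. It is not the script we used: the message format (one semicolon-separated line per tracked point, in millimeters), the function names, and the host and port are all assumptions made for illustration, and the RAPID program on the controller would need to parse whatever format is chosen.

```python
import socket


def format_pose_message(x_mm, y_mm, z_mm):
    """Encode one tracked point as an ASCII line such as '120.0;-45.5;900.0\\n'.

    The semicolon-separated layout is a hypothetical format, not a RAPID standard.
    """
    return "{:.1f};{:.1f};{:.1f}\n".format(x_mm, y_mm, z_mm)


def send_pose(sock, x_mm, y_mm, z_mm):
    """Send one encoded point over an already connected socket-like object."""
    sock.sendall(format_pose_message(x_mm, y_mm, z_mm).encode("ascii"))


def connect_to_robot(host, port, timeout_s=2.0):
    """Open a TCP connection to the robot controller.

    The host and port are placeholders; use whatever the controller listens on.
    """
    return socket.create_connection((host, port), timeout=timeout_s)
```

In use, a tracking loop would call `connect_to_robot` once and then `send_pose` for each new hand or joint position. Sending plain text lines keeps the receiving side simple, at the cost of some bandwidth.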

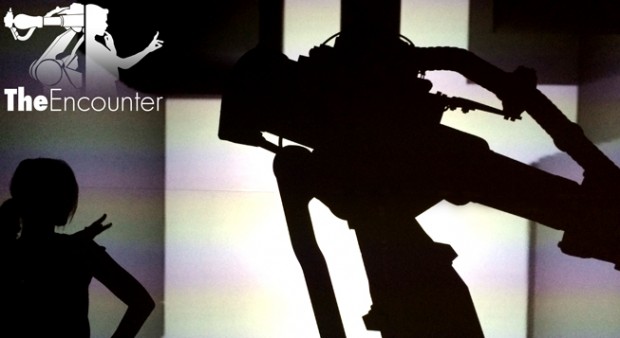

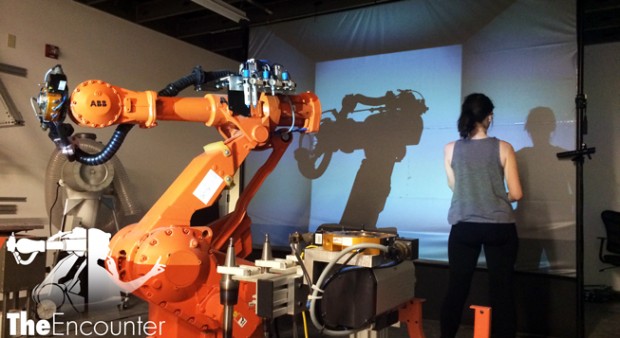

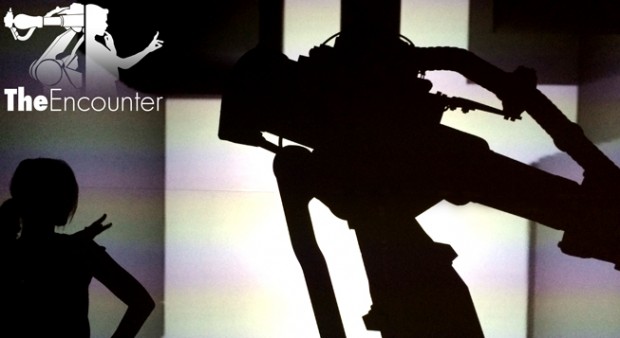

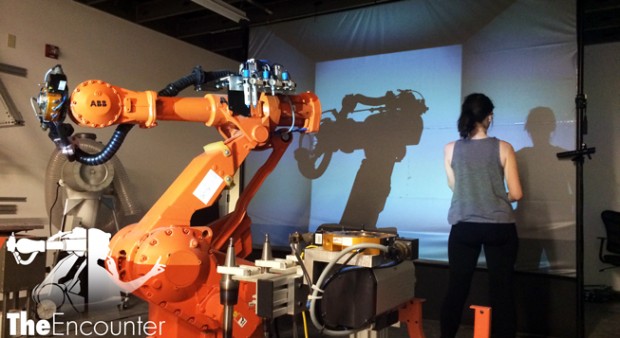

We had planned many different things, such as using the entire dFAB (dfabserver.arc.cmu.edu) lab space as the setting for the piece. This would have required multiple projector outputs and rigging, further planning, extra safety precautions for our actress, and more. Little did we realize how much trouble this would have caused us given the rapidly approaching deadline. Four days before we were supposed to have our performance, an unexpected individual came with a few words of wisdom for us: “Can a robot make shadow puppets?” Yes, the curiosity of Golan’s own son was the jumping-off point for our project to come together, not only visually but also realistically given the time we had left. From that moment on we set out to create a theatrical, interactive robotic shadow performance. Those four days included researching the best way to set up the makeshift stage in the small confines of dFAB and finishing the performance as strongly as we could. To make the stage, we rigged unistrut (lined with Velcro) along the pipes of the ceiling. From there we took a Velcro-lined RP screen from the VMD department and attached it to the unistrut and to two Autopoles for support. This created a sturdy, clean-looking projection and shadow surface in the space.

Conceptually, we used two different light sources for the narrative: an ellipsoidal stage light and a 6000-lumen Panasonic standard-lens projector. At the beginning of the piece, the stage light was used to cast the shadows. Through experimenting with light sources, we found that this type of light gave the “classic” shadow puppet atmosphere, with a vintage feel of the years before technology. As the story progresses and the robot turns on, the stage light flickers off while the digital projector takes over as the main source of light. This transition is meant to show the evolution of technology being introduced to our society while expressing the contrast between analog and digital.

The concept for the digital world was that it would react to both the robot’s and the human’s inputs. For example, the human brings organic life to the world. This is represented by the fluctuation of the color spectrum brought upon the cold, muted world of the robot. Because this is the robot’s world, as the robot moves around the space and begins to make more intricate maneuvers, the space responds like a machine. Boxes push in and out of the wall, as if the environment were alive and part of the machine. The colors of the world were completely controlled by the actress during the performance, while the boxes were cue-based and used noise patterns to control the speed of their movements. If there is one thing that would be great to expand on, it is sending the robot’s data back to TouchDesigner so that it could control the boxes’ movements live instead of having them cued by an operator.
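As an illustration of noise-driven box speeds, here is a small Python sketch. It uses a simple 1D value noise in place of TouchDesigner’s built-in noise operators, and every name and constant in it (`make_value_noise`, `box_speed`, `step_box`, the speed range, the per-box offset of 17) is hypothetical rather than taken from our project file.

```python
import math
import random


def make_value_noise(seed, table_size=256):
    """Return a smooth 1D noise function with values in [0, 1)."""
    rng = random.Random(seed)
    table = [rng.random() for _ in range(table_size)]

    def noise(t):
        i = math.floor(t)
        frac = t - i
        a = table[i % table_size]
        b = table[(i + 1) % table_size]
        s = frac * frac * (3.0 - 2.0 * frac)  # smoothstep easing between samples
        return a + (b - a) * s

    return noise


def box_speed(noise, box_index, time_s, min_speed=0.1, max_speed=1.0):
    """Map noise to a speed; the index offset decorrelates neighboring boxes."""
    n = noise(time_s + box_index * 17.0)
    return min_speed + (max_speed - min_speed) * n


def step_box(depth, direction, speed, dt):
    """Advance a box between depth 0 (flush) and 1 (fully out), reversing at the ends."""
    depth += direction * speed * dt
    if depth >= 1.0:
        return 1.0, -1
    if depth <= 0.0:
        return 0.0, 1
    return depth, direction
```

Each frame, a box would look up its current speed with `box_speed` and then move with `step_box`, so the boxes drift in and out at smoothly varying rates instead of in lockstep.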

For the robot’s movements, we ended up making them cue-based for the performance. This was done directly in RAPID code, cued from an ABB controller “teach pendant”, a touch- and joystick-based interface for controlling ABB industrial arms. We gave the robot pre-planned coordinates and movement operations based on rehearsals with the actress. One thing we would love to expand on is recording the actress’ movements so that we can play them back live instead of predefining them in a staged setting. In general, we would love to make the whole play between the robot and the human more improvised rather than staged. Yet robotic motion proved to be the main challenge to overcome: the multitude of axes (and hence possible motions) of the six-axis machine we used makes it very easy to inadvertently reach positions where the robot is “tangled”, at which point it throws an error and stops. It was interesting to learn, especially while working on this narrative, that these robotic arms and their programming workflows do not provide any notion of “natural motion” (as in the embodied intelligence humans have when moving their limbs in graceful and efficient ways without, for example, blocking themselves), and are definitely not targeted towards real-time input. These robots are not meant to interact with humans, which was our challenge both technically and narratively.
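The cue-based approach described above can be pictured as a simple cue stack, where an operator’s “go” releases the next block of pre-planned targets. The Python sketch below only illustrates that idea. It is not our RAPID code and does not talk to an ABB controller; the `Cue` and `CueStack` names and the example coordinates are invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cue:
    """One operator-triggered cue: a name plus pre-planned (x, y, z) targets in mm."""
    name: str
    targets: tuple


class CueStack:
    """Hands out cues in rehearsal order, one per operator 'go'."""

    def __init__(self, cues):
        self._cues = list(cues)
        self._next = 0

    def go(self):
        """Return the next cue, or None once the show has run out of cues."""
        if self._next >= len(self._cues):
            return None
        cue = self._cues[self._next]
        self._next += 1
        return cue

    def remaining(self):
        """Number of cues not yet triggered."""
        return len(self._cues) - self._next


# Hypothetical cue list; the names and coordinates are made up for illustration.
show = CueStack([
    Cue("wake_up", ((600.0, 0.0, 800.0), (650.0, 50.0, 900.0))),
    Cue("mirror_wave", ((650.0, 200.0, 900.0), (650.0, -200.0, 900.0))),
])
```

Keeping the targets fixed per cue means every position can be checked in rehearsal, which is one way to stay clear of the “tangled” configurations mentioned above, at the cost of losing improvisation.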

In the end, we created a narrative theatrical performance that we feel proud of, one that came out of much discussion, experimentation, and play in the dFAB lab. There is much more potential in this concept, and we hope to explore it further one day!

Github:

https://github.com/maurothesandman/IACD-Robot-performance

https://github.com/kdloney/TouchDesigner_RoboticPerformance_IACD

***The TouchDesigner GitHub link currently points to the old file used during rehearsals. The final file will be uploaded at a later date because of travel and file transfer problems.

SPECIAL THANK YOU:

Golan Levin, Larry Shea, Buzz Miller, CMU dFAB, CMU VMD, Mike Jeffers, Almeda Beynon, Anthony Stultz, Olivia Brown, and everyone that was involved with Spring 2014 IACD!

Best,

Kevan Loney

Mauricio Contreras