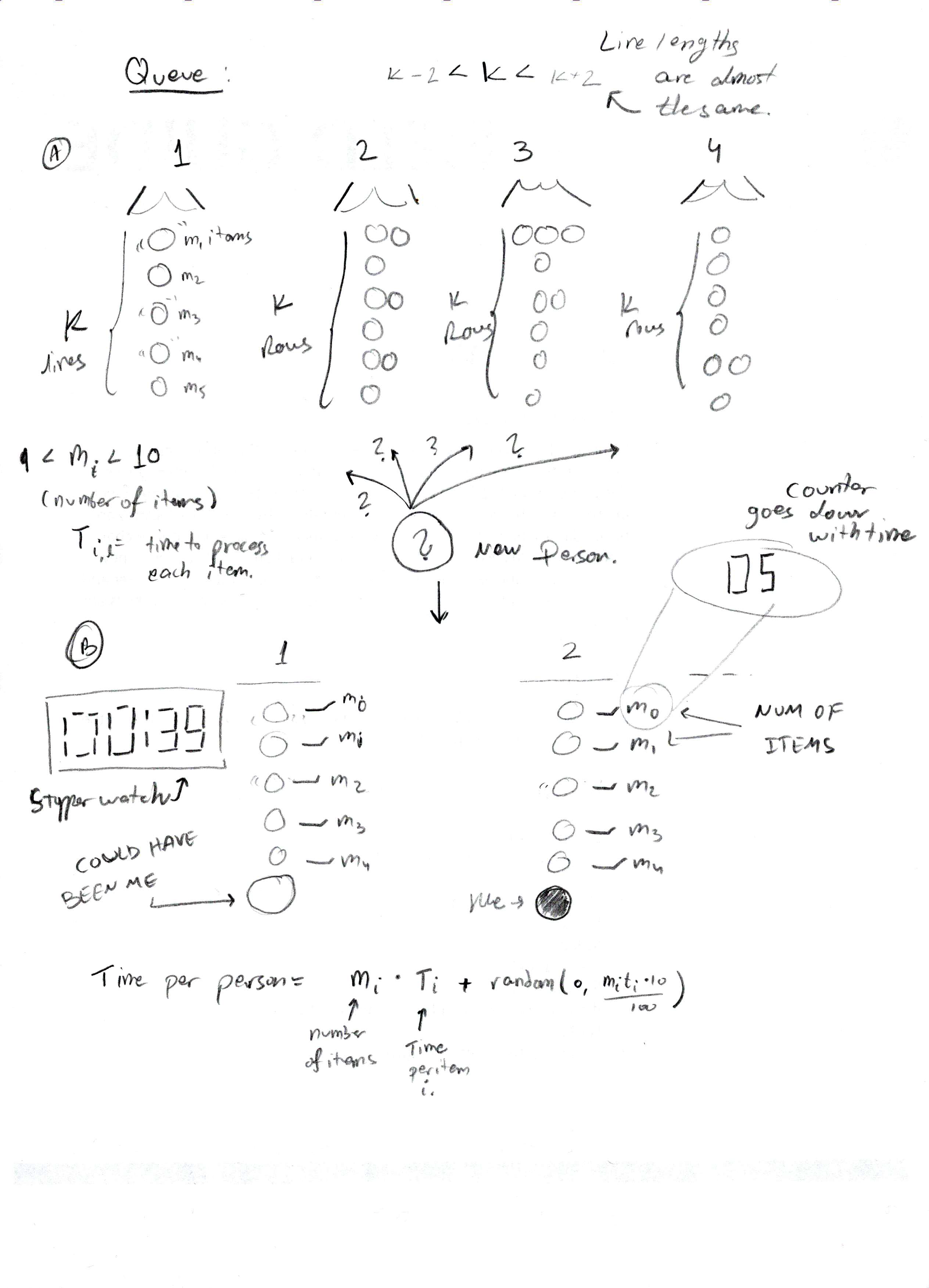

Since Project Three asks us to come up with a way to generate form, I began my concepting by looking at how I already generate form. I do a lot of woodworking and a lot of cooking, so I started looking at how I could generate form in those contexts. Without some complicated Rhino scripting to drive a CNC router, algorithmic woodworking seemed like a no-go in our timeframe, so I focused on food. Initially, I wanted to build some sort of robotic cooking tool, but again: time issues. Golan encouraged me to do something with Markov chain text synthesis and recipes, so I began to look at ways to generate recipes algorithmically.

The difficult part of recipe generation is that the associations created by ingredient lists and titles REALLY mess up Markov synthesis. I started with a Belgian folk recipe book from Project Gutenberg, edited out the intro and exit, then wrote a quick script to strip out the titles. Running that through the Markov synthesizer gave me a truly unique recipe for a cod stew with raspberries, so I knew I was on the right track.
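For anyone curious what Markov synthesis does under the hood, here is a minimal Python sketch. It is not the synthesizer used for this project; the function names `build_chain` and `generate` and the two-word `order` are assumptions made for illustration. The idea is to record which word follows each short run of words in the source text, then take a random walk through those records.

```python
import random
from collections import defaultdict

def build_chain(text, order=2):
    """Map each run of `order` words to the list of words that follow it."""
    words = text.split()
    chain = defaultdict(list)
    for i in range(len(words) - order):
        state = tuple(words[i:i + order])
        chain[state].append(words[i + order])
    return chain

def generate(chain, length=50, seed=None):
    """Walk the chain from a random starting state, emitting up to `length` words."""
    rng = random.Random(seed)
    state = rng.choice(sorted(chain))  # sorted so a given seed is reproducible
    output = list(state)
    while len(output) < length:
        followers = chain.get(state)
        if not followers:  # dead end: this state only appeared at the end of the text
            break
        output.append(rng.choice(followers))
        state = tuple(output[-len(state):])
    return " ".join(output)

if __name__ == "__main__":
    sample = "beat the eggs and beat the sugar and beat the cream"
    print(generate(build_chain(sample), length=12, seed=1))
```

This also shows why titles and ingredient lists cause trouble: any words that sit next to each other in the source become legal transitions, so a title followed by the first line of a recipe gets stitched into the middle of a sentence.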

I’ve decided for this project to create a cookbook of 20 or so algorithmically generated recipes, tentatively entitled “Edible Algorithms”. I felt that it really wasn’t enough to just generate the text of recipes (the work for which essentially involves editing a plaintext and running it through a very simple algorithm). To really get at the heart of the project, you have to cook them.

This weekend, I’ll be cooking 8-10 of the recipes I’ve generated and documenting them with photos (which will of course be in the cookbook). I’m finishing my plaintext editing today, and should hopefully have all my recipes generated by tonight. Then I’ll have to reverse engineer a list of ingredients out of the recipes and go shopping. I’m also still working on a way to generate titles for each recipe (I’m thinking a word-frequency count that gives “Most Common Adjective + Most Common Noun” for each recipe).
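The title idea could be sketched roughly like this in Python. Telling adjectives from nouns really needs a part-of-speech tagger, so the tiny hand-made word lists `ADJECTIVES` and `NOUNS` below are a hypothetical stand-in, and `make_title` is a name invented for this example.

```python
import re
from collections import Counter

# Hypothetical hand-made word lists standing in for a real part-of-speech tagger.
ADJECTIVES = {"tender", "moist", "shallow", "buttered", "boiling", "delicious"}
NOUNS = {"steaks", "oven", "pan", "sauce", "water", "onions", "mushrooms"}

def make_title(recipe):
    """Return 'Most Common Adjective + Most Common Noun', or None if either is missing."""
    words = re.findall(r"[a-z]+", recipe.lower())
    adjectives = Counter(w for w in words if w in ADJECTIVES)
    nouns = Counter(w for w in words if w in NOUNS)
    if not adjectives or not nouns:
        return None
    adjective = adjectives.most_common(1)[0][0]
    noun = nouns.most_common(1)[0][0]
    return f"{adjective.title()} {noun.title()}"

if __name__ == "__main__":
    print(make_title("Pour the sauce over the buttered steaks. "
                     "Heat the sauce in a buttered pan with tender onions."))
```

With word lists this small most recipes would come back with the same handful of titles, so a real version would want a proper tagger or at least much bigger lists.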

I’m pretty excited (and you should be terrified given that I’m probably bringing in some food Thursday).

-John

A note about my plaintext:

I pirated some scans of “Mastering the Art of French Cooking”, “Joy of Cooking”, and “The Silver Palate”, which I consider to be seminal works in American cuisine. I ran them through OCR, and have since been editing the output with some simple regex-based Perl scripts that strip out things like page numbers, recipe titles, chapter names, etc.
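As a rough illustration of that kind of cleanup, here is a sketch in Python rather than Perl. These are not the actual scripts: the patterns assume that page numbers sit alone on a line and that recipe titles, chapter names, and running heads are set in all caps on their own lines, which will not hold for every scan. The names `PAGE_NUMBER`, `ALL_CAPS_HEADING`, and `clean_ocr` are made up for this example.

```python
import re

# Assumption: a page number is a line holding nothing but 1-4 digits.
PAGE_NUMBER = re.compile(r"^\s*\d{1,4}\s*$")
# Assumption: titles, chapter names, and running heads are all-caps lines,
# optionally with a page number at either end (e.g. "FILET STEAKS 297").
ALL_CAPS_HEADING = re.compile(
    r"^\s*(?:\d{1,4}\s+)?[A-Z][A-Z '&,-]*[A-Z](?:\s+\d{1,4})?\s*$"
)

def clean_ocr(text):
    """Drop lines that look like page numbers or all-caps headings."""
    kept = []
    for line in text.splitlines():
        if PAGE_NUMBER.match(line) or ALL_CAPS_HEADING.match(line):
            continue
        kept.append(line)
    return "\n".join(kept)

if __name__ == "__main__":
    page = "FILET STEAKS 297\nPour the sauce over the steaks and serve.\n298\nCHAPTER SEVEN"
    print(clean_ocr(page))
```

A line-based filter like this only catches headings that OCR kept on their own line. A running head that got merged into the body text slips straight through and ends up in the Markov output.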

Sample Recipe I generated last night:

Beat a tablespoon of sugar is whipped into them near the end of which time the meat should be 3 to 3 1/2 FILET STEAKS 297 inches in diameter and buttered on one side of the bird from the neck to the tail, to expose the tender, moist flesh. Gradually make the cut shallower w1til you come up to the rim all around. Set in middle level of preheated oven. Turn heat down to 375· Do not open oven door for 20 minutes. Drain thoroughly, and proceed with the recipe. Blanquette d Agneau Delicious lamburgers may be made like beef stew, and suggestions are listed after it. Savarin Chantilly Savarin with Whipped Cream The preceding savarin is a model for other stews. You may, for instance, omit the green beans, peas, Brussels sprouts, baked tomatoes, or a garniture of sauteed mushrooms, braised onions, and carrots, or with buttered green peas and beans into the boiling salted water. Bring the water to the thread stage 230 degrees. Measure out all the sauteing fat. Pour the sauce over the steaks and serve. rated, washed, drained, and dried A shallow roasting pan containing a rack Preheat oven to 400 degrees. Spread the frangipane in the pastry shell. Arrange a design of wedges to fit the bottom of the pan, forming an omelette shape. A simpleminded but perfect way to master the movement is to practice outdoors with half

UPDATE TO MY UPDATE: Started cooking this first recipe. Since I can’t make a 24-foot steak, I decided I would take a bit of creative license and use 2.97in medallions instead.

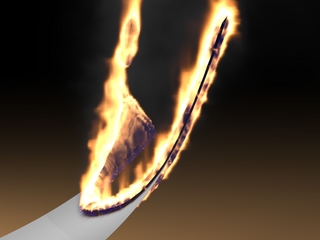

Photo 1: “3 to 3 1/2 FILET STEAKS 2.97in in diameter” with some meat typography from trimming “the cut shallower until you come up to the rim all around”

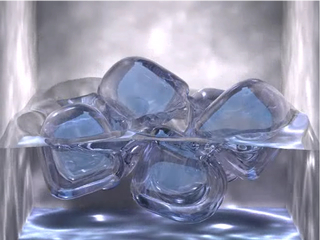

Photo 2: Completed Dish (in frangipane bed, with onions, carrots, peas, and Brussels sprouts, garnished with sauteed mushrooms)

It terrifies me that this looks good. As for taste, the frangipane sauce was actually delicious with the meat (which cooked to a medium-rare at 20 minutes and 375 degrees). It did NOT mesh so well with the vegetables, which were less than impressive.