For the final project, I wanted to try something similar to the drawing software I did earlier in the semester (this), but spin it more as a self-contained interactive work in itself rather than a tool for making other work. I focused on the idea of paintable surfaces having more interaction with the painter than just letting themselves be painted. When I viewed the old drawing software in this light, I realized that originally, I was seeing the environment as a static thing that I, as the painter, would populate with life and let grow beyond my original idea while subject to physical, program-enforced constraints. This would be great as a tool, but I wanted to go somewhere different with it. I started thinking of the environment as more of a system of both living and static things that might interact with each other, and to which I, as the painter, would be a potentially invading outsider.

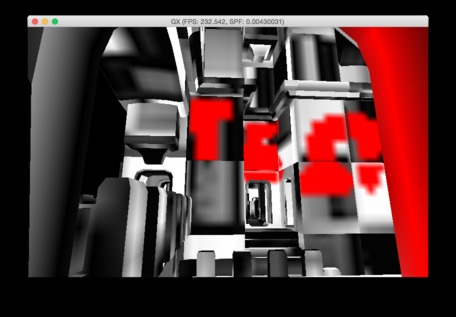

This thinking led me to create this:

Here, there is a simple environment of a static architecture and living creatures that interact via paint that the user applies. By default, the creatures will fly about in arbitrary patterns, but when the user paints the architecture, they will swarm onto the paint to clean it up. The goal was to suggest the idea of the user’s attempt to make the boring environment more colorful being at odds with the creatures’ natural habitat. Unfortunately, the way this was set up did not clearly communicate this idea.

After considering feedback, I realized that part of the reason it wasn’t clearly suggesting this relationship between the objects was that there were too many things visible that were not this interaction. It was too easy to focus on less important aspects.

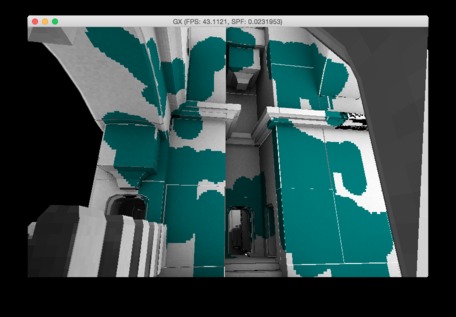

To fix this, I started thinking more about the idea of traditional painting and how it relates to painting on a 3D environment through a 2D surface. I removed the ability for the user to see everything by default, so it would more closely reflect the notion of a painter creating a world via painting and perhaps more strongly motivate a user to interact for a bit rather than just letting the system be. I made the creatures visible only through their interaction with the environment, borrowing from the original drawing program, where the automata were visible only when they touched the environment. I also lowered the resolution of the underlying painted texture to further remove information. With less information immediately visible, the user could more freely imagine what could be there.

In the end, I don’t think I made it clear whether the user is interacting with the 3D world of the environment or the 2D world of the screen. The user touches a 2D surface and a 3D surface becomes visible as a result, but it could also very well be that the user is just revealing things in the same way that someone wipes snow off their windshield. Likewise, it is unclear in this case whether the user is actually painting something from their own imagination with the environment responding to it, or just revealing more of an existing world. In both of these cases, the answer I want to be clear is that it is completely valid to view it either way, but I don’t think there is enough going on in this program to confirm that as my intention. The interaction between static geometry, creatures, and user is currently far too simple to suggest that these creatures are anything more than randomly moving erasers. Giving the creatures more interaction and personality would greatly improve this. Likewise, giving the user a limited amount of additional control, like pressure sensitivity, brush size, color, etc., might sell it more as painting while still keeping some of the other possibility as well.

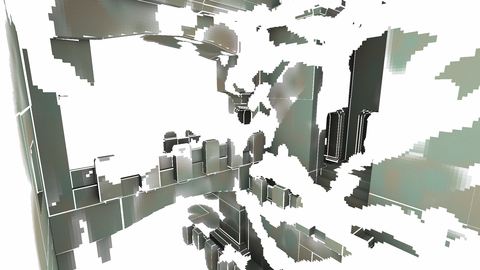

I think this confusion suggests a future direction, though. When the creatures consume the paint and re-hide the environment, the 3D environment gets deconstructed to the point where the visual cues we use to infer the structure of the environment from 2D information no longer exist. Because everything returns to white, there is no reason why the underlying world couldn’t change entirely as long as the visible portions remain the same. In effect, the user would be painting in 2D and changing the structure of a 3D environment, but through the process of the user discovering a random and ever-changing space that must be painted to be preserved.

There is also no reason why the space that is not visible must be physically plausible. The underlying space might not be 3D at all, and the 2D projection might seem totally illogical as a 3D space, like an M. C. Escher work. However, if the user is unable to fully paint the surface, they might never realize it, and might forever assume that this is just a very complicated 3D surface that they might eventually understand if they keep painting.

How it works

Code: https://github.com/Oddity007/ExplorationViaPainting

Uses: C++, OpenGL, Bullet, Assimp, nanoflann, glfw, lua, glm, stb lib

Has some unused artifacts of: CGAL, OpenVDB

Underneath, the 3D geometry gets unwrapped into a 2D plane such that every pixel on a texture corresponds to a unique point on the 3D surface. When the user touches the screen, the program unprojects the 2D touch point to find the corresponding 3D point where the user touched the surface. It then renders a 3D sphere around that point to represent the paint, but instead of rendering it to the screen, it renders it to the unwrapped 3D->2D mesh texture.
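To make the texture-space painting step concrete, here is a minimal CPU sketch; it is not the project's actual code, which does this on the GPU with OpenGL. It assumes each texel already knows which 3D surface point it maps to, and it colors every texel whose surface point falls inside the paint sphere. `PaintTexture`, `makeFlatQuadTexture`, and `paintSphere` are hypothetical names, and the flat unit quad stands in for a real unwrapped mesh.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Vec3 { float x, y, z; };

// Hypothetical CPU stand-in for the unwrapped paint texture: each texel
// stores the 3D surface point it maps to and its current color.
struct PaintTexture {
    int width;
    int height;
    std::vector<Vec3> surfacePoint;
    std::vector<Vec3> color;
};

// Assumed test surface: a unit quad in the z = 0 plane whose unwrap is the
// identity, so texel (i, j) maps to ((i + 0.5) / width, (j + 0.5) / height, 0).
PaintTexture makeFlatQuadTexture(int width, int height) {
    assert(width > 0 && height > 0);
    PaintTexture tex{width, height, {}, {}};
    const std::size_t count = static_cast<std::size_t>(width) * height;
    tex.surfacePoint.reserve(count);
    tex.color.assign(count, Vec3{1.0f, 1.0f, 1.0f});  // start fully white
    for (int j = 0; j < height; ++j)
        for (int i = 0; i < width; ++i)
            tex.surfacePoint.push_back(
                Vec3{(i + 0.5f) / width, (j + 0.5f) / height, 0.0f});
    return tex;
}

// Blend `paint` into every texel whose 3D surface point lies inside the
// sphere (center, radius). Returns the number of texels touched.
int paintSphere(PaintTexture& tex, Vec3 center, float radius,
                Vec3 paint, float opacity) {
    assert(radius > 0.0f);
    int touched = 0;
    for (std::size_t k = 0; k < tex.surfacePoint.size(); ++k) {
        const Vec3& p = tex.surfacePoint[k];
        const float dx = p.x - center.x;
        const float dy = p.y - center.y;
        const float dz = p.z - center.z;
        if (dx * dx + dy * dy + dz * dz > radius * radius) continue;
        Vec3& c = tex.color[k];
        c.x = c.x * (1.0f - opacity) + paint.x * opacity;
        c.y = c.y * (1.0f - opacity) + paint.y * opacity;
        c.z = c.z * (1.0f - opacity) + paint.z * opacity;
        ++touched;
    }
    return touched;
}
```

Testing against the 3D position rather than the texel coordinate is what lets a single brush stroke cross seams in the unwrap: two texels far apart in the texture can still sit next to each other on the surface.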

The painted points also get put into a physics simulation as very small spheres, where they can interact with the mesh geometry. After a certain, slightly random amount of time, they get removed.
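The lifetime bookkeeping described above could look roughly like the following sketch. It leaves out the Bullet physics step entirely and only shows points being given a slightly random lifetime and removed once they exceed it. `PaintPointPool` and its parameters are hypothetical, and the base lifetime and jitter values are illustrative assumptions rather than the project's numbers.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

struct Vec3 { float x, y, z; };

// One paint point: where it is, how long it has lived, and when it expires.
struct PaintPoint {
    Vec3 position;
    float age;
    float lifetime;
};

// Hypothetical container for live paint points. In the real program each
// point would also own a small sphere body in the physics world.
class PaintPointPool {
public:
    PaintPointPool(float baseLifetime, float lifetimeJitter, unsigned seed)
        : rng_(seed),
          lifetime_(baseLifetime - lifetimeJitter,
                    baseLifetime + lifetimeJitter) {
        assert(lifetimeJitter >= 0.0f && lifetimeJitter < baseLifetime);
    }

    // Add a point with a lifetime drawn from base +/- jitter.
    void spawn(Vec3 position) {
        points_.push_back(PaintPoint{position, 0.0f, lifetime_(rng_)});
    }

    // Age every point by dt seconds, then drop the ones that have expired.
    void update(float dt) {
        for (PaintPoint& p : points_) p.age += dt;
        points_.erase(
            std::remove_if(points_.begin(), points_.end(),
                           [](const PaintPoint& p) {
                               return p.age >= p.lifetime;
                           }),
            points_.end());
    }

    std::size_t size() const { return points_.size(); }

private:
    std::mt19937 rng_;
    std::uniform_real_distribution<float> lifetime_;
    std::vector<PaintPoint> points_;
};
```

Randomizing the lifetime per point keeps a stroke from vanishing all at once; points laid down together expire at slightly different moments.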

The erasing creatures work essentially the same way. Each one finds a nearby painted point on the screen and moves toward it, with random jitter added to its walk. If a painted pixel is nearby, the creature renders a new sphere, but sets the pixels around it to white instead of mixing them with a paint color.
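A single creature update might be sketched as follows. This is an illustration under assumptions, not the project's code: it finds the nearest painted point with a brute-force scan (the project lists nanoflann, which would normally handle this lookup with a k-d tree), and the names `Creature`, `nearestPoint`, and `stepCreature`, along with the speed, jitter, and erase-radius parameters, are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

struct Vec3 { float x, y, z; };

struct Creature { Vec3 position; };

// Brute-force nearest neighbor; returns -1 when there is nothing painted.
int nearestPoint(const std::vector<Vec3>& painted, Vec3 from) {
    int best = -1;
    float bestDist2 = 0.0f;
    for (std::size_t i = 0; i < painted.size(); ++i) {
        const float dx = painted[i].x - from.x;
        const float dy = painted[i].y - from.y;
        const float dz = painted[i].z - from.z;
        const float d2 = dx * dx + dy * dy + dz * dz;
        if (best < 0 || d2 < bestDist2) {
            best = static_cast<int>(i);
            bestDist2 = d2;
        }
    }
    return best;
}

// Move the creature one step toward the nearest painted point, add random
// jitter, and report whether it is now close enough to erase. The caller
// would then paint a white sphere at the creature's position.
bool stepCreature(Creature& c, const std::vector<Vec3>& painted, float speed,
                  float jitter, float eraseRadius, std::mt19937& rng) {
    assert(speed > 0.0f && jitter >= 0.0f);
    std::uniform_real_distribution<float> unit(-1.0f, 1.0f);
    const int target = nearestPoint(painted, c.position);
    if (target >= 0) {
        const Vec3& t = painted[static_cast<std::size_t>(target)];
        const float dx = t.x - c.position.x;
        const float dy = t.y - c.position.y;
        const float dz = t.z - c.position.z;
        const float dist = std::sqrt(dx * dx + dy * dy + dz * dz);
        if (dist > 1e-6f) {
            const float step = std::fmin(speed, dist);  // do not overshoot
            c.position.x += dx / dist * step;
            c.position.y += dy / dist * step;
            c.position.z += dz / dist * step;
        }
    }
    // Jitter applies whether or not a target exists, so idle creatures wander.
    c.position.x += jitter * unit(rng);
    c.position.y += jitter * unit(rng);
    c.position.z += jitter * unit(rng);
    if (target < 0) return false;
    const Vec3& t = painted[static_cast<std::size_t>(target)];
    const float ex = t.x - c.position.x;
    const float ey = t.y - c.position.y;
    const float ez = t.z - c.position.z;
    return std::sqrt(ex * ex + ey * ey + ez * ez) <= eraseRadius;
}
```

With jitter set to zero the creature walks in a straight line to its target; raising it trades efficiency for a more organic, swarm-like path.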

More screenshots

Even more in-progress screenshots