Bow Shock

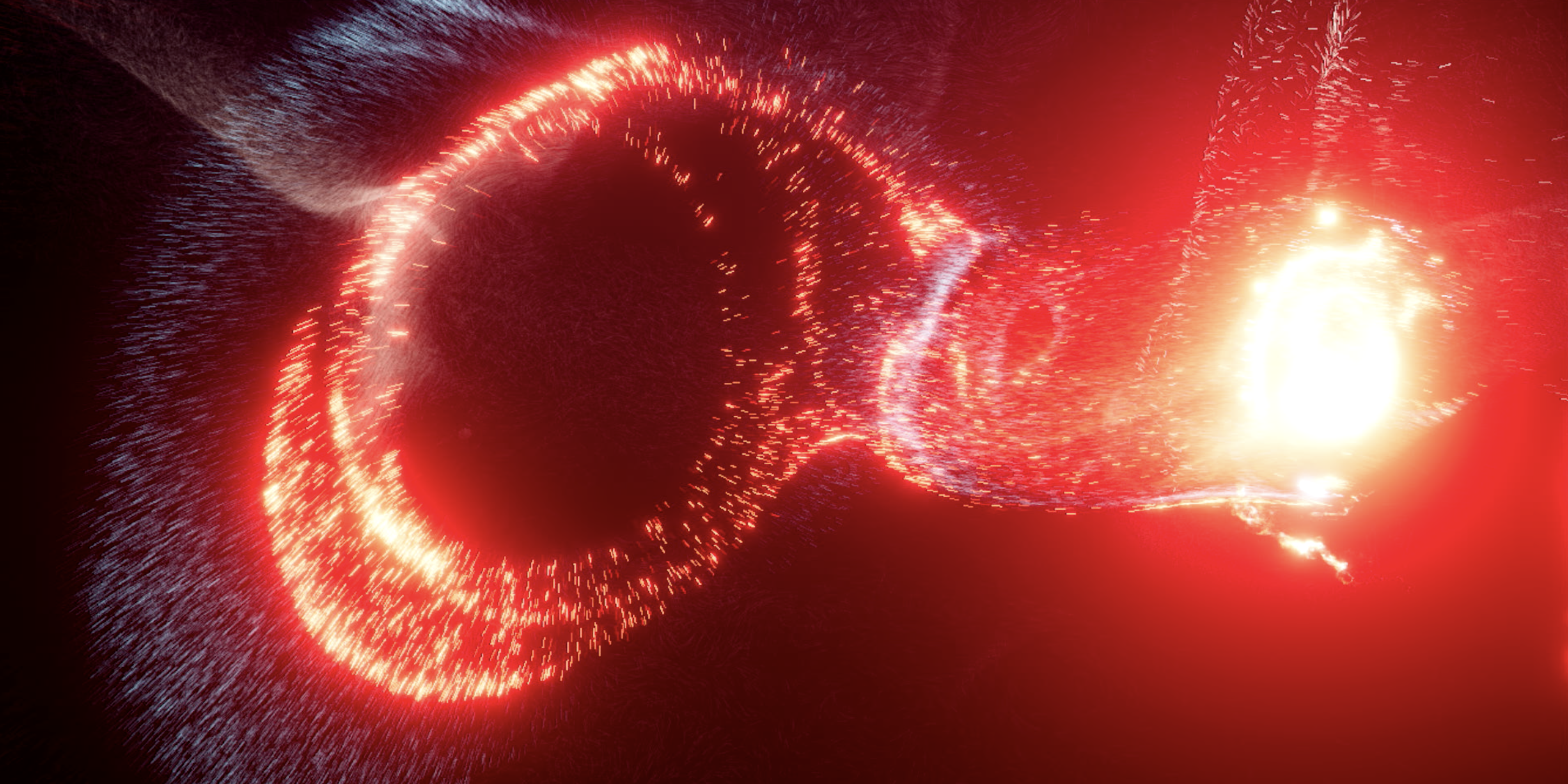

Bow Shock is a musical VR experience where the motion of your hand reveals a hidden song in the action of millions of fiery particles.

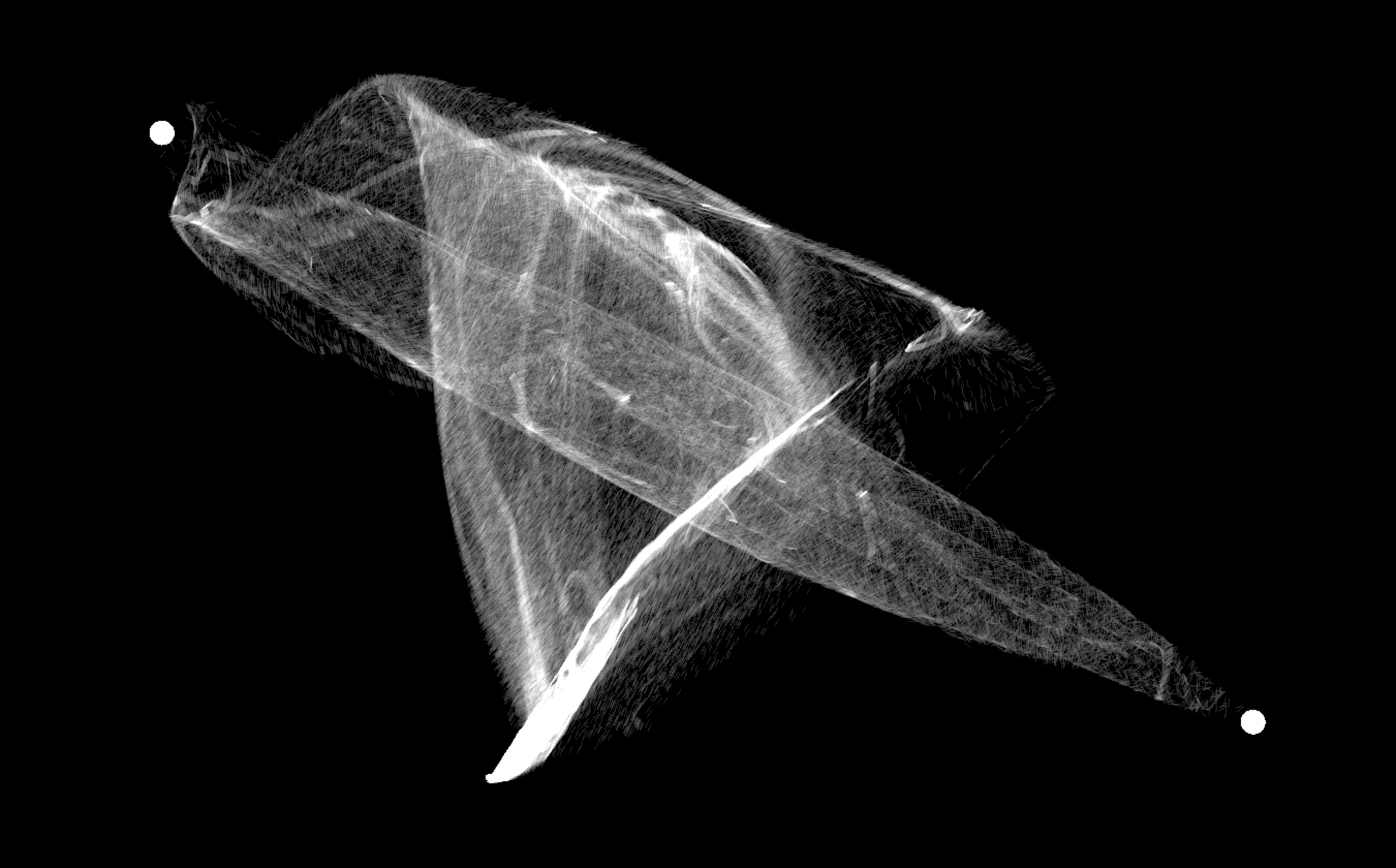

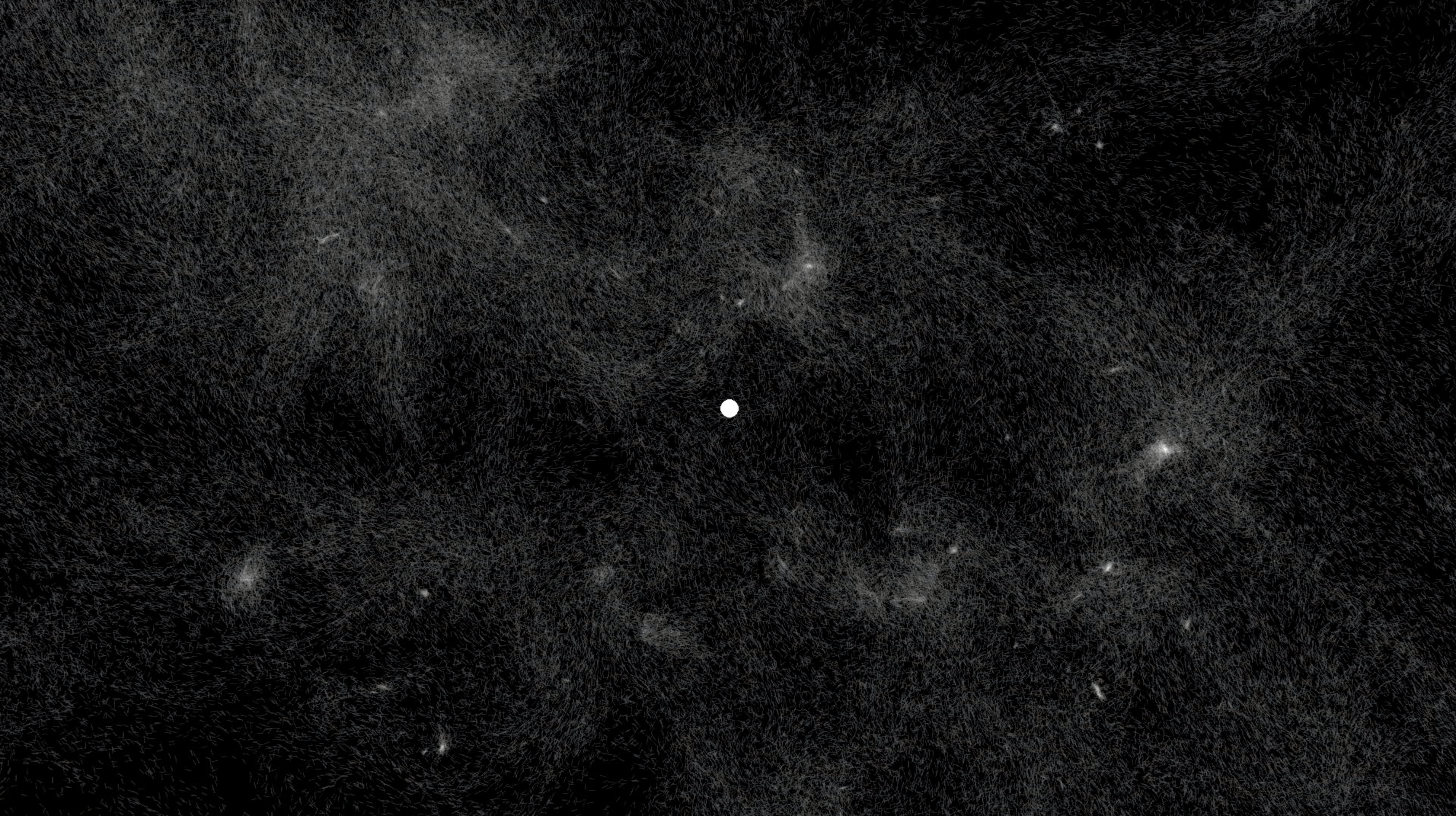

In Bow Shock, your hands control the granulation of a song embedded in space, revealing the invisible music with your movement. You and the environment are composed of fiery particles, flung by your motions and modulated by the frequencies you illuminate, instilling a peculiar agency and identification as a shimmering form.

Bow Shock is a spatially interactive experience of Optia’s new song of the same name. Part song, part instrument, Bow Shock rewards exploration by becoming performable as an audiovisual instrument.

Bow Shock is an experiment in embodying novel materials and in how nonlocal agency is perceived.

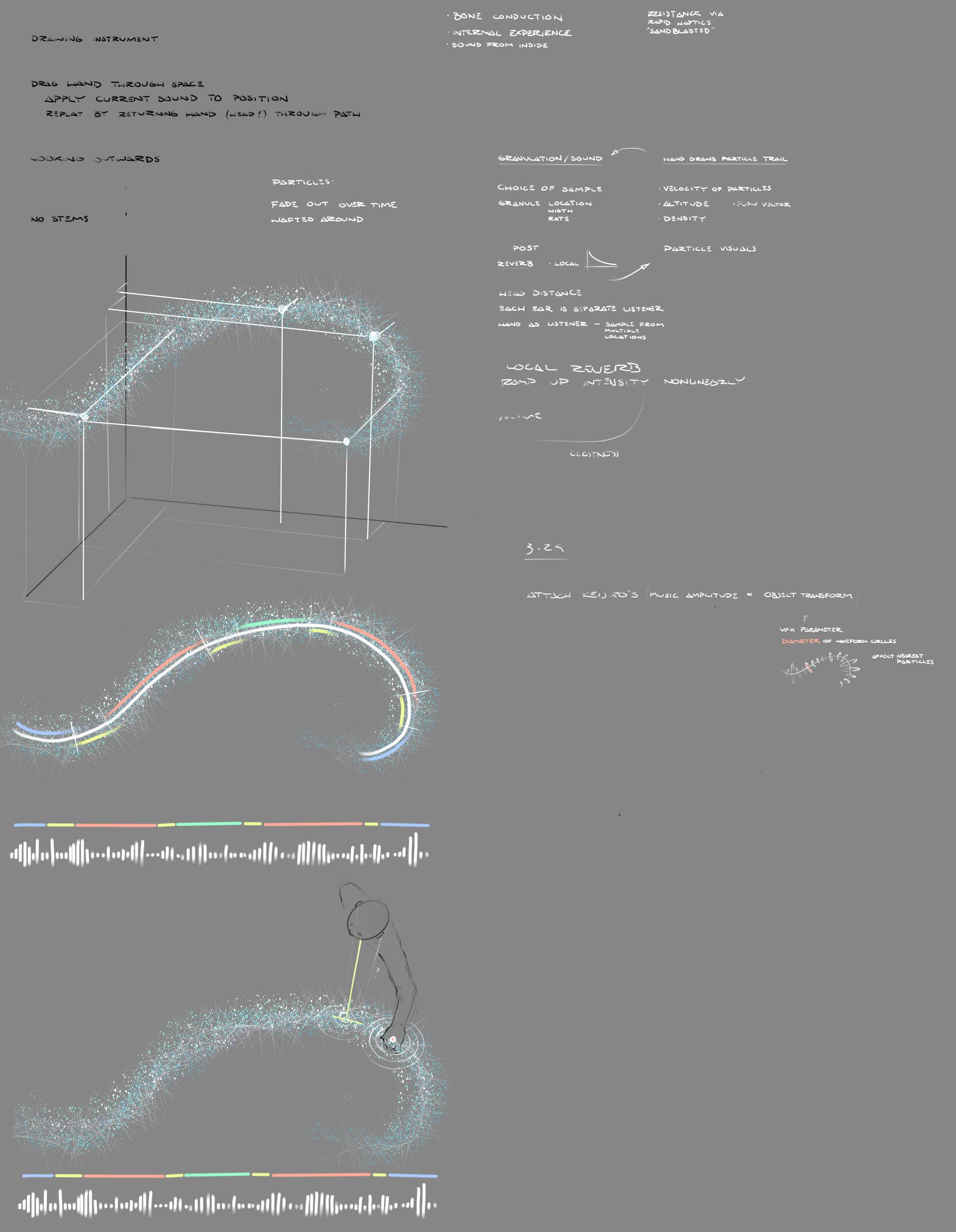

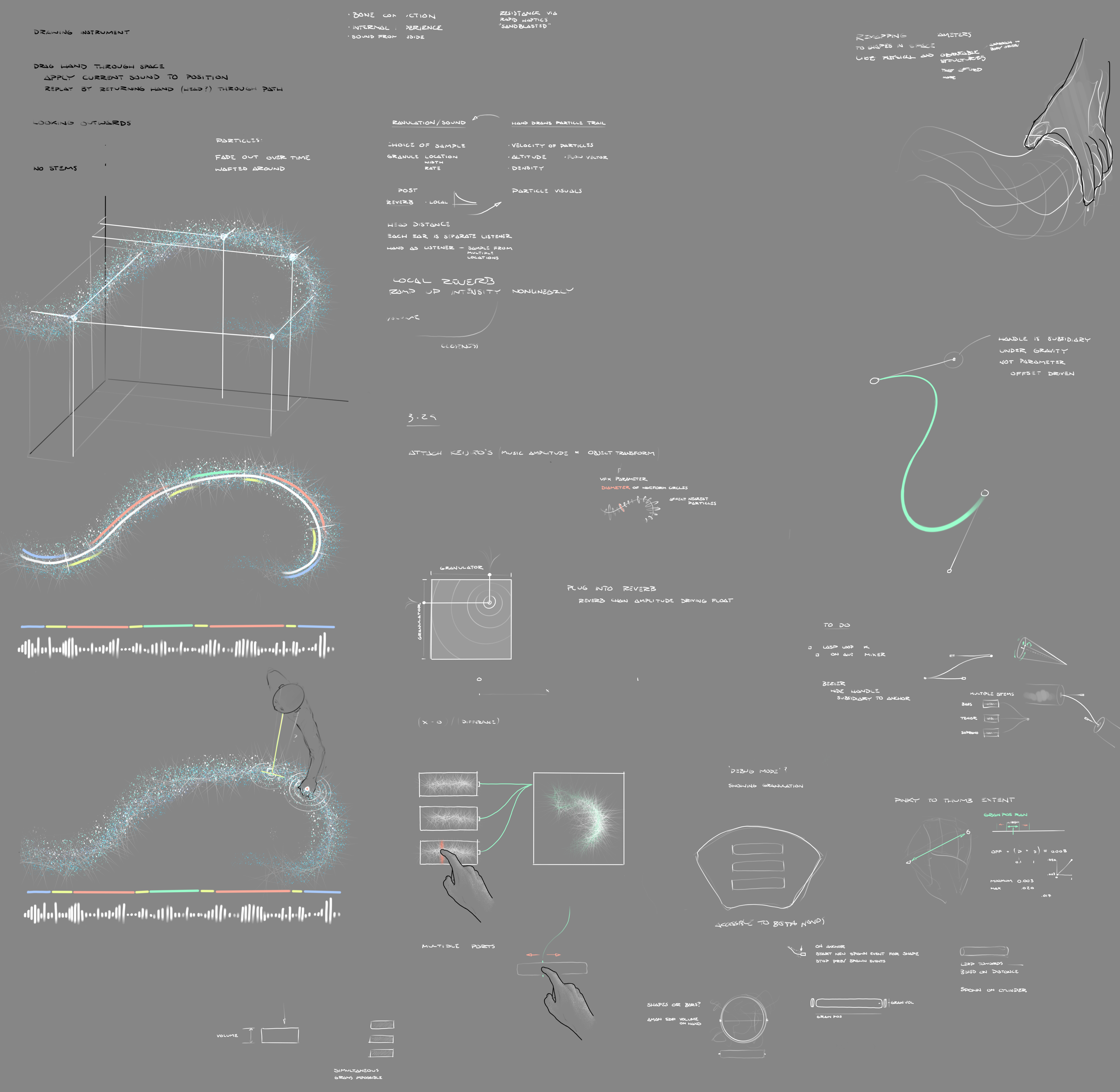

I wanted to create another performable musical VR experience after having made Strata. The idea initially stemmed from a previous assignment for a drawing tool: I wanted to give the user a direct engagement with the creation of spatial, musical structures.

I envisioned a space where the user’s hand would instantiate sound depending on its location and motion, and I knew that granulation was a method that could allow a spatial “addressing” of a position within a sound file.

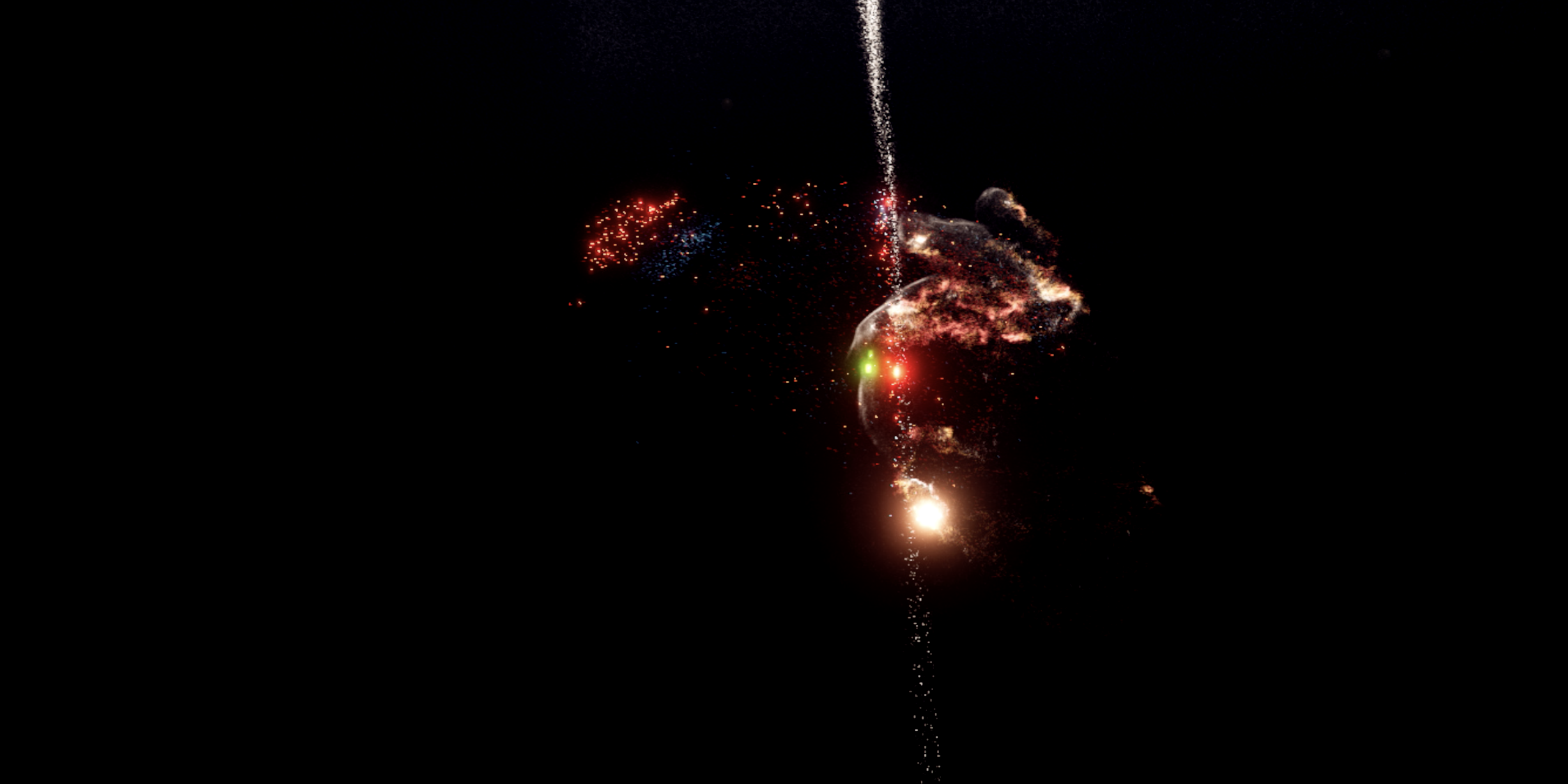

I created an ambient song, Bow Shock, to be the source sound file. I imported the AIFF into Manuel Eisl’s Unity granulation script and wrote a script that took my hand’s percent distance between two points on the x-axis and mapped that to the position within the AIFF that the granulator played at. Thus, when I moved my hand left and right, it was as if my hand was a vinyl record needle “playing” that position in the song. However, the sound was playing constantly, with few dynamics. I wrote a script mapping the distance between my index fingertip and thumbtip to the volume of the granulator, such that pinching completely pinched the sound off. This immediately provided much nuance in being able to “perform” the “instrument” of the song and allow choice of when to have sound and with what harmonic qualities.
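As a rough illustration of the two mappings described above, here is a minimal sketch in Python rather than in the project's Unity scripts. The function names, the bounds, and the 8 cm "fully open" pinch distance are assumptions made for the example, not values from the project.

```python
def inverse_lerp(a: float, b: float, value: float) -> float:
    """Return how far `value` lies between `a` and `b`, clamped to [0, 1]."""
    if a == b:
        return 0.0
    t = (value - a) / (b - a)
    return max(0.0, min(1.0, t))


def playhead_seconds(hand_x: float, left_x: float, right_x: float,
                     clip_seconds: float) -> float:
    """Map the hand's x-position between two bounds to a position in the clip."""
    return inverse_lerp(left_x, right_x, hand_x) * clip_seconds


def pinch_volume(pinch_distance: float, open_distance: float = 0.08) -> float:
    """Map the thumb-to-index distance (metres) to a volume in [0, 1].

    `open_distance` is a hypothetical distance at which volume reaches 1;
    a full pinch (distance 0) silences the granulator.
    """
    return inverse_lerp(0.0, open_distance, pinch_distance)


if __name__ == "__main__":
    # Hand halfway between the bounds of a 4-minute clip.
    print(playhead_seconds(0.0, -0.5, 0.5, 240.0))
    # Fingers half open.
    print(pinch_volume(0.04))
```

Clamping both mappings keeps the playhead inside the file and the volume inside its valid range when the hand leaves the tracked bounds.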

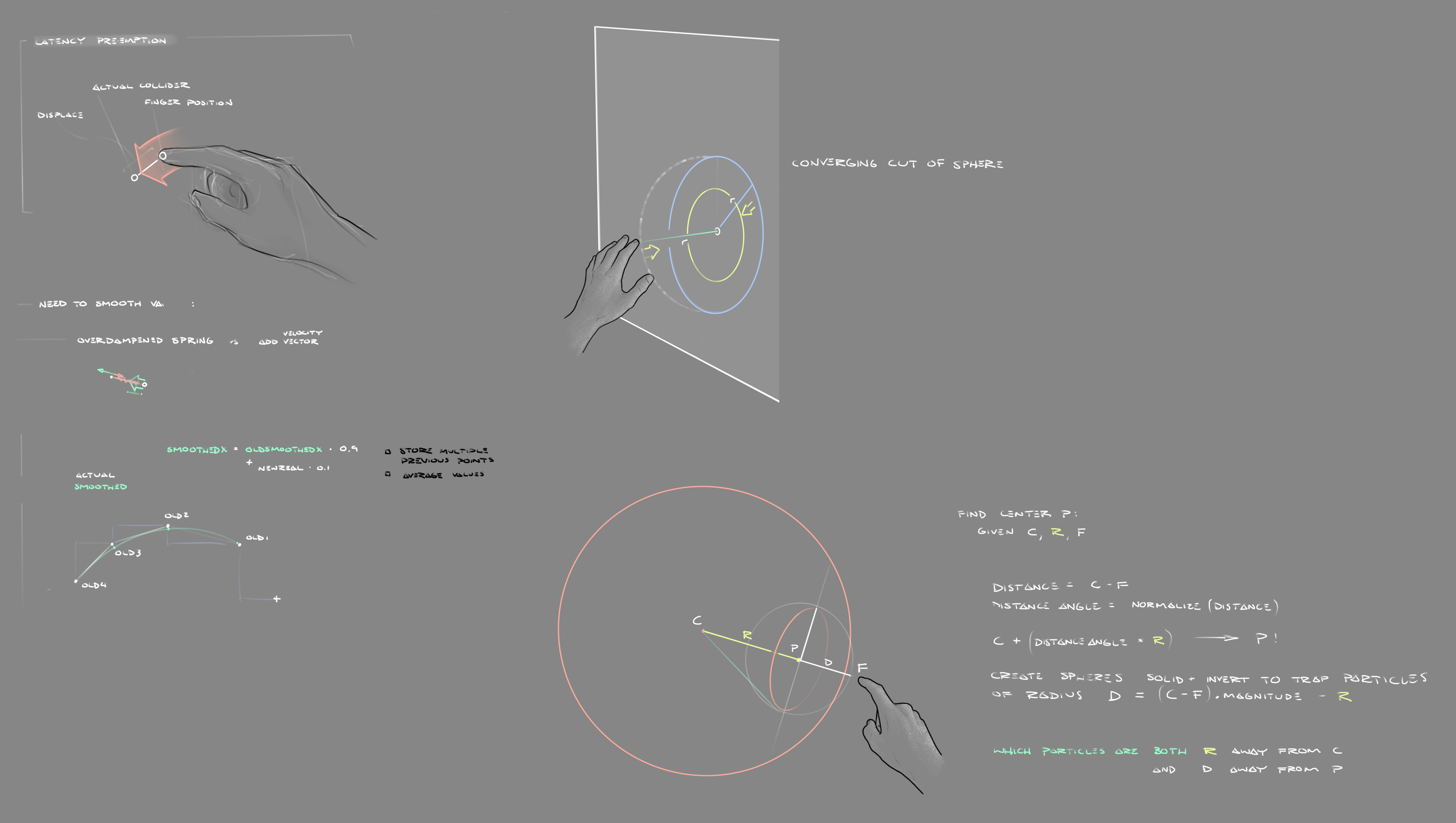

Strata was made using cloth physics. For Bow Shock I wanted to move away from polygonal structures, and my work with particles in Unity’s VFX Graph made them a great candidate. Much of the work centered around the representation of the hand in space. Rather than using the Leap-Motion-tracked hand as a physical collider, I used Aman Tiwari’s MeshToSDF Converter to have my hand be a field influencing the motion of the particles.

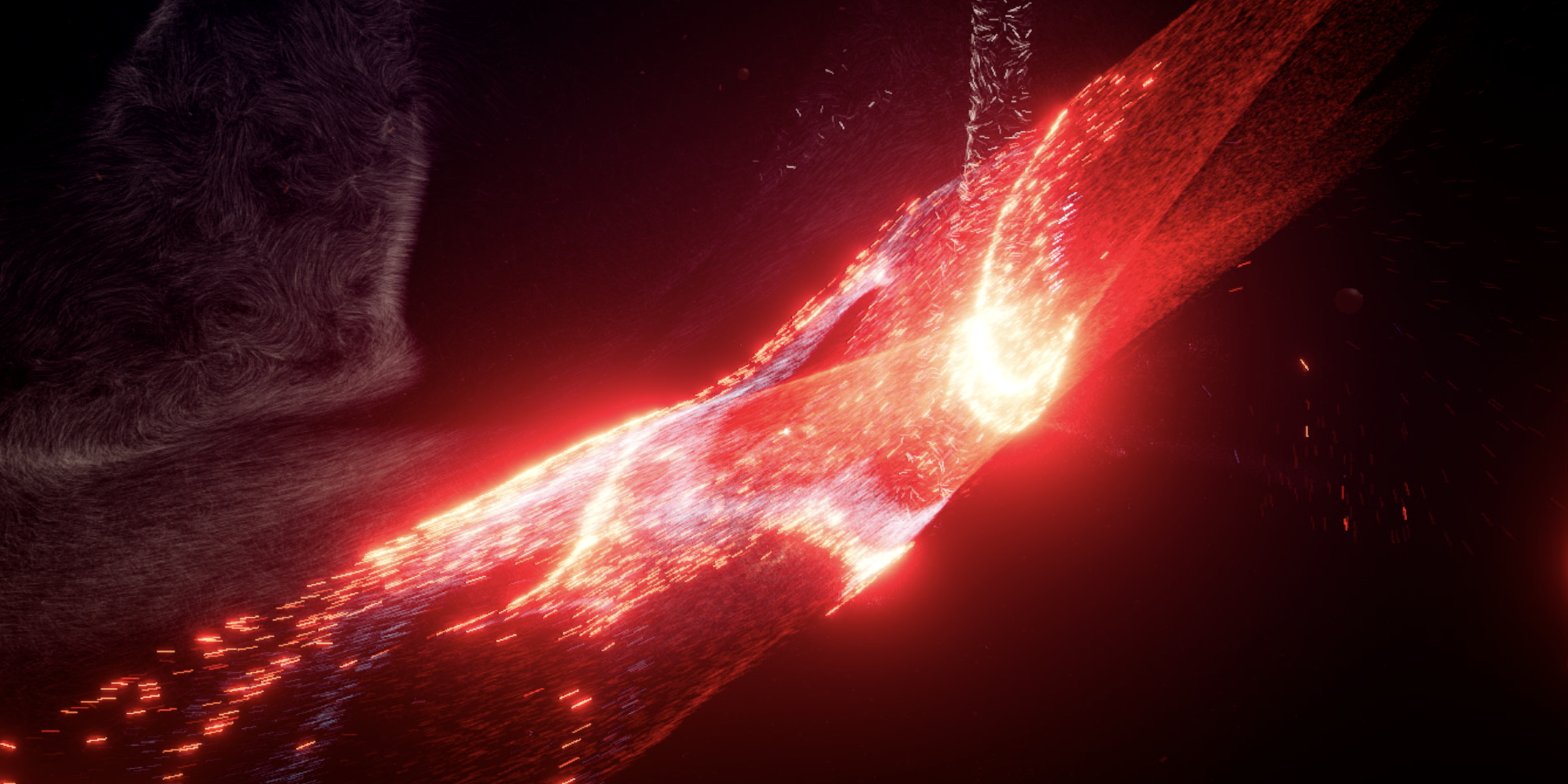

I have two particle systems associated with the hand. The soft white particle system is constantly attempting to conform to the hand SDF, and I have the parameters set such that the particles can easily be flung off and take time to be dragged out of their orbit back onto the surface of my hand. The other, more chaotic particle system uses the hand SDF as boolean confinement, so its particles are trapped inside the hand’s spatial volume. Earlier in the particle physics stack, I apply an intense and dragful turbulence to the particles, such that floating-point imprecision at those forces glitches the particle motions in a hovering way that mirrors the granulated sound. These structures are slight and occupy a fraction of the space, such that when confined to within the hand only, they predominantly fail to visually suggest the shape of the hand. However, in combination, the flowy, flung particles plus the erratic, confined particles reinforce each other and mesh sufficiently with proprioception to be quite a robust representation of the hand that doesn’t occlude the environmental effects.
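To make the two uses of a signed distance field concrete, here is a small sketch in Python. It is not VFX Graph's implementation: a sphere stands in for the hand SDF, and `conform_force`, `confine`, and the `strength` parameter are hypothetical names chosen for the example. The first function pulls a particle toward the surface, and the second keeps a particle inside the volume.

```python
import math


def sphere_sdf(p, center, radius):
    """Signed distance to a sphere: negative inside, zero on the surface."""
    return math.dist(p, center) - radius


def sdf_gradient(sdf, p, eps=1e-4):
    """Approximate the SDF gradient (the outward direction) by central differences."""
    grad = []
    for axis in range(3):
        hi, lo = list(p), list(p)
        hi[axis] += eps
        lo[axis] -= eps
        grad.append((sdf(hi) - sdf(lo)) / (2.0 * eps))
    return tuple(grad)


def conform_force(sdf, p, strength):
    """Force pulling a particle toward the surface, from either side."""
    d = sdf(p)
    g = sdf_gradient(sdf, p)
    return tuple(-strength * d * gi for gi in g)


def confine(sdf, p):
    """Boolean confinement: project a particle that has escaped back to the surface."""
    d = sdf(p)
    if d <= 0.0:
        return tuple(p)  # already inside, leave untouched
    g = sdf_gradient(sdf, p)
    return tuple(pi - d * gi for pi, gi in zip(p, g))


if __name__ == "__main__":
    hand = lambda p: sphere_sdf(p, (0.0, 0.0, 0.0), 0.1)  # stand-in for the hand SDF
    print(conform_force(hand, (0.3, 0.0, 0.0), strength=10.0))
    print(confine(hand, (0.3, 0.0, 0.0)))
```

A weak `strength` relative to the particles' momentum would give the behaviour described above, where particles are easily flung off and only slowly drift back onto the surface.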

What initially was a plan to have the user draw out the shape of the particle instantiation/emission in space evolved into a static spawning volume whose width matches the x-extent of the granulated song. In the VFX Graph I worked to have the particles inherit the velocity of the finger, which I accomplished by lerping their velocities to my fingertip’s velocity along an exponential curve mapped to their distance from my fingertip, such that only the nearest particles inherited the fingertip velocity, with a sharp falloff to zero beyond a radius of ~5 cm around my fingertip.
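The velocity inheritance can be sketched outside of VFX Graph as follows. This is an illustration in Python under stated assumptions: the exact curve used in the project is not given, so `falloff_weight` uses one plausible exponential shape, normalised to reach exactly zero at the radius, and the `sharpness` parameter is hypothetical.

```python
import math


def falloff_weight(distance: float, radius: float = 0.05,
                   sharpness: float = 4.0) -> float:
    """Exponential falloff: 1 at the fingertip, 0 at and beyond `radius` (metres)."""
    t = min(max(distance / radius, 0.0), 1.0)
    floor = math.exp(-sharpness)
    return (math.exp(-sharpness * t) - floor) / (1.0 - floor)


def lerp_vec(a, b, t):
    """Component-wise linear interpolation from `a` to `b`."""
    return tuple(ai + (bi - ai) * t for ai, bi in zip(a, b))


def inherit_velocity(particle_pos, particle_vel, tip_pos, tip_vel,
                     radius: float = 0.05, sharpness: float = 4.0):
    """Blend a particle's velocity toward the fingertip's, weighted by proximity."""
    weight = falloff_weight(math.dist(particle_pos, tip_pos), radius, sharpness)
    return lerp_vec(particle_vel, tip_vel, weight)


if __name__ == "__main__":
    tip_pos, tip_vel = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)
    # A particle 1 cm away picks up some of the fingertip's motion.
    print(inherit_velocity((0.01, 0.0, 0.0), (0.0, 0.0, 0.0), tip_pos, tip_vel))
    # A particle 10 cm away keeps its own velocity.
    print(inherit_velocity((0.10, 0.0, 0.0), (0.0, 2.0, 0.0), tip_pos, tip_vel))
```

Raising `sharpness` concentrates the influence closer to the fingertip, so fewer particles are dragged along by a flick.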

The particles initially had infinite lifetime and no drag on their velocity, so after being flicked away from their spawning position they often quickly ended up out of reach. I added subtle drag and gave the particles a random lifetime between 30 and 60 seconds, such that the flung filamentary particle sheets remain in the user-created shape for a while before randomly dying off and respawning in the spawn volume. The temporal continuity of the spawn volume provides a consistent visual structure correlated with the granulation bounds.
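A CPU-side sketch of that lifecycle, written in Python for illustration only. In the project this logic lives in VFX Graph on the GPU; the `Particle` class, the linear drag model, and the box-shaped spawn volume are assumptions made for the example. Only the 30 to 60 second lifetime range comes from the text above.

```python
import random
from dataclasses import dataclass


@dataclass
class Particle:
    position: tuple
    velocity: tuple
    age: float
    lifetime: float


def spawn(rng, box_min, box_max):
    """Create a resting particle at a random point in the spawn volume."""
    position = tuple(rng.uniform(lo, hi) for lo, hi in zip(box_min, box_max))
    return Particle(position, (0.0, 0.0, 0.0), 0.0, rng.uniform(30.0, 60.0))


def step(p, dt, drag, rng, box_min, box_max):
    """Advance one particle by `dt` seconds, respawning it when its lifetime ends."""
    age = p.age + dt
    if age >= p.lifetime:
        return spawn(rng, box_min, box_max)
    damping = max(0.0, 1.0 - drag * dt)  # simple linear drag
    velocity = tuple(v * damping for v in p.velocity)
    position = tuple(x + v * dt for x, v in zip(p.position, velocity))
    return Particle(position, velocity, age, p.lifetime)


if __name__ == "__main__":
    rng = random.Random(0)
    box_min, box_max = (-0.5, 0.0, 0.0), (0.5, 0.2, 0.2)
    p = spawn(rng, box_min, box_max)
    p.velocity = (1.0, 0.0, 0.0)  # a flick along x
    for _ in range(100):
        p = step(p, 0.1, 0.5, rng, box_min, box_max)
    print(p.position, p.velocity)
```

With drag in place, a flicked particle coasts to a stop within reach instead of leaving the scene, and the randomised lifetimes mean a flung sheet thins out gradually rather than vanishing all at once.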

Critically, I wanted the audio experience to not be exclusively sonic. I wanted some way for the audio to affect the visuals, so I used Keijiro’s LASP Loopback Unity plugin to extract separate amplitudes for the low, medium, and high chunks of the realtime frequency range, and mapped their values to parameters available in the VFX Graph. From there, I mapped the frequency chunk amplitudes to particle length, the turbulence on the particles, and the secondary, smaller turbulence on my hand’s particles. Thus, at different parts of the song, for example, predominating low frequencies cause the environmental particles to additively superimpose more, whereas in areas of space where high frequencies dominate, the particle turbulence is increased.
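The idea of splitting audio into three band amplitudes and driving visual parameters from them can be sketched as below. This is not how LASP works internally: a naive DFT stands in for the plugin, and the cutoff frequencies, the parameter names, the output ranges, and the choice of which band drives which parameter are all assumptions made for the example.

```python
import cmath
import math


def band_amplitudes(samples, sample_rate, low_cut=250.0, high_cut=4000.0):
    """Return one amplitude per band (low, mid, high) using a naive DFT."""
    n = len(samples)
    energy = {"low": 0.0, "mid": 0.0, "high": 0.0}
    for k in range(1, n // 2):  # skip the DC bin
        freq = k * sample_rate / n
        coeff = sum(s * cmath.exp(-2j * math.pi * k * i / n)
                    for i, s in enumerate(samples))
        magnitude = 2.0 * abs(coeff) / n
        band = "low" if freq < low_cut else "mid" if freq < high_cut else "high"
        energy[band] += magnitude ** 2
    return {band: math.sqrt(total) for band, total in energy.items()}


def remap(value, in_max, out_min, out_max):
    """Scale `value` from [0, in_max] to [out_min, out_max], clamped."""
    t = max(0.0, min(1.0, value / in_max))
    return out_min + (out_max - out_min) * t


def vfx_parameters(bands):
    """Hypothetical mapping from band amplitudes to exposed particle parameters."""
    return {
        "particle_length": remap(bands["low"], 1.0, 0.01, 0.2),
        "hand_turbulence": remap(bands["mid"], 1.0, 0.0, 1.0),
        "environment_turbulence": remap(bands["high"], 1.0, 0.0, 5.0),
    }


if __name__ == "__main__":
    sample_rate = 16000
    # A pure 100 Hz tone: nearly all of its energy lands in the low band.
    tone = [math.sin(2 * math.pi * 100 * i / sample_rate) for i in range(320)]
    print(vfx_parameters(band_amplitudes(tone, sample_rate)))
```

In a realtime setting the band amplitudes would be recomputed on a short sliding window each frame, so the particle parameters follow the song as it is granulated.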

My aspiration for this effect is that the particle behaviors, driven by audio, visually and qualitatively communicate aspects of the song, as if seeing some hyper-dimensional spectrogram of sorts, where the motion of your hands through space draws out or illuminates spots within the spectrogram. Memory of a certain sound being attached to the proprioceptive sensation of the hand’s location at that moment, in combination with the visual structure at that location being correlated with the past (or present) sound associated with that location, would reinforce the unity of the sound and the structure, making the system more coherent and useful as a performance tool.

To make it more apparent that movement along the x-axis controls the audio state, I multiply up the color of the particles sharing the same x-position as the hand, such that a slice of brighter particles tracks the hand. This not only shows visually that something special is associated with x-movement, but also provides a secondary or tertiary visual curiosity, allowing a sort of CAT-scan-like appraisal of the 3D structures produced.
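The highlighted slice amounts to a per-particle color multiplier that depends only on the x-distance to the hand. A minimal sketch in Python, where the slice half-width and the boost factor are assumed values chosen for the example:

```python
def slice_gain(particle_x: float, hand_x: float,
               half_width: float = 0.01, boost: float = 4.0) -> float:
    """Return `boost` for particles within the slice around hand_x, else 1.0."""
    return boost if abs(particle_x - hand_x) <= half_width else 1.0


def highlight(color, particle_x: float, hand_x: float,
              half_width: float = 0.01, boost: float = 4.0):
    """Multiply an RGB color by the slice gain for this particle."""
    gain = slice_gain(particle_x, hand_x, half_width, boost)
    return tuple(channel * gain for channel in color)


if __name__ == "__main__":
    hand_x = 0.1
    print(highlight((0.5, 0.25, 0.125), 0.1, hand_x))  # inside the slice
    print(highlight((0.5, 0.25, 0.125), 0.3, hand_x))  # outside the slice
```

Because y and z are ignored, the bright region is a full plane perpendicular to the x-axis, which is what produces the scan-like sweep through the particle structures.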

Ideally this experience would be a bimanual affair, but I ran into difficulties on multiple fronts. Firstly, if each hand granulates simultaneously, the result is likely to be too sonically muddy. Secondly, the math required to use two points (like the fingertips of both hands simultaneously) to influence the velocity of the particles, each in their own localities, proved to be insurmountable in the timeframe. This is likely a solvable problem. What is less solvable, however, is my desire to have two hand SDFs simultaneously affect the same particle system. This might be an insurmountable limitation of VFX Graph’s ‘Conform To SDF’ operation.

Functional prototypes were built to send haptic moments from Unity over OSC to an Apple Watch’s Taptic Engine. These interactions were eventually shifted to an alternate project. Further, functional prototypes of a bezier-curve-based wire-and-node system made out of particles were built for future functionality, but were not incorporated into the delivered project.

future directions:

- multiple hands

- multiple songs (modulated by aforementioned bezier-wire node system)

- multiple stems of each song

- different particle effects per song section

- distinct particle movements and persistences based on audio

- a beginning and an end

- granulation volume surge only when close to particles (requires future VFX Graph features for CPU to access live GPU particle locations)

Special thanks to

Aman Tiwari for creating MeshToSDF Converter

Manuel Eisl for creating a Unity granulator

Keijiro Takahashi for creating the Low-latency Audio Signal Processing (LASP) plugin for Unity

points along the process

Making my hand a nebula + granular synth! ✨

All #VisualEffectGraph + @LeapMotion + MeshToSDF

Music+interactions for my incoming @optia_ release

Wild how the particles still feel like part of my body even though they diverge so much from my base anatomy pic.twitter.com/mfvbRvGIwL

— Gray Crawford (@graycrawford) April 25, 2019

I'm continually astounded by Unity's #VisualEffectGraph

This feels like legit magic 💫 pic.twitter.com/zI3TUy4Q63

— Gray Crawford (@graycrawford) April 18, 2019

Currently diving into

guiding #VisualEffectGraph particles

with my @LeapMotion hands

using @aman_gif's MeshToSDF converter

Already seeing *so many* places where this can go; stoked to explore further pic.twitter.com/QtOvKbEcOv

— Gray Crawford (@graycrawford) March 12, 2019