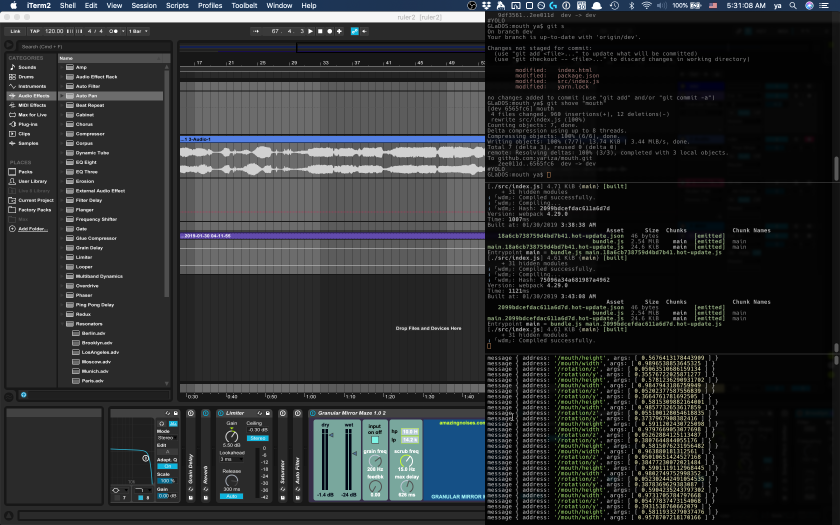

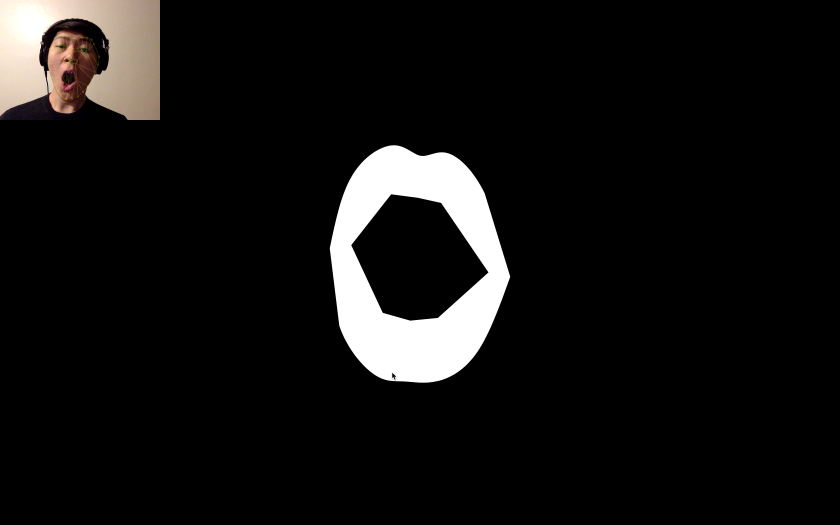

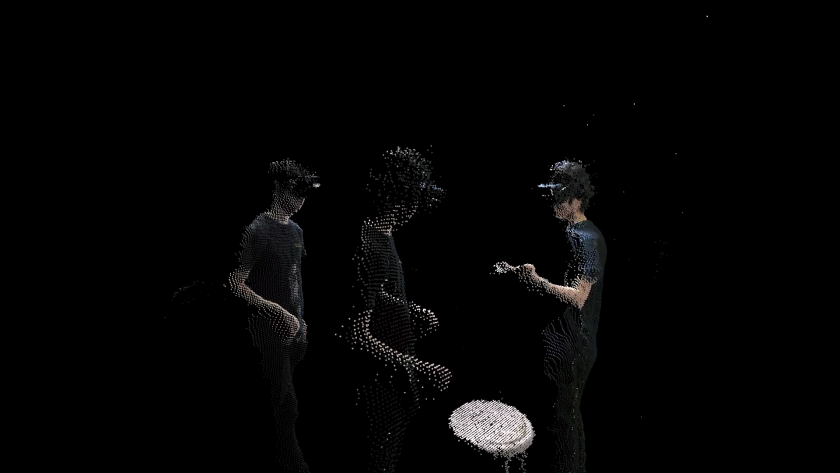

looper is an immersive depth-video delay that lets you see yourself from an out-of-body, third-person view.

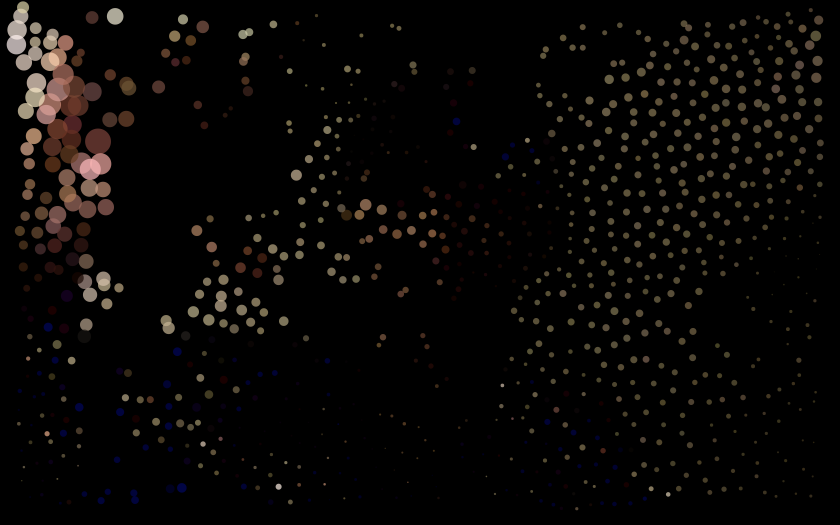

Using a Kinect 2 and an Oculus Rift headset, participants see themselves as a point cloud in virtual reality. Delayed copies of the depth video are then overlaid, so participants can see and hear themselves from the past, from a third-person point of view. Each delayed copy is drawn with a quarter as many points as the previous one, so the past selves disintegrate after several minutes.
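The quarter-per-copy rule can be sketched as a geometric point budget, N_k = N_0 / 4^k. This is a minimal illustration, assuming the live view uses a full 512 x 424 Kinect 2 depth frame and that points are dropped by simple strided subsampling; the project may choose which points to keep differently (for example, at random). `points_for_copy` and `decimate` are hypothetical names.

```python
import numpy as np

# Assumption: the live copy uses every pixel of a Kinect 2 depth frame.
KINECT2_POINTS = 512 * 424  # 217,088 points

def points_for_copy(k: int, base: int = KINECT2_POINTS) -> int:
    """Point budget for delayed copy k (k = 0 is the live view): base / 4**k, rounded down."""
    return base // (4 ** k)

def decimate(points: np.ndarray, k: int) -> np.ndarray:
    """Keep every (4**k)-th row of an (N, 3) point array (simple stride subsampling)."""
    return points[:: 4 ** k]
```

Under these assumptions, `points_for_copy` gives 217,088, 54,272, 13,568, 3,392 and 848 points for k = 0 to 4, which is why only a handful of copies remain visibly recognizable.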

I started by experimenting with re-projecting color and depth data from a Kinect sensor as a point cloud, so that I could see my own body in virtual reality. I repurposed an excellent tool by Roel Kok to convert the video data into a point-cloud-ready format.
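Re-projecting a depth image into a point cloud is usually done with the pinhole camera model, X = (u - cx) Z / fx and Y = (v - cy) Z / fy. The sketch below assumes depth in millimetres and uses placeholder intrinsics `fx, fy, cx, cy`; it is not how Roel Kok's tool works internally (which isn't described here), and in practice the Kinect for Windows SDK 2.0 `CoordinateMapper` supplies calibrated mappings. `depth_to_points` is a hypothetical name.

```python
import numpy as np

def depth_to_points(depth_mm: np.ndarray, fx: float, fy: float,
                    cx: float, cy: float) -> np.ndarray:
    """Back-project an (H, W) depth image in millimetres to (N, 3) points in metres.

    Pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy.
    Pixels with zero depth (no reading) are dropped.
    """
    h, w = depth_mm.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # u: column index, v: row index
    z = depth_mm.astype(np.float32) / 1000.0
    valid = z > 0
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.stack([x[valid], y[valid], z[valid]], axis=-1)
```

Depending on the engine's axis conventions, the Y axis may need to be negated before rendering.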

mapping kinect point cloud to myself pic.twitter.com/aPRPEMnMKt

— Yujin Ariza (@YujinAriza) April 19, 2019

While it was compelling to see myself in VR, I couldn’t see myself from a third-person point of view. So I made a virtual mirror.

mirror world

haha pic.twitter.com/syAnw5dstI

— Yujin Ariza (@YujinAriza) April 19, 2019

The virtual mirror was interesting because, unlike a real mirror, it let me cross the boundary into the mirror dimension. Once there, I would see myself on the other side, flipped, because the Kinect point cloud captures only a half-shell of the body.
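One simple way to build a mirror like this is to reflect every point across a plane, p' = p - 2((p - p0) · n) n, and render the reflected copy next to the original; checking which side of the plane the headset is on tells you when the viewer has crossed into the mirror. This is an illustrative sketch, assuming a flat mirror plane given by a point `p0` and normal `n`; the project's mirror may have been implemented differently (for example, with a reflected camera). `reflect` and `mirror_side` are hypothetical names.

```python
import numpy as np

def reflect(points: np.ndarray, plane_point, plane_normal) -> np.ndarray:
    """Mirror an (N, 3) point cloud across the plane through plane_point with normal plane_normal."""
    p0 = np.asarray(plane_point, dtype=float)
    n = np.asarray(plane_normal, dtype=float)
    n = n / np.linalg.norm(n)
    d = (points - p0) @ n                 # signed distance of each point to the plane
    return points - 2.0 * d[:, None] * n  # p' = p - 2 ((p - p0) . n) n

def mirror_side(position, plane_point, plane_normal) -> int:
    """+1 on the side the normal points to, -1 on the other side, 0 exactly on the plane."""
    offset = np.asarray(position, dtype=float) - np.asarray(plane_point, dtype=float)
    return int(np.sign(offset @ np.asarray(plane_normal, dtype=float)))
```

Because the reflection is a pure function of the live cloud, every movement is mirrored instantly, which is the limitation the next paragraph describes.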

However, the mirror was limiting in the same way as a regular mirror: every action I made was duplicated on the mirror side, which restricted the perspectives I could have on myself.

I then started experimenting with video delay.

1min loop feedback pic.twitter.com/246n0RPvvs

— Yujin Ariza (@YujinAriza) April 29, 2019

I immediately discovered that, in the VR headset, viewing yourself from a third-person point of view was striking. The one-minute delay removed the past self far enough from the current self that it felt like another, separate presence in the space.
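A video delay like this can be sketched as a delay line: push each live frame into a fixed-length buffer and read back frames from k delay periods ago to draw the k-th past self. This is a minimal sketch, assuming frames arrive at the Kinect 2's 30 fps and that the stacked past selves are read from the same buffer at multiples of the delay; the project's actual buffering scheme is not described here. `FrameDelay` is a hypothetical name.

```python
from collections import deque

class FrameDelay:
    """Delay line that keeps just enough history to show `copies` past selves."""

    def __init__(self, delay_frames: int, copies: int):
        self.delay_frames = delay_frames
        self.copies = copies
        # Oldest frame needed is copies * delay_frames steps back, plus the live frame.
        self.frames = deque(maxlen=delay_frames * copies + 1)

    def push(self, frame) -> None:
        """Append the newest frame; the oldest one is dropped once the buffer is full."""
        self.frames.append(frame)

    def delayed(self, k: int):
        """Return the frame from k * delay_frames steps ago, or None if not recorded yet."""
        if not 0 <= k <= self.copies:
            raise ValueError("k must be between 0 and copies")
        idx = len(self.frames) - 1 - k * self.delay_frames
        return self.frames[idx] if idx >= 0 else None

# At 30 fps, a one-minute delay is 60 * 30 = 1800 frames per copy.
delay = FrameDelay(delay_frames=60 * 30, copies=4)
```

Each render tick would call `push` with the newest depth frame and draw `delayed(k)` for k = 1 to `copies`. A `deque` keeps the sketch short; a preallocated ring buffer would avoid the linear-time indexing into the middle of a long deque.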

1st person view pic.twitter.com/FxsFKn52HT

— Yujin Ariza (@YujinAriza) April 29, 2019

I also experimented with shorter delay times. These produced more dance-like, echoed choreography, which was compelling on video but, I felt, did not work as well in the headset.

video looper pic.twitter.com/sj6A2yqAFd

— Yujin Ariza (@YujinAriza) April 28, 2019

I then added sound captured from the headset’s microphone. Each recording is spatialized at the position the headset was in when it was captured, so participants hear their past selves from where they were.
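The idea of anchoring each recorded sound to the headset position it was captured at can be illustrated with a toy spatializer: inverse-distance attenuation plus equal-power stereo panning relative to the listener. This is a simplified sketch, assuming each audio chunk is stored with the headset position at capture time; the headset's real spatializer almost certainly does more (HRTFs, head orientation, room effects). `stereo_gains` and its parameters are hypothetical.

```python
import math

def stereo_gains(source, listener, listener_right, ref_distance=1.0):
    """Left/right gains for a sound recorded at `source`, heard at `listener`.

    Inverse-distance attenuation (clamped to 1 inside ref_distance) plus an
    equal-power pan based on how far the source lies to the listener's right.
    `listener_right` must be a unit vector.
    """
    offset = [s - l for s, l in zip(source, listener)]
    dist = math.hypot(*offset)
    gain = min(1.0, ref_distance / max(dist, 1e-6))
    # pan in [-1, 1]: -1 fully left, +1 fully right, 0 straight ahead/behind
    pan = sum(o * r for o, r in zip(offset, listener_right)) / dist if dist > 0 else 0.0
    angle = (pan + 1.0) * math.pi / 4.0  # 0 -> left only, pi/2 -> right only
    return gain * math.cos(angle), gain * math.sin(angle)
```

For example, a past self 2 m directly to the listener's right is heard only in the right channel, at half the gain of a sound within 1 m.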

looper w/ sound pic.twitter.com/6tXgCNuk6J

— Yujin Ariza (@YujinAriza) May 1, 2019

During the class exhibition, I realized that the one-minute delay was too long: participants often did not wait long enough to see themselves from a minute earlier, and often did not recognize their other selves as being from the past. For the final piece, I lowered the delay to 30 seconds.
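The effect of halving the delay can be worked out with some quick arithmetic. This sketch assumes 30 fps capture, a 217,088-point live copy, and the quarter-as-many-points rule from the project description applied with integer division; the function names are hypothetical and the numbers are estimates under those assumptions, not measurements from the piece.

```python
# Assumptions (not from the project code): 30 fps capture, 512 x 424 points
# in the live copy, and each delayed copy keeping base // 4**k points.
FPS = 30
KINECT2_POINTS = 512 * 424

def delay_frames(delay_s: float, fps: int = FPS) -> int:
    """Frames the buffer must hold for one delayed copy."""
    return round(delay_s * fps)

def first_empty_copy(base: int = KINECT2_POINTS) -> int:
    """Smallest k for which base // 4**k reaches zero points."""
    k = 0
    while base // 4 ** k > 0:
        k += 1
    return k

for delay_s in (60, 30):
    k = first_empty_copy()
    print(f"{delay_s} s delay: {delay_frames(delay_s)} frames per copy; "
          f"copy {k} has no points left, {k * delay_s / 60:.1f} min after capture")
```

This prints 1800 frames and 9.0 min for the 60 s delay, and 900 frames and 4.5 min for 30 s: a shorter delay means visitors meet their first past self sooner and the whole chain of past selves fades out in half the time.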

The project is public on GitHub: https://github.com/yariza/looper