Project 1 – Moving

This post is a work in progress… coming Wednesday afternoon:

*revised writeup (below)

*cleaned script downloads

Final Presentation PDF (quasi-Pecha Kucha format):

solbisker_project1_moving

Final Project (“Moving”) :

solbisker_project1_moving_v0point1 – PNG


solbisker_project1_moving_v0point1 – Processing applet – Only works on client, not in browser right now; click through to download source

Concept

“Moving” is a first attempt at exploring the American employment resume as a societal artifact. Countless sites exist to search and view individual resumes based on keywords and skills, letting employers find the right person for the right job. “Moving” is about searching and viewing resumes in the aggregate, in hopes of learning a bit about the individuals who write them.

What It Is:

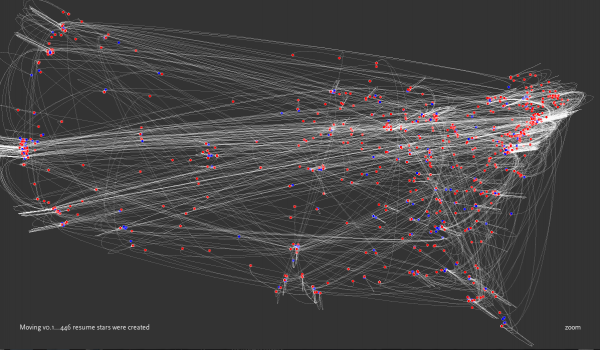

I wrote a script to download over a thousand PDF resumes of individuals from all over the internet (as indexed by Google and Bing). For each resume, I then extracted information about where the person currently lives (through the zipcode in their address) and where they have moved from in the past (through the cities and states of their employers) over the course of their career. I then plotted the resulting locations on a map, with each resume having its own “star graph” (the center node being the person’s present location, the outer nodes being the places where they’ve worked). The resulting visualization gives a picture of how various professionals have moved (or chosen not to move) geographically as they have progressed through their careers and lives.

Background

Over winter break, I began updating my resume for the first time since arriving in Pittsburgh. Looking it over made me think about my entire life in retrospect. In particular, it reminded me of my time spent in other cities, especially my last seven years in Boston (which I sorely miss) – and how the various places I’ve lived, and the memories I associate with each place, have fundamentally shaped me as a person.

How It Works

First, a Python script uses the Google API and Bing API to find and download resumes, using the search query “filetype:pdf resume.pdf” to locate URLs of resumes.

To get around the engines’ result limits, search queries are salted by adding words thought to be common to resumes (“available upon request”, “education”, etc.)
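As a rough illustration of the salting idea (not the actual script), the variants could be generated like this. The function name and the quoting of each salt phrase are assumptions; only the base query and the two example phrases come from the description above.

```python
BASE_QUERY = "filetype:pdf resume.pdf"

# Only these two phrases are named in the post; a real list would be longer.
SALT_TERMS = ["available upon request", "education"]


def salted_queries(base, salts):
    """Return the base query plus one variant per salt phrase.

    Each variant appends the phrase in double quotes so that the engine
    treats it as an exact phrase (an assumption about query syntax).
    """
    queries = [base]
    for term in salts:
        queries.append(f'{base} "{term}"')
    return queries


for query in salted_queries(BASE_QUERY, SALT_TERMS):
    print(query)
```

Each variant returns a different slice of the index, so the union of the result sets can exceed a single query's cap, at the cost of more duplicates to clean up afterwards.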

Then, the Python script “Duplinator” (open-source script by someone besides me) finds and deletes duplicate resumes based on file contents and hash sums.
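For readers curious what hash-based duplicate detection looks like, here is a minimal sketch. It is not Duplinator's code; the function names, the choice of SHA-256, and the "keep the first file in sorted order" rule are all assumptions made for illustration.

```python
import hashlib
from pathlib import Path


def file_digest(path, chunk_size=65536):
    """Hash a file's bytes in chunks so large PDFs are not loaded whole."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def find_duplicates(folder):
    """Return paths of PDFs whose contents match an earlier file.

    Files are visited in sorted name order; the first file with a given
    hash is kept and any later file with the same hash is reported.
    """
    seen = {}
    duplicates = []
    for path in sorted(Path(folder).glob("*.pdf")):
        digest = file_digest(path)
        if digest in seen:
            duplicates.append(path)
        else:
            seen[digest] = path
    return duplicates
```

This only catches byte-identical files. The same resume saved twice with different PDF metadata would hash differently and slip through.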

(At this stage, resume results can also be hand-scrubbed to eliminate false positive “Sample Resumes”, depending on quality of search results).

Now, with the corpus of PDF resumes established, a workflow in AppleScript (built with Apple’s Automator) converts all resumes from PDF format to TXT format.

Another Python script takes these text versions of the resumes and extracts all detected zipcode information and references to cities in the United States, saving the results into a new TXT file for each resume.
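A minimal sketch of this kind of extraction, assuming a regular expression for five-digit zipcodes (with optional ZIP+4) and a plain list of known city names. The function names and the sample text are hypothetical, and the real script may work quite differently.

```python
import re

# Five digits, optionally followed by a ZIP+4 suffix such as 15213-3890.
ZIP_RE = re.compile(r"\b\d{5}(?:-\d{4})?\b")


def extract_zipcodes(text):
    """Return every zipcode-shaped token in the text, in order."""
    return ZIP_RE.findall(text)


def extract_cities(text, known_cities):
    """Return the known cities mentioned in the text (case-insensitive).

    Results follow the order of known_cities, not the order in the text.
    """
    lowered = text.lower()
    found = []
    for city in known_cities:
        pattern = r"\b" + re.escape(city.lower()) + r"\b"
        if re.search(pattern, lowered):
            found.append(city)
    return found


sample = "Jane Doe\n5000 Forbes Ave, Pittsburgh, PA 15213\nAcme Corp, Boston, MA"
print(extract_zipcodes(sample))
print(extract_cities(sample, ["Boston", "Pittsburgh", "Austin"]))
```

A pattern this simple will also match any other five-digit number (a phone extension, a street number), so some noise in the output is to be expected.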

A list of city names to check for is scraped in real time from the zipcode-lat/long-city name mappings provided for user input recognition in Ben Fry’s “Zip Decode” project. The extracted location info for each resume is saved in a unique TXT file for later visualization and analysis.

Finally, Processing is used to read in and plot resume location information.

This information is drawn as a unique star graph for each resume, with the zipcode representing the person’s current address as the center node and the cities representing past addresses as the outer nodes.
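The star-graph structure itself is simple enough to sketch in a few lines. This is written in Python for consistency with the earlier scripts rather than in Processing; the function name and the decision to drop repeated cities are assumptions.

```python
def star_edges(home, work_places):
    """Return (center, outer) pairs for one resume's star graph.

    The home location is always the center. Repeated work places and
    a work place equal to the home location are skipped.
    """
    edges = []
    for place in work_places:
        if place != home and (home, place) not in edges:
            edges.append((home, place))
    return edges


print(star_edges("15213", ["Boston", "Boston", "Austin"]))
```

Because every edge shares the same center, the structure records where someone has been but not the order in which they moved.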

(At the moment, the location plotting code is a seriously hacked version of the code behind Ben Fry’s “zipdecode,” allowing me to recycle his mapping of city latitudes and longitudes to arbitrary screen locations in Processing.)
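For a sense of what mapping latitude and longitude to screen locations involves, here is a simplified sketch using plain linear scaling, similar in spirit to Processing's `map()` function. It is not the zipdecode code, which may use a different projection; the bounding box below is a rough, assumed box around the continental US.

```python
def remap(value, in_min, in_max, out_min, out_max):
    """Linearly rescale value from one range to another."""
    return out_min + (value - in_min) * (out_max - out_min) / (in_max - in_min)


def to_screen(lat, lon, bounds, width, height):
    """Convert a latitude/longitude pair to pixel coordinates.

    bounds is (min_lon, max_lon, min_lat, max_lat). The y axis is
    flipped because screen y grows downward while latitude grows upward.
    """
    min_lon, max_lon, min_lat, max_lat = bounds
    x = remap(lon, min_lon, max_lon, 0, width)
    y = remap(lat, min_lat, max_lat, height, 0)
    return x, y


# Assumed, approximate bounding box for the continental US.
US_BOUNDS = (-125.0, -66.0, 24.0, 50.0)

print(to_screen(40.44, -79.99, US_BOUNDS, 800, 400))  # roughly Pittsburgh
```

Linear scaling distorts shapes at this scale, which is one reason a proper map projection is usually preferred for a national view.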

Self-Critique

The visualization succeeds as a first exploration of the information space, in that it immediately causes people to start asking questions. What is all of that traffic between San Francisco and NY? Why are there so many people moving around single cities in Texas? What happened to Iowa? Do these tracks follow the normal patterns of, say, change of address forms (representing all moves people ever make and report)? Et cetera. It seems clear that resumes are a rich, under-visualized and under-studied data set.

That said, the visualization itself still has issues, from both a clarity standpoint and an aesthetic one. The curves used to connect the points are an improvement over the straight edges I first used, and the opacity of the lines is a good start – but it is clear that much information is lost around highly populated parts of the US. It is unclear if a better graph visualization algorithm is needed or if a simple zoom mechanism would suffice to let people explore the detail in those areas. The red and blue dots that denote work nodes versus home nodes fall on top of each other in many zipcodes, stopping us from really exploring where people only work and don’t like to live, and vice versa.

Finally, many people still look at this visualization and ask “Oh, does this trace the path people take in their careers in order?” It doesn’t, by virtue of my desire to try to highlight the places where people presently live in the graph structure. How can we make that distinction clearer visually? If we can’t, does it make sense to let individual graphs be clickable and highlight-able, to make it more discoverable what a single “graph” looks like? Finally, would it make sense to add a second “mode”, where the graphs for individual resumes are instead connected in the more typical “I moved from A to B and then B to C” manner?

Next Steps

I’m interested in both refining the visualization itself (as critiqued above) and exploring how this visualization might be tweaked to accommodate resume sets other than “all of the American resumes I could find on the internet.” I’d be interested to work with a campus career office or corporate HR office to use this same software to study a selection of resumes that might highlight local geography in an interesting way (such as the city of Pittsburgh, using a corpus of CMU alumni resumes). Interestingly, large privacy questions would arise from such a study, as individual people’s life movements might be recognizable in such visualizations in a form that the person submitting the resume might not have intended.

Hi Solomon – here are the PiratePad notes from the crit.

Really good starting premise for a data-driven investigation. Strong data-investigation methods. Wow, you’re using both Google AND Bing APIs. Lots of different languages in use here (Python, AppleScript, etc.); this kind of tool use is exemplary.

How did you determine that a resume was not a “demo how to write a resume” example — did you have to hand-code/filter the 2000 resumes?

>>Yes, with Coverflow, by hand. It was painful (and frankly, only possible in Coverflow) – it took ~1hr for each 1,000 resumes. Fortunately you only have to do it once.

Some engines gave better results (Bing, almost all real resumes) than others (Google, many many fake resume results.) For real-time searches in the future, I’ll only use Bing and the sample resume issue shouldn’t significantly change results. -Sol

This might be good to run zoomed in on the East Coast, in the tri-state area (NY, NJ, CT). Perhaps the overview of the USA is the least informative view to have.

Also, how about some tools for filtering & querying: people who move to SF, etc.

There’s a Monster API??

>>Now that I poke around, there may not be, and I may have misread something. Either way, I wouldn’t be able to afford to use it without serious grant money or Monster’s cooperation – resume access is $$$. -Sol

Setting the control points for the Bezier curves is an open, hard problem.

— GL
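One common heuristic for the control-point question, offered only as a sketch and not as anything the post or the crit settled on: place a single control point off the midpoint of each segment, perpendicular to it, at a distance proportional to the segment's length. The function names and the `bend` parameter are hypothetical.

```python
def control_point(p0, p1, bend=0.2):
    """Return a control point offset perpendicular to the segment p0-p1.

    The offset length is bend times the segment length, so short hops
    stay nearly straight and long hops arc more. The sign of bend picks
    which side of the segment the curve bulges toward.
    """
    (x0, y0), (x1, y1) = p0, p1
    mid_x, mid_y = (x0 + x1) / 2, (y0 + y1) / 2
    dx, dy = x1 - x0, y1 - y0
    # (-dy, dx) is the segment direction rotated by 90 degrees.
    return (mid_x - dy * bend, mid_y + dx * bend)


def quadratic_bezier(p0, c, p1, t):
    """Evaluate a quadratic Bezier curve at parameter t in [0, 1]."""
    u = 1 - t
    x = u * u * p0[0] + 2 * u * t * c[0] + t * t * p1[0]
    y = u * u * p0[1] + 2 * u * t * c[1] + t * t * p1[1]
    return (x, y)


start, end = (0, 0), (10, 0)
c = control_point(start, end)
print(c, quadratic_bezier(start, c, end, 0.5))
```

Keeping `bend` small limits how far a curve can stray from the straight line between its endpoints, which matters when the endpoints sit near a coastline.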

Yay, Boston! Very beautiful. However, I feel like I’ve seen this type of project before, just expressing different data (like that one piece that drew the united states by marking streets). I think the data is really interesting, although it is hard to interpret it: is there some interactive-ness going on? Maybe you could have some information pop up if you mouseover a certain point (if you haven’t already done that yet). “What’s the deal with this one guy?”, hehe! -Amanda

>>Boston! Woot! Thanks. No interactivity at the moment; that’s why I didn’t bother showing the actual Processing applet. Interactivity is exactly how I’d like to handle the individual graphs issue (maybe even letting people see the original PDFs? Privacy concerns abound.)

I would like there to be a difference between “from” and “to” for each arrow (maybe the line could get thicker as it moves to its destination). It’s difficult to see where people are flocking to. -MH

>>Definitely, the direction of the lines is crucial. I was struggling with that element of the visualization, both how it would look and how Processing would handle it programmatically. Any advice (especially with applying colored gradients to lines and strokes) is appreciated. Thanks! -Sol

I think the data turned out surprisingly well, in terms of the distribution of geographic locations. I think the visuals could use a little polishing (the color of the dots and the connecting lines).

If you could filter by city, and by who is entering/exiting, it would show more clearly where people are traveling. Even filtering by “traveling east” or “traveling south” could be useful. – Jon
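A direction filter of this kind could be sketched as follows, assuming each edge is stored as a (from, to) pair of (latitude, longitude) tuples running from a past work location to the current home. That representation, the function names, and the sample coordinates are assumptions for illustration.

```python
def moved_east(edge):
    """True if the destination lies east of the origin.

    edge is ((from_lat, from_lon), (to_lat, to_lon)). Within the US,
    east simply means a larger (less negative) longitude.
    """
    (_, from_lon), (_, to_lon) = edge
    return to_lon > from_lon


def moved_south(edge):
    """True if the destination lies south of the origin (smaller latitude)."""
    (from_lat, _), (to_lat, _) = edge
    return to_lat < from_lat


BOSTON = (42.36, -71.06)
SAN_FRANCISCO = (37.77, -122.42)

edges = [(BOSTON, SAN_FRANCISCO), (SAN_FRANCISCO, BOSTON)]
eastbound = [e for e in edges if moved_east(e)]
print(eastbound)
```

The same pattern extends to filtering by city: keep only the edges whose origin or destination matches a chosen location.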

The beziers should be drawn differently. – I agree; I think the “arch” effect obscures some of the information. Worse, it provides a lot of misleading information – shooting people out into the Atlantic.

I think there’s a lot of visual potential here that you can tap. If the lines were drawn differently (as commented above), this could become a really gorgeous artifact.

I’d really like to see it animated based on dates grabbed from the resumes.

>>My scripts right now are really dumb; I don’t yet have a good way to associate dates with cities regardless of the layout used. That said, I want to see this too. 🙂 Thanks! -Sol