I’m interested in interactions that people can have with digital technology in public spaces. These ideas are not new, but digital technology has only recently reached the point in cost, effectiveness and social acceptability where someone can actually turn a crazy idea about a public interaction with computers into reality.

As soon as I heard this project was about “real-time interactions”, I got it in my head to try to recreate the “Secret Word” skit from the 1980s kids’ show “Pee-wee’s Playhouse.” (Alas, I couldn’t find any good video of it.) On the show, whenever a character said the episode’s “secret word”, the rest of the cast (along with most of the furniture in the house, and the kids at home) would “scream real loud.” Needless to say, the characters often tricked each other into saying the secret word, and kids loved it. The basic gist of my interaction is that a microphone would listen in on (but not record, per privacy laws) conversations in a common space like a lab or lobby, doing nothing until someone says a very specific word. Once that word is said, the room itself would somehow go crazy, or, one might say, “scream real loud.” Perhaps the lights would blink, or the speakers would blast an announcement. Confetti would drop. The chair would start talking. Etcetera.

In many regards, building this interaction in two weeks is trivial: lights can be controlled electronically, and microphones are easily (and often) embedded in the world around us. However, one crucial element prevents the average Joe from having their own Pee-wee’s Playhouse: automatically determining when the secret word has been said in a stream of casual conversation. (OK, that and the difficulty of buying humanoid furniture.) For my project, I decided to see whether off-the-shelf voice recognition technology could reliably detect and act on “secret words” that might show up in regular conversation.

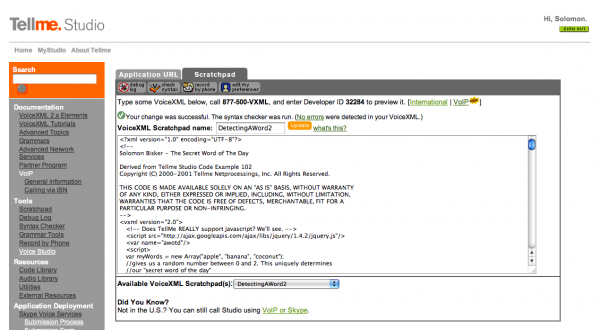

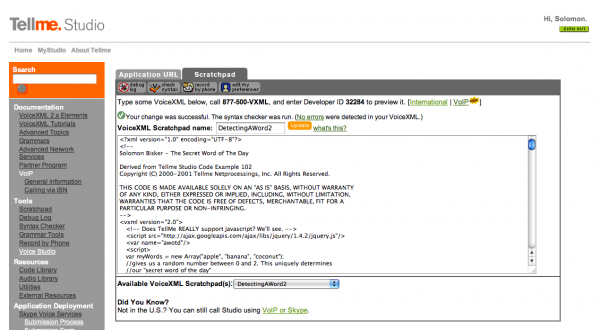

To ensure that the system could be used in a public place, I needed a voice recognition system that didn’t require training, since anyone in the room might be speaking. I also needed something I could learn and play with in a short amount of time. After ruling out open-source packages like CMU Sphinx, I decided to experiment with the commercial platform from Tellme, specifically its developer toolkit, Tellme Studio. Tellme, a subsidiary of Microsoft, provides a platform for designing and hosting telephone-based applications like phone trees, customer service hotlines and self-service information services (such as the movie ticket service Fandango).

Tellme Studio allows developers to design telephone applications by combining VoiceXML, a W3C-standard markup language for speech applications, with JavaScript and other traditional web languages. Once designed, applications can be reached by developers for testing over the public telephone network at manually assigned phone numbers. They can also be used for public-facing applications by routing calls through a PSTN-to-VoIP phone server like Asterisk directly to Tellme’s VoIP servers, but after much fiddling I found the Tellme VoIP servers to be down whenever I needed them, so for now I decided to prototype my service using Skype. Fortunately, the number for testing Tellme applications is a 1-800 number, and Skype offers free 1-800 calls, so I’ve been able to test and debug my application over Skype free of charge.

So how would one use a phone application to facilitate an interaction in public space? The “secret word” interaction requires that people not have to actively engage with a system by dialing in, and telephones are typically used as very active, person-to-person communication media. Well, with calls to Tellme free to me (and free to Tellme as well, if I got VoIP working), it seemed reasonable that if I could keep a call open with Tellme indefinitely and use a stationary, hidden phone with a sensitive enough microphone, I could keep an entire room “on the phone” with Tellme 24 hours a day. And since hiding a phone isn’t practical (or acceptable) for every iteration of this work, I figured I could test my application in a public setting by simply feeding my computer’s microphone into a Skype call with my application (say, Golan conversing with his students during a workshop session).

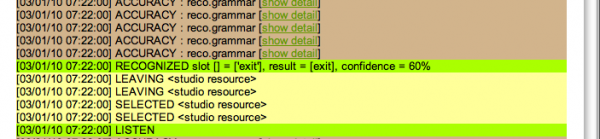

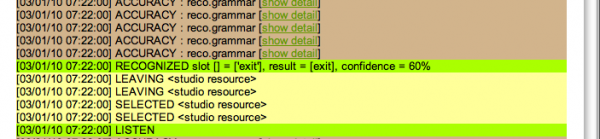

Success! It falsely detects the word "exit", but doesn't quit.

In theory, this is a fantastic idea. In practice, it’s effective, but more than a little finicky. For one, Tellme is programmed to automatically “hang up” when it hears certain words that it deems “exit words.” In my first tests, many words in casual conversation were interpreted as the word “exit”, quitting the application within 1-2 minutes of steady casual conversation. Rather than try to deactivate the exit-word feature entirely, I found a way to programmatically ignore exit events whenever the recognizer’s confidence in the match was below a very high threshold (0.80 in my code), but not so high that I couldn’t say the word clearly and still have it quit. This allowed my application to stay running and translating words for a significant amount of time.
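Outside of Tellme, the rule boils down to a single comparison. Here is a minimal JavaScript sketch of it, runnable under Node.js. The function names are hypothetical; only the 0.80 cutoff is taken from the `<catch event="event.exit">` handler in my VoiceXML, which reads the score from `application.lastresult$.confidence`.

```javascript
// Hypothetical helper mirroring the <catch event="event.exit"> logic:
// only quit when the recognizer is very sure it heard "exit".
const EXIT_CONFIDENCE_THRESHOLD = 0.80; // same cutoff as the VoiceXML

function shouldHonorExit(confidence, threshold = EXIT_CONFIDENCE_THRESHOLD) {
  // Recognizer confidence scores run from 0.0 to 1.0.
  return confidence >= threshold;
}

// Decide what the application does after an "exit" match.
function handleExitEvent(confidence) {
  return shouldHonorExit(confidence) ? "exit" : "keep listening";
}

console.log(handleExitEvent(0.42)); // prints "keep listening" (muffled word mistaken for "exit")
console.log(handleExitEvent(0.93)); // prints "exit" (someone clearly saying "exit")
```

The trade-off described above lives entirely in that one number: raise it and accidental exits get rarer, but a deliberate “exit” has to be enunciated more clearly to count.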

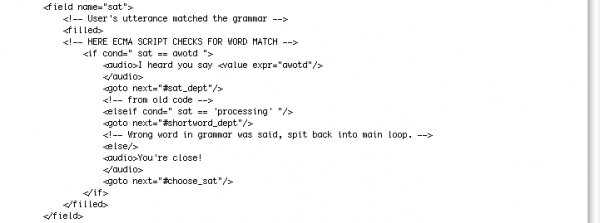

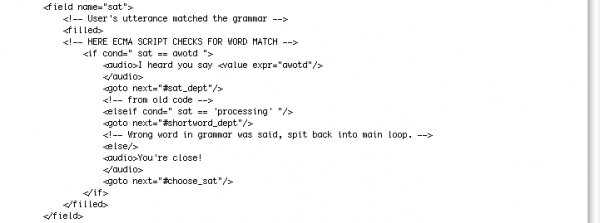

A bit of Tellme code, using JavaScript to check the detected word against today's secret word

Secondly, a true “word of the day” system would need to pick (or at least be told) a new word to detect and act on each day. While the Tellme example code can be tweaked into a single-word recognition system in 5 minutes, it is harder (and not well documented) to make the system look for and detect a different word each day. The good news is that it is not difficult for a decent programmer to get the system to pick a word dynamically from an array (as my sample code does) and trigger a success only when that one word is spoken. Moreover, this word could be retrieved over an AJAX call, so one could use a “Word of the Day” service from a site like Dictionary.com for this purpose (although I was unable to get corporate access to a dictionary API in time).

The bad news is that while VoiceXML and Tellme code can be updated dynamically at run time with JavaScript, the grammars themselves are only read in at compile time. Or, translated from nerd speak: while one can decide what words to SAY dynamically, one needs to prep the DETECTION with all possible words of the day ahead of time (unless more code is written to generate a custom grammar file for each execution). Unfortunately, the more words that are added to a grammar, the less effective it is at picking out any particular word in it, so one can’t just create a grammar of the entire Oxford English Dictionary, pick a single word of the day out of it and call it a day. So in my sample code, I give a starting grammar of “all possible words of the day”: the names of just three common fruits (apple, banana and coconut). The code then selects one of those fruits at random when the call starts, and once ANY fruit is said, the name of that fruit is compared against the EXACT word of the day. Scaling this up to pick and detect a word of the day from a larger “pool” of candidates would require server-side programming.
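For the server-side route, one option is to regenerate the grammar for each call so that it contains only the current secret word (plus the static test word “processing”) instead of every possible word of the day. Below is a minimal sketch for Node.js that emits a standalone SRGS grammar in the same shape as the voice grammar in my code. The function name `buildSecretWordGrammar` is hypothetical, and serving the output as a file and pointing a `<grammar src="...">` element at it is an assumption I have not tried on Tellme.

```javascript
// Hypothetical server-side helper: build an SRGS XML grammar that listens
// for today's secret word (and a fixed test word) and reports it in out.sat.

// Escape characters that are special in XML text content.
function escapeXml(text) {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

function buildSecretWordGrammar(secretWord, testWord = "processing") {
  const items = [secretWord, testWord].map((word) => {
    // JSON.stringify yields a quoted ECMAScript string literal for the tag.
    const tag = `out.sat = ${JSON.stringify(word)};`;
    return `      <item>${escapeXml(word)}<tag>${escapeXml(tag)}</tag></item>`;
  });
  // Standalone SRGS documents need the grammar namespace; the VoiceXML-only
  // "type" attribute is left off here.
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<grammar mode="voice" root="root_rule" tag-format="semantics/1.0"',
    '         version="1.0" xml:lang="en-US"',
    '         xmlns="http://www.w3.org/2001/06/grammar">',
    '  <rule id="root_rule" scope="public">',
    "    <one-of>",
    ...items,
    "    </one-of>",
    "  </rule>",
    "</grammar>",
  ].join("\n");
}

console.log(buildSecretWordGrammar("banana"));
```

Keeping the grammar to two entries should sidestep the problem of large grammars diluting recognition, at the cost of losing the “You’re close!” response when someone says one of the other fruits.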

Finally, a serious barrier to using Tellme to act on secret words is what the Tellme recognizer is optimized for. Detecting words in casual conversation, where words are strung together, is different from detecting direct commands such as menu choices, the sort of thing one normally uses a telephone application for. Perhaps understandably, Tellme optimizes its system to accurately translate short words and phrases rather than to loosely translate longer phrases and sentences. I experimented with a few ways of coercing the system to treat the input as phrases rather than sentences, including trying particular forms versus “sentence prompt” modes, but it seems to take a particularly well-articulated, slow sentence for the system to truly act on all of the words in it. Unfortunately, this roadblock may be impossible to get around without direct access to the Tellme algorithm (but then again, I’ve only been at it for two weeks).

"Remember kids, whenever you hear the secret word, scream real loud."

In summary, I’ve designed a phone application that begins to approximate my “Secret Word of the Day” interaction. If I am talking in a casual conversation, a Skype call dialed into my Tellme application can listen to and translate my conversation in real time, interjecting (with decent but not great accuracy) “Ahhhhhhh! You’ve said the secret word of the day!” in a strangely satisfying text-to-speech voice. Moreover, the application can change the secret word dynamically (although right now the secret word is reselected for each phone call rather than each day; changing that would be simple). All in all, Tellme has proven to be a surprisingly promising platform for public voice recognition interactions around casual conversation. It is flexible, highly programmable, and effective at this task with only very basic tweaking (in my informal tests, it picked up on words I said in sentences about 50% of the time), despite being highly optimized for a totally different problem space.
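Making the secret word truly “of the day” mostly means replacing the `Math.random()` pick with something derived from the date, so every call on the same day gets the same word. A minimal sketch, assuming midnight UTC as the day boundary (my choice, not anything Tellme imposes); `wordOfTheDay` is a hypothetical name, and it is written with `var` and plain functions to stay close to the style of my `<script>` block, though I haven’t run it on Tellme itself.

```javascript
// Hypothetical replacement for the Math.random() pick in the <script> block:
// every call made on the same (UTC) day gets the same secret word.
var MS_PER_DAY = 24 * 60 * 60 * 1000;

function wordOfTheDay(words, date) {
  var now = date || new Date();
  // Whole days elapsed since 1970-01-01, using midnight UTC as the boundary.
  var dayNumber = Math.floor(now.getTime() / MS_PER_DAY);
  // Cycle through the list one word per day; the double modulo keeps the
  // index non-negative even for dates before 1970.
  var index = ((dayNumber % words.length) + words.length) % words.length;
  return words[index];
}

var myWords = ["apple", "banana", "coconut"];
// Two calls on the same UTC day print the same word:
console.log(wordOfTheDay(myWords, new Date(Date.UTC(2010, 8, 20, 9, 0))));
console.log(wordOfTheDay(myWords, new Date(Date.UTC(2010, 8, 20, 23, 0))));
```

In the VoiceXML, the assignment would then become `awotd = wordOfTheDay(myWords);`, with the `console.log` lines dropped.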

Since VoiceXML code is pretty short, I’ve posted my code below in its entirety. Folks interested in making their own phone applications with Tellme should be heartened by the inherent readability of VoiceXML, and by the fact that the “scary looking” parts of the markup can, by and large, simply be ignored and copy-pasted from sample code. That said, this code is derived from sample code that is copyrighted by Tellme Networks and Microsoft, and should only be used on their service, so check yourself before you wreck yourself with this stuff. Enjoy!

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!--
  Solomon Bisker - The Secret Word of The Day
  Derived from Tellme Studio Code Example 102
  Copyright (C) 2000-2001 Tellme Networks, Inc. All Rights Reserved.
  THIS CODE IS MADE AVAILABLE SOLELY ON AN "AS IS" BASIS, WITHOUT WARRANTY
  OF ANY KIND, EITHER EXPRESSED OR IMPLIED, INCLUDING, WITHOUT LIMITATION,
  WARRANTIES THAT THE CODE IS FREE OF DEFECTS, MERCHANTABLE, FIT FOR A
  PARTICULAR PURPOSE OR NON-INFRINGING.
-->
<vxml version="2.0">
  <!-- Does TellMe REALLY support javascript? We'll see. -->
  <!-- <script src="http://ajax.googleapis.com/ajax/libs/jquery/1.4.2/jquery.js"/> -->

  <var name="awotd"/>
  <script>
    var myWords = new Array("apple", "banana", "coconut");
    // Gives us a random number between 0 and 2. This uniquely determines
    // our "secret word of the day".
    var randomnumber = Math.floor(Math.random() * myWords.length);
    awotd = myWords[randomnumber];
  </script>

  <!-- Shortcut to a help statement in both DTMF and voice (for testing) -->
  <!-- Document-level link fires a help event -->
  <link event="help">
    <grammar mode="dtmf" root="root_rule" tag-format="semantics/1.0" type="application/srgs+xml" version="1.0">
      <rule id="root_rule" scope="public">
        <item>2</item>
      </rule>
    </grammar>
    <grammar mode="voice" root="root_rule" tag-format="semantics/1.0" type="application/srgs+xml" version="1.0" xml:lang="en-US">
      <rule id="root_rule" scope="public">
        <item weight="0.001">help</item>
      </rule>
    </grammar>
  </link>

  <!-- The "Eject Button": document-level link quits -->
  <link event="event.exit">
    <grammar mode="voice" root="root_rule" tag-format="semantics/1.0" type="application/srgs+xml" version="1.0" xml:lang="en-US">
      <rule id="root_rule" scope="public">
        <one-of>
          <item>exit</item>
        </one-of>
      </rule>
    </grammar>
  </link>

  <!-- Ignore low-confidence "exit" matches and go back to listening.
       "<" must be escaped as "&lt;" inside an XML attribute. -->
  <catch event="event.exit">
    <if cond="application.lastresult$.confidence &lt; 0.80">
      <goto next="#choose_sat"/>
    <else/>
      <audio>Goodbye</audio>
      <exit/>
    </if>
  </catch>

  <!-- Should I take out DTMF mode? Good for testing at least. -->
  <form id="choose_sat">
    <grammar mode="dtmf" root="root_rule" tag-format="semantics/1.0" type="application/srgs+xml" version="1.0">
      <rule id="root_rule" scope="public">
        <one-of>
          <item>
            <item>1</item>
            <tag>out.sat = "sat";</tag>
          </item>
        </one-of>
      </rule>
    </grammar>

    <!-- The word of the day is either "processing" (static) or the word of the day from our array/an API -->
    <grammar mode="voice" root="root_rule" tag-format="semantics/1.0" type="application/srgs+xml" version="1.0" xml:lang="en-US">
      <rule id="root_rule" scope="public">
        <one-of>
          <!-- The dynamic word of the day -->
          <!-- WE CANNOT MAKE A DYNAMIC GRAMMAR ON PURE CLIENTSIDE,
               DUE TO LIMITATIONS IN SRGS PARSING. WE MUST TRIGGER ON ALL THREE
               AND LET THE TELLME ECMASCRIPT DEAL WITH IT. -->
          <item>
            apple <!-- loquacious -->
            <tag>out.sat = "apple";</tag>
            <!-- <tag>out.sat = "loquacious";</tag> -->
          </item>
          <item>
            banana
            <tag>out.sat = "banana";</tag>
          </item>
          <item>
            coconut
            <tag>out.sat = "coconut";</tag>
          </item>
          <!-- The static word of the day (for testing) -->
          <item>
            processing
            <tag>out.sat = "processing";</tag>
          </item>
        </one-of>
      </rule>
    </grammar>

    <!-- This form listens for the secret word -->
    <initial name="choose_sat_initial">
      <!-- sat is the field item variable that holds the return value from the grammar -->
      <!-- Empty prompt: the application just listens -->
      <prompt>
        <audio/>
      </prompt>
      <!-- User's utterance didn't match the grammar -->
      <nomatch>
        <!-- <audio>Huh. Didn't catch that.</audio> -->
        <reprompt/>
      </nomatch>
      <!-- User was silent -->
      <noinput>
        <!-- <audio>Quiet, eh?</audio> -->
        <reprompt/>
      </noinput>
      <!-- User said help -->
      <help>
        <audio>Say something. Now.</audio>
      </help>
    </initial>

    <field name="sat">
      <!-- User's utterance matched the grammar -->
      <filled>
        <!-- HERE ECMASCRIPT CHECKS FOR WORD MATCH -->
        <if cond="sat == awotd">
          <audio>I heard you say <value expr="awotd"/></audio>
          <goto next="#sat_dept"/>
        <!-- from old code -->
        <elseif cond="sat == 'processing'"/>
          <goto next="#shortword_dept"/>
        <!-- Wrong word in grammar was said, spit back into main loop. -->
        <else/>
          <audio>You're close!</audio>
          <goto next="#choose_sat"/>
        </if>
      </filled>
    </field>
  </form>

  <form id="sat_dept">
    <block>
      <audio>Ahhhhhhh! You've said the secret word of the day!</audio>
      <goto next="#choose_sat"/>
    </block>
  </form>

  <form id="shortword_dept">
    <block>
      <audio>That's a nice, small word!</audio>
      <goto next="#choose_sat"/>
    </block>
  </form>
</vxml>
```

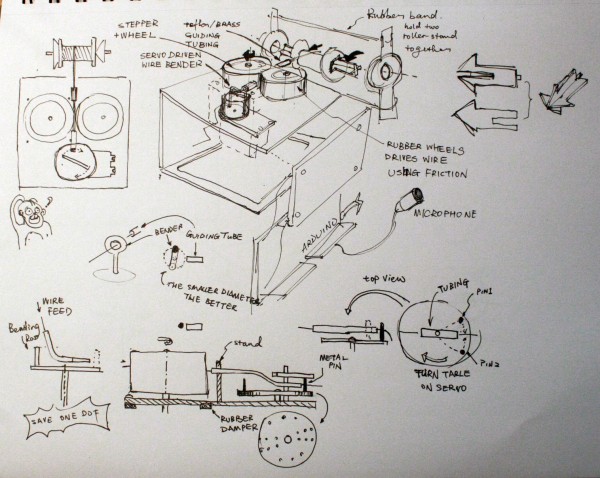

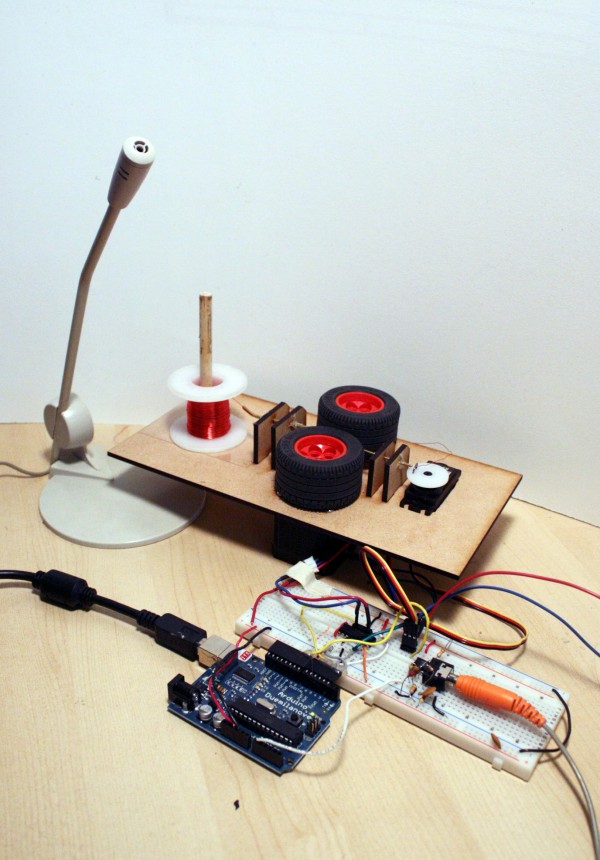

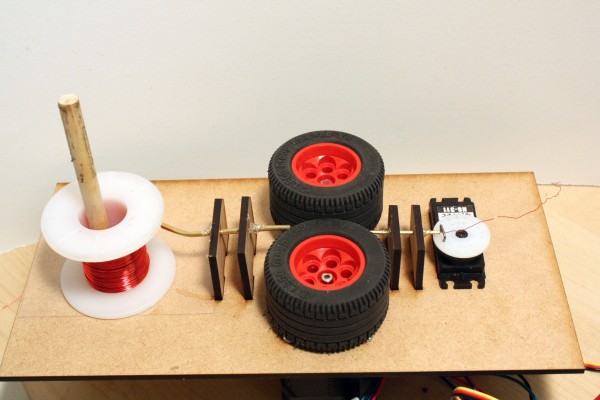

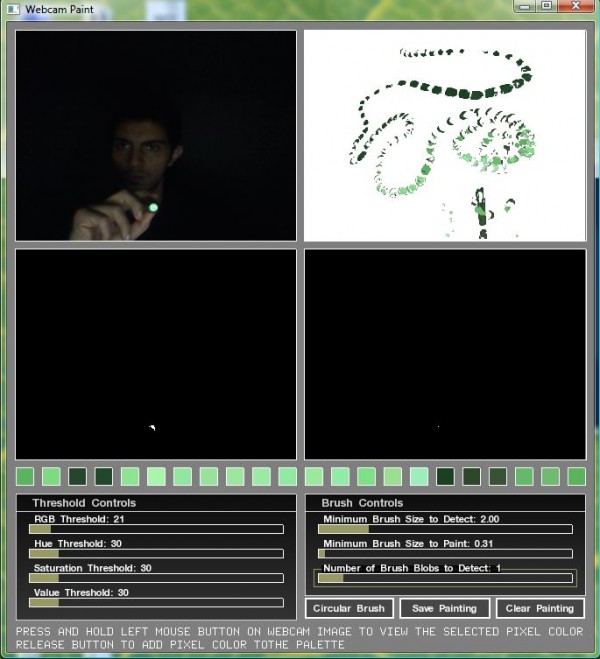

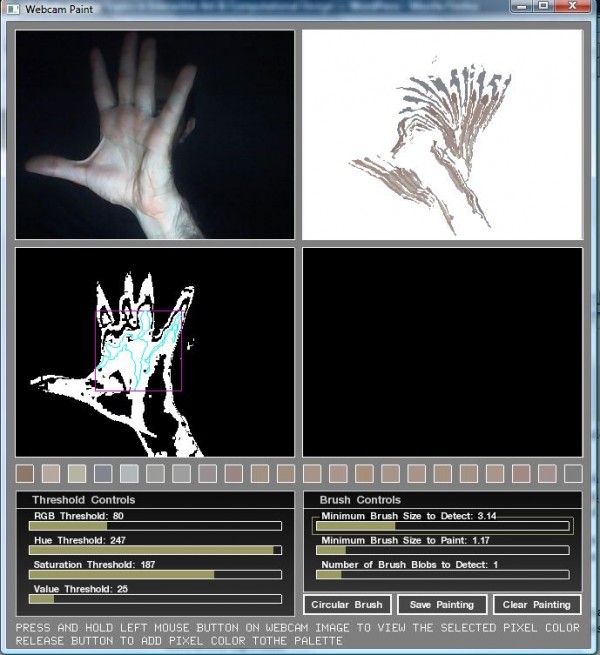

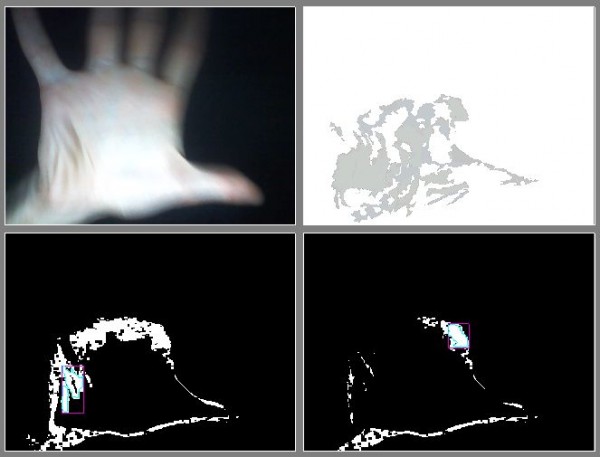

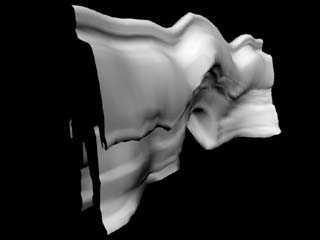

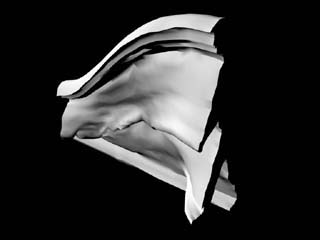

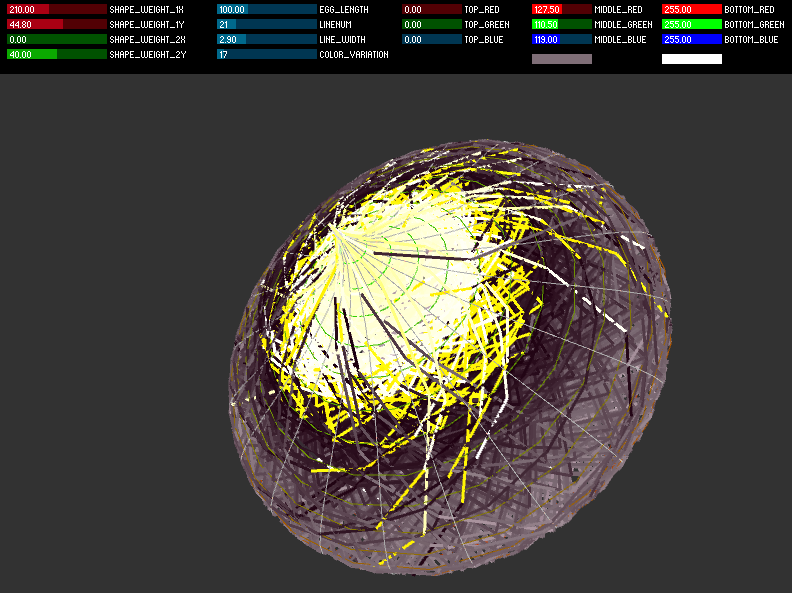

This was an attempt at creating an interface that would “spin” the user’s drawing around a defined object. I drew inspiration from the way a spider spins silk around its prey.
