For my computer simulation project, I decided to focus on the children’s toy Mr. Potato Head. Mr. Potato Head allows children to explore their creativity by placing body parts and accessories on a toy potato in any configuration they wish. With twelve parts included and nine positions on which parts can be placed, there are tens of thousands of possible ways Mr. Potato Head can be assembled. I became curious as to how I could apply simulation techniques to search through the space of possible Mr. Potato Head assemblies. In particular, could I figure out which configuration of parts makes the most “awesome” Mr. Potato Head?

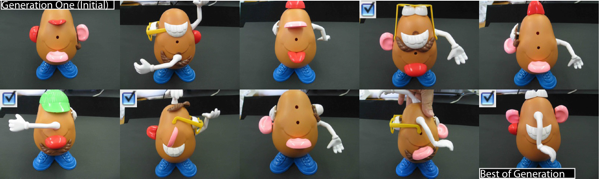

I began with a simple genetic algorithm that creates a population of 10 Mr. Potato Heads, each starting from a naked body (wearing just the shoes, to ensure every generation is able to stand upright).

These 10 initial spuds were produced by an algorithm (developed in Processing) that would go through each hole on the body, one by one, and either place a randomly chosen part in that hole or leave the hole empty. Special rules had to be created for the Hat, Moustache and Glasses parts, given their unusual physical operation: the hat could only be placed when it was drawn for the head hole (making the hat extremely unlikely to appear), the glasses could only be placed when the eyes were also present (so glasses appeared fairly infrequently), and the moustache would always be placed under the piece called for previously (making the moustache itself somewhat common, but any particular combination of moustache and specific part hard to pass on).
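The hole-by-hole fill described above can be sketched roughly as follows. This is a minimal Python illustration, not the original Processing code. The hole names, part names and the chance of leaving a hole empty are all assumptions, since the post does not list them. The hat and glasses rules follow one plausible reading of the text, and the moustache rule is left out, so the moustache is treated as an ordinary part here.

```python
import random

# Hypothetical names: the post does not list the nine holes or twelve parts.
HOLES = ["head", "eyes", "nose", "mouth", "left_ear", "right_ear",
         "left_arm", "right_arm", "feet"]
PARTS = ["hat", "eyes", "glasses", "nose", "moustache", "teeth", "lips",
         "left_ear", "right_ear", "left_arm", "right_arm", "shoes"]
EMPTY_CHANCE = 0.3  # assumed; the post does not give this probability


def random_spud(rng):
    """Fill each hole in turn with a random unused part, or leave it empty."""
    spud = {hole: None for hole in HOLES}
    spud["feet"] = "shoes"  # every spud keeps its shoes so it can stand
    available = [p for p in PARTS if p != "shoes"]
    for hole in HOLES:
        if hole == "feet" or rng.random() < EMPTY_CHANCE:
            continue
        part = rng.choice(available)
        # Assumed reading of the hat rule: a hat drawn for any hole other
        # than the head is discarded and the hole stays empty.
        if part == "hat" and hole != "head":
            continue
        # Assumed reading of the glasses rule: glasses are only kept if
        # the eyes have already been placed somewhere on the body.
        if part == "glasses" and "eyes" not in spud.values():
            continue
        spud[hole] = part
        available.remove(part)
    return spud


rng = random.Random()
population = [random_spud(rng) for _ in range(10)]
```

Discarding a disallowed draw rather than redrawing is what makes the hat rare in this sketch: it survives only when it happens to be drawn while the head hole is being filled.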

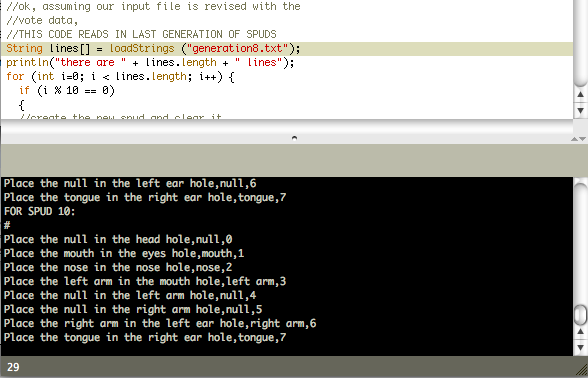

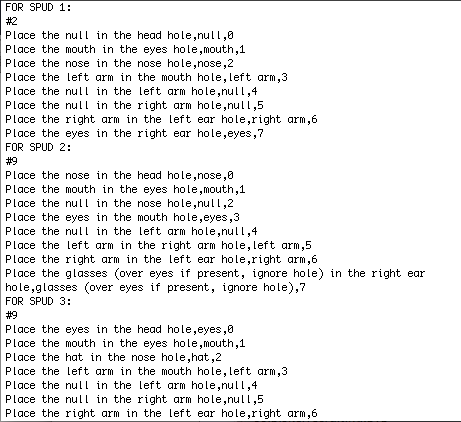

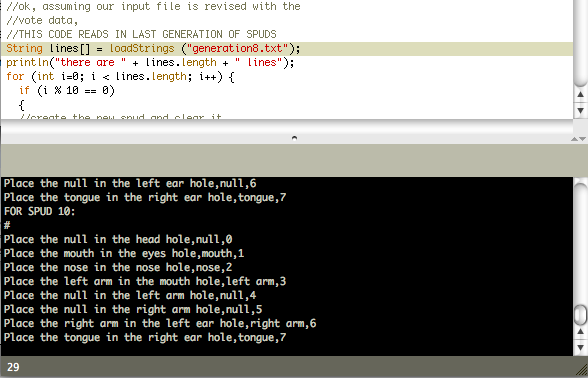

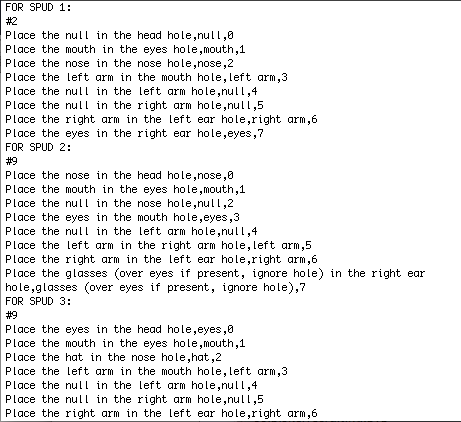

This produced a set of directions for building the initial 10 Mr. Potato Heads, output to a text file in the format shown below.

In addition to construction instructions, the file also contains # signs for each spud, which mark placeholders for me to type in information about that spud later.

With these instructions, my girlfriend and I constructed each of the 10 configurations and took a picture of Mr. Potato Head in that configuration. This allowed us to “realize” each Mr. Potato Head specified by the algorithm.

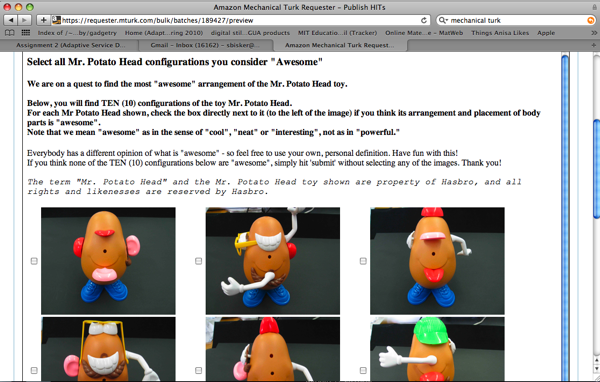

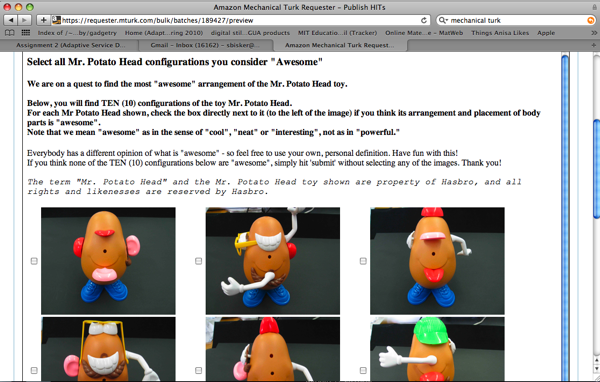

Then, with all ten configurations photographed, we uploaded the photographs to Mechanical Turk and paid people for their opinions on them. We instructed 20 Turkers to look at all 10 configurations and tell us which ones they considered “awesome”. We made it clear that Turkers should use their personal judgement and opinion in deciding which (if any) were “awesome”, with the only stipulation being that they interpret the word “awesome” as “cool, neat or interesting, not as in powerful”.

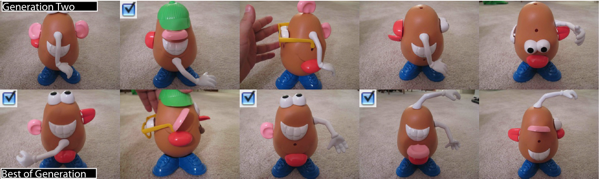

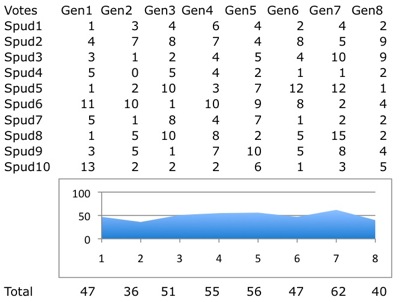

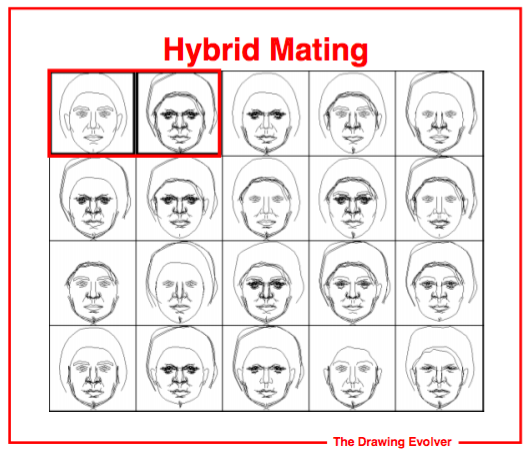

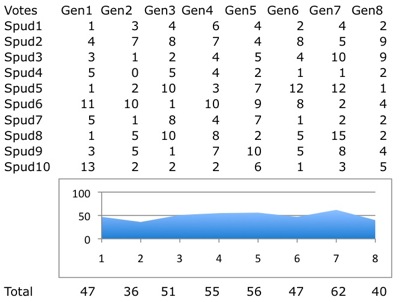

The Turkers’ votes on the Mr. Potato Heads were tallied and used to rank the configurations against each other. The four Mr. Potato Heads receiving the most votes were then chosen to “reproduce” with each other and themselves, in order to create the next generation of spuds.
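As a rough illustration of this selection step, the Python sketch below ranks spuds by vote count and keeps the top four. The vote counts are made up for the example, and breaking ties in favor of the earlier spud is an assumption, since the post does not say how ties were handled. The pairing step is also a guess: four parents paired "with each other and themselves" give exactly ten unordered pairings, which matches the ten spuds per round, but the post does not confirm that pairs were formed this way.

```python
from itertools import combinations_with_replacement


def select_parents(votes, count=4):
    """Return the indices of the `count` spuds with the most votes."""
    # sorted() is stable, so tied spuds keep their original order.
    ranked = sorted(range(len(votes)), key=lambda i: votes[i], reverse=True)
    return ranked[:count]


# Made-up vote counts for ten spuds, for illustration only.
votes = [3, 7, 7, 1, 0, 5, 2, 9, 4, 6]
parents = select_parents(votes)

# Assumed pairing scheme: every unordered pair of parents, including a
# parent paired with itself.
pairings = list(combinations_with_replacement(parents, 2))
```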

With no real genes to speak of, no real concept of gender, and a limited number of parts, Mr. Potato Head reproduction is a bit of an odd process. (Yes, there’s Mrs. Potato Head, but we’ll leave her as an exercise for the reader.) For each hole on the child, a coin is tossed, and depending on the outcome the mom or the dad is allowed to place their part in that hole into the child. If that part has already been used in a previous hole, the dad places his part in the hole instead, and if that is not possible, the hole is left empty. There is also a 20% chance of “mutation” for any given hole, which means that the contributions of both parents are ignored and the hole is instead filled with a piece at random still available for the body (or, sometimes, is left empty).
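The per-hole coin toss and mutation can be sketched as below. This is a hedged Python illustration rather than the original Processing code. The text names the dad as the fallback when a part is already used; the sketch generalizes that to whichever parent lost the coin toss, which is an assumption. How often a mutated hole is left empty is also not stated, so the sketch simply treats "empty" as one more equally likely option. The special rules for the hat, glasses, moustache and shoes are left out.

```python
import random

MUTATION_CHANCE = 0.2  # the 20% per-hole mutation rate from the text


def breed(mom, dad, holes, parts, rng):
    """Build a child spud hole by hole from two parent spuds."""
    child = {}
    used = set()  # parts already placed on the child
    for hole in holes:
        if rng.random() < MUTATION_CHANCE:
            # Mutation: ignore both parents and pick any unused part,
            # or nothing (None stands for an empty hole).
            options = [p for p in parts if p not in used] + [None]
            part = rng.choice(options)
        else:
            # Coin toss decides which parent gets first try at this hole.
            first, second = (mom, dad) if rng.random() < 0.5 else (dad, mom)
            part = first[hole]
            if part in used:
                part = second[hole]  # assumed fallback: the other parent
            if part in used:
                part = None  # neither part is available: leave it empty
        child[hole] = part
        if part is not None:
            used.add(part)
    return child
```

Each spud here is a dictionary mapping a hole name to a part name or `None`, so a call looks like `breed(mom, dad, holes, parts, random.Random())`.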

An example of this evolution in progress (as shown through debug code) can be seen below. The row of numbers at the top represents the algorithm taking in the vote counts, sorting them and picking the best four spuds for reproduction.

This continued for 8 rounds of evolution, creating 10 spuds, uploading them, collecting Turker vote feedback and using that feedback to evolve instructions for the next batch of 10 spuds. (Specifically, the vote counts for the current round are typed into the outputted instruction file for that round, and that file is given as input to the Processing script to select and rebuild the winning spuds so they can reproduce to make the next round’s spuds.)

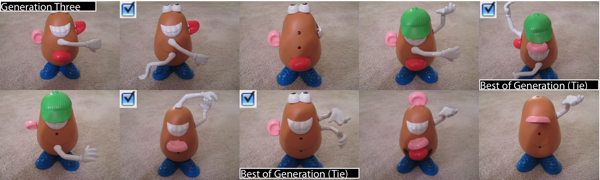

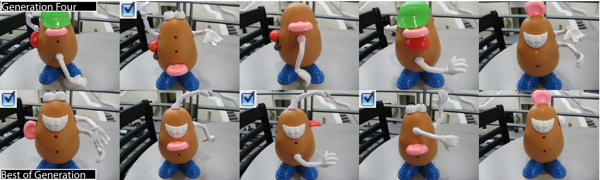

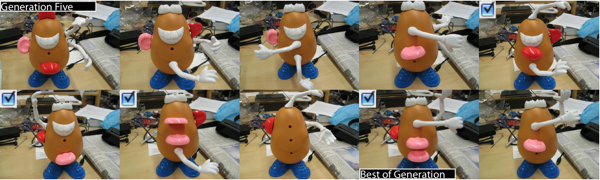

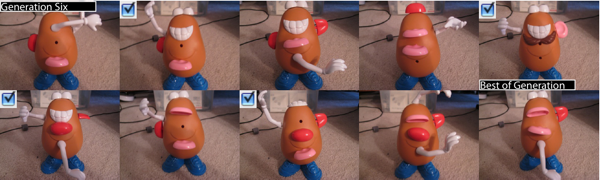

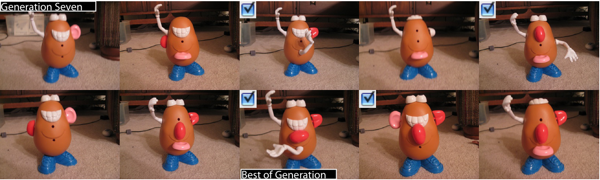

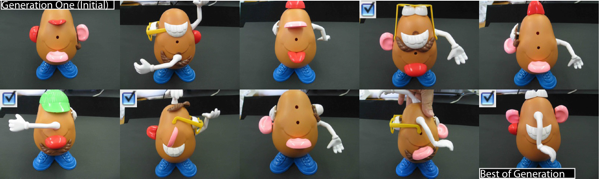

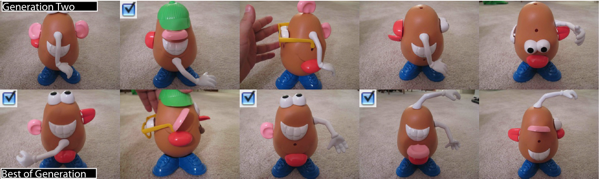

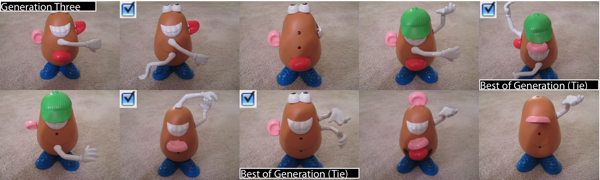

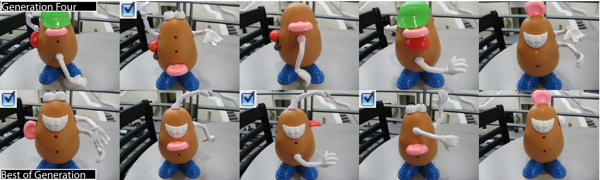

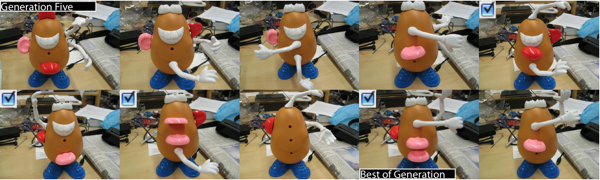

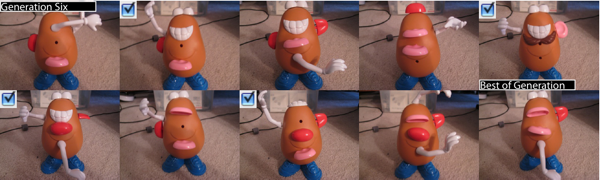

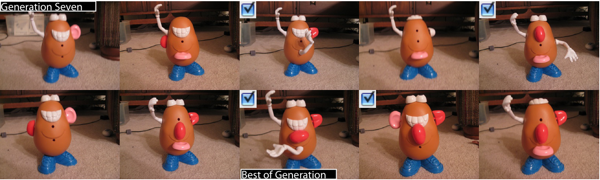

Below, you see the results from all 8 rounds of evolution, with checkmarks indicating which four spuds were selected from that round to seed the next:

And, as voted by Mechanical Turkers in the final round of spud evolution, here are the two Mr. Potato Heads tied for the title of Most “Awesome”:

Analysis:

There are a few things that jump out from this little experiment in pseudo-genetics:

*People really find it “awesome” when the hat is on top, and when the glasses are on the eyes. Those are two recessive traits, as they only occur when two parts are pulled up in combination – but when they do occur, they tend to survive to the next generation.

*Unexpected combinations that still make the spud look “lifelike” but that differ from the “expected” placements tended to fare well. For instance, a combination where the eyes are on the head and the mouth is on the eyes survived for multiple generations, likely because the resulting spuds looked like normal Mr. Potato Heads that were simply “looking upward.”

*We have at least anecdotal evidence that the “awesomeness” improved over time. One Turker who participated in both an early and a later batch commented “I am completely stunned.:). The poses are awesome. It has improved greatly.:)”

However, as the chart of votes below shows, people did not mark more spuds as awesome in later rounds than in earlier rounds. The top-scoring spuds in the later rounds got a similar number of votes to the top-scoring spuds in earlier rounds. This may speak more to how people restrain their praise in surveys than to the “absolute” level of awesomeness present.

*When performing this experiment, I really felt like I was building from a script, and that a computer was telling me what to do. At the same time, the Turkers were expressing quite a bit of autonomy, even having a lot of fun with the assignment (see Turker comments below). This caused me to feel like I was the one doing the work for the Turkers, not the other way around (despite my paying them for their efforts). The people at Threadless do very similar work, making shirts based on the orders of an online community of designers and voters, but somehow this process feels depersonalized, and I don’t feel like the “curator of community” that Threadless makes itself out to be. Perhaps that is because evolution and luck, not solely the votes of the Turker community, make the final call on what an “awesome” spud means in terms of how votes influence the next round.

*People really do take seriously their own opinions of what makes a configuration “awesome.” In that sense, Turker votes were a perfectly valid and usable fitness function for consistently gauging “awesomeness.”

Below is just some of the feedback and comments I got on this experiment from Turkers. Full feedback is included in the code download.

“To be really honest I think that this toy is horrible. And I think that these configurations simply suck, mostly because they all lack symmetry and I can’t really stand asymmetric things.”

“Hello Sir, I do appreciate your kindness of understanding that we are entitled to our own opinion and so I would like to support the pictures that I didn’t choose with an explanation.:) I didn’t choose 3 out of 10. Those images of Mr. Potato appear to be vague in nature and didn’t depict much of Mr. Potato’s character. I also could not agree it to be part of the “awesome” as in the sense of “cool”, “neat” or “interesting” group because of it didn’t show enough action or emotion. I hope that you will not take what I said as something negative but it’s just a constructive criticism to help you improve.:). Best Regards, Christine”

“the one i picked looks awesome because its misleading, hat could be sideways or forward because the lips are on the side, but the teeth are on the front”

“The arms give him so much personality but I like the one with the mustache the best. I think I might buy a Mr. Potato Head again, I really enjoyed the one I had when I was young.”

“Why don’t you use both ears? I would have thought the one with the one arm in the head and the other in the mouth would have been cool with both ears. It looks off balance.”

“Thanks. Very funny and lots of fun. It would have been nice to have the normal non-awesome configuration to compare each of your configurations with.”

The project code, vote counts, evolution output and Turker feedback can all be downloaded here: Source code and supporting files