Final Project: Minute

Overview

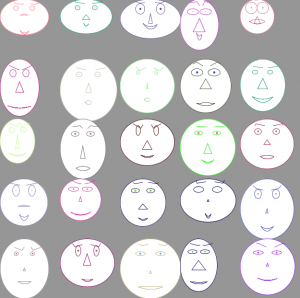

Minute is an examination of individual perception of time, specifically of how everyone perceives the duration of one minute differently. Participants in the project submit videos of what they perceive to be a minute to a database, from which a selection of videos is chosen at random to create a visual arrangement of people perceiving time in relation to one another.

Background

I first became interested in this project because of my own inability to accurately perceive time. When I was younger, I was always the last to finish anything, and I’d wonder whether everyone else was really fast or I was just slow. This got me thinking about what time meant to me personally, and why I perceive it the way I do. My investigation began about a year ago in Ally Reeve’s concept studio class, in which I conceived the idea of creating a video containing an arrangement of people perceiving a minute, grouped by background habits such as whether or not they drink coffee. Then, for my first assignment in this class, I expanded on the idea by creating an application that pulls the videos from a database and arranges them on the screen, generally based on some background factor. For the final project, I wanted to carry this idea even further by making an interactive piece in which people can contribute their own minutes to the database and then observe how their perception of a minute compares to those of everyone else who has contributed.

Implementation

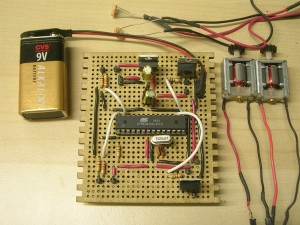

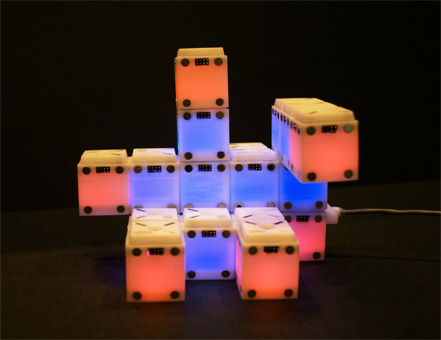

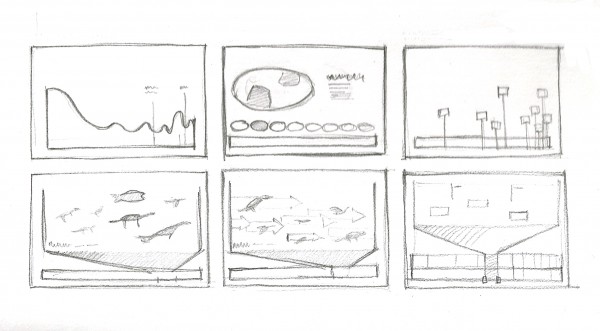

To collect the temporal perceptions of the masses, I constructed a recording booth in which participants can create videos of themselves perceiving their minute. The booth’s frame is made out of plumbing pipes so that it is easy to transport, and the backdrop is a stretch of light-blocking curtain backing. The material is fairly stiff, so it stays in place during the recordings and doesn’t cause much distraction. Additionally, I hung a short curtain on the side of the booth to make it clear where people should enter, and to make it easy to see whether someone was already in the booth without disturbing them. The whole structure is white so that light reflects off its surfaces, since my only source of illumination was a single drawing light aimed at the wall behind the camera. (The booth is small, so I didn’t have room for more lights than that, and shining the light directly on the booth’s occupant completely washed them out.)

Inside the booth are a computer, a desk with a monitor and webcam, and a stool. The computer runs an openFrameworks application that automates the recording process to make it as simple as possible. Participants are invited to sit inside the booth, where instructions for recording their minute are displayed on the screen along with a live stream from the webcam (so that they can adjust their appearance and placement inside the frame before they begin). When they are ready, they click the mouse to begin recording, and then click again when they feel that a minute has gone by. During this time, the webcam feed is not displayed, so that it does not distract from perceiving the passage of time. After the final click, the video is sent wirelessly to the computer outside the booth, where it is saved in a directory containing all the minutes recorded so far.
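The click-driven flow in the booth amounts to a small two-state machine: previewing (live feed visible) and recording (feed hidden). The sketch below is written in plain standard C++17 rather than openFrameworks code, and the names `BoothState` and `RecordingFlow`, as well as the use of plain seconds for timestamps, are assumptions for illustration, not the booth application's actual code.

```cpp
#include <optional>

// Hypothetical states of the booth application.
enum class BoothState { Preview, Recording };

// Hypothetical sketch of the click-to-start, click-to-stop flow.
// Timestamps are plain seconds supplied by the caller.
class RecordingFlow {
public:
    // Handles one mouse click at time `now` (seconds).
    // Returns the length of the perceived minute when a recording ends,
    // or std::nullopt when the click started a new recording.
    std::optional<double> onClick(double now) {
        if (state_ == BoothState::Preview) {
            state_ = BoothState::Recording;  // first click: start recording
            startTime_ = now;
            return std::nullopt;
        }
        state_ = BoothState::Preview;        // second click: stop recording
        return now - startTime_;
    }

    // The live webcam feed is shown only while previewing, so it does not
    // distract from the passage of time during a recording.
    bool showLiveFeed() const { return state_ == BoothState::Preview; }

    BoothState state() const { return state_; }

private:
    BoothState state_ = BoothState::Preview;
    double startTime_ = 0.0;
};
```

In an openFrameworks app, `onClick` would presumably be called from `ofApp::mousePressed` with a clock such as `ofGetElapsedTimef()`, and a returned duration would be the cue to stop the recorder and send the file to the outside computer; those steps are left out of this sketch.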

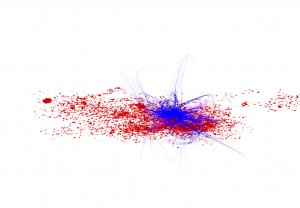

The computer outside the booth runs another openFrameworks application that displays a sample of twenty minutes on a large widescreen monitor, arranged either randomly or by duration. As each video of a perceived minute ends, it immediately cuts to black, leaving a gap in the arrangement. After the last minute has disappeared from the screen, the program creates a brand new arrangement of videos, so no two iterations of the display will be the same. At the beginning of each iteration, the twenty minutes are chosen at random from the directory mentioned above. (Each video is saved in its own file, separately from the application, so that it can be used the next time the program is run.) My hope is that after participants record and submit their minute to the database, they can step outside and see it in the next iteration on the display.
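The selection and arrangement step of the display can be sketched in standard C++17 as below. `Minute`, `Layout`, `chooseIteration`, and `iterationLength` are hypothetical names, and the sketch assumes each video's duration is already known (for example, read when the file is loaded); it is not the display application's actual code.

```cpp
#include <algorithm>
#include <iterator>
#include <random>
#include <string>
#include <vector>

// Hypothetical record for one submitted minute.
struct Minute {
    std::string path;    // video file in the shared directory
    double durationSec;  // how long the participant's "minute" lasted
};

enum class Layout { Random, ByDuration };

// Picks up to `count` minutes at random from the whole collection and
// orders them for one iteration of the display.
std::vector<Minute> chooseIteration(const std::vector<Minute>& all,
                                    std::size_t count, Layout layout,
                                    std::mt19937& rng) {
    std::vector<Minute> chosen;
    // std::sample keeps the picked elements in their input order.
    std::sample(all.begin(), all.end(), std::back_inserter(chosen),
                count, rng);
    if (layout == Layout::ByDuration) {
        std::sort(chosen.begin(), chosen.end(),
                  [](const Minute& a, const Minute& b) {
                      return a.durationSec < b.durationSec;
                  });
    } else {
        // Shuffle so the random layout does not mirror directory order.
        std::shuffle(chosen.begin(), chosen.end(), rng);
    }
    return chosen;
}

// An iteration ends when its longest video finishes; shorter videos cut
// to black earlier and leave gaps until then.
double iterationLength(const std::vector<Minute>& chosen) {
    double longest = 0.0;
    for (const Minute& m : chosen) {
        longest = std::max(longest, m.durationSec);
    }
    return longest;
}
```

Calling `chooseIteration(all, 20, Layout::ByDuration, rng)` once per iteration, and starting the next iteration after `iterationLength` seconds, would reproduce the cycle described above under these assumptions.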

Here is a short sample of the minutes I collected at the Miller Gallery during the BFA/BXA senior exhibit (Fraps unfortunately limits the clip to 30 seconds):

Results

I’m pleased with the outcome of this project, although there are of course many areas I could improve. One question I deliberated over until the end was whether I should include how a person’s background might influence their perception of time. One criticism I’ve heard is that doing so makes the project seem overly scientific for an artistic exploration. As the senior exhibit drew near, I decided that my time would be better spent keeping the piece simple and bringing it to a highly polished state, and looking back, I think this was definitely the right decision. It’s far more interesting just to arrange the videos by duration and listen to the audience’s conjectures about perception.

After completing the project, I made a couple of observations. While the first participants were very reserved in their recordings, later participants became more and more adventurous in how they presented themselves. This was extremely interesting to observe, since I’m still very curious about how personality might play a role in how people perceive time. Also, I originally intended for only one person to record their minute at a time, but some people really wanted to have someone else in the booth with them. I felt that this conveyed some aspect of their personality that might otherwise be lost, so I decided to let pairs of people perceive a minute together.

Lastly, there are a few other details that could be tighter, but I don’t feel that they detract from the piece in any major way. The booth is hard to keep clean because it’s all white, and sometimes the pipes don’t stay joined very well. I ended up taping and nailing them into place, but it would have looked much cleaner if I had drilled a hole through each of the overlapping sections and placed a pin through the hole to hold them together. The recording application also takes a few seconds to copy the video over to the other computer, and during that time it looks as if the program has frozen. Occasionally, people click the mouse multiple times; each click is queued and delivered to the application once it recovers, resulting in a number of quick scrap recordings that are still sent to the database. Finally, it would have been nice to include a feature in the display application so that recently submitted minutes are always selected. This way, people would be guaranteed the opportunity to see themselves right after recording.
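The recent-minutes feature wished for above could live in the display's selection step. The sketch below, again in standard C++17 with hypothetical names (`Submission`, `chooseWithRecent`), reserves slots for the newest submissions and fills the rest at random. It also shows one assumed way to keep the scrap recordings off the screen: skipping anything shorter than a threshold. The threshold is an illustrative value chosen by the caller, not one measured at the exhibit.

```cpp
#include <algorithm>
#include <cstddef>
#include <iterator>
#include <random>
#include <string>
#include <vector>

// Hypothetical record; `submittedAt` is seconds since some fixed epoch.
struct Submission {
    std::string path;
    double durationSec;
    double submittedAt;
};

// Hypothetical selection rule: always include the `recentCount` newest
// submissions, then fill the remaining slots at random from older ones.
// Recordings shorter than `minDurationSec` are treated as accidental
// double-click scraps and skipped.
std::vector<Submission> chooseWithRecent(std::vector<Submission> all,
                                         std::size_t count,
                                         std::size_t recentCount,
                                         double minDurationSec,
                                         std::mt19937& rng) {
    // Drop likely scrap recordings.
    all.erase(std::remove_if(all.begin(), all.end(),
                             [&](const Submission& s) {
                                 return s.durationSec < minDurationSec;
                             }),
              all.end());

    // Sort newest first.
    std::sort(all.begin(), all.end(),
              [](const Submission& a, const Submission& b) {
                  return a.submittedAt > b.submittedAt;
              });

    // Guarantee slots for the newest submissions.
    std::size_t guaranteed = std::min({recentCount, count, all.size()});
    auto split = all.begin() + static_cast<std::ptrdiff_t>(guaranteed);
    std::vector<Submission> chosen(all.begin(), split);

    // Fill the remaining slots at random from the older submissions.
    std::sample(split, all.end(), std::back_inserter(chosen),
                count - guaranteed, rng);
    return chosen;
}
```

The result could then be arranged randomly or by duration as before; the design choice here is simply that recency wins a slot, while the rest of the arrangement stays random.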

Documentation and reactions to the project: