Tim Sherman – Project 4 – Generative Monocuts

When I began this project, I knew I wanted to make some kind of generative cinema. In particular, I wanted to be able to make supercuts, or monocuts: videos made of sequences of clips cut from movies or TV shows that all contain the same word or phrase. The first step was figuring out exactly how to do this.

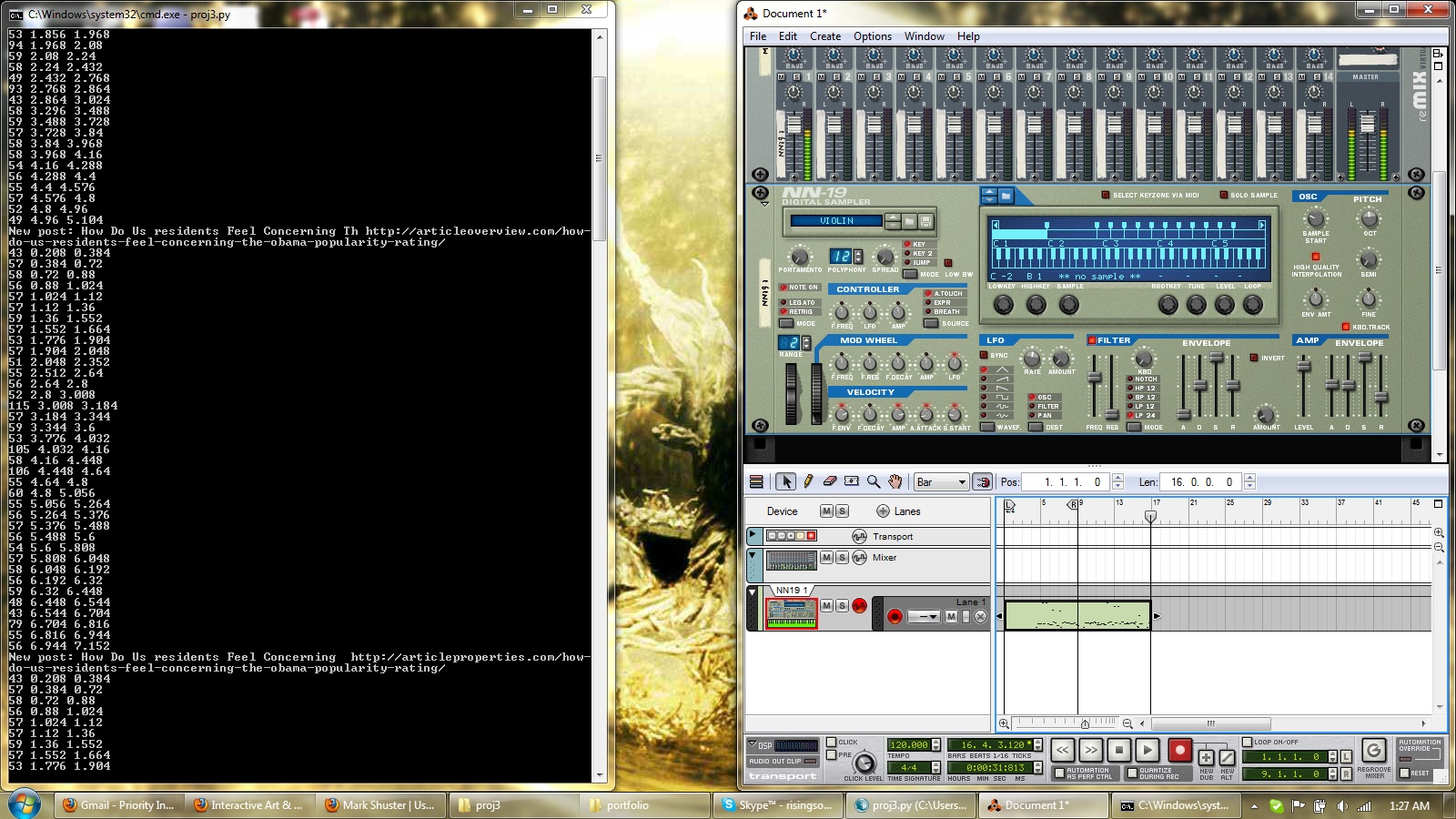

I decided to build a library of movie files paired with subtitle files in the .srt format. This subtitle format is basically just a text file made up of a sequence of numbered subtitles, each with a start and end timecode, so it’s easy to parse. I planned to parse the subtitles into a database, search it for a term, get the timecodes of the matching subtitles, and use that information to chop the scenes I wanted out of the movie.
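To give a sense of how little parsing the format needs, here is a minimal sketch in Python. It is not the Processing code I actually used; the names `Cue`, `to_seconds`, and `parse_srt` are made up for illustration, and it assumes a well-formed file where each subtitle is an index line, a timecode line, and one or more text lines, separated by blank lines.

```python
import re
from typing import List, NamedTuple


class Cue(NamedTuple):
    """One subtitle entry (hypothetical structure for this sketch)."""
    index: int
    start: float  # seconds from the start of the movie
    end: float    # seconds from the start of the movie
    text: str


# Matches a timecode line such as "00:01:02,500 --> 00:01:04,000".
TIMECODE = re.compile(
    r"(\d+):(\d{2}):(\d{2})[,.](\d{3})\s*-->\s*(\d+):(\d{2}):(\d{2})[,.](\d{3})"
)


def to_seconds(hours: str, minutes: str, seconds: str, millis: str) -> float:
    """Convert the four timecode fields into seconds."""
    return int(hours) * 3600 + int(minutes) * 60 + int(seconds) + int(millis) / 1000


def parse_srt(data: str) -> List[Cue]:
    """Parse the contents of an .srt file into a list of cues."""
    cues = []
    normalized = data.replace("\r\n", "\n").lstrip("\ufeff").strip()
    # Subtitles are separated from each other by blank lines.
    for block in re.split(r"\n\s*\n", normalized):
        lines = block.split("\n")
        if len(lines) < 2 or not lines[0].strip().isdigit():
            continue  # skip anything that doesn't start with an index line
        match = TIMECODE.match(lines[1].strip())
        if not match:
            continue  # skip blocks with a malformed timecode line
        fields = match.groups()
        text = " ".join(line.strip() for line in lines[2:])
        cues.append(Cue(int(lines[0]), to_seconds(*fields[:4]), to_seconds(*fields[4:]), text))
    return cues
```

Multi-line subtitles are joined into one string here so that a phrase split across two lines can still be found by a later search.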

I modified code Golan had written for parsing .srt files in Processing so that the subtitles were stored in an SQLite3 database using SQLibrary. I then wrote a Ruby application that reads the database, finds the subtitles containing whatever word or phrase you search for, and then runs ffmpeg from the command line to chop a clip out of the movie file for each occurrence.
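The store, search, and chop steps can be sketched roughly like this. This is a Python illustration rather than my Ruby application, and the table layout (`subtitles` with `movie`, `start_s`, `end_s`, `text`), the function names, and the half-second padding are all assumptions made for the example. The ffmpeg command is only built here, not run; `-ss` sets the start position, `-t` the duration, and `-y` overwrites an existing output file.

```python
import sqlite3


def create_db(path=":memory:"):
    """Open (or create) a database with one table of subtitles (hypothetical schema)."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS subtitles ("
        "movie TEXT, start_s REAL, end_s REAL, text TEXT)"
    )
    return conn


def add_cues(conn, movie, cues):
    """Store (start_s, end_s, text) tuples for one movie file."""
    conn.executemany(
        "INSERT INTO subtitles (movie, start_s, end_s, text) VALUES (?, ?, ?, ?)",
        [(movie, start_s, end_s, text) for start_s, end_s, text in cues],
    )
    conn.commit()


def find_cues(conn, term):
    """Return every subtitle containing the term, ordered by movie and time."""
    # Escape LIKE wildcards so the term is matched literally.
    escaped = term.replace("\\", "\\\\").replace("%", "\\%").replace("_", "\\_")
    rows = conn.execute(
        "SELECT movie, start_s, end_s, text FROM subtitles "
        "WHERE text LIKE ? ESCAPE '\\' ORDER BY movie, start_s",
        ("%" + escaped + "%",),
    )
    return rows.fetchall()


def clip_command(movie_path, start_s, end_s, out_path, pad_s=0.5):
    """Build the ffmpeg argument list that cuts one clip out of a movie."""
    start = max(0.0, start_s - pad_s)  # never seek before the start of the file
    duration = (end_s + pad_s) - start
    return [
        "ffmpeg", "-y",
        "-ss", f"{start:.3f}",
        "-i", movie_path,
        "-t", f"{duration:.3f}",
        out_path,
    ]
```

Each row returned by `find_cues` can be passed to `clip_command`, and the resulting list handed to something like `subprocess.run`. Note that SQLite's `LIKE` is a case-insensitive substring match for ASCII text, so a search for a short word will also hit longer words that contain it.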

The good news was that the code I wrote totally worked. Building and searching the database went smoothly, and the program didn’t miss any occurrences or return any false positives. However, I soon found myself plagued with problems beyond the scope of code.

The first of these problems was how hard it was to quickly build a large library of films with matching subtitles. I found two real avenues for getting movies: ripping and converting from DVD, or torrenting from various websites. Each had its own problems. Ripping and converting guaranteed that I got a subtitle file with the movie, but it took a very long time both to rip the DVD and then to convert from VIDEO_TS to .avi or any other format. Torrenting, while much quicker, didn’t always provide .srt files with the movies. I had to use websites like OpenSubtitles to try to find matching subtitle files, which then had to be checked carefully by hand to make sure they lined up. Being off by even a second meant that the chopped clip wouldn’t necessarily contain the search term.

These torrenting issues were compounded by the fact that working with many different undocumented video files was a nightmare. Some files were poorly or oddly encoded and behaved unpredictably when ffmpeg read them: sometimes ffmpeg ignored the duration specified by the timecodes and put the whole movie into the chopped clip, and sometimes it simply didn’t chop at all. This limited my library of films even further.

The final issue I came across was a more artistic one. It was solvable with code, but it wasn’t something I was fully ready to approach in a week. Once I had the clips, I had to figure out how to assemble them into a longer video, and I wasn’t sure of the best way to do this. I considered a few different approaches and made sketches of them using VLC and other programs. I tried sorting by the time a clip occurred in the movie and by the length of the clip, but nothing produced consistently interesting results. As I couldn’t find one solution I was happy with, I decided to leave the problem of assemblage up to the user. The tool is still incredibly helpful for making a monocut, as it gives you all the clips you need, and you don’t need to watch the movie or cut out what you want by hand.
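For reference, the two orderings I tried amount to nothing more than a sort on different keys. A tiny Python sketch, where the `Clip` structure and function names are made up for illustration:

```python
from typing import List, NamedTuple


class Clip(NamedTuple):
    """A chopped clip and where it came from in the movie (hypothetical structure)."""
    path: str
    start_s: float
    end_s: float


def by_position(clips: List[Clip]) -> List[Clip]:
    """Order clips by when they occur in the source movie."""
    return sorted(clips, key=lambda clip: clip.start_s)


def by_length(clips: List[Clip]) -> List[Clip]:
    """Order clips from shortest to longest."""
    return sorted(clips, key=lambda clip: clip.end_s - clip.start_s)
```

Any other ordering a user wants to try is just another key function over the same list.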

Embedded below is a video made by cutting out only the scenes containing the word “Motherfucker” from Pulp Fiction. While the program supports multiple movies in the database, my library-building issues meant I couldn’t find a great search that pulled interesting clips from multiple movies.

*Video coming, my internet’s too slow to upload at the moment.*