Abstract

Knitting is often dismissed as a way to pass time for the excessively hip or the excessively old, and its potential for digital fabrication is ignored. But at its most basic level, knitting is an extremely robust method of transforming one continuous line into a flexible three-dimensional form, seamlessly incorporating color patterns, texture, and volume.

For this project I wanted to explore knitting as a way to fabricate textiles based on generative systems, choosing to focus on Reaction Diffusion.

Inspiration

Although digitally controlled generative knitting patterns barely exist, hand knitters have for ages been applying mathematical principles to produce not only interesting color patterns, but also form and texture.

(From left to right: machine-knit generative fair-isle by Becky Stern, a hand knit from Sandra Backlund's pool collection, and the Cables with Whisky sweater by Lucy Neatbody.)

My inspiration for this project largely came from Sandra Backlund, one of my absolute favorite knitwear designers. By hand, and without any particular pattern, she creates beautiful volumes and textures. However, due to the tedious nature of the work, she is only able to produce a handful of pieces each season, each entirely unique and impossible to replicate. I started wondering if this process of creating knitwear could be digitized and algorithmically generated, like the work I’d done before with flocking. Becky Stern has also been doing some amazing work hacking knitting machines to print out patterns in fair-isle, and I was delighted to find out that texture-producing stitches could be created with the same approach. However, as anyone who has knitted before knows, just randomly generating stitches, crossing your fingers, and hoping for the best often produces horrible and ill-fitting results. Fortunately, there is a large community of knitters who have been applying mathematics, such as Fibonacci sequences, cellular automata, and Möbius strips, to the craft for ages. Lucy Neatbody was actually one of the first to apply such methods to texture, using probability to pseudo-randomly cable a sweater.

Process

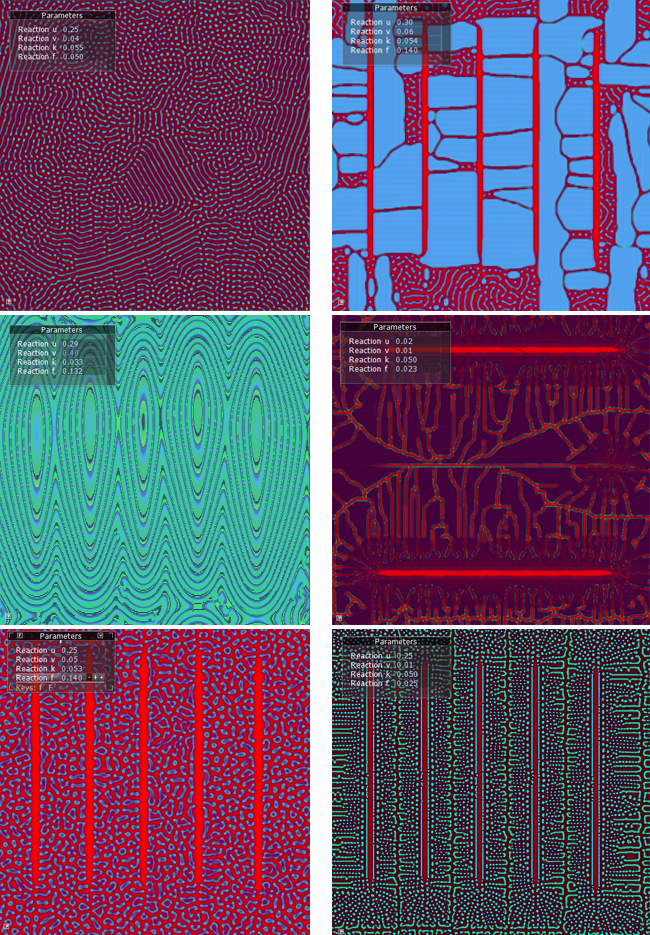

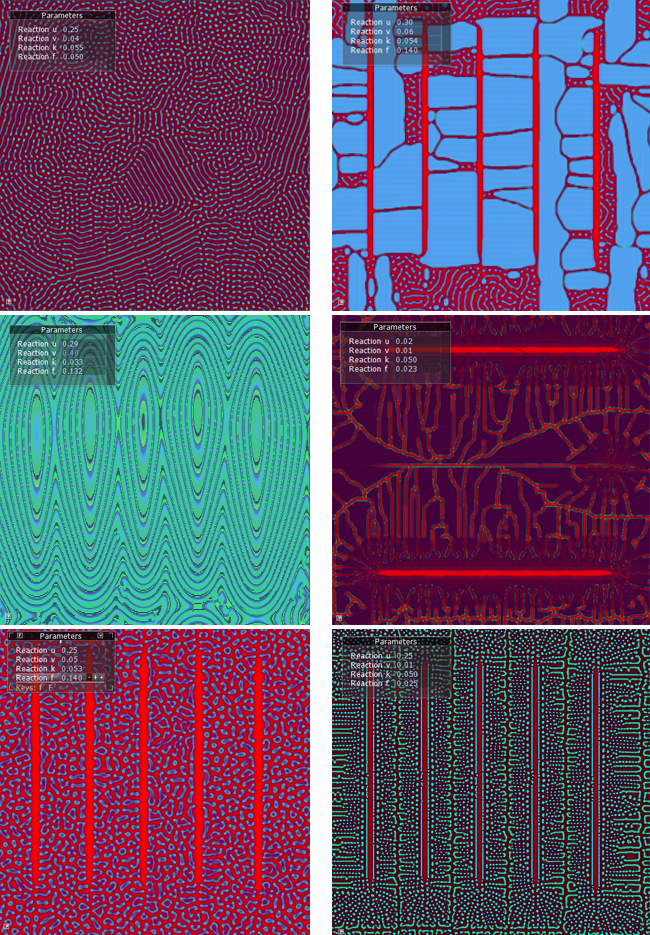

Since even the most intricate patterns can be reduced to a very simple set of rules, knitting lends itself well to being combined with more traditional generative algorithms. After experimenting with several, including Voronoi diagrams and diffusion-limited aggregation, I decided to focus on Reaction Diffusion, using the Gray-Scott equations.
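For reference, the Gray-Scott model is usually written as the pair of equations below. This is the standard textbook form, not necessarily the exact parameterization used in my application: $u$ and $v$ are the concentrations of two chemicals, $D_u$ and $D_v$ are their diffusion rates, $F$ is the feed rate, and $k$ is the kill rate.

$$
\begin{aligned}
\frac{\partial u}{\partial t} &= D_u \nabla^2 u - u v^2 + F\,(1 - u) \\
\frac{\partial v}{\partial t} &= D_v \nabla^2 v + u v^2 - (F + k)\,v
\end{aligned}
$$

The $u v^2$ term is the reaction itself: it converts $u$ into $v$, while the feed term replenishes $u$ and the kill term removes $v$. Small changes to $F$ and $k$ are what shift the result between spots, stripes, and mazes.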

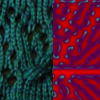

Reaction Diffusion is a chemical process that produces a large range of emergent patterns in nature, from fish scales to leopard spots and zebra stripes, whose hosts are often killed so their pelts can be used for textiles. By using it as the basis for a pattern-making application, I liked the potential for creating and then fabricating patterns of our own.

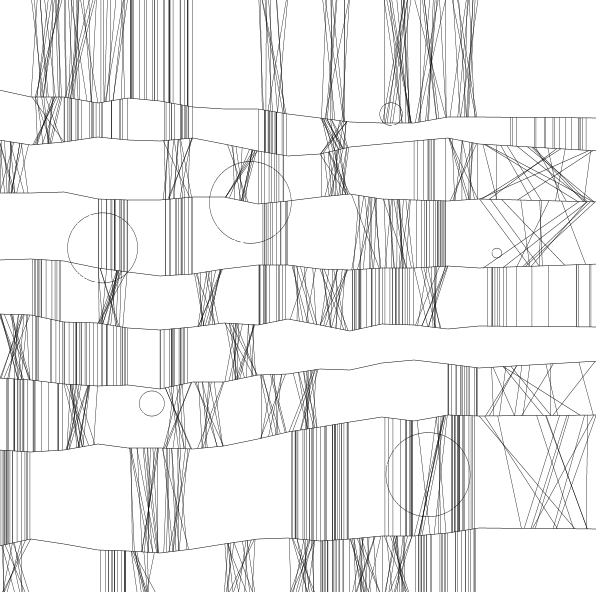

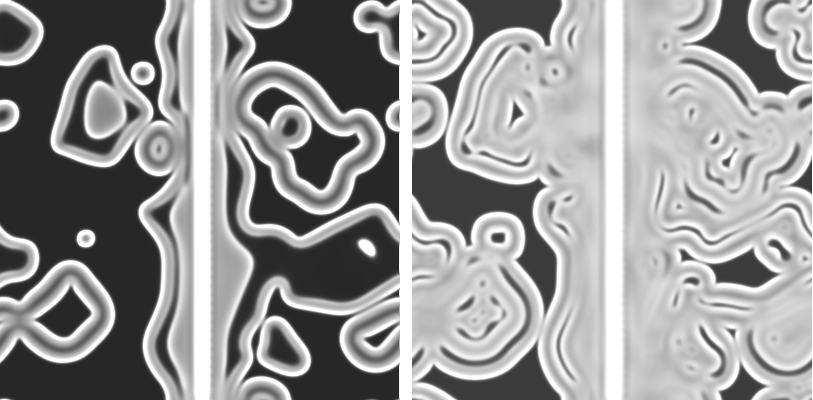

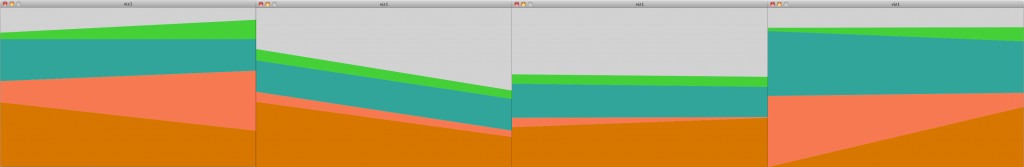

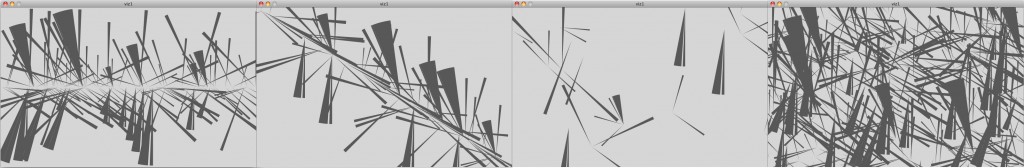

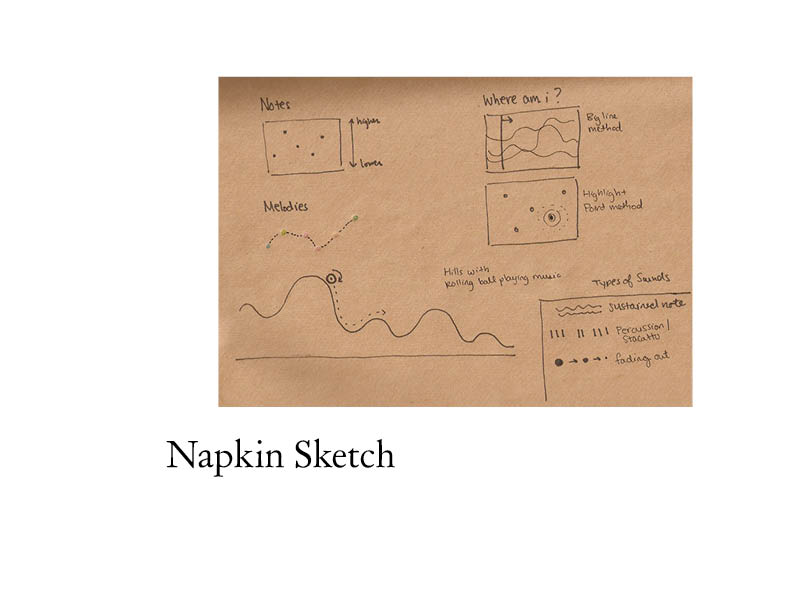

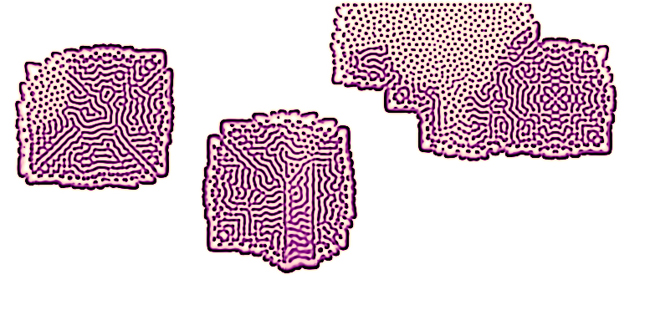

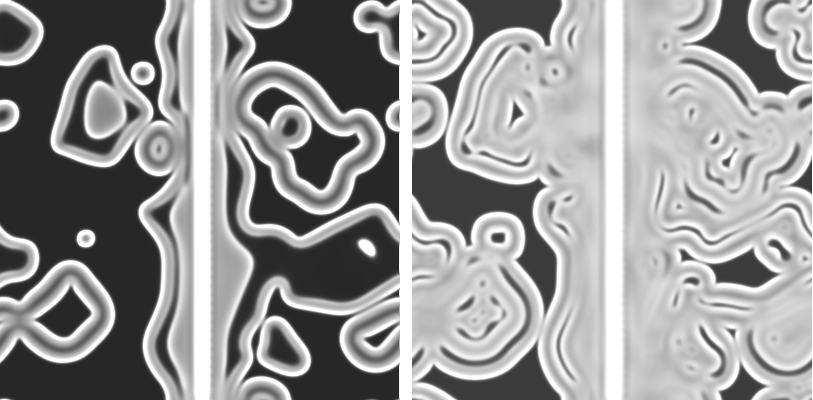

I began experimenting in Processing, using toxiclibs’ excellent simutils library, “growing” reaction diffusion to fit various patterns and forms, and then focusing on creating multiple layers of it in order to produce variety in the pattern.
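As a rough illustration of what one update of such a simulation involves, here is a minimal sketch in plain Python rather than Processing. It does not use the library mentioned above; the function names, the grid size, and the parameter values (`du`, `dv`, `feed`, `kill`, `dt`) are all assumptions chosen for illustration, not the settings behind the images in this post.

```python
def laplacian(grid, x, y):
    """Five-point Laplacian at (x, y) with wrap-around edges."""
    h, w = len(grid), len(grid[0])
    return (grid[y][(x - 1) % w] + grid[y][(x + 1) % w]
            + grid[(y - 1) % h][x] + grid[(y + 1) % h][x]
            - 4 * grid[y][x])


def gray_scott_step(u, v, du=0.16, dv=0.08, feed=0.035, kill=0.065, dt=1.0):
    """Advance both chemical grids by one explicit Euler step.

    All default values are illustrative assumptions.
    """
    h, w = len(u), len(u[0])
    new_u = [[0.0] * w for _ in range(h)]
    new_v = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            reaction = u[y][x] * v[y][x] ** 2  # u is converted into v
            new_u[y][x] = u[y][x] + dt * (
                du * laplacian(u, x, y) - reaction + feed * (1 - u[y][x]))
            new_v[y][x] = v[y][x] + dt * (
                dv * laplacian(v, x, y) + reaction - (feed + kill) * v[y][x])
    return new_u, new_v


def seeded_grids(w, h, seed_size=4):
    """Start with u = 1 and v = 0 everywhere, plus a square seed in the middle."""
    u = [[1.0] * w for _ in range(h)]
    v = [[0.0] * w for _ in range(h)]
    x0, y0 = (w - seed_size) // 2, (h - seed_size) // 2
    for y in range(y0, y0 + seed_size):
        for x in range(x0, x0 + seed_size):
            u[y][x], v[y][x] = 0.5, 0.25
    return u, v


# Grow the pattern outward from the seed for a few hundred steps.
u, v = seeded_grids(32, 32)
for _ in range(200):
    u, v = gray_scott_step(u, v)
```

Nested Python loops like these are slow for anything but a tiny grid, which is the same per-pixel cost that makes a CPU implementation sluggish at image resolution. The seed shape is where "growing" the reaction to fit a form would come in: replacing the square in `seeded_grids` with any mask changes where the pattern starts.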

However, Processing became frustratingly slow and unwieldy, especially when it came to seeding the diffusion from an underlying layer of the reaction. So, following a tutorial by Robert Hodgin and rdex-fluxus on how to recreate Reaction Diffusion with shaders in Cinder, I developed my final application.

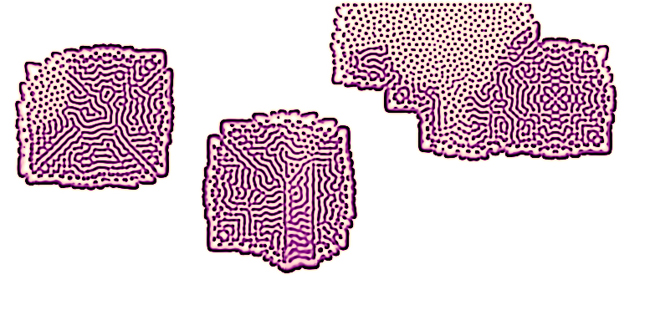

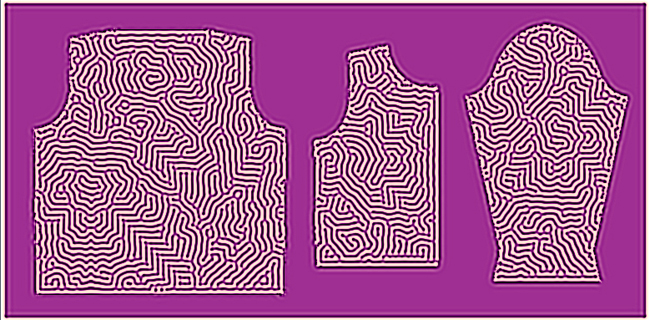

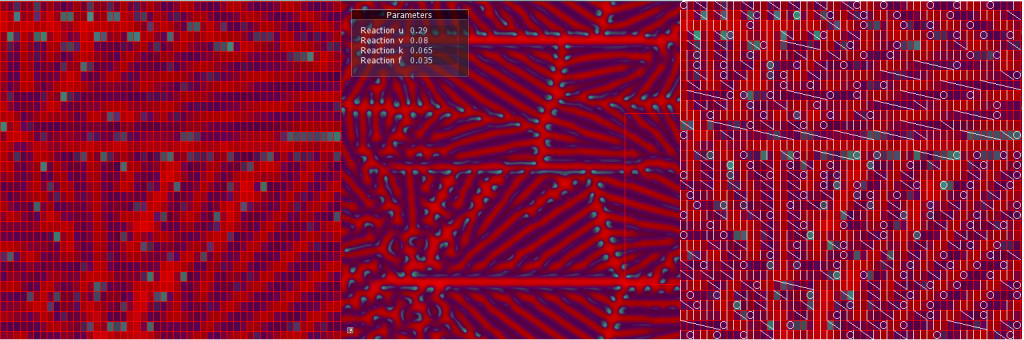

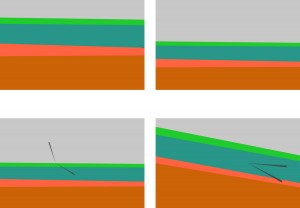

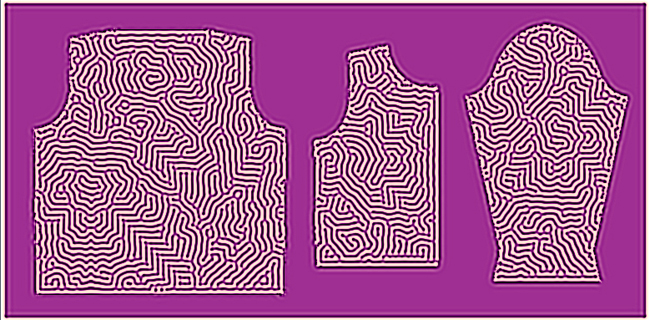

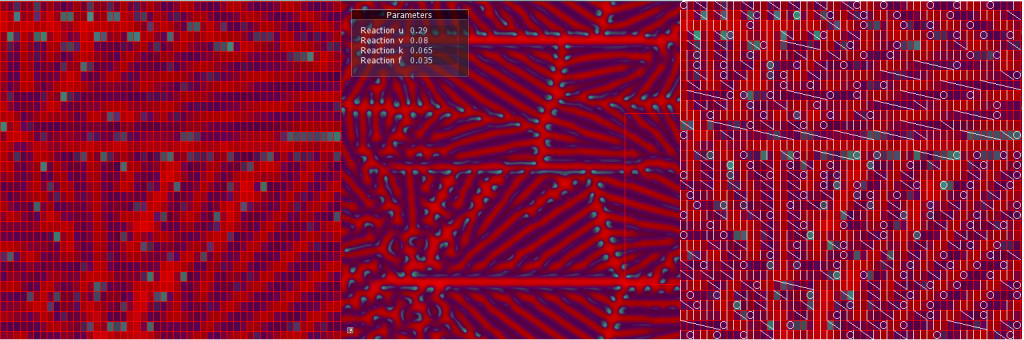

I produced “swatches” of pattern by adjusting the parameters of the equation and through user interaction, then translated them into knitting patterns by analyzing the colors of the pixels in each row and mapping them to a series of cables and eyelets.
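To give a sense of how a row of pixels can become a row of stitches, here is a minimal sketch of one possible mapping. The thresholds, the symbols (`K`, `O`, `C1x1`, `C3x3`), and the rule that runs of mid-tone pixels become cables are all hypothetical, made up for this example rather than taken from my application.

```python
def row_to_stitches(row, low=85, high=170):
    """Translate one row of grayscale pixels (0-255) into stitch symbols.

    Hypothetical rules, for illustration only:
      bright pixel (>= high)       -> "O"    eyelet, 1 stitch wide
      run of 6+ mid-tone pixels    -> "C3x3" cable, 6 stitches wide
      run of 2-5 mid-tone pixels   -> "C1x1" cable, 2 stitches wide
      anything else                -> "K"    plain knit, 1 stitch wide
    """
    stitches = []
    i = 0
    while i < len(row):
        pixel = row[i]
        if pixel >= high:
            stitches.append("O")
            i += 1
        elif pixel >= low:
            # Measure how many mid-tone pixels follow in a row.
            run = 1
            while i + run < len(row) and low <= row[i + run] < high:
                run += 1
            if run >= 6:
                stitches.append("C3x3")
                i += 6
            elif run >= 2:
                stitches.append("C1x1")
                i += 2
            else:
                stitches.append("K")
                i += 1
        else:
            stitches.append("K")
            i += 1
    return stitches


print(row_to_stitches([0, 200, 100, 100, 0]))
```

Each symbol consumes as many pixels as the stitch is wide, so the stitch count of a row always matches its pixel count. A real eyelet pairs a yarn-over with a decrease, which this sketch ignores for simplicity.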

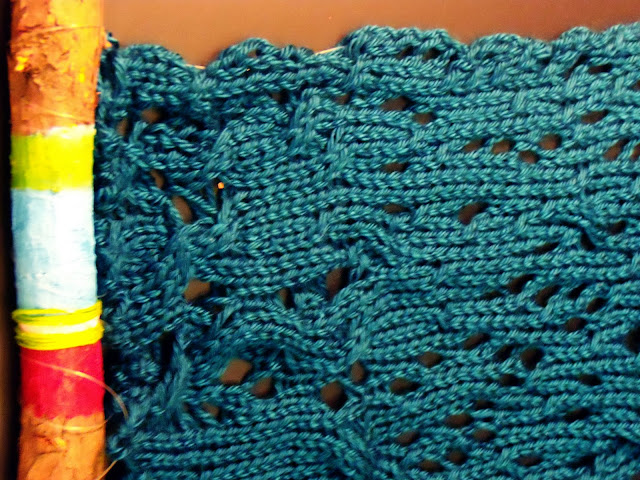

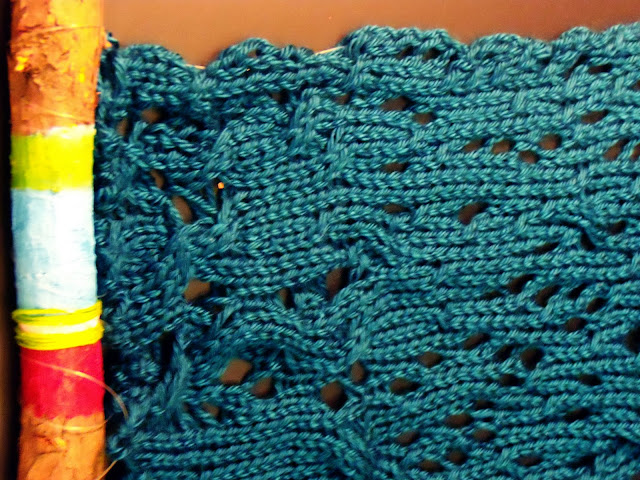

Since plans for using a computer-controlled knitting machine fell through (which ended up being a much larger part of the project than I had ever intended), I produced the above pattern by hand on my own machine.

Doing it by hand instead of on a computer-controlled machine took significantly longer (for something that was rather small), but it allowed me to incorporate certain textural stitches, such as cables, that would have been impossible otherwise. I really like the final product. The areas with 3×3 cables produced the best results, but those are really difficult to produce on the machine (you have to knit those rows manually since the stitches are pulled too tight). The 1×1 cables, which are abundant in the middle section, are much more subtle.

Conclusion

Although I am extremely pleased with the final swatch, ideally I wanted this project to operate on a much larger scale. Hopefully, the application and machine could be set up so that once users finish creating their own swatch and entering their measurements, a garment incorporating their unique texture could be printed out immediately, in a few hours rather than the 28-30 it took to produce the swatch. Working with more advanced shaders in Cinder and learning more than I ever thought possible about knitting machines and how they operate (and also how to fix terrible broken ones purchased from Craigslist!) were also excellent things to take away from this project. I’m very excited to pursue this project further: over the summer I am working on a prototype dress using the algorithm and on modding a machine to print it.