I am looking into implementing a novel approach to 2D camera rectification as an OFx plugin. This method requires no input from the user, provided your image carries EXIF data (which is almost always the case), so you can forget about making and using a checkerboard to rectify your image!

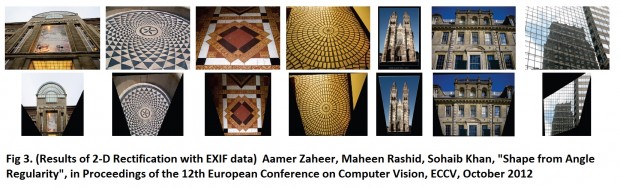

I learned about this technique when I took the Computer Vision course (taught by Dr. Sohaib Khan) in Fall 2012 at LUMS. We covered one of the recent publications by the CV Lab at LUMS: “Shape from Angle Regularity” by Zaheer et al. in Proceedings of the 12th European Conference on Computer Vision, ECCV, October 2012. This paper uses ‘angle regularity’ to automatically reconstruct structures from a single view. As part of their algorithm, Zaheer et al. first identify the planes in the image and then automatically rectify the image in 2D, relying solely on ‘angle regularity’. That’s the part I’m interested in.

Angle regularity is a geometric constraint that relies on the fact that in the structures around us (buildings, floors, furniture etc.), straight lines in 3D meet at a particular angle, most commonly 90 degrees. Look around your room, start counting the number of 90 degree angles you can find, and you’ll see what I mean. Zaheer et al. use the ‘distortion of this angle under projection’ as a constraint for 3D reconstruction. Quite simply, if you look at a plane from a fronto-parallel view, you will see the maximum possible number of 90 degree angles. That’s what we’ll search for: we look for the “… homography that maximizes the number of orthogonal angles between projected line-pairs” (Zaheer et al.).
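To make the objective concrete, here is a minimal sketch of the quantity being maximized: the number of line-pairs that come out orthogonal after warping by a candidate homography. Everything in it is my own illustration rather than code from the paper: the function names, the representation of a line as a pair of endpoints, and the 1 degree tolerance are all assumptions.

```python
import math

def apply_homography(H, p):
    """Map a 2D point p = (x, y) through a 3x3 homography H (nested lists)."""
    x, y = p
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return (u / w, v / w)

def angle_between(seg_a, seg_b):
    """Unsigned angle in degrees (0 to 90) between the directions of two segments."""
    (ax0, ay0), (ax1, ay1) = seg_a
    (bx0, by0), (bx1, by1) = seg_b
    dax, day = ax1 - ax0, ay1 - ay0
    dbx, dby = bx1 - bx0, by1 - by0
    cos_t = abs(dax * dbx + day * dby) / (math.hypot(dax, day) * math.hypot(dbx, dby))
    return math.degrees(math.acos(min(1.0, cos_t)))

def count_orthogonal_pairs(H, segments, tol_deg=1.0):
    """Count segment pairs that are within tol_deg of 90 degrees after warping by H."""
    warped = [(apply_homography(H, a), apply_homography(H, b)) for a, b in segments]
    count = 0
    for i in range(len(warped)):
        for j in range(i + 1, len(warped)):
            if abs(angle_between(warped[i], warped[j]) - 90.0) <= tol_deg:
                count += 1
    return count

# A unit square seen fronto-parallel: its 4 adjacent edge pairs are orthogonal.
corners = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
square = [(corners[i], corners[(i + 1) % 4]) for i in range(4)]

identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
# An arbitrary projective distortion, chosen only for illustration.
skewed = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.5, 0.0, 1.0]]

frontal_count = count_orthogonal_pairs(identity, square)
skewed_count = count_orthogonal_pairs(skewed, square)
```

The fronto-parallel square scores higher than the distorted one, which is the signal the search would exploit. Real images would of course need a line detector and a sensible tolerance, neither of which this sketch addresses.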

Following the algorithm used in the paper (and the MATLAB code available at http://cvlab.lums.edu.pk/zaheer2012shape/), I plan to generate a few more results to see how practical it is. From the results given in the paper, it seems very promising. The algorithm for 2D rectification detects the lines in the image, assumes line-pairs to be perpendicular, and then uses RANSAC to separate inlier from outlier line-pairs while optimizing two variables: camera pan and tilt (the focal length is known from the EXIF data). The MATLAB code relies on toolboxes provided with MATLAB (for example, for RANSAC), for which I should be able to find open-source C++ implementations. The algorithm, although conceptually straightforward, might not be as easy to implement and optimize in C++. I will work towards it and judge the time commitment it requires.
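As a rough sketch of the two-variable search, the snippet below parameterizes the rectifying homography as H = K R K⁻¹, with R built from pan and tilt and K from the focal length, and then picks the pan/tilt pair that yields the most orthogonal line-pairs. Several things here are my assumptions and not taken from the paper or its MATLAB code: the H = K R K⁻¹ form with the principal point at the origin and square pixels, the rotation order, the function names, and the synthetic rectangles. To keep it short, a plain exhaustive grid search stands in for RANSAC, and line detection is skipped entirely.

```python
import math

def matmul(A, B):
    """Product of two 3x3 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def rotation(pan_deg, tilt_deg):
    """R = Rx(tilt) * Ry(pan); the order is an assumption of this sketch."""
    p, t = math.radians(pan_deg), math.radians(tilt_deg)
    Ry = [[math.cos(p), 0.0, math.sin(p)], [0.0, 1.0, 0.0], [-math.sin(p), 0.0, math.cos(p)]]
    Rx = [[1.0, 0.0, 0.0], [0.0, math.cos(t), -math.sin(t)], [0.0, math.sin(t), math.cos(t)]]
    return matmul(Rx, Ry)

def homography(R, f):
    """H = K R K^-1 for focal length f in pixels, principal point at the origin."""
    K = [[f, 0.0, 0.0], [0.0, f, 0.0], [0.0, 0.0, 1.0]]
    K_inv = [[1.0 / f, 0.0, 0.0], [0.0, 1.0 / f, 0.0], [0.0, 0.0, 1.0]]
    return matmul(K, matmul(R, K_inv))

def warp(H, p):
    """Map a 2D point through H, including the projective division."""
    x, y = p
    u, v, w = (H[r][0] * x + H[r][1] * y + H[r][2] for r in range(3))
    return (u / w, v / w)

def is_orthogonal(seg_a, seg_b, tol_deg):
    """True if the two segments are within tol_deg of perpendicular."""
    dax, day = seg_a[1][0] - seg_a[0][0], seg_a[1][1] - seg_a[0][1]
    dbx, dby = seg_b[1][0] - seg_b[0][0], seg_b[1][1] - seg_b[0][1]
    cos_t = abs(dax * dbx + day * dby) / (math.hypot(dax, day) * math.hypot(dbx, dby))
    return cos_t <= math.sin(math.radians(tol_deg))

def score(pan_deg, tilt_deg, f, segments, tol_deg):
    """Number of orthogonal segment pairs after warping by H(pan, tilt)."""
    H = homography(rotation(pan_deg, tilt_deg), f)
    warped = [(warp(H, a), warp(H, b)) for a, b in segments]
    return sum(is_orthogonal(warped[i], warped[j], tol_deg)
               for i in range(len(warped)) for j in range(i + 1, len(warped)))

def search_pan_tilt(segments, f, grid_deg, tol_deg=0.1):
    """Exhaustive grid search (a stand-in for RANSAC) over pan and tilt in degrees."""
    best = max((score(p, t, f, segments, tol_deg), p, t) for p in grid_deg for t in grid_deg)
    return best[1], best[2], best[0]

def rectangle(x0, y0, x1, y1):
    """Four edges of an axis-aligned rectangle as endpoint pairs."""
    c = [(x0, y0), (x1, y0), (x1, y1), (x0, y1)]
    return [(c[i], c[(i + 1) % 4]) for i in range(4)]

# Synthetic check: distort two fronto-parallel rectangles with a known pan/tilt,
# then see whether the search recovers the rotation that undoes the distortion.
F = 800.0                      # hypothetical focal length in pixels
TRUE_PAN, TRUE_TILT = 10, -15  # hypothetical ground truth in degrees
frontal = rectangle(50, 50, 250, 250) + rectangle(-200, -150, -50, 0)
R_true = rotation(TRUE_PAN, TRUE_TILT)
R_inv = [[R_true[j][i] for j in range(3)] for i in range(3)]  # transpose = inverse
H_distort = homography(R_inv, F)
observed = [(warp(H_distort, a), warp(H_distort, b)) for a, b in frontal]

pan, tilt, n_orthogonal = search_pan_tilt(observed, F, range(-20, 21, 5))
```

With clean synthetic data every line-pair is an inlier, so there is nothing for RANSAC to reject. On real images, spurious line-pairs that are not perpendicular in 3D would dominate, which is presumably why the paper needs a robust estimator rather than a brute-force count like this one.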

Once I’m done coding the plugin, I want to make some cool examples that demonstrate the power of this algorithm. If the frame-to-frame optimization is fast (i.e. the last frame’s homography seeds the initial value for the next one), I could try to make this run in real time.
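The seeding idea could look something like the sketch below: start from the previous frame's pan/tilt and only search its neighborhood, shrinking the step when nothing improves. This greedy local search is my own guess at how to do it, not something the paper describes. `refine_from_seed`, `toy_score`, and the step sizes are hypothetical, and the toy score is a smooth stand-in for the real orthogonal-pair count.

```python
def refine_from_seed(score, seed, step=1.0, min_step=0.05):
    """Greedy local search around seed = (pan, tilt), both in degrees.

    Tries the 8 neighbors at the current step size, moves to the best one if
    it improves the score, and otherwise halves the step until it drops
    below min_step. Returns the best (pan, tilt) and the evaluation count.
    """
    best = seed
    best_score = score(*seed)
    evaluations = 1
    while step >= min_step:
        candidates = [(best[0] + dp * step, best[1] + dt * step)
                      for dp in (-1, 0, 1) for dt in (-1, 0, 1)
                      if (dp, dt) != (0, 0)]
        scored = [(score(p, t), (p, t)) for p, t in candidates]
        evaluations += len(scored)
        top_score, top = max(scored)
        if top_score > best_score:
            best, best_score = top, top_score
        else:
            step /= 2.0
    return best, evaluations

# Toy stand-in for the per-frame objective: it peaks at pan = 10.4, tilt = -14.7,
# as if the camera had moved slightly since the previous frame.
def toy_score(pan, tilt):
    return -((pan - 10.4) ** 2 + (tilt + 14.7) ** 2)

previous_frame = (10.0, -15.0)  # hypothetical result from the last frame
(pan, tilt), evaluations = refine_from_seed(toy_score, previous_frame)
```

One caveat: the real objective is a count, so it is piecewise constant and a greedy search like this can stall on a plateau. A smooth surrogate, such as the summed deviation from 90 degrees over the inlier pairs, might behave better, but that is something to find out by experiment.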

I have not yet come across a C++ implementation of this technique, and the only OFx plugin for camera rectification that exists right now (ofxMSAStereoSolver) depends on the checkerboard approach.

Paper: http://cvlab.lums.edu.pk/sfar/

Aamer Zaheer, Maheen Rashid, Sohaib Khan, “Shape from Angle Regularity”, in Proceedings of the 12th European Conference on Computer Vision, ECCV, October 2012

Related OFx Plugin: ofxMSAStereoSolver by memo