My capstone project has changed significantly since the initial proposal for a State of the Union Text Visualization. I decided instead to make something less serious and more whimsical.

The Self-Help Help Line is an introspective service designed to help the user pinpoint exactly what is bothering them today. Using hierarchical data from the WordNet database, this phone service answers a call and, through a series of menus, leads the caller from broad concepts to increasingly specific ones that may be bothering them.

In practice, traversing this huge hierarchy is fairly random and, hopefully, a little entertaining. Few people have been able to find the “problem” they’re having; instead, they end up in unrelated subtrees such as musical genres or types of public speeches.
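To make the menu idea concrete, here is a minimal sketch of how a sequence of keypad presses could walk a hierarchy from broad to specific. The `hierarchy` object is a tiny hand-made stand-in, not real WordNet data, and the names `menuFor` and `walk` are hypothetical, chosen only for illustration.

```javascript
// Hypothetical, hand-made slice of a WordNet-like hierarchy (illustration only).
// Each key is a concept; each value lists its narrower concepts.
const hierarchy = {
  'start': ['abstract entity', 'event', 'state'],
  'state': ['feeling'],
  'feeling': ['sadness', 'happiness'],
  'sadness': ['melancholy'],
  'melancholy': ['brooding', 'pensiveness'],
};

// Return the menu options for a concept (an empty array means a leaf).
function menuFor(concept) {
  return hierarchy[concept] || [];
}

// Follow a sequence of 1-based keypad digits from the root and
// return the list of concepts visited along the way.
function walk(digits, root = 'start') {
  const path = [root];
  let current = root;
  for (const digit of digits) {
    const choice = menuFor(current)[digit - 1];
    if (choice === undefined) break; // invalid key press, or nothing narrower
    path.push(choice);
    current = choice;
  }
  return path;
}

// Pressing 3, 1, 1, 1, 2 in this toy hierarchy:
console.log(walk([3, 1, 1, 1, 2]).join('->'));
// prints "start->state->feeling->sadness->melancholy->pensiveness"
```

In a real call, each digit would arrive one at a time from the phone service, so the walk would advance one step per request and not run over a complete array.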

To-do List

1. Make WordNet data usable in a Node.js environment.

2. Create logic around selecting trees through a series of menus.

3. Optimize database calls by caching most expensive queries.

4. Connect to Twilio Phone service.

5. Create website to show the different paths people have taken.

6. Create short promo video.
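The caching step in item 3 could take many forms. One simple possibility, sketched here under assumptions, is to memoize lookups in memory so that each concept is queried at most once. The `slowHyponymQuery` function is a hypothetical stand-in for a real database call, and `memoize` is an illustrative helper, not part of any library.

```javascript
// Wrap a lookup function so each distinct key is computed at most once.
function memoize(lookup) {
  const cache = new Map();
  return function cached(key) {
    if (!cache.has(key)) {
      cache.set(key, lookup(key)); // first request: run the lookup and store it
    }
    return cache.get(key); // later requests: served from memory
  };
}

// Hypothetical stand-in for an expensive WordNet query; it counts its calls
// so the effect of the cache is visible.
let queryCount = 0;
function slowHyponymQuery(concept) {
  queryCount += 1;
  return [`${concept} (narrower 1)`, `${concept} (narrower 2)`];
}

const cachedHyponyms = memoize(slowHyponymQuery);
cachedHyponyms('entity');
cachedHyponyms('entity'); // served from the cache, no second query
console.log(queryCount); // prints 1
```

Because the top-level menus are heard by every caller, the broadest concepts are likely the ones that benefit most from this kind of cache.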

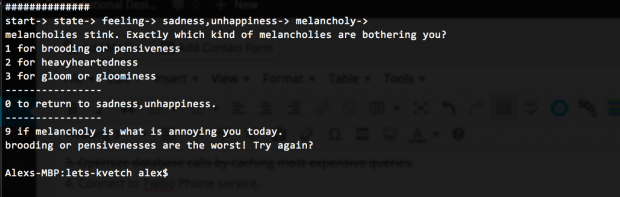

Example Trees

start->

abstract entity->

communication->

expressive style,style->

genre,music genre,musical genre,musical style->

african-american music,black music->

hip-hop or raps

start->

event->

act,deed,human action,human activity->

speech act->

address,speech->

lecture or public lectures

start->

state->

feeling->

sadness,unhappiness->

melancholy->

brooding or pensivenesses