(Last week, I posted a longer “documentation” post outlining my process. In light of some updates, I thought I would also post a condensed version, which is hopefully easier to take in quickly.)

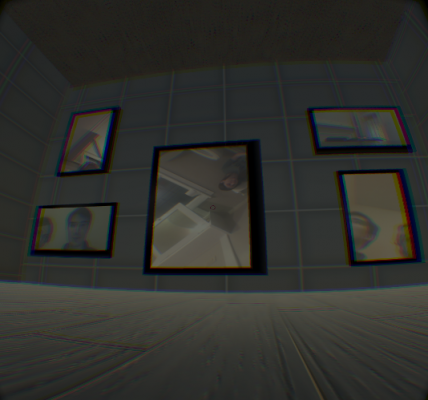

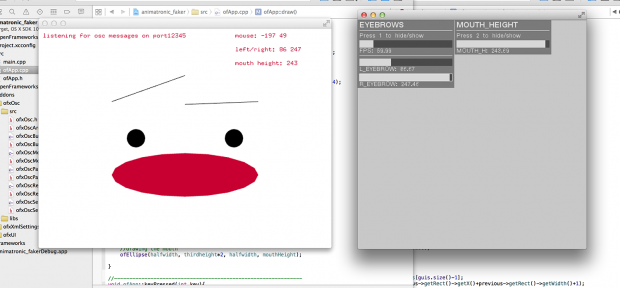

Some screenshots from the experience

Overview

A Theatrical Device is an original play seen through the front-facing cameras of the laptops, desktops and cell phones used by the actors. These devices provide multiple, simultaneous perspectives on the same timeline across several rooms, following the progression of different characters. Audiences find their own way through the play by exploring it in a 3D virtual reality world (via the Oculus Rift).

The Play

Inspiration

It wasn’t until very recently (probably within the last five years) that high-quality, front-facing cameras became standard on cell phones, laptops, tablets and other consumer electronics. Suddenly, whenever we’re staring at a screen, we are also staring into a camera.

This is hardly an original observation. Many of my friends live in real fear that their front-facing cameras might be accessed by hackers, the NSA or bored strangers on a website, and no amount of hardware indicator lights can reassure them. Some cover these cameras with stickers, disable them entirely, or go so far as to destroy them. I think these reactions say a lot about just how complete a voyeur’s picture of us could be: how much he or she would be able to discern about our private lives by watching us through our screens. I started to wonder: what exactly would someone be able to discern through these perspectives? How much intimacy could the audience have with their subject? What kinds of stories might we be able to tell?

The advent of these cameras turns out to be very interesting from a storytelling perspective. While I’m by no means a film director, I do know that shooting multiple, simultaneous perspectives of the same scene is something of an impossible dream for many filmmakers. For one, assuming you can even get hold of multiple cameras, filming from multiple angles requires hiding the cameras from one another: no movement, no interior angles, and a very limited number of possible shots. However, it’s 2015: there are plenty of contexts, conflicts and actions in which cell phones and laptops are natural, invisible parts of the landscape. Using the devices’ own cameras lets us seamlessly integrate any number of cameras into a scene, which compels us to ask: exactly what new kinds of experiences are we now able to create?

Story

To me, writing the script became the most interesting part of the project. After all, to justify a cool new platform, you need a story that it can tell better than any other medium.

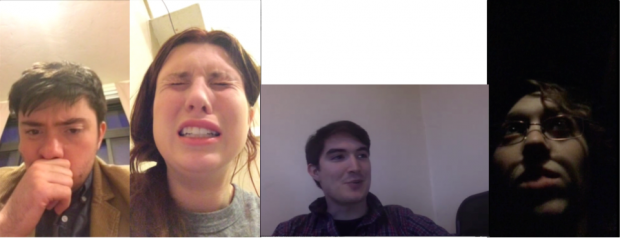

A Theatrical Device centers on three college alums visiting their former classmate, still a student, two years after graduation. The student, Hattie (played by me, for no more meaningful reason than that the original actor got sick on shooting day), suffers from uncontrollable bouts of emotional instability. The reunion grinds to a halt after a casual comment prompts her to hole up in a bedroom and break down, leaving her guests struggling to figure out what to do next. My hope is that, depending on the perspective, the play reads as either a comedy or a tragedy: from the outside, you have friends trying to reconnect in spite of the awkwardness of their friend’s ill-timed “moment”. Other perspectives, however, reveal the interior struggles of Hattie and her friends, showing what it means to be unable to hide, cure, or explain one’s inner turmoil.

At its core, each character’s story revolves around the notion of sensitivity: to what extent must we go out of our way to accommodate others? How “real” can someone’s struggle be when it’s all in their head? I’ll admit, the script was hastily written, and it perhaps presents too much of a bleeding-heart liberal perspective on these questions to offer particularly complex commentary. In future iterations, though, I’d like to steer each character more strongly toward a different conclusion.

(For more about the process, please see the previous documentation post.)

The Virtual Reality

Inspiration

Virtual reality is pretty new, and while there are a lot of very cool things it will one day do well, many of them simply aren’t possible, or even passable, with an Oculus DK2. One thing the DK2 is very good at, I’m told, is providing realistic cinema experiences. While movement and rendering are still iffy, VR cinemas showing 2D films are able to create a surprisingly strong sense of presence.

Of course, no one finds that particularly exciting. However, it meant the DK2 could be very effective at displaying a movie: MY movie. In VR I could have as many screens as I wanted, at any size I wanted. The DK2 was well equipped to serve as the perfect 3D interface for navigating through films.

On that note, I’m very interested in what sorts of new interfaces we can create with VR. In VR, the two banes of any set designer’s existence (the cost of construction and the laws of physics) can be ignored entirely. How does this change the ways in which we interface with data or explore an idea? How might it help us see things differently?

Implementation

Ideally, I would have come up with some kind of interface that’s literally impossible in the physical world: something mind-blowing and earth-shattering that can only be done in VR. In all honesty, though, someone very rich and reasonably clever could probably build a physical version of my VR world. That falls short of my expectations, but I hope to rectify it in another iteration (by which point I will hopefully be more clever).

The world is a series of rooms, like a gallery. Each room in the gallery is a scene in the play, with framed videos on the walls showing the many camera angles. Walking into a room restarts its scene from the beginning, and an audience member can rest a cursor on a video to bring it into full focus. Admittedly, this is all a little hard to describe.
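To make the room mechanics a little easier to picture, here is a minimal Python sketch of the two rules described above: entering a room restarts its scene, and holding the cursor on one video long enough brings it into focus. It is not the project’s code; the `Room` and `Gallery` classes, the `enter` and `update` methods, and the 1.5-second `dwell_seconds` threshold are all hypothetical, and a real VR build would presumably drive `update` from the engine’s per-frame loop.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Room:
    """One gallery room: a single scene plus the camera-angle videos on its walls."""
    scene_name: str
    video_ids: list[str]
    playhead: float = 0.0                # seconds elapsed in this room's scene
    focused_video: Optional[str] = None  # video currently brought into full focus


@dataclass
class Gallery:
    """Hypothetical controller tracking which room the viewer is in and what they look at."""
    rooms: dict[str, Room]
    current: Optional[str] = None
    gaze_target: Optional[str] = None
    gaze_time: float = 0.0
    dwell_seconds: float = 1.5  # assumed time the cursor must rest on a video

    def enter(self, room_name: str) -> None:
        # Walking into a room restarts its scene from the beginning.
        room = self.rooms[room_name]
        room.playhead = 0.0
        room.focused_video = None
        self.current = room_name
        self.gaze_target = None
        self.gaze_time = 0.0

    def update(self, dt: float, gazed_video: Optional[str]) -> None:
        # Called once per frame with the video under the cursor (or None).
        if self.current is None:
            return
        room = self.rooms[self.current]
        room.playhead += dt
        if gazed_video not in room.video_ids:
            gazed_video = None  # cursor is on a wall, the floor, or nothing playable
        if gazed_video != self.gaze_target:
            # Cursor moved to something new: restart the dwell timer.
            self.gaze_target = gazed_video
            self.gaze_time = 0.0
        elif gazed_video is not None:
            self.gaze_time += dt
            if self.gaze_time >= self.dwell_seconds:
                room.focused_video = gazed_video
```

Because `enter` always rewinds the playhead, walking back into a room replays its scene from the top, which is what lets a viewer revisit scenes in whatever order they like.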

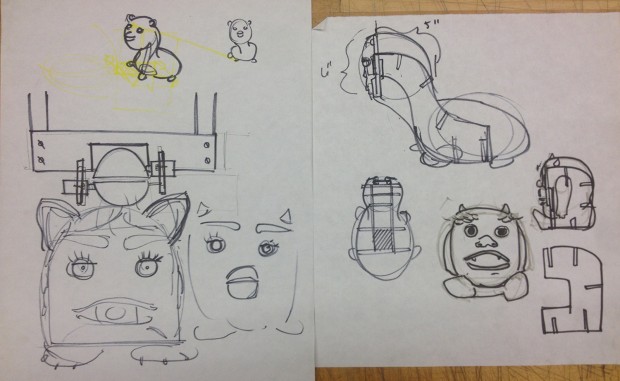

Each room provides a slightly different way to interact with the videos. In one, there is a fake living room with actual cell phones and laptops representing each perspective. In another, the audio perspective is chosen at random, and you watch all the videos at once on a single wall as one story is told. I tried to make each room larger and more fantastical as the play progresses, to give each new scene a growing sense of gravity and power. By the end, the videos are literally towering over you.
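The random-audio room can be sketched in the same spirit. Assuming, hypothetically, that each perspective’s video carries its own audio track, the function below picks one track at random when the scene starts and mutes the rest, so every video stays visible but only one character’s story is heard. The function name and the volume-per-video representation are illustrative, not taken from the project.

```python
import random
from typing import Optional


def pick_audio_perspective(
    video_ids: list[str], rng: Optional[random.Random] = None
) -> dict[str, float]:
    """Return a volume per video: 1.0 for one randomly chosen perspective, 0.0 for the rest."""
    if not video_ids:
        raise ValueError("room has no videos")
    if rng is None:
        rng = random.Random()  # unseeded: a different perspective on each visit
    chosen = rng.choice(video_ids)
    return {vid: (1.0 if vid == chosen else 0.0) for vid in video_ids}
```

Passing a seeded `random.Random` makes the choice reproducible, which is handy for testing; leaving it unseeded means each re-entry into the room may tell the scene through a different character.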

Since each scene is in a different room, you can enter and re-enter a scene at will. This gives the audience the option of exploring the same scene multiple times and lets the play unfold in a loosely nonlinear fashion.

Future Work

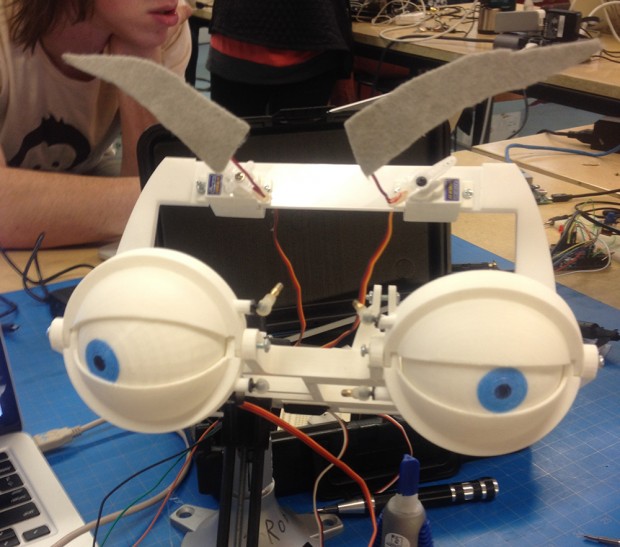

At the end of the day, so much about this platform and process was new to me that I saw this very much as a first draft. Since it all went pretty well, I’d love to try it again with another iteration. First, I’d like to revise my script, integrating what I learned about how the many narratives were communicated. Second, I’d like to organize my shooting process better, to avoid the costly setbacks of phones running out of memory or battery, as well as the time spent synchronizing footage in post. Finally, I’d like to create a less amateur VR world and make use of the technology on hand to create truly novel ways to experience media.

When I began, I wasn’t sure how interesting or effective this platform would be. Now, I feel like it has a ton of power and potential. I’ll upload the final version of this iteration soon, but I hope to have something even better in the future.

I will also post a video once I figure out how to do that! Apparently streaming game footage is something everyone can do except me!

Find the source at github.com/amwatson/Device-Theatre