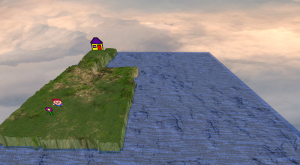

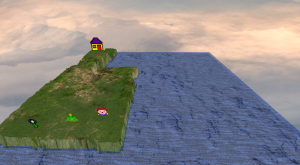

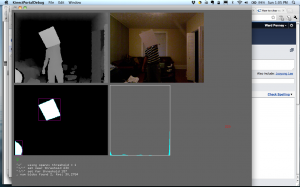

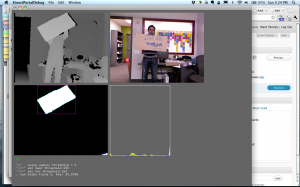

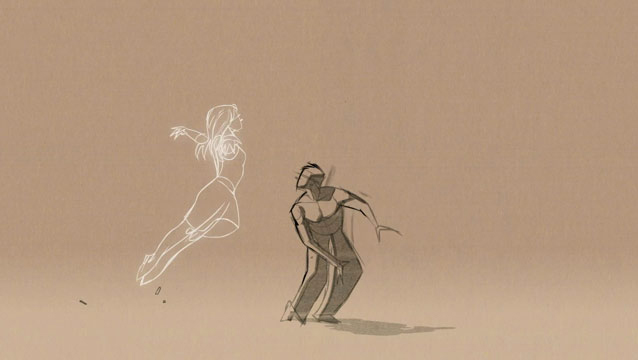

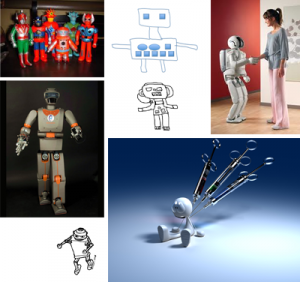

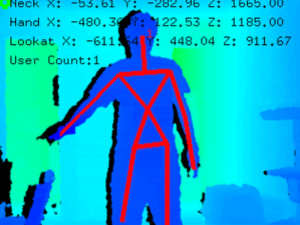

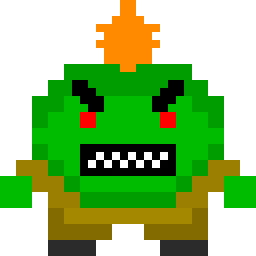

This weekend, I finished a basic build of a game using the Magrathea system. The game is for any number of players, who build a landscape so that the A.I.-controlled character (shown above) can walk around collecting flowers and avoiding trolls.

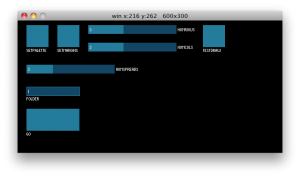

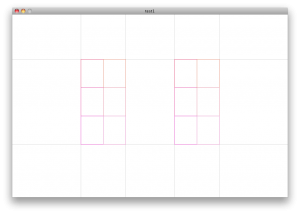

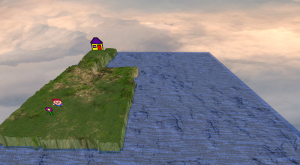

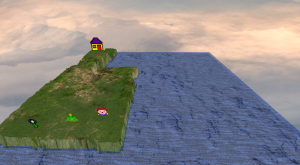

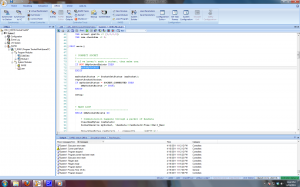

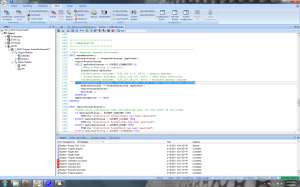

Building this game meant implementing a lot of features, such as automated systems for moving sprites and keeping track of which sprites exist. Much of my recent work went into finding modular, expandable ways to do this. The sprites can now move around the world, avoid stepping into water, display and scale properly, and so on.

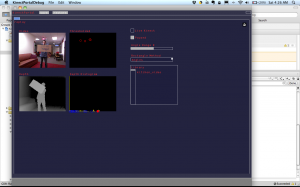

The design of the game is very simple right now. The hero is extremely dumb: he can basically only try to step towards the flower. He’s smart enough not to walk into water or up or down cliffs that are too steep, but not smart enough to find another path. The troll is pretty dumb too, since he can only step towards the hero (though he can track a moving hero).
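To make the hero’s (and troll’s) limits concrete, here is a minimal Python sketch of a greedy, one-cell-at-a-time step. It assumes the terrain is a grid of integer heights. The names `greedy_step` and `can_step`, the `WATER_LEVEL` threshold, and `MAX_CLIMB` are all hypothetical stand-ins, not the actual Magrathea code. The character only ever considers the single neighboring cell in the direction of its target, so an obstacle in that cell stops it cold even when a detour exists.

```python
# Hypothetical terrain model: a 2D grid of integer heights.
# Cells at or below WATER_LEVEL count as water; MAX_CLIMB is the largest
# height difference a sprite will step up or down (both values assumed).
WATER_LEVEL = 0
MAX_CLIMB = 1


def sign(n: int) -> int:
    """Return -1, 0, or 1 depending on the sign of n."""
    return (n > 0) - (n < 0)


def can_step(heights, frm, to):
    """True if `to` is on the grid, not water, and not too steep from `frm`."""
    r, c = to
    if not (0 <= r < len(heights) and 0 <= c < len(heights[0])):
        return False
    if heights[r][c] <= WATER_LEVEL:
        return False
    return abs(heights[r][c] - heights[frm[0]][frm[1]]) <= MAX_CLIMB


def greedy_step(heights, pos, target):
    """Take one step straight toward `target`, or stay put if blocked.

    No pathfinding: the only candidate is the one neighboring cell
    (diagonals allowed) in the direction of the target.
    """
    nxt = (pos[0] + sign(target[0] - pos[0]), pos[1] + sign(target[1] - pos[1]))
    if nxt != pos and can_step(heights, pos, nxt):
        return nxt
    return pos


if __name__ == "__main__":
    heights = [
        [1, 1, 1, 1],
        [1, 0, 1, 1],  # (1, 1) is water
        [1, 1, 3, 1],  # (2, 2) sits on top of a cliff
    ]
    # The hero wants the flower at (2, 2), but the diagonal cell (1, 1) is
    # water, so he stays put instead of walking around it.
    print(greedy_step(heights, (0, 0), (2, 2)))  # (0, 0)
    # The troll re-targets the hero's current position on every tick.
    troll = (2, 3)
    for _ in range(3):
        troll = greedy_step(heights, troll, (0, 0))
    print(troll)  # (0, 0)
```

In this sketch, the troll reuses the same function with the hero’s position as its target. Because that target is re-read every tick, it is enough to follow a moving hero.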

I’m not keeping track of any sort of score right now (though I could count how many flowers you’ve collected, or make that affect the world), because I’m concerned about the game eclipsing the rest of the tool. I think that’s what I’m struggling with now.

Basically, I’m nervous that the ‘game’ isn’t compelling enough, and that it’s pulled the focus away from the fun, interesting part of the project (building terrain) and pushed it in another direction (waiting while some dumb asshole sprite walks across your arm).

That said, I do think watching the sprites move around and grab stuff is fun. But the enemies are too hard to deal with reliably, and the hero is a little too dumb to trust to do anything correctly, so he needs too much constant babysitting.

I also realize that I’ve been super-involved with this for the last 72 hours, so this is totally the time when I need feedback. I think the work I’ve done has been put to good use: I’ve learned how to code behaviors, display sprites better, smooth their movement, make sure they’re placed onto existing terrain, etc. What I’m trying to decide now is whether I should keep refining this gameplay or turn it into more of a sandbox. Here’s a theoretical design for how that could work (note: all of the framework for this is already implemented, so it would mostly require creating more graphical elements):

The user(s) build terrain, which is populated with a few (3? 4?) characters who wander around until something (food, a flower, each other) catches their attention. When this happens, a thought balloon pops up over their head (or as part of a GUI above the terrain? That would obscure less of the action) showing their desire for that thing, and they start (dumbly) moving towards it. When they get to it, they play a short animation. Perhaps they permanently affect the world (pick a flower, then scatter seeds that grow more flowers?).

This may sound risky, or like I’m in a crisis, but what I’ve developed so far is essentially what’s described above, with one character, one item, and a malicious element (the troll). So this path would really just extend what I’ve already done, only in a different direction than the game.
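The wander, notice, approach, act loop in the sandbox design maps naturally onto a small per-character state machine. Below is a minimal Python sketch of that idea. The state names, the `sight` radius, the `things` dictionary, and the `update` function are hypothetical illustrations, not the project’s existing framework. Terrain checks such as water and cliffs are left out to keep it short. Other characters could be added to `things` to cover the “each other” case.

```python
import random
from dataclasses import dataclass
from enum import Enum, auto
from typing import Dict, Optional, Tuple

Pos = Tuple[int, int]


class State(Enum):
    WANDER = auto()  # drifting around with no goal
    SEEK = auto()    # wants something: thought balloon shown, walking to it
    ACT = auto()     # arrived: play a short animation / affect the world


@dataclass
class Character:
    name: str
    pos: Pos
    sight: int = 3                    # hypothetical "notice" radius, in cells
    state: State = State.WANDER
    goal: Optional[Pos] = None
    balloon: Optional[str] = None     # what the thought balloon shows, if any


def dist(a: Pos, b: Pos) -> int:
    """Chebyshev distance, matching 8-way movement."""
    return max(abs(a[0] - b[0]), abs(a[1] - b[1]))


def _sign(n: int) -> int:
    return (n > 0) - (n < 0)


def step_toward(pos: Pos, target: Pos) -> Pos:
    """Dumb one-cell step toward the target (no pathfinding)."""
    return (pos[0] + _sign(target[0] - pos[0]), pos[1] + _sign(target[1] - pos[1]))


def update(ch: Character, things: Dict[Pos, str], rng: random.Random) -> None:
    """Advance one character by one tick. `things` maps position -> label."""
    if ch.state is State.WANDER:
        seen = [p for p in things if dist(ch.pos, p) <= ch.sight]
        if seen:
            # Something caught its attention: pick the nearest, show a balloon.
            ch.goal = min(seen, key=lambda p: dist(ch.pos, p))
            ch.balloon = things[ch.goal]
            ch.state = State.SEEK
        else:
            dr, dc = rng.choice([(-1, 0), (1, 0), (0, -1), (0, 1)])
            ch.pos = (ch.pos[0] + dr, ch.pos[1] + dc)
    elif ch.state is State.SEEK:
        ch.pos = step_toward(ch.pos, ch.goal)
        if ch.pos == ch.goal:
            ch.state = State.ACT
    elif ch.state is State.ACT:
        # e.g. pick the flower; scattering seeds could spawn new entries here
        things.pop(ch.goal, None)
        ch.goal, ch.balloon = None, None
        ch.state = State.WANDER
```

Keeping each behavior as a separate state means new reactions (eating food, greeting another character) can be added as new states or new `ACT` effects without touching the movement code.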

I’m pretty pleased with my progress, and feel that with feedback, I’ll be able to decide which direction to go in. If people want to playtest, please let me know!!

(Also, I realize some sprites (THE HOUSE) don’t fully fit into the same world as everything else yet; it’s a placeholder/an experiment.)

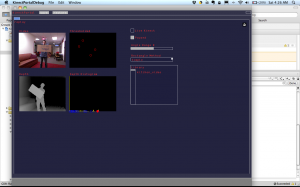

Screenshots: