This post documents the final project carried out by Sama Kanbour and Afnan Fahim.

Couplets

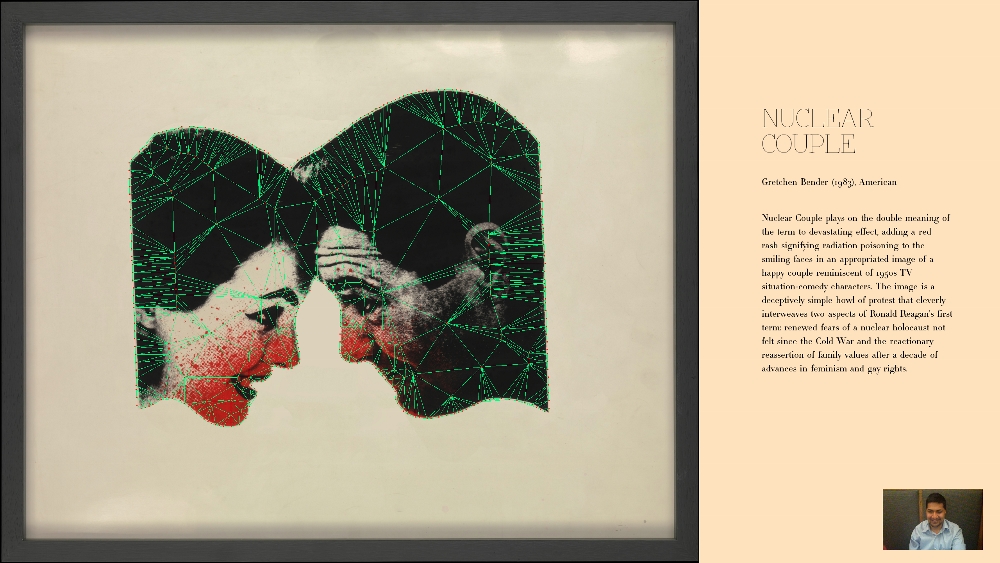

For our final project, we built a way for people to interact with artworks using their face as a puppeteering tool. Visitors to the project use their face to distort and puppeteer images of male/female “couples”. Each half of the user’s face controls one member of the couple.

We found the couples by exhaustively searching the online image archives made available by the Metropolitan Museum of Art. We then edited these images using Photoshop to make the edges of the couples clear, and then used computer vision techniques to find contours of the bodies and/or faces of each of the couples. We then triangulated the couples, and built puppets from the resulting mesh. We then used a face-tracker to detect the facial expressions of the user, and then used this data to control the couples’ puppets.

The project was built with the openFrameworks arts-engineering toolkit, and various addons including ofxCV, ofxDelaunay, ofxPuppet, and ofxFaceTracker. Source code for the project is available here: https://github.com/afahim/Mimes

Tweet:

We brought couples from the Met museum back to life.

Abstract:

This interactive art piece brings fourteen couples from the Metropolitan Museum back to life. It allows people to discover the stories of different couples in history. Viewers can animate facial expressions of these couples by use of puppeteering. The left half of the viewer’s face puppeteers the male partner, while the right half of the face puppeteers the female partner. By using the two halves of their face, the viewer can simulate the conversation that was potentially happening between these couples back in history.

Narrative:

This interactive art piece brings fourteen carefully selected couples from the Metropolitan Museum of Art back to life. It allows people to discover the stories of different couples in history. Viewers can animate facial expressions of these couples by use of puppeteering. The left half of the viewer’s face puppeteers the male partner, while the right half of the face puppeteers the female partner. By using the two halves of their face, the viewer can simulate the conversation that was potentially happening between these couples back in history.

We desire to make historical art more tangible and accessible to today’s audience. We hope to help garner meaningful interactions between the audience and our selected artwork, and perhaps interest the audience to learn more about the artwork presented.

The piece was built using OpenFrameworks. We used Kyle McDonald’s ofxFaceTracker to detect the viewer’s facial features; ofxCV and ofxDelaunay for creating a mesh out of couples; and ofxPuppet to animate their expressions. Oriol Messia’s prototypes helped kickstart the project. The artworks were handpicked from the Metropolitan Museum of Art’s online Collections Pages. The project was carried out under supervision of Professor Golan Levin, with additional direction by Professor Ali Momeni.

Video:

Couplets : https://vimeo.com/96155271