zaport-lookingoutwards07

“Armory Captures” (2015), Smart Objects

“Armory Captures” is an artwork that creates an augmented reality gallery space of the 2015 Armory Show. The work uses the phone app 123D Catch to create 3D scans of physical artworks, which then appear in AR in the show. When visitors enter the gallery, they are prompted to use their phones to scan QR codes on the walls that are linked to artworks from the Armory Show. Visitors can thus virtually engage with the gallery space and the pieces that were displayed in the Armory Show. This piece makes me think about accessibility in art and the exclusivity that keeps many people away from art venues. When significant artworks are transformed into this format, they can potentially reach communities and populations that would otherwise not have access to such works. The piece also makes me think about the reproduction of artwork. Unlike photography, AR and VR can provide spatial, three-dimensional data about a piece, so they come closer to providing an experience of the real object. The relationship between authenticity and accessibility, as it plays out here, is interesting to think about.

Jackalope-LookingOutwards7

I really like the AR Super Mario Bros made by Abhishek Singh. His documentation can be watched here:

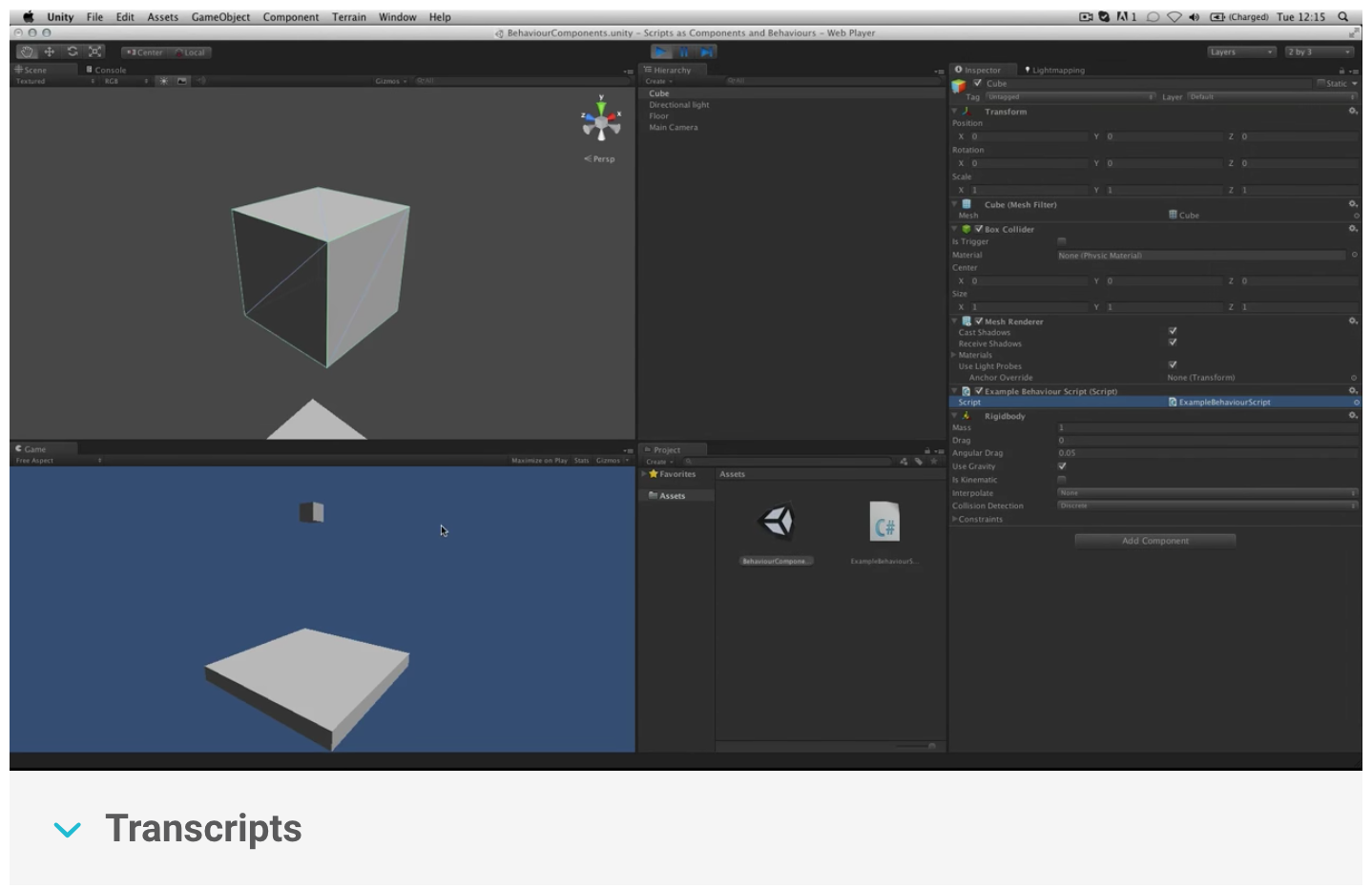

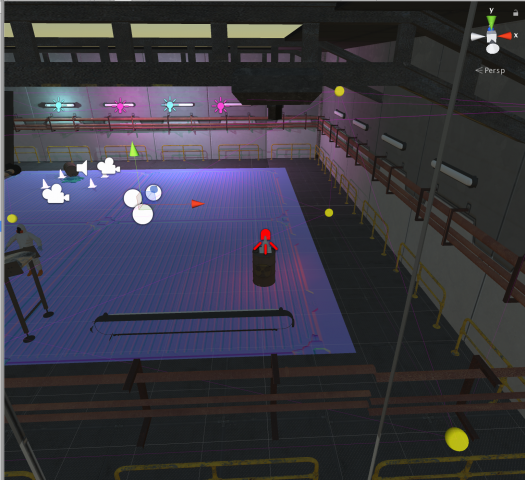

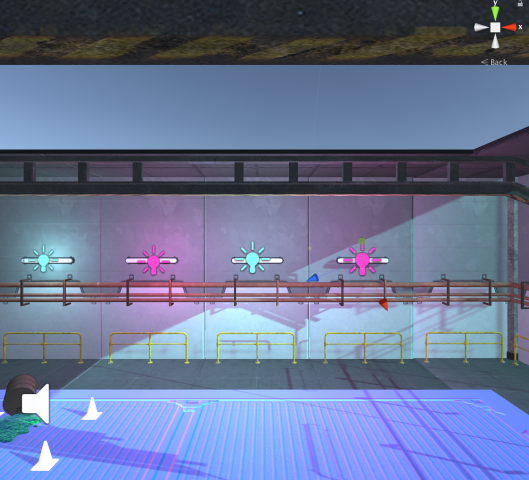

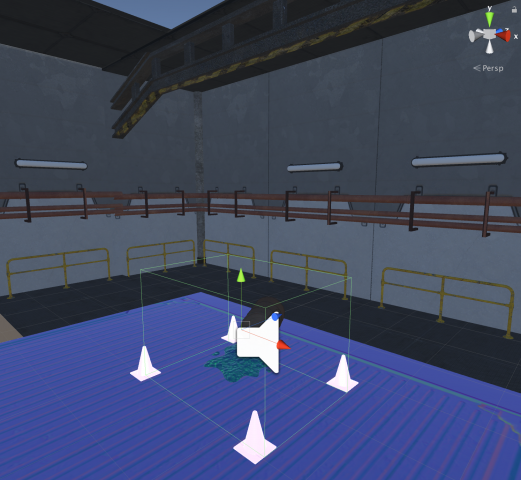

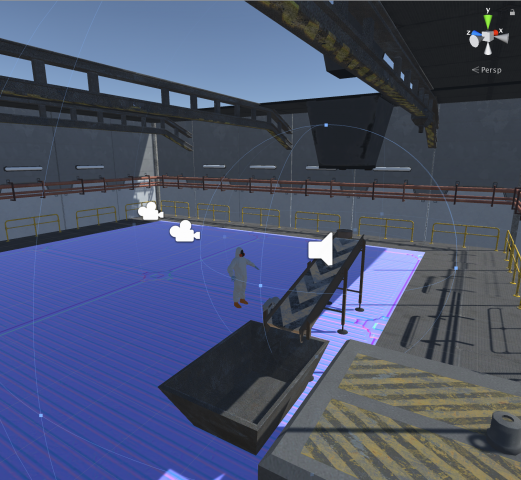

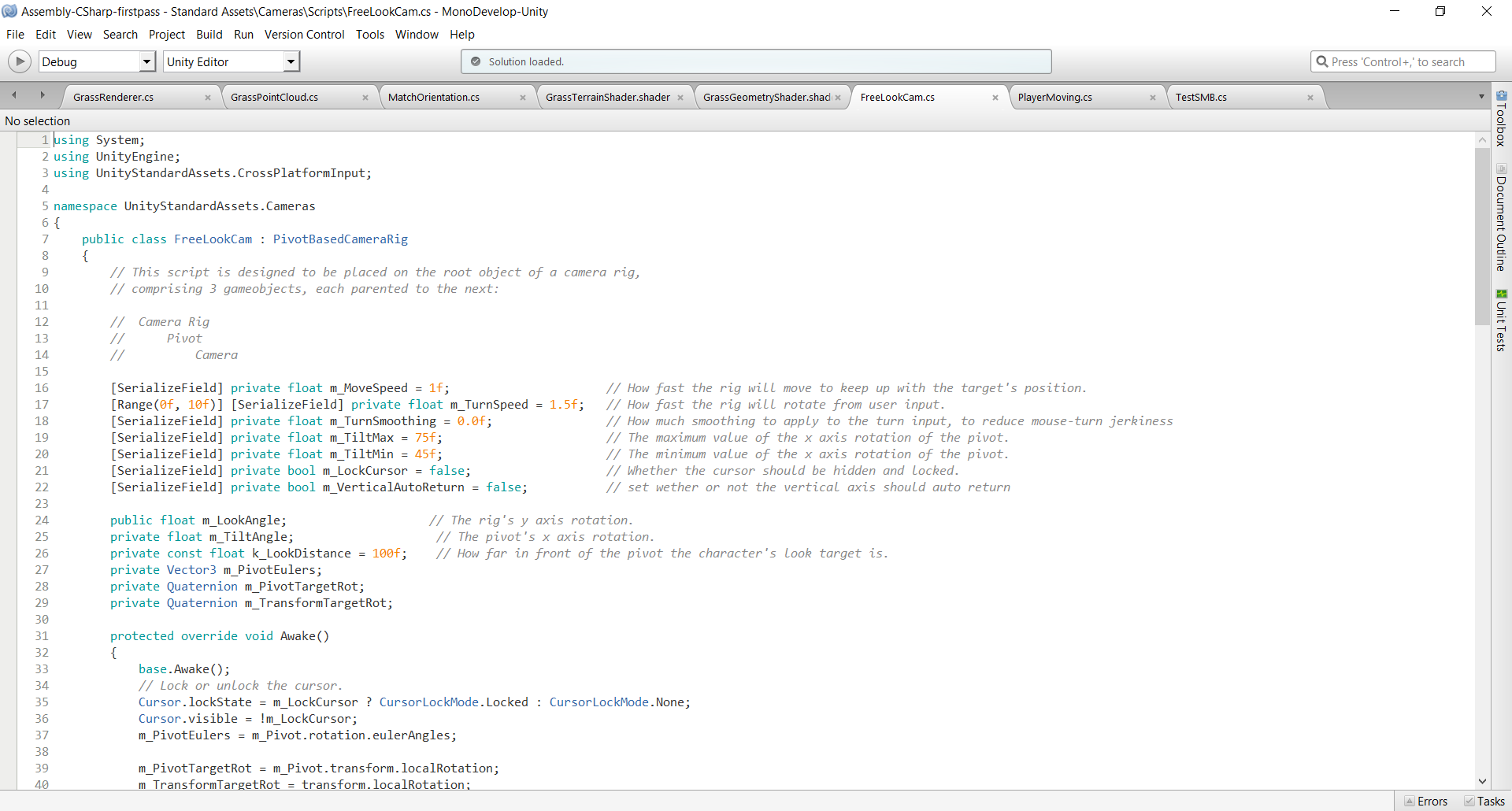

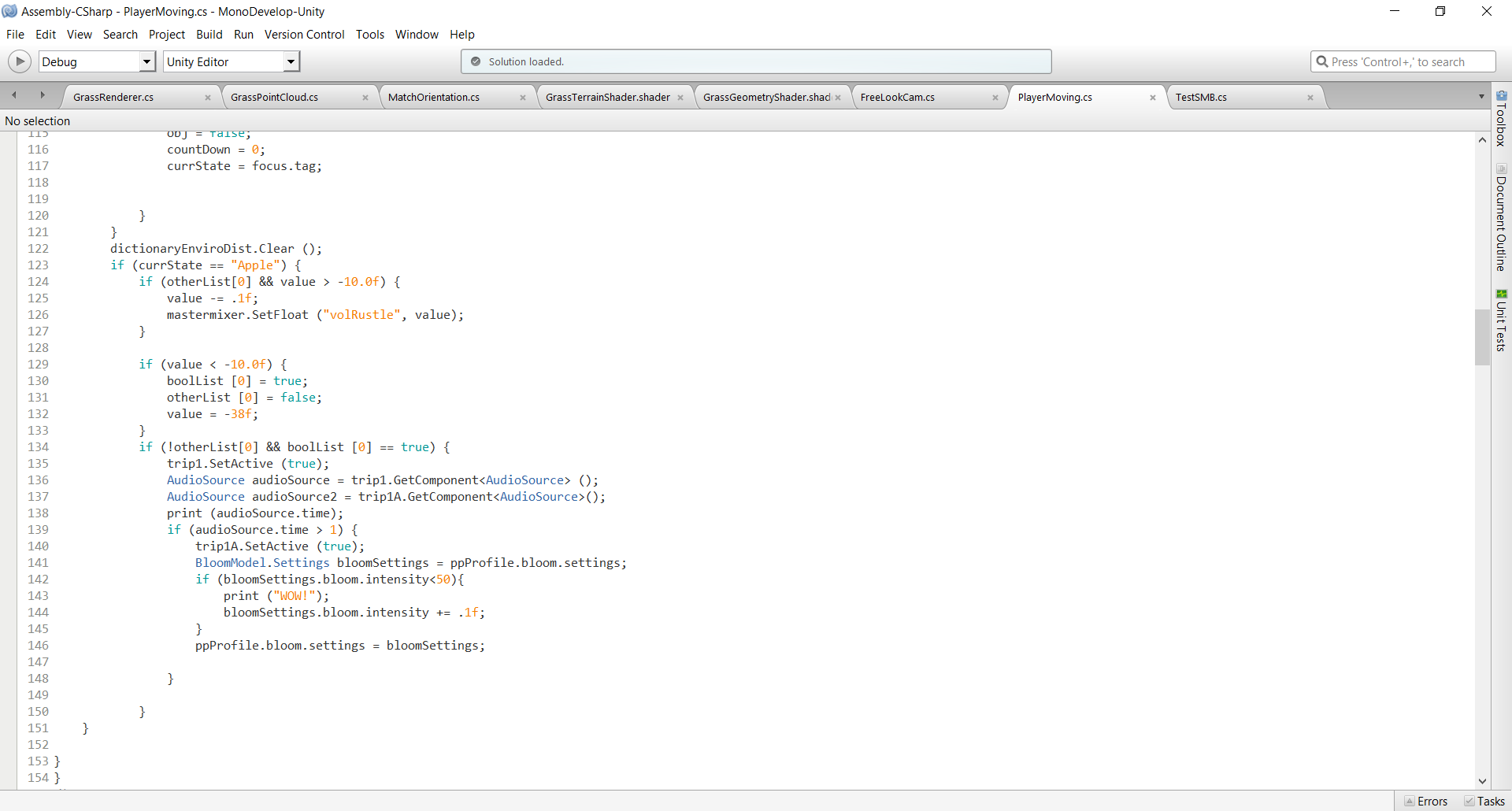

I love this project because the idea is fun, and because I’ve recently been much more active in Parkour Club than before, so the idea of running around, jumping, and doing wall jumps and stuff is very appealing. This project also kind of reminds me of Pokemon Go, which, for all its flaws, was so exciting; the month it came out was one of the happiest and most exciting I’ve had in quite a while. The graphics are also well integrated and look nice, although I imagine the game might be a bit disorienting or overwhelming to play. It also seems hard to see where you’re going, so it would be pretty easy to run into people or things while playing. He doesn’t have much documentation or explanation. He built the project by himself in Unity 3D and Fusion 360, using a HoloLens.

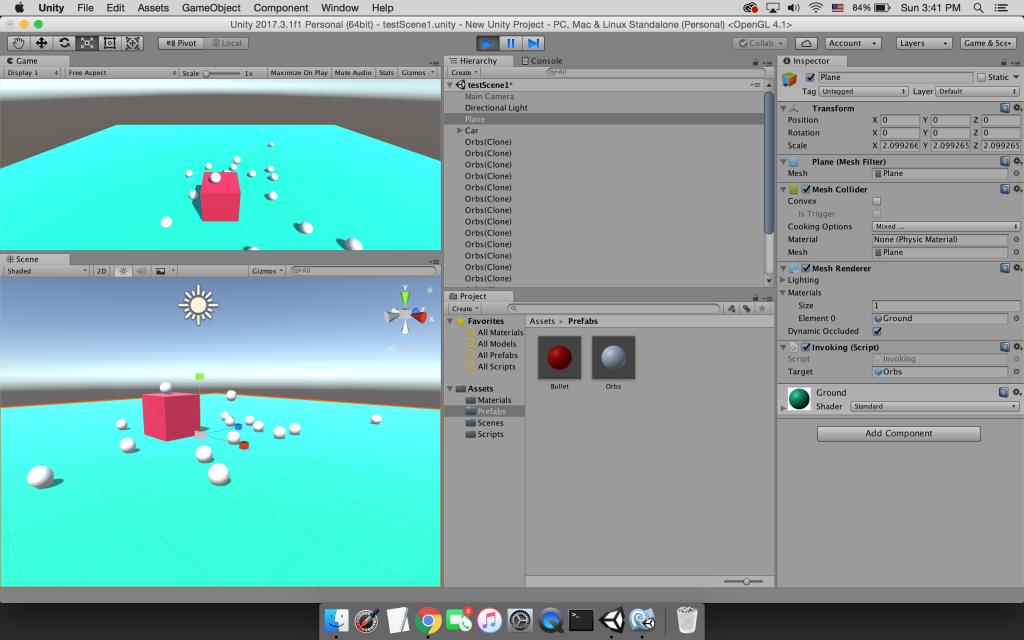

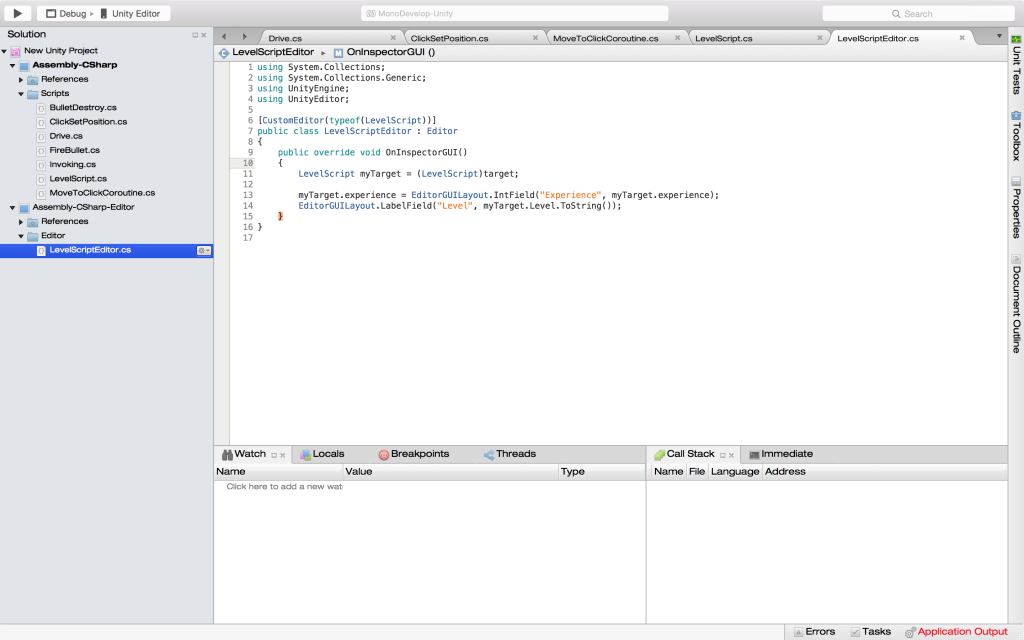

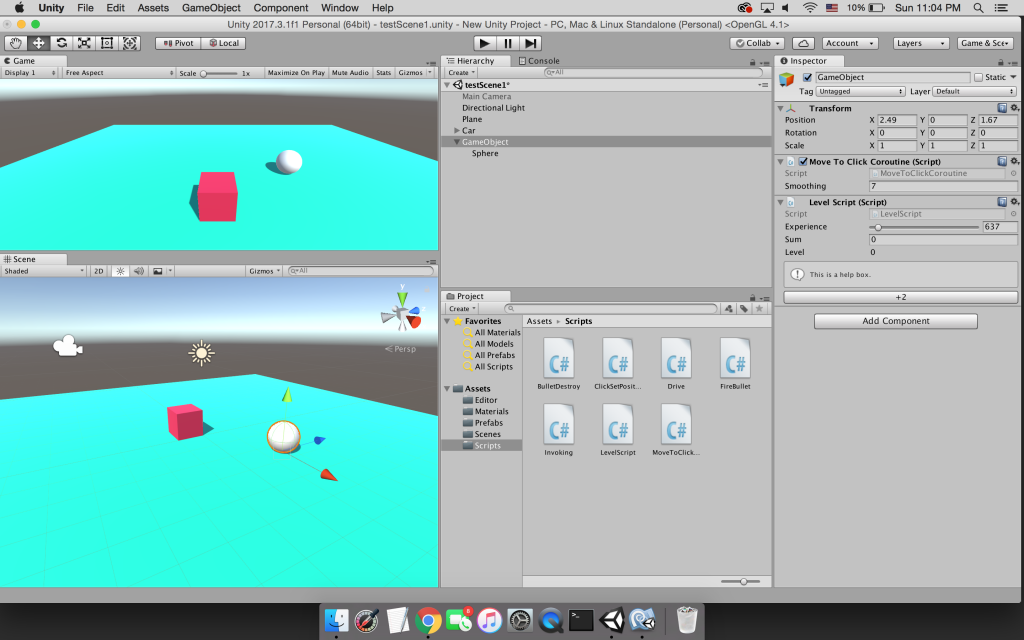

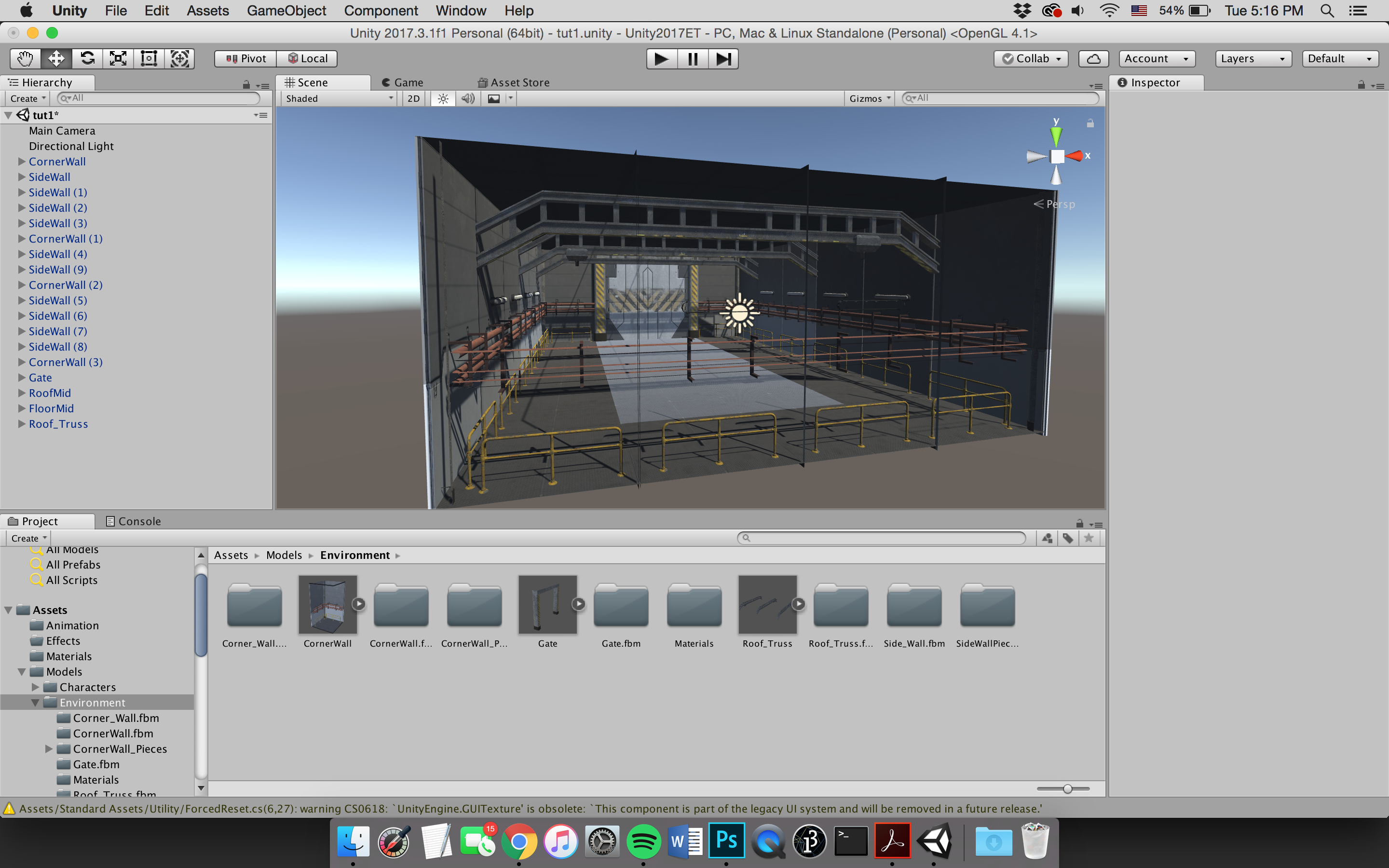

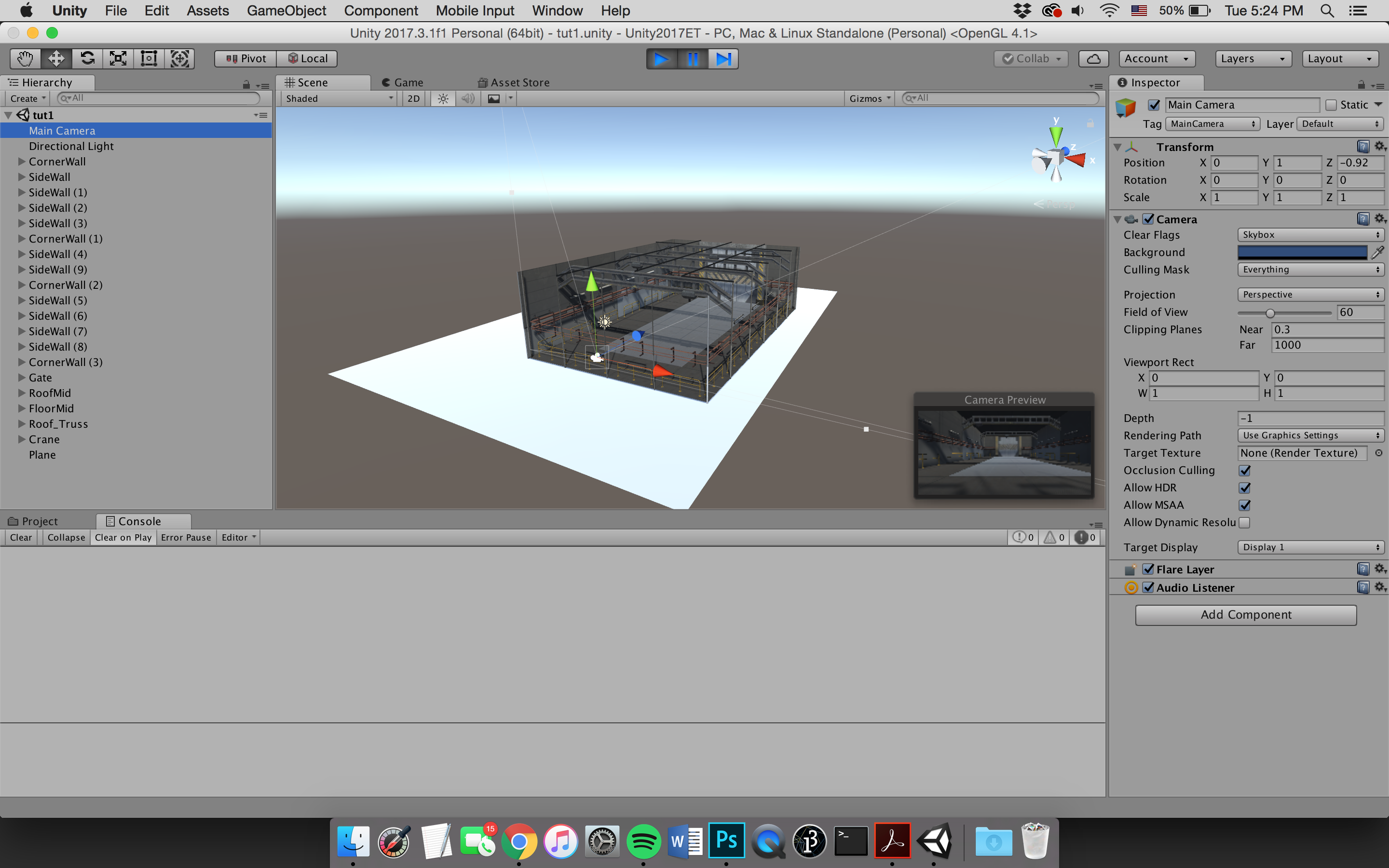

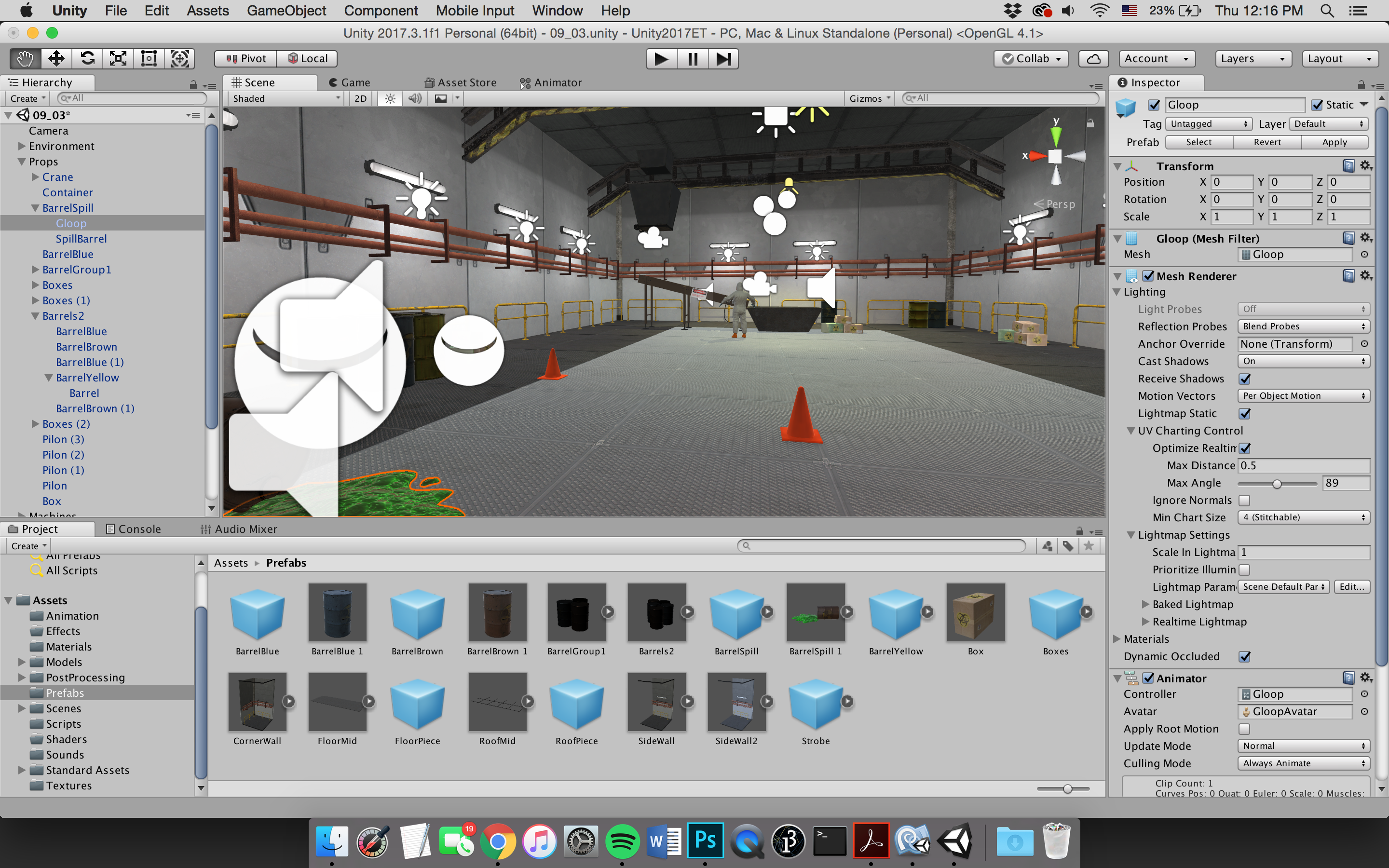

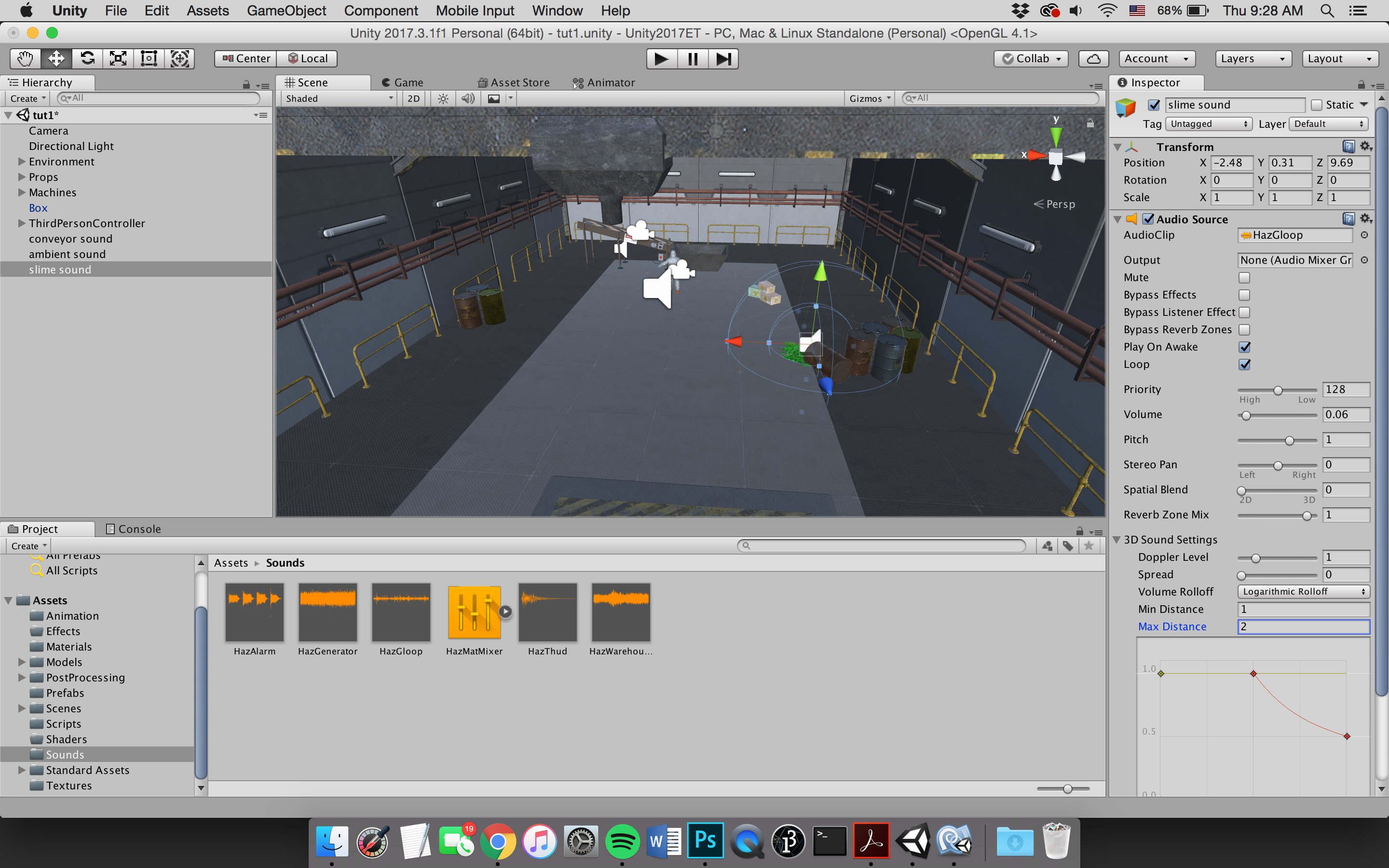

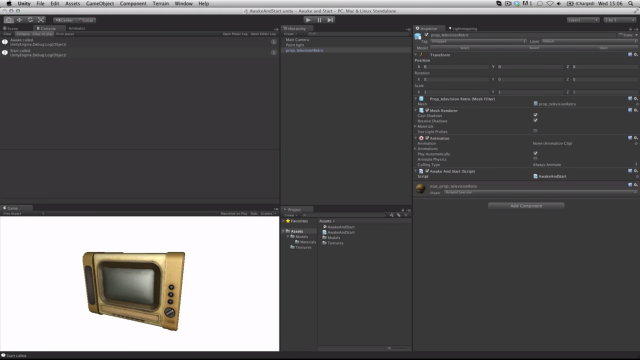

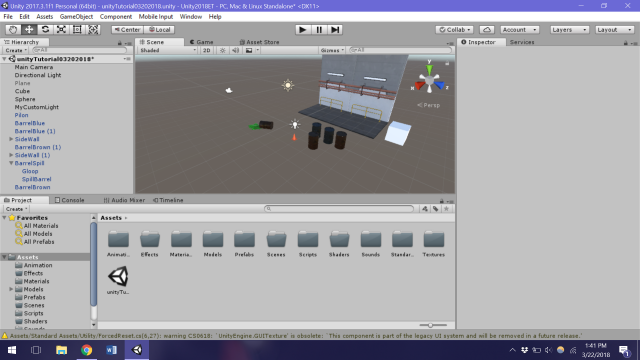

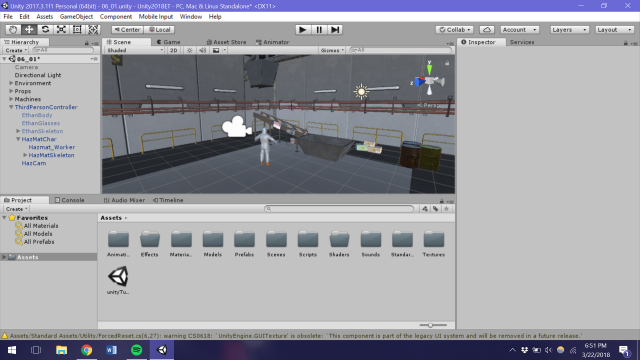

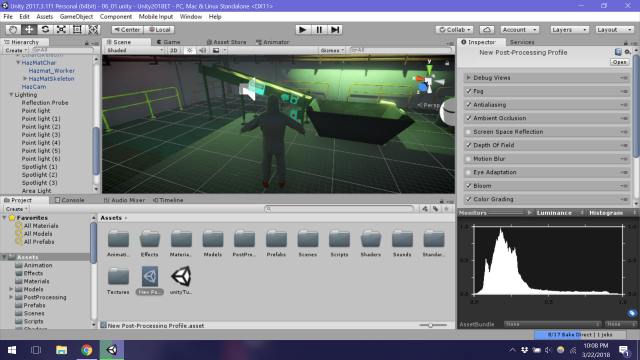

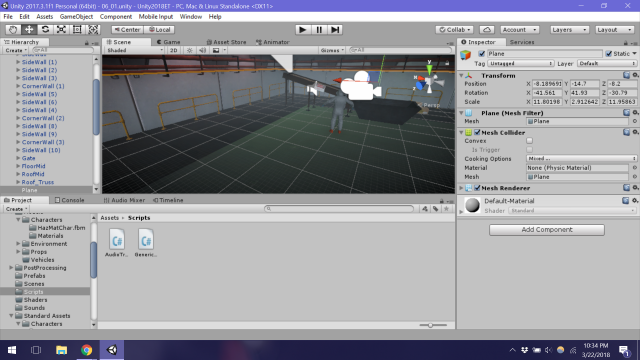

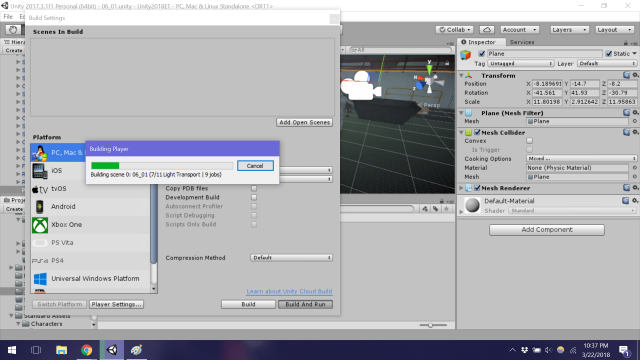

Zaport-Unity2

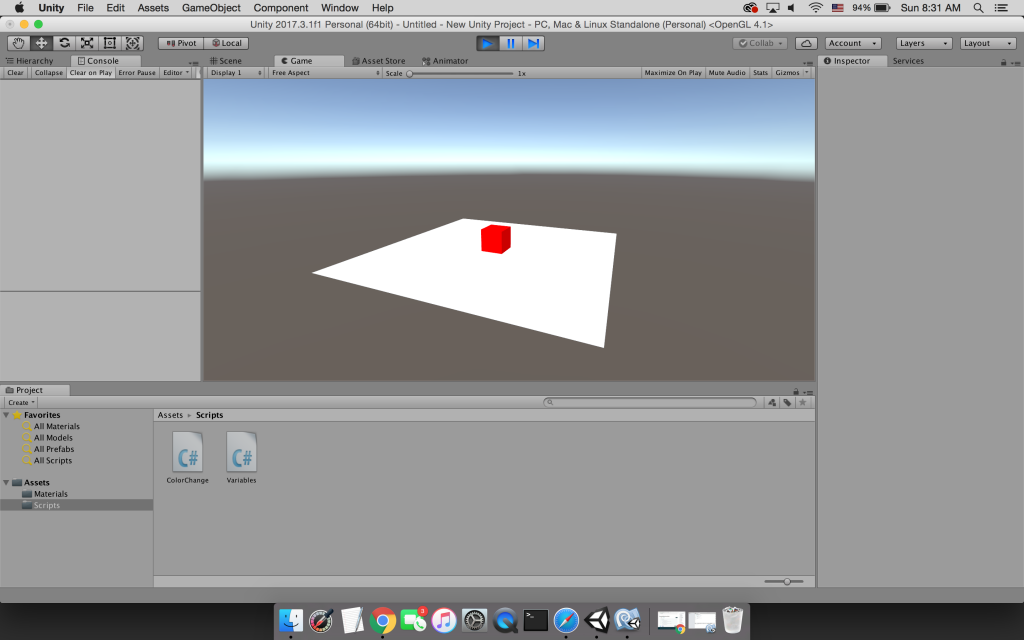

Zaport-UnityEssentials

tyvan-LookingOutwards07

Lampix is an AR projector for tables, desks, and counters. The product is modeled after a lamp, as the first two-thirds of its name suggest, but the design extends beyond conventional light-emitting furniture to project a digital interface onto flat surfaces (the rest of its name).

“Think of [Lampix] as a new pedigree of computing devices… it’s a totally new paradigm; it’s a totally new concept.” Its stripped-down Linux system, which handles text and HTML5 overlays, could have innovative benefits for curious minds wondering about breadbox-sized objects. This contextual skin will even allow for a set of throwback interactions to the days before keyboards. But the most impressive feat of this augmentation is its ability to withhold information from a user with incorrect posture.

What are the next steps for tabletop augmentation? As a practicing futurist, I firmly believe the integration of “Ok Google” is on this Kickstarter’s horizon. But, unfortunately for me as a craftsperson, physical tools analogous to a mouse, a keyboard, or a Touch Bar will likely be replaced by individualized and unversed computer-vision (CV) recognition of micro-expressions.

Jackalope-Unity2

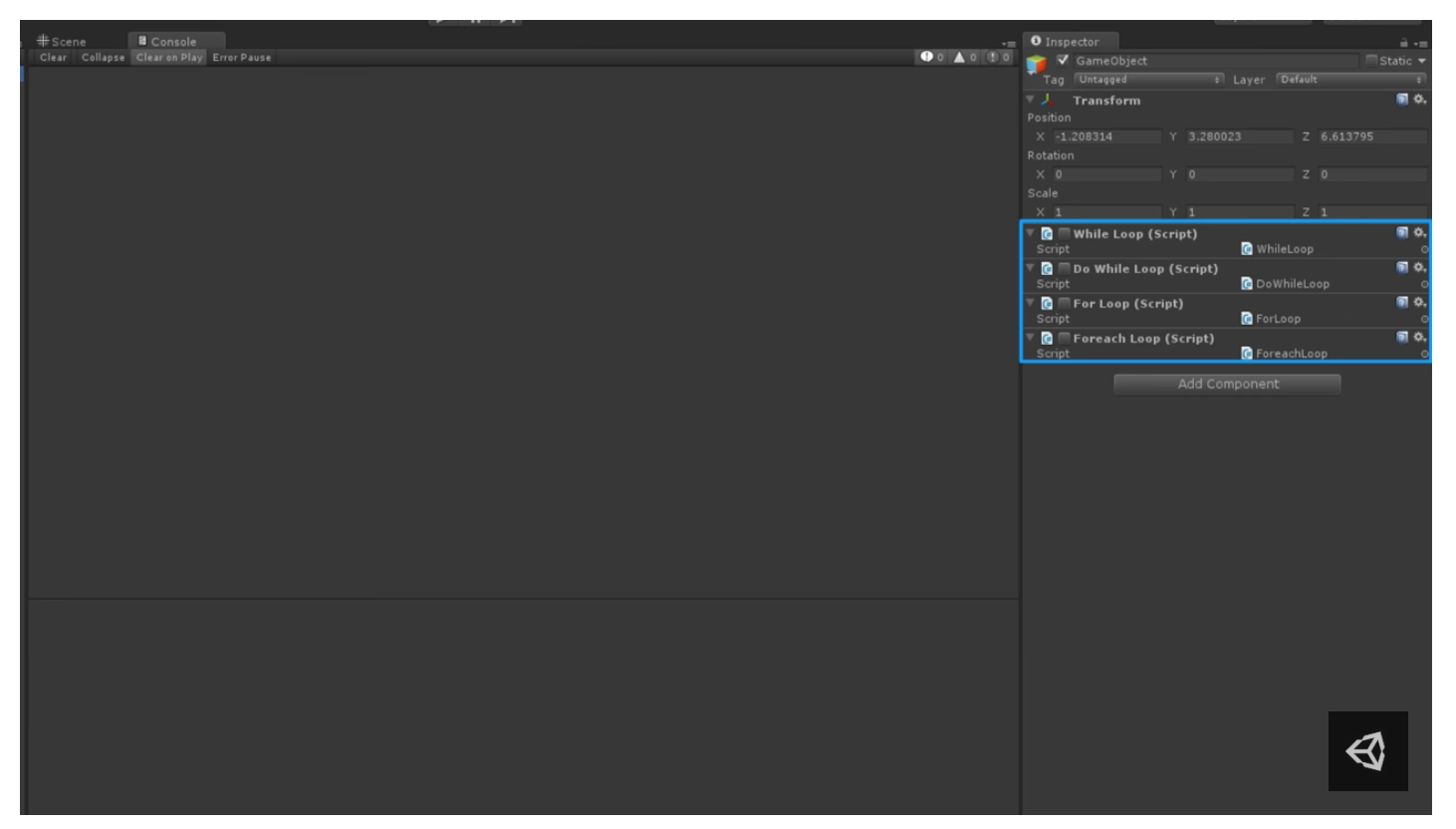

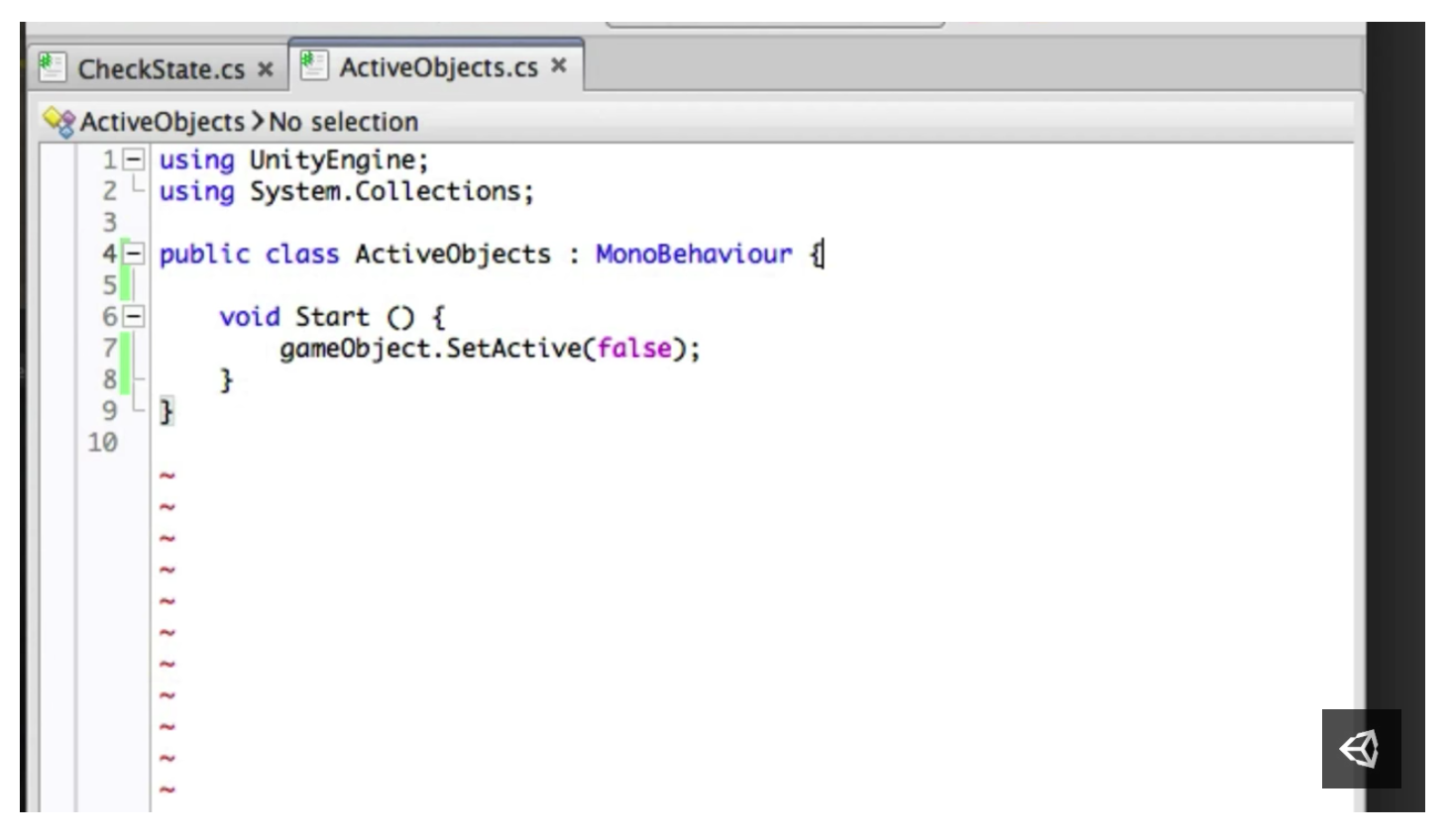

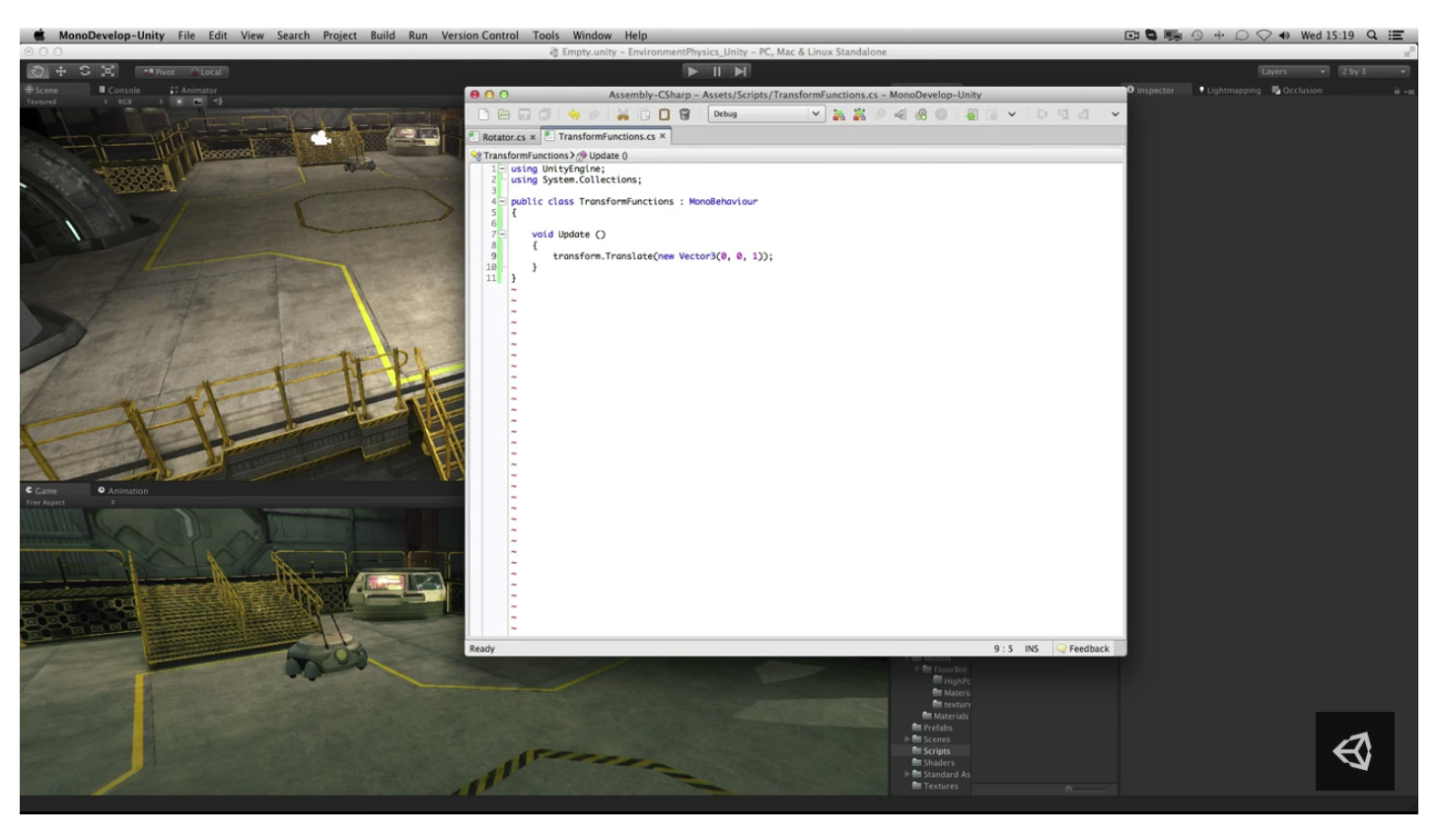

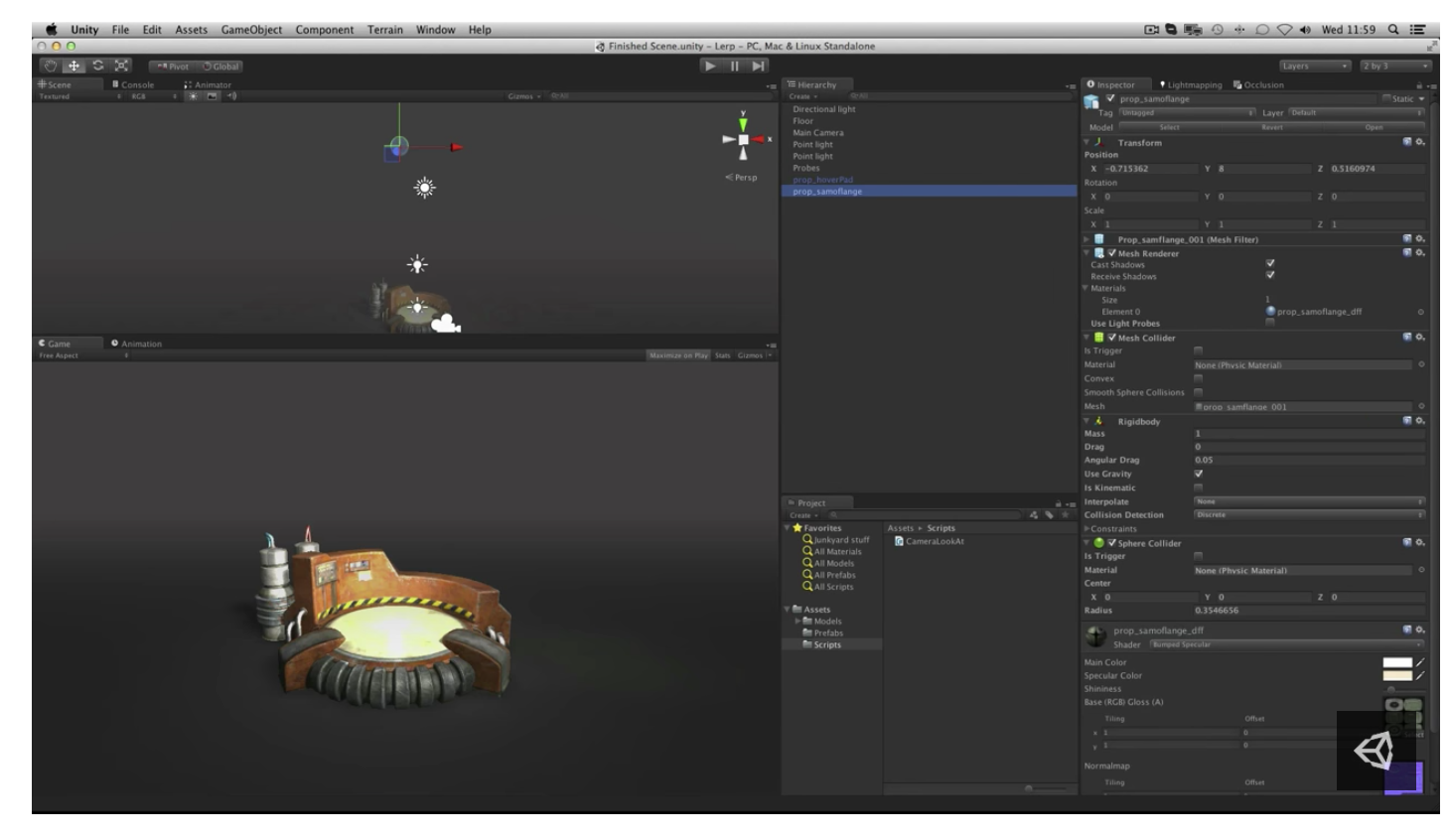

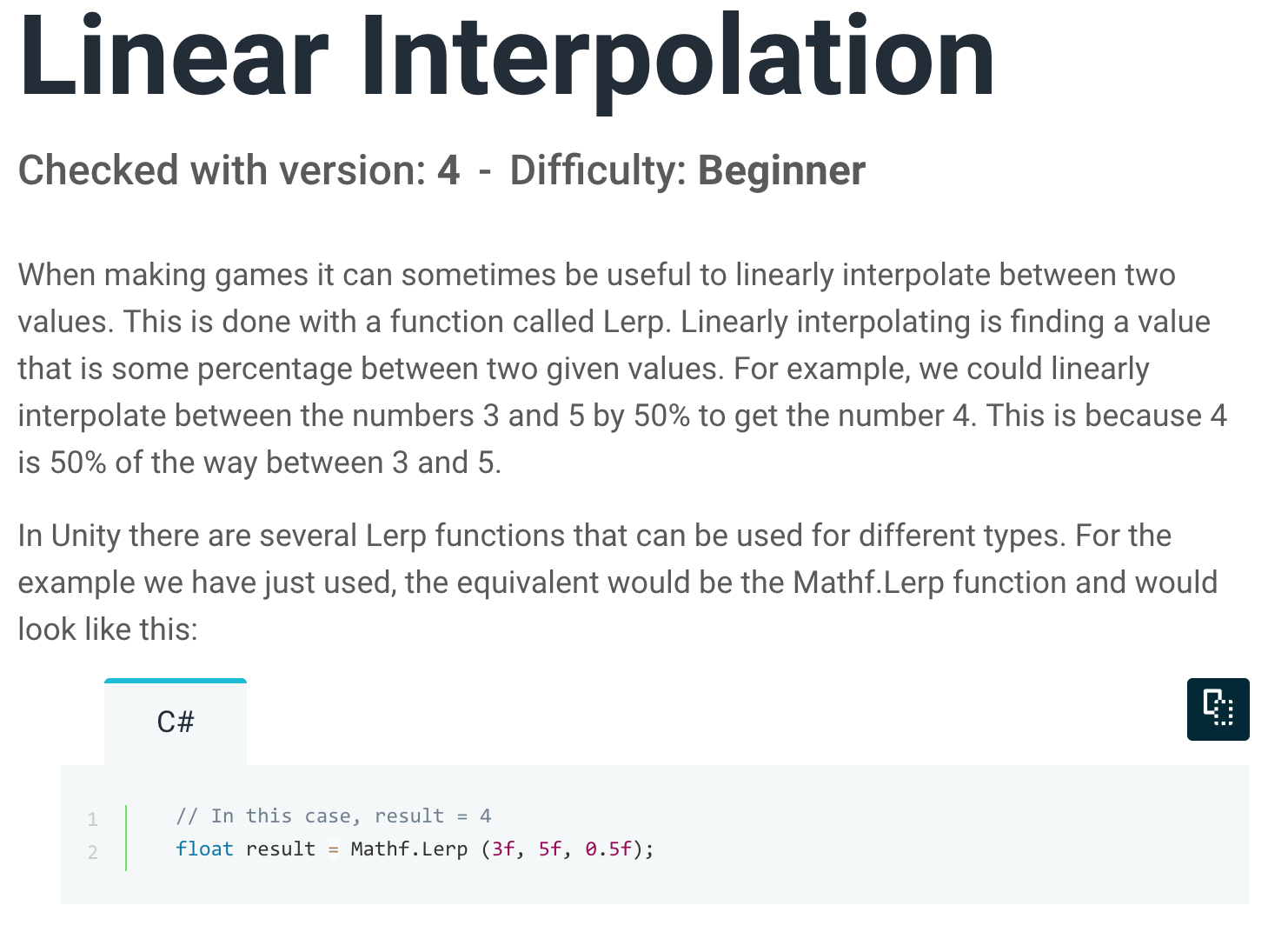

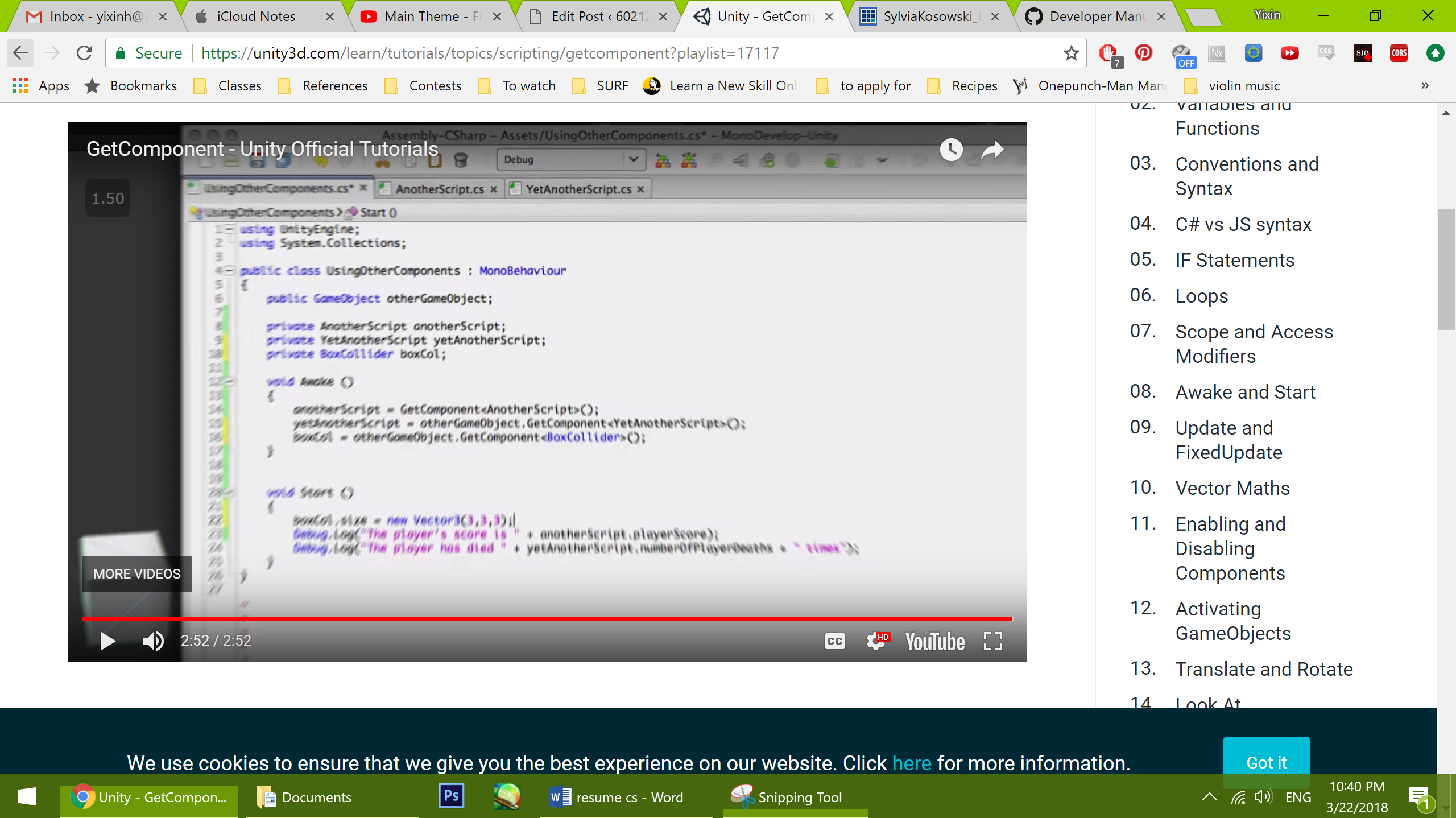

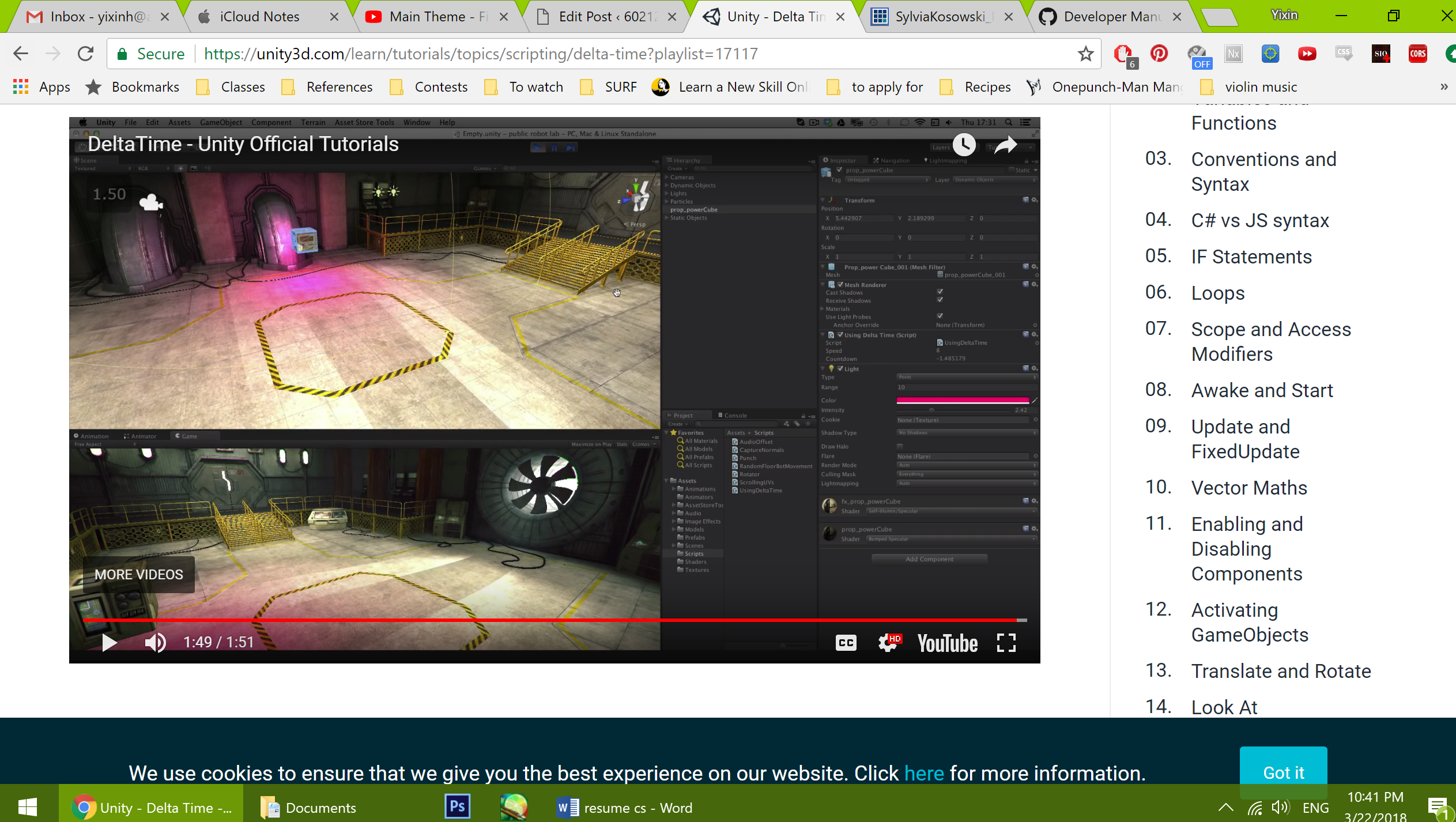

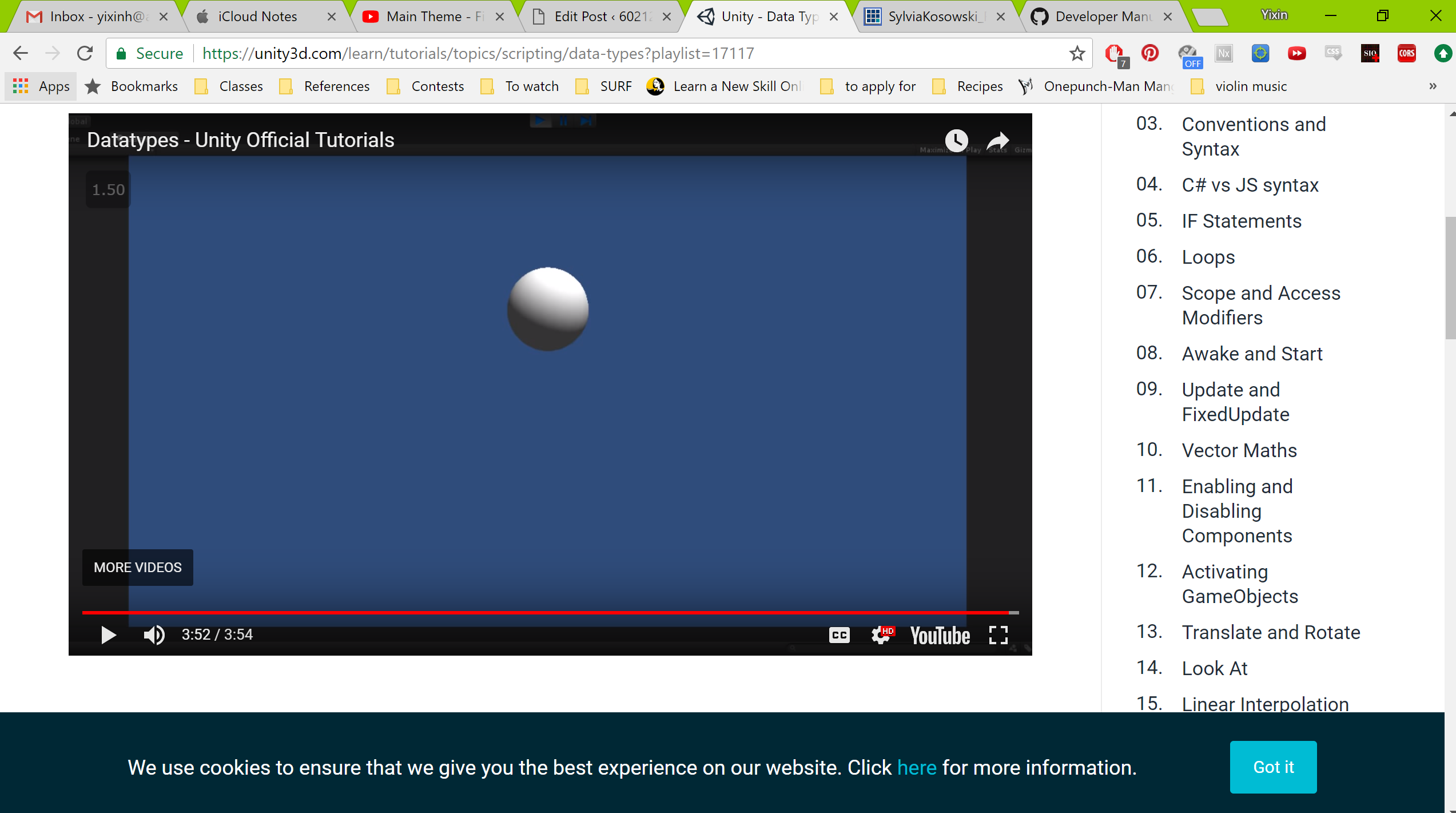

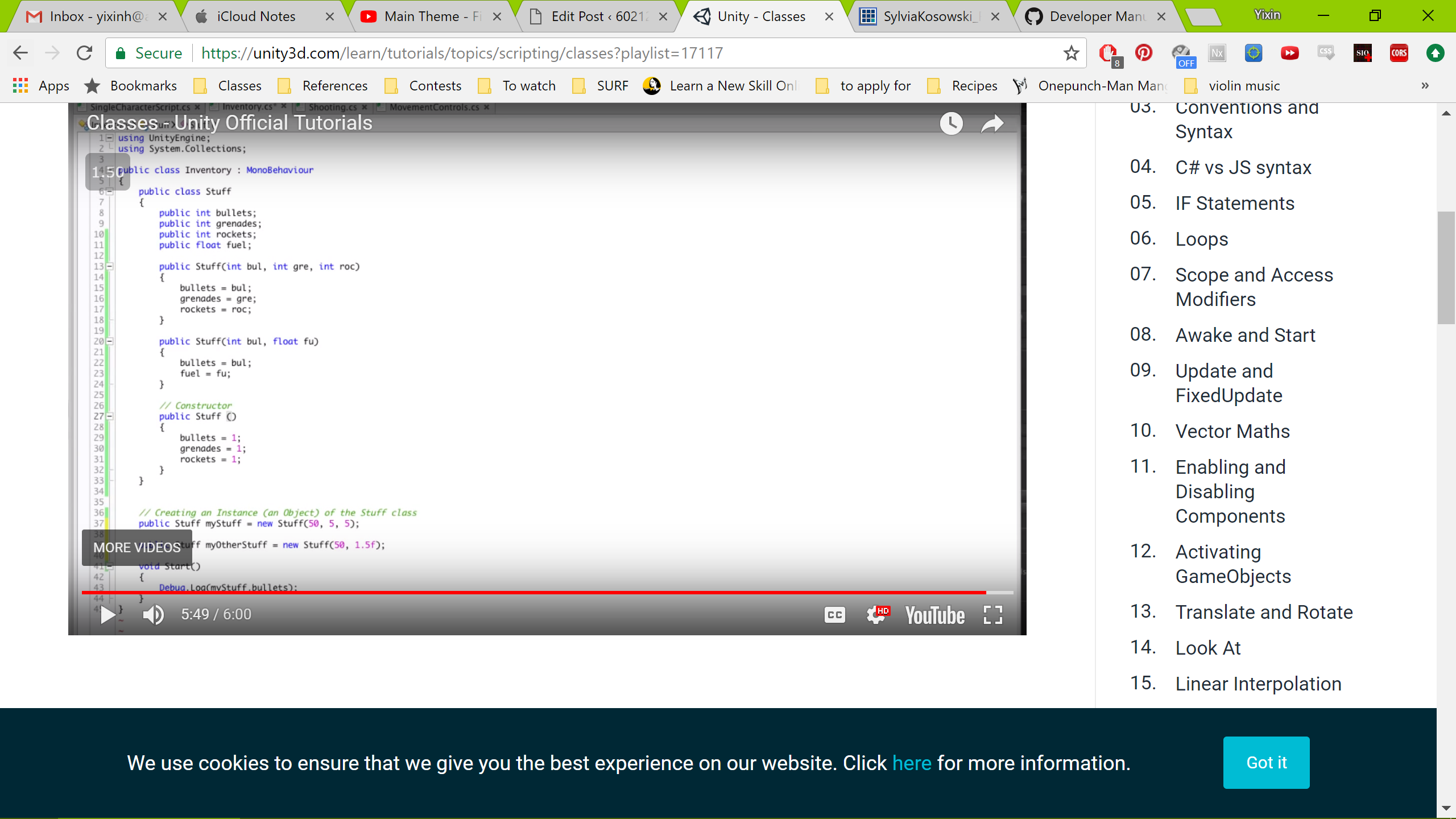

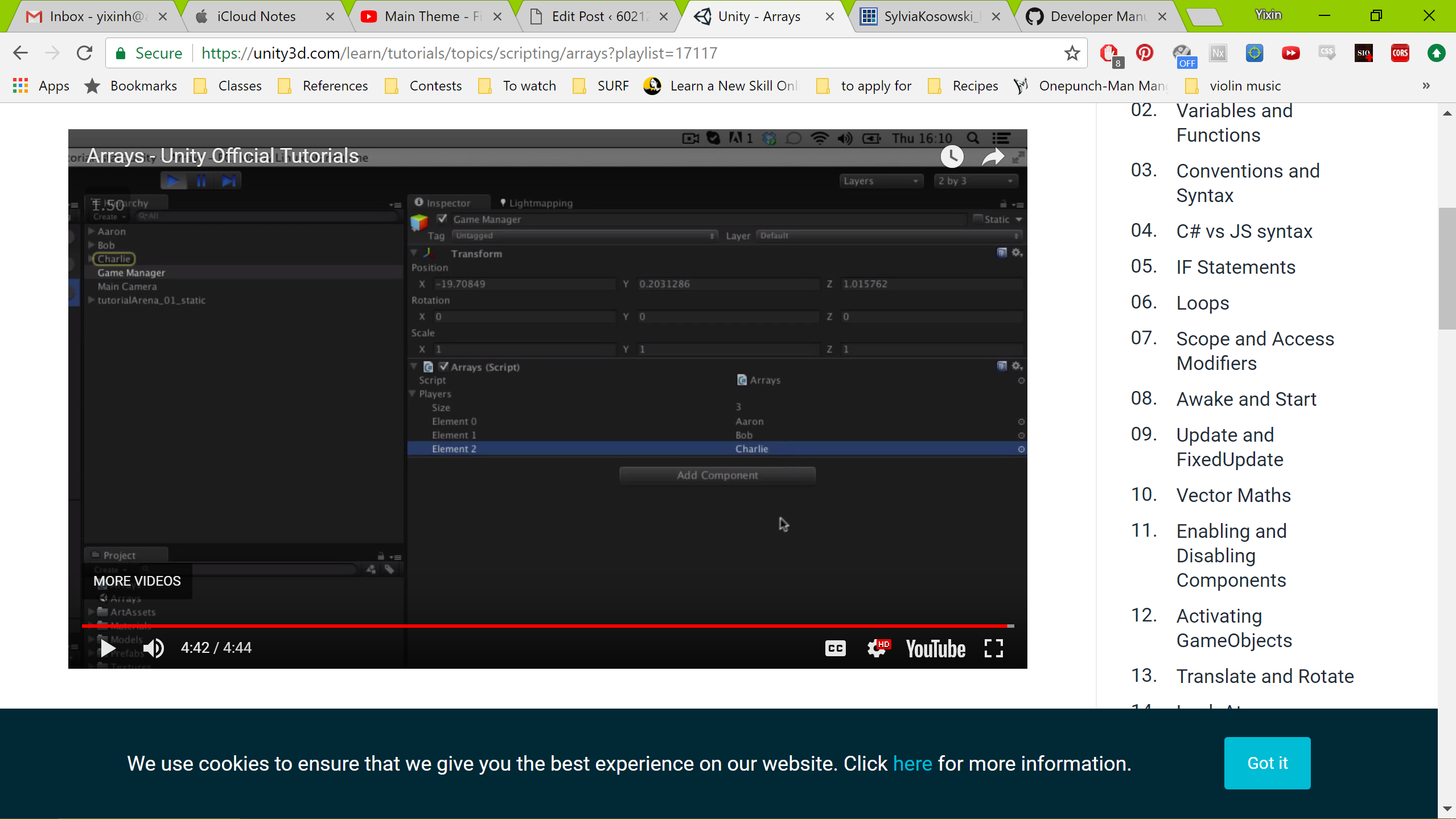

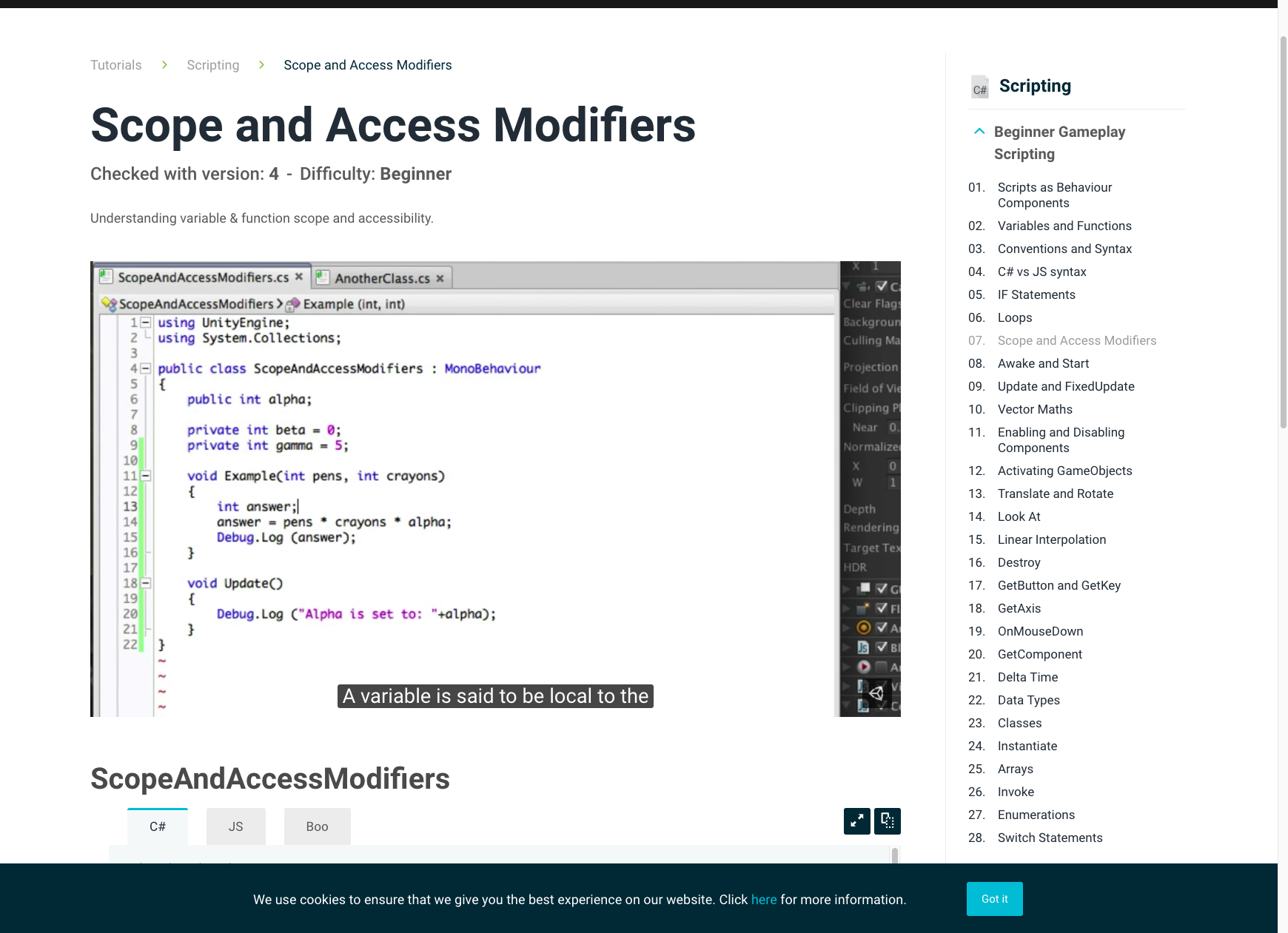

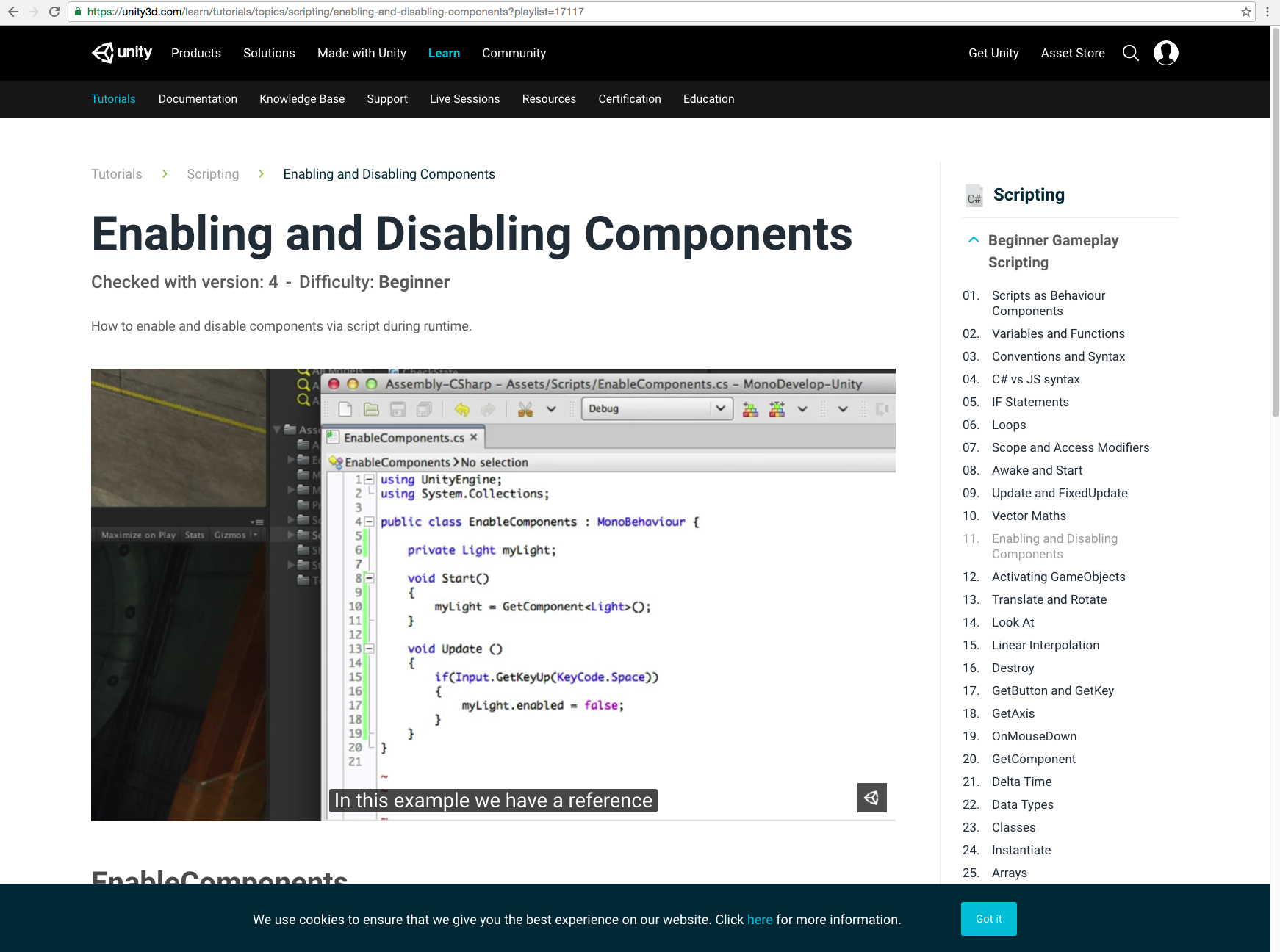

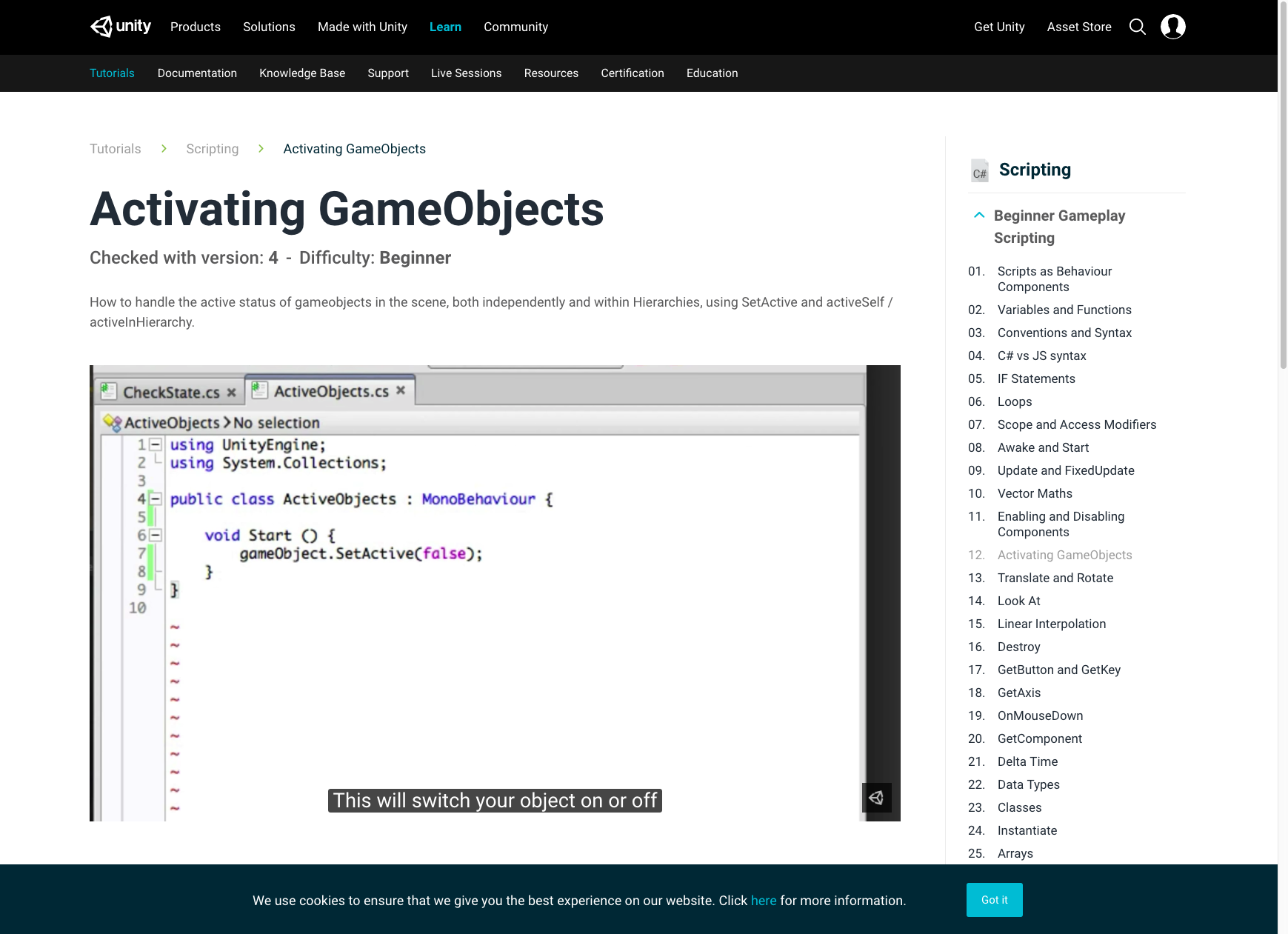

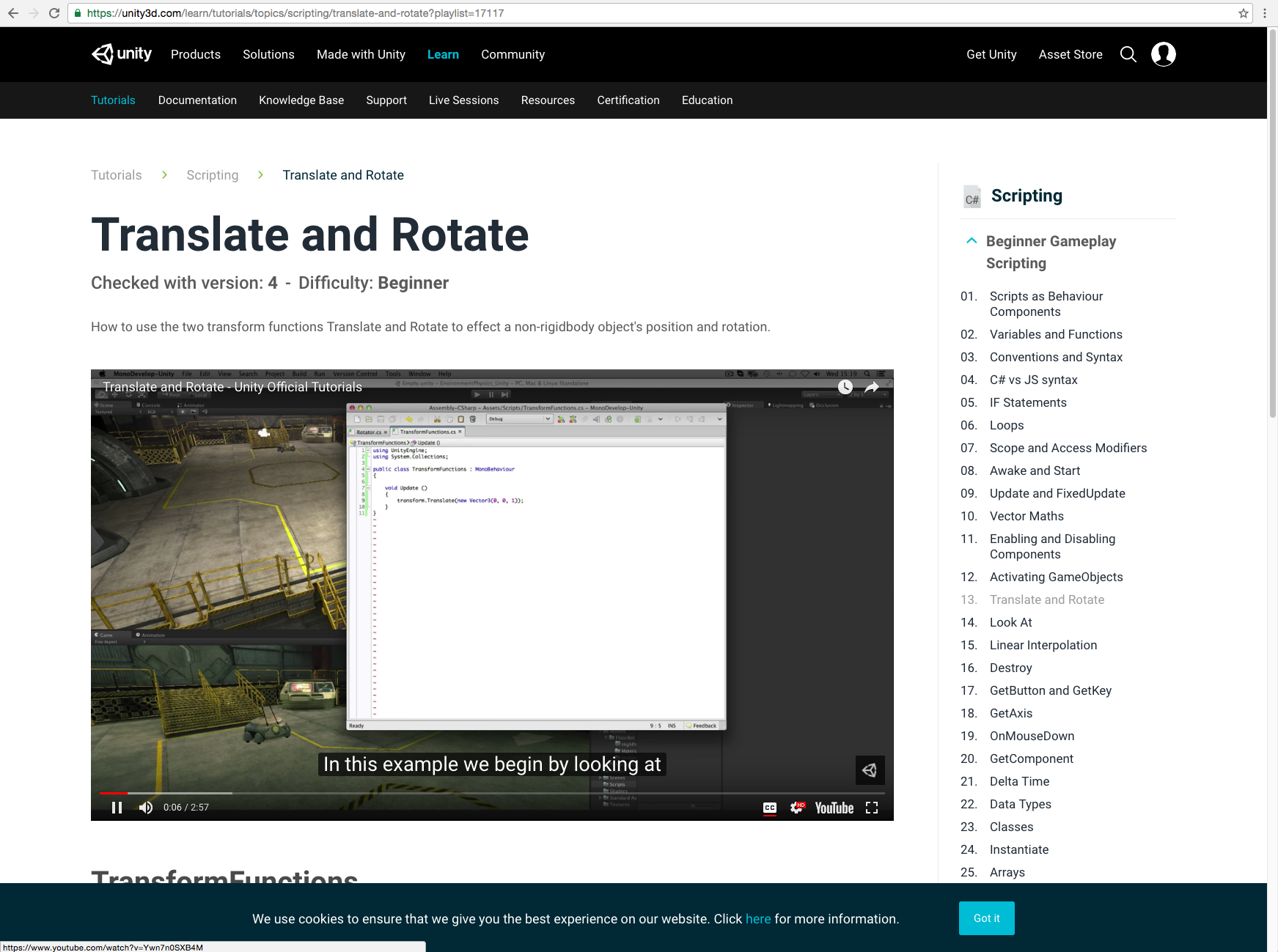

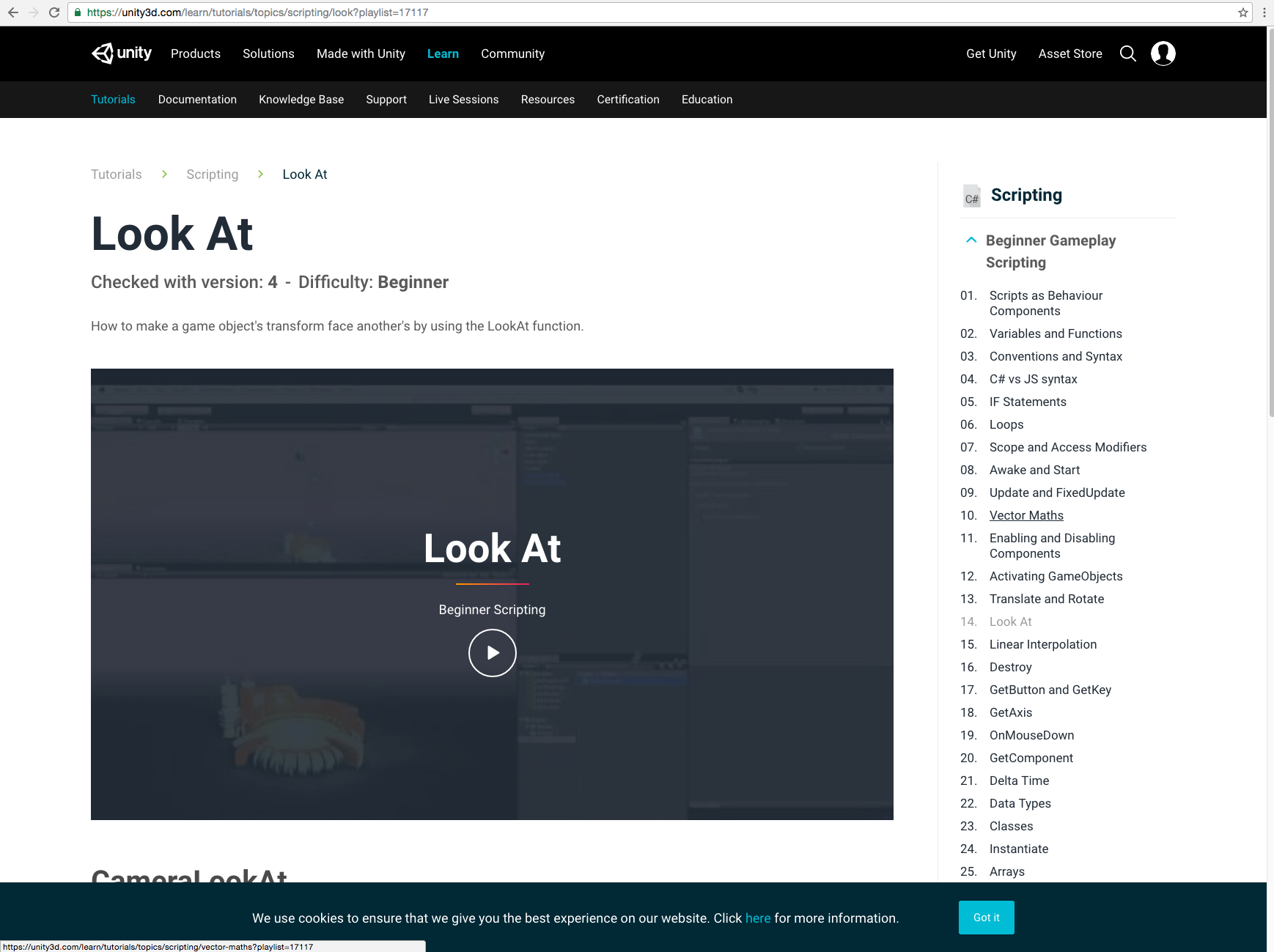

Comments/notes I guess:

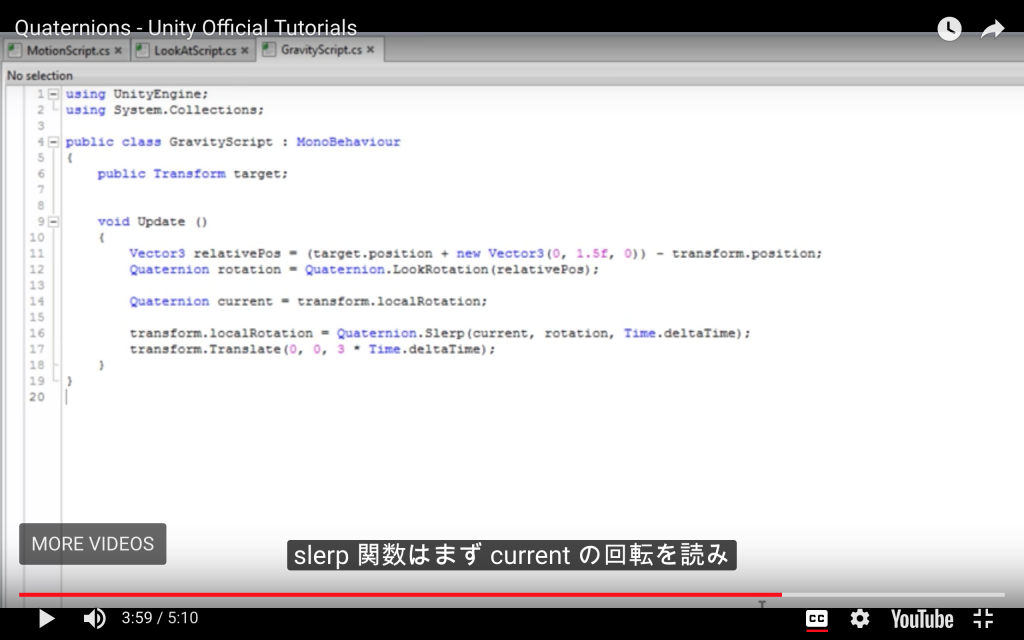

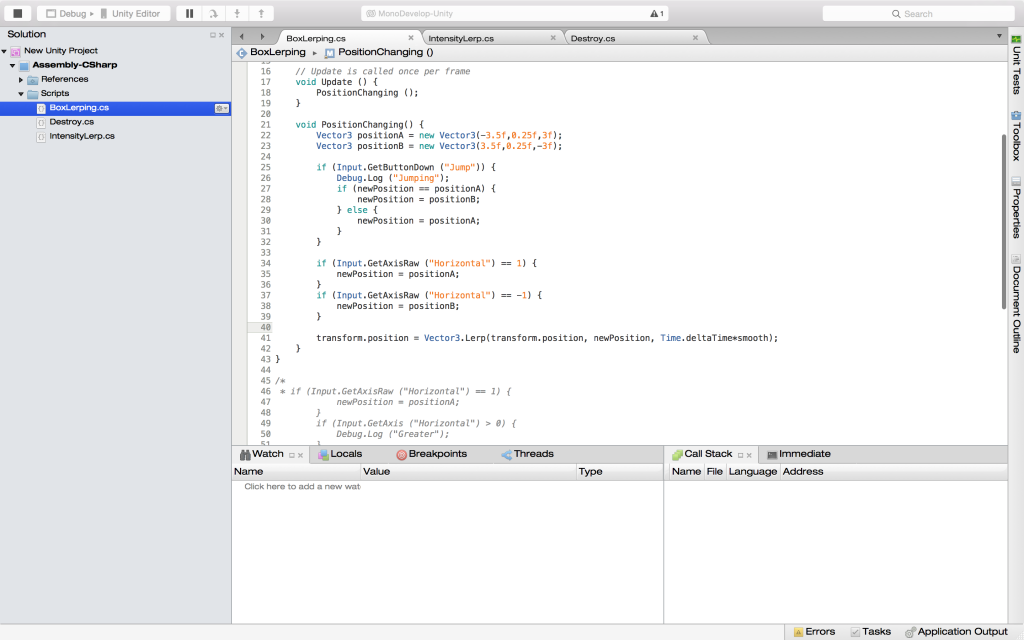

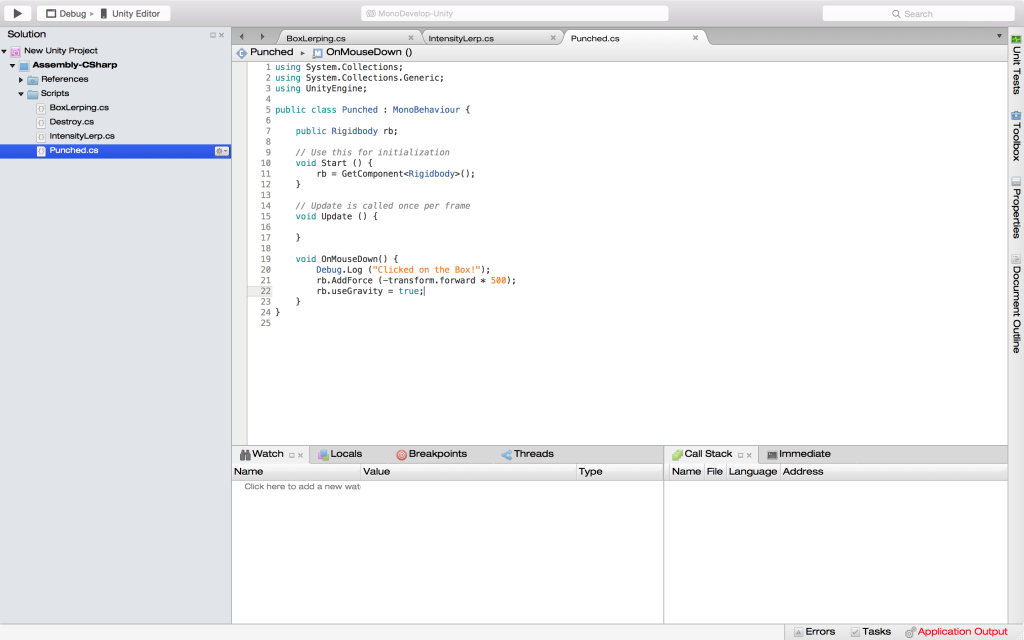

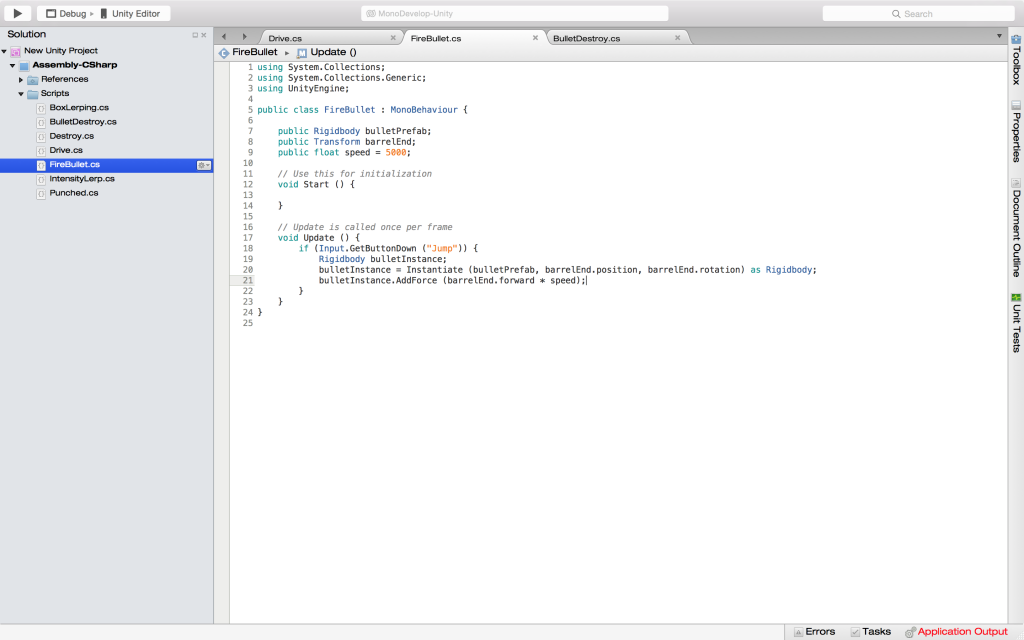

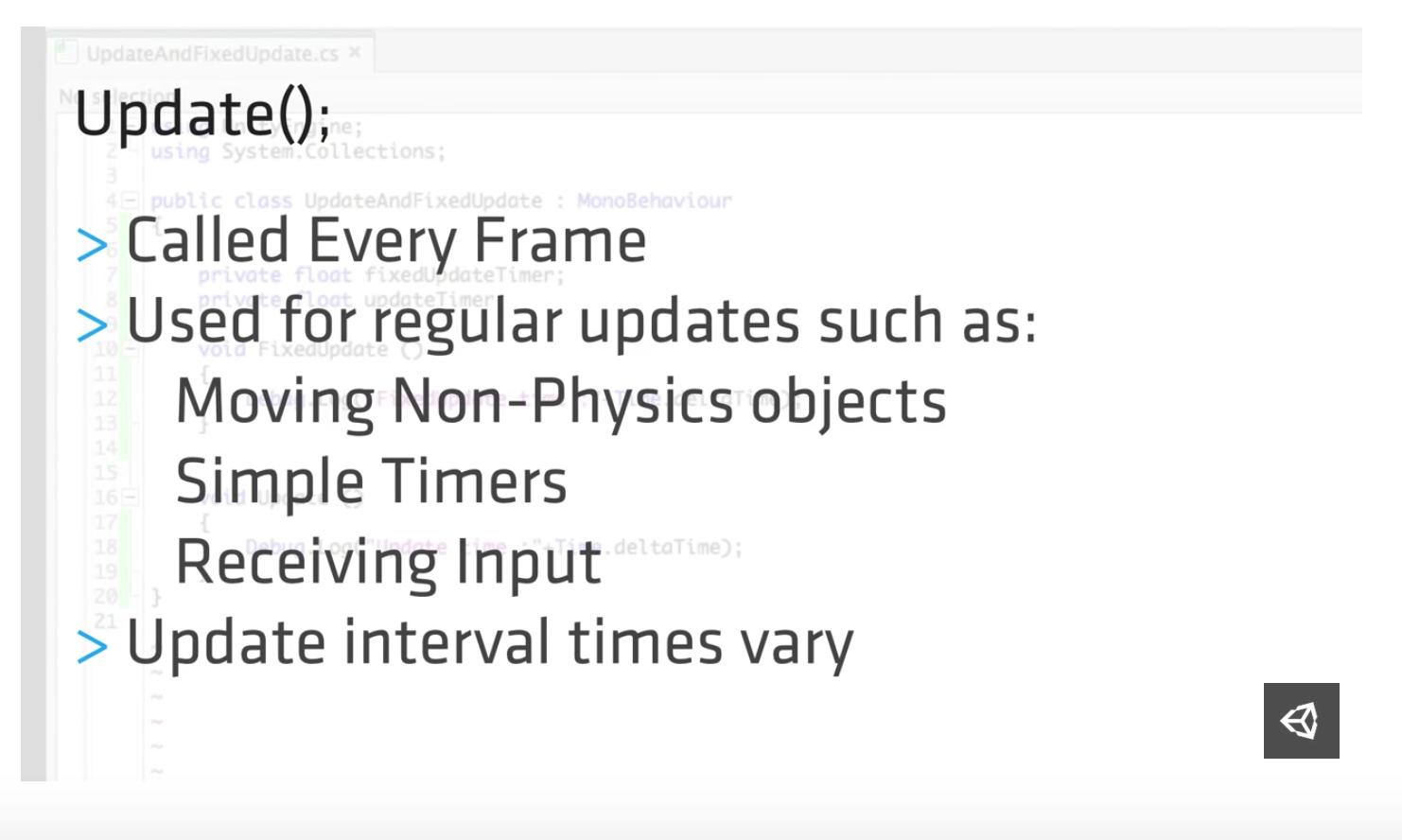

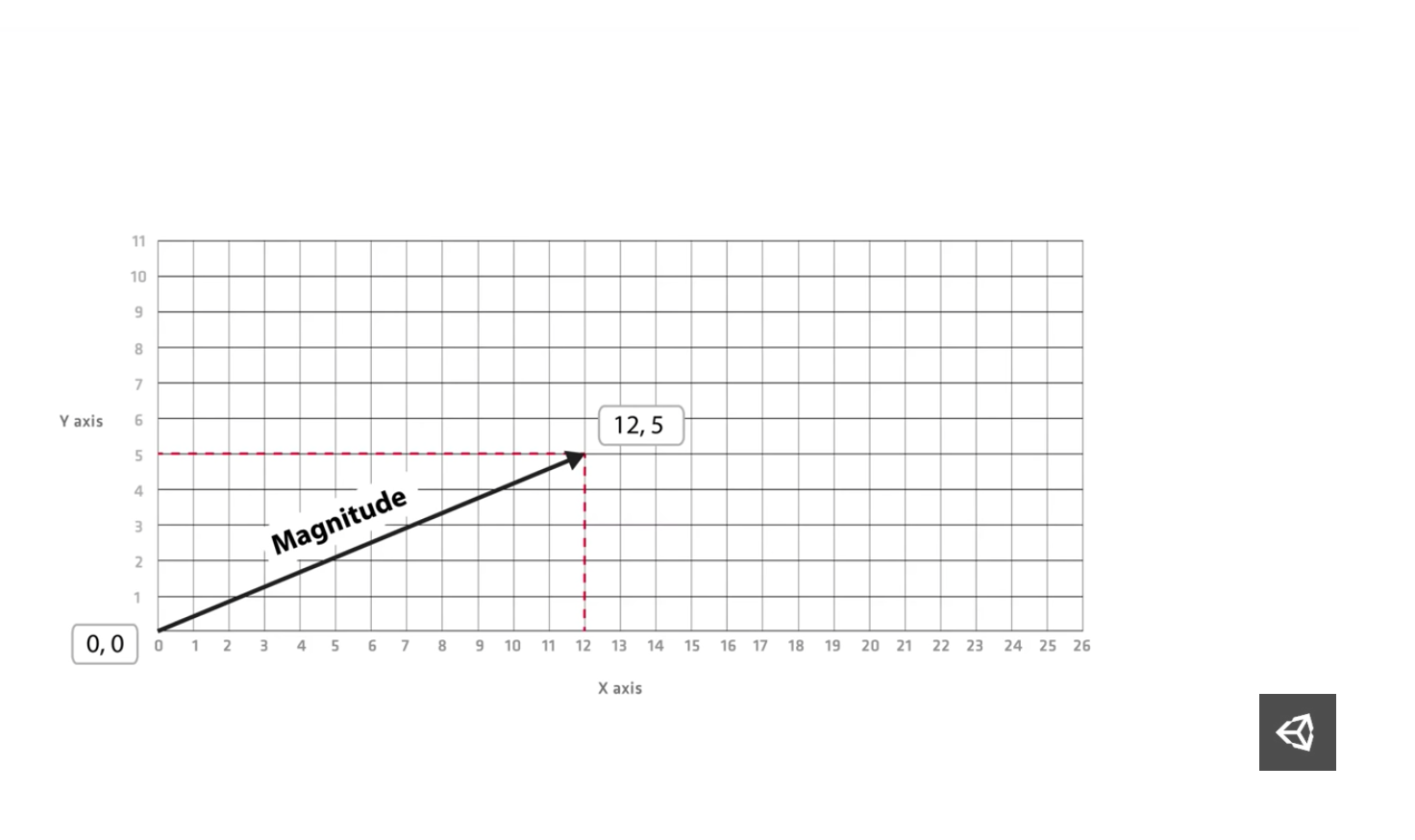

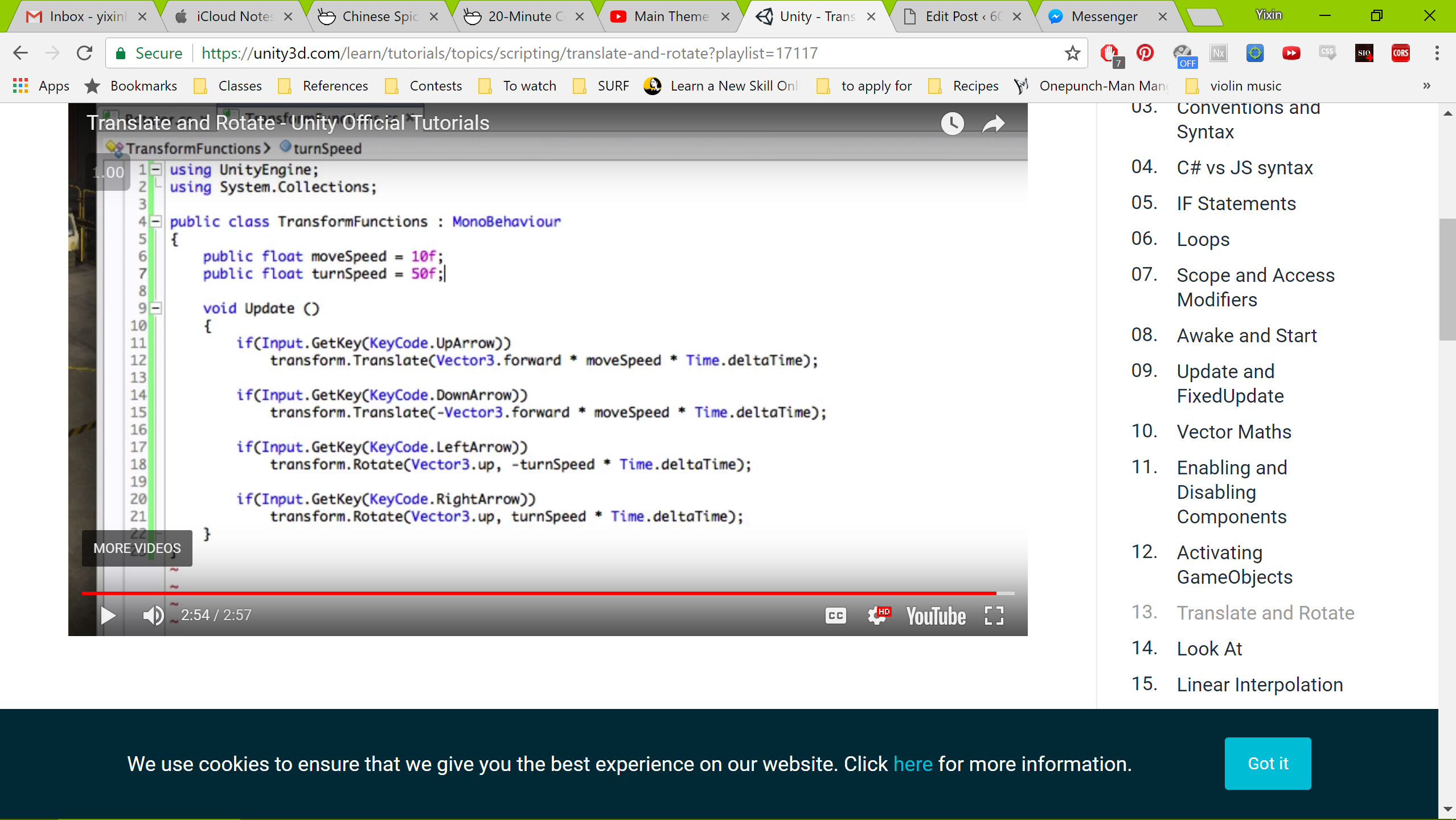

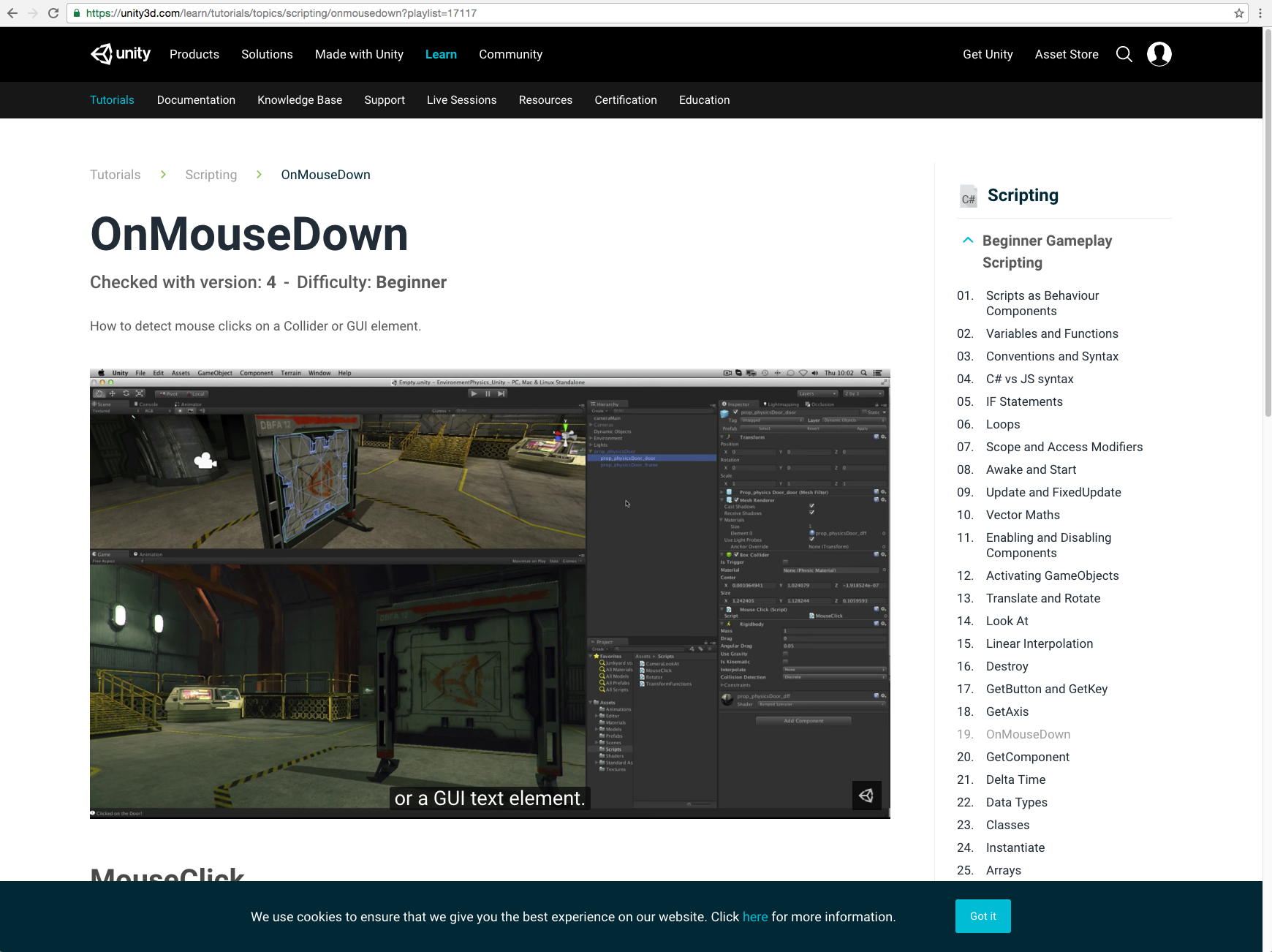

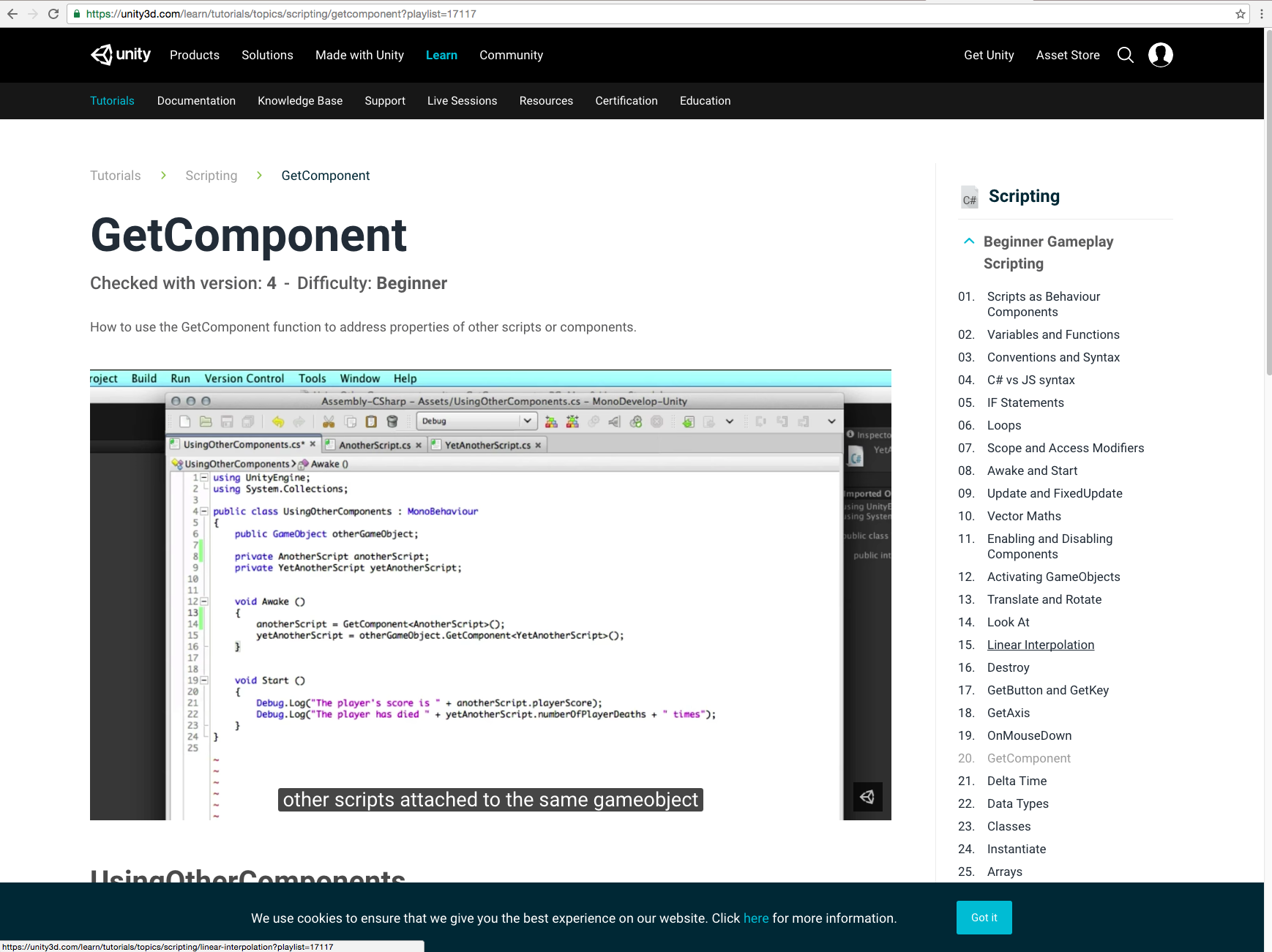

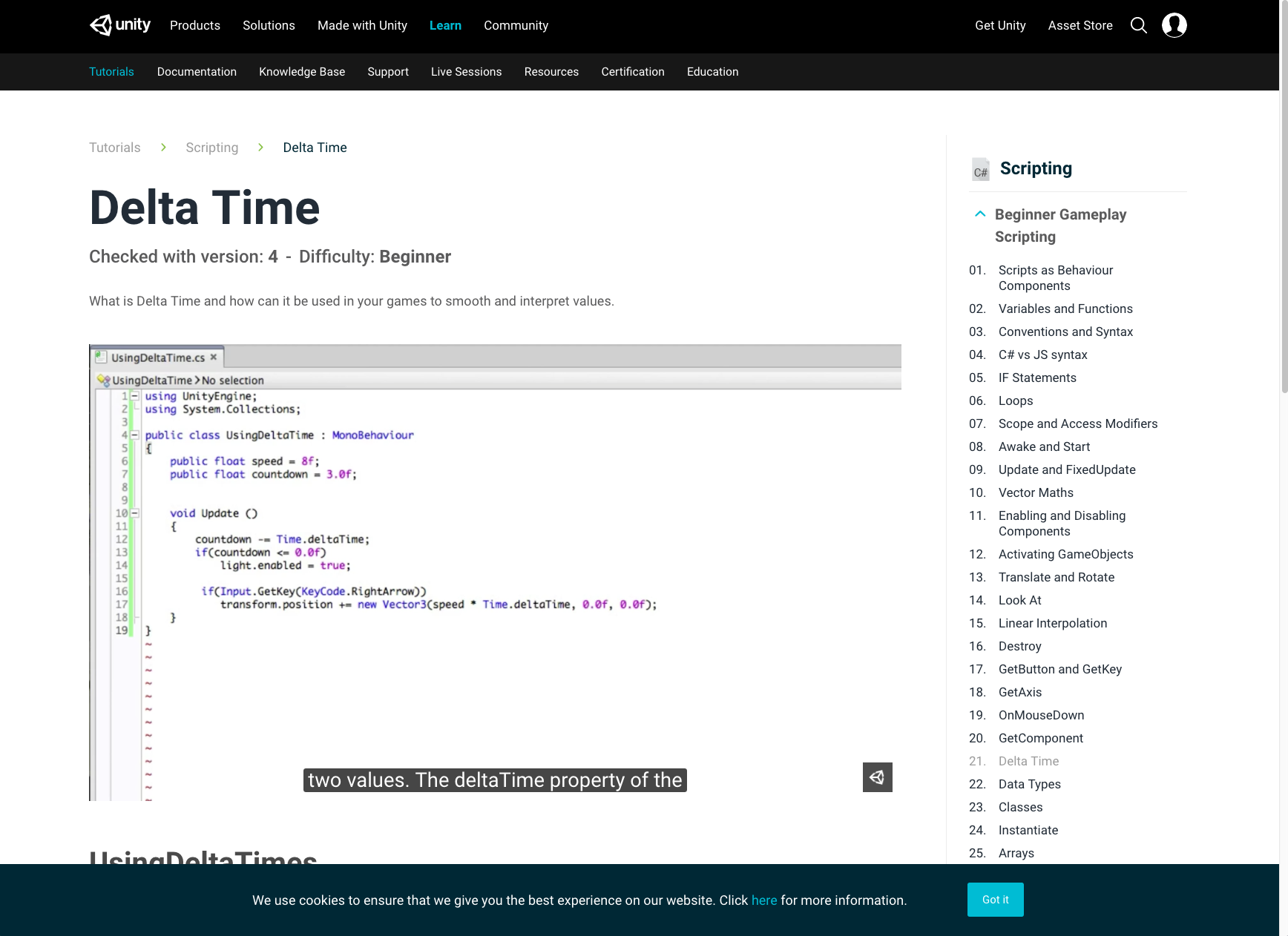

-The first half was rather painful to watch. Seeing some practical examples was pretty interesting, though, such as in the vectors section, where the tutorial talks about using the cross product to determine the direction in which to apply torque to a tank.
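The tank example comes down to one call. The cross product of the tank's forward vector and the direction to its target is an axis perpendicular to both, and spinning around that axis turns the tank toward the target. Here is a minimal sketch that uses `System.Numerics.Vector3` as a stand-in for Unity's `Vector3`, which computes `Cross` with the same component formula. `TankSteering` and `TorqueAxis` are hypothetical names, not from the tutorial:

```csharp
using System.Numerics;

public static class TankSteering
{
    // Hypothetical helper (not Unity API): the axis to apply torque around so
    // that a tank facing `forward` turns toward `toTarget`.
    // With Y as "up", the sign of the Y component says which way to turn.
    // A zero vector means the two directions are parallel (already aligned,
    // or facing directly away).
    public static Vector3 TorqueAxis(Vector3 forward, Vector3 toTarget)
    {
        return Vector3.Cross(forward, toTarget);
    }
}
```

In Unity, the resulting axis would be handed to something like `Rigidbody.AddTorque`.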

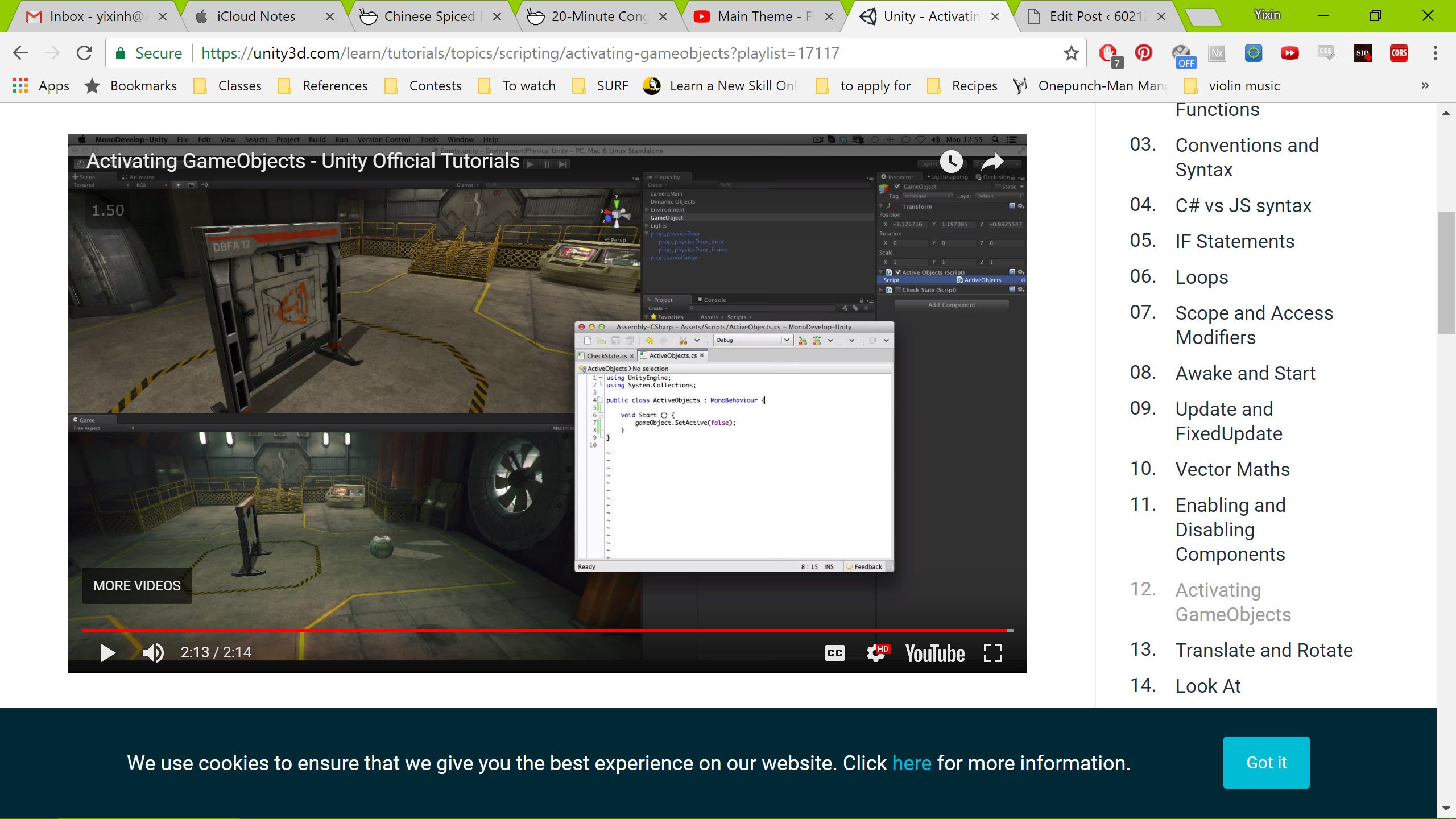

-The difference between activeSelf and activeInHierarchy is a bit confusing, but I also feel like those probably aren’t hugely used in scripting anyway?
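The rule behind the two properties is small enough to model. `activeSelf` is an object's own checkbox, while `activeInHierarchy` is only true if that checkbox and every parent's checkbox are on. Below is a toy sketch of that rule in plain C#. `Node` is a hypothetical stand-in for `GameObject`, not Unity's actual implementation:

```csharp
public class Node
{
    // The object's own on/off flag (what Unity exposes as activeSelf).
    public bool ActiveSelf = true;

    // Null for a root object.
    public Node Parent;

    public Node(Node parent = null)
    {
        Parent = parent;
    }

    // Active in the hierarchy only if this node and every ancestor are active.
    public bool ActiveInHierarchy =>
        ActiveSelf && (Parent == null || Parent.ActiveInHierarchy);
}
```

This is why disabling a parent hides its children without changing the children's own `activeSelf` values.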

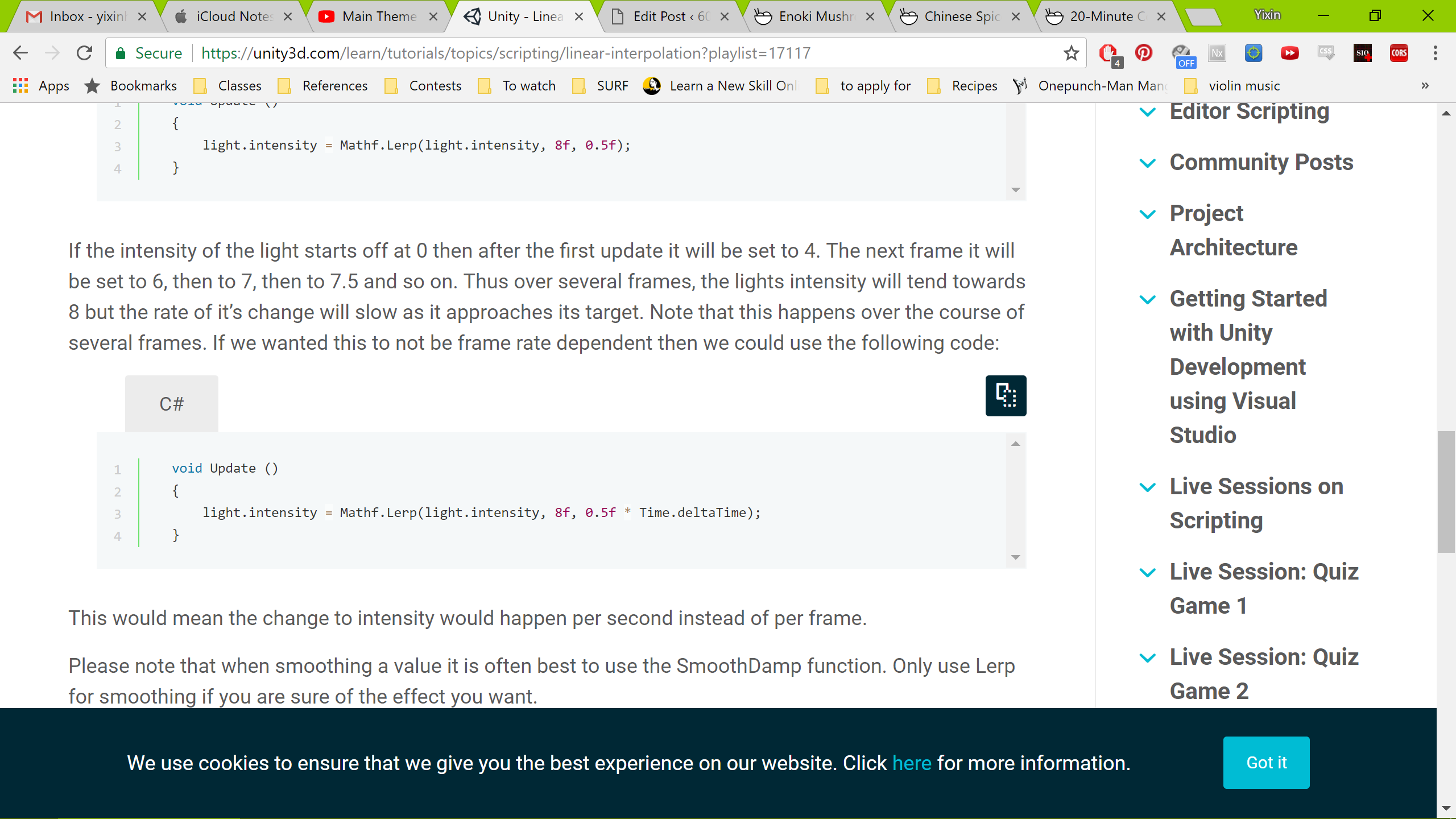

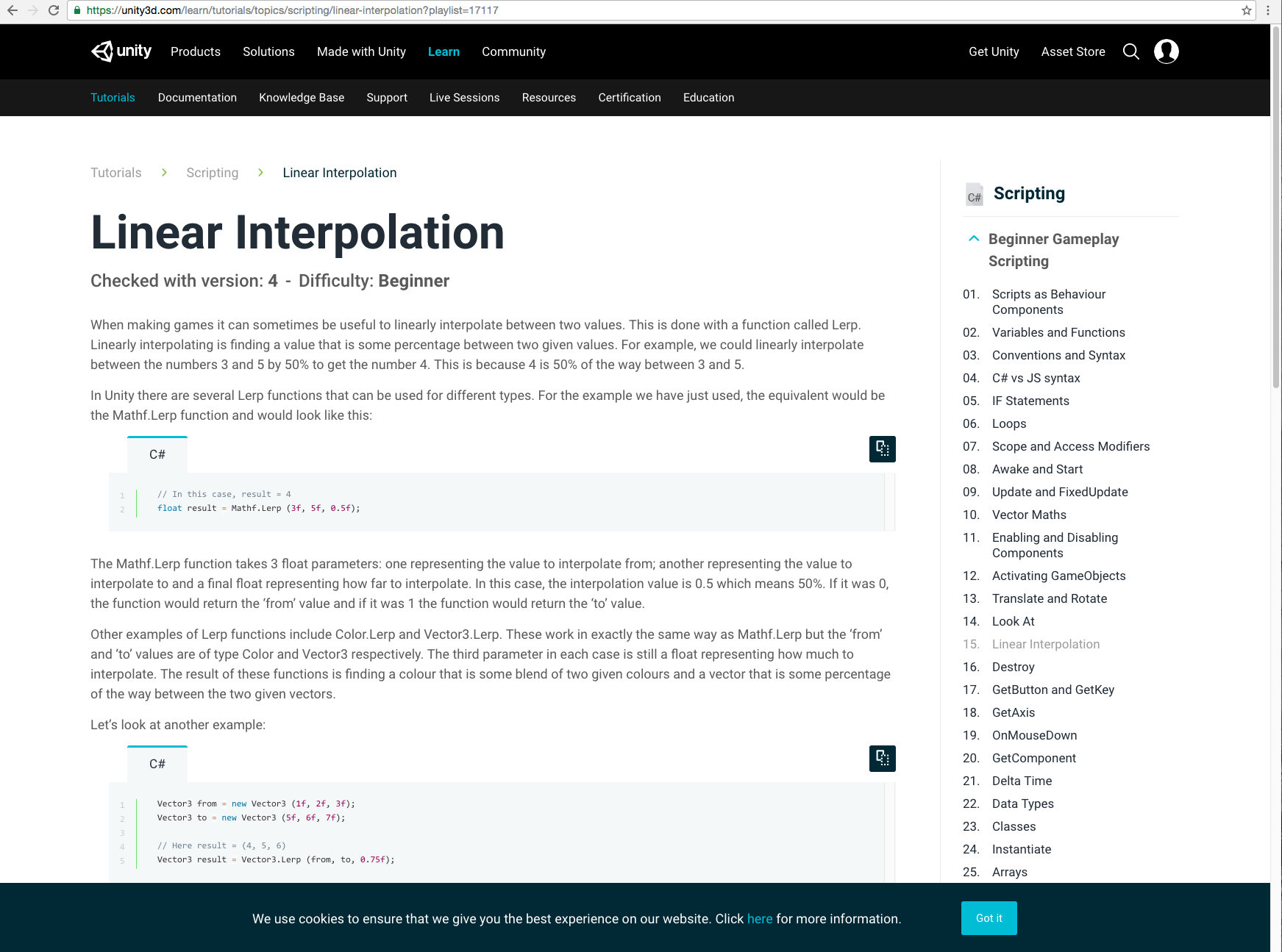

-The linear interpolation reminds me of some stuff we did in Computer Graphics.
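It is the same formula as in graphics class: a lerp computes `a + (b - a) * t`. Here is a minimal plain-C# sketch. The name `LerpDemo` is hypothetical. Clamping `t` to [0, 1] mirrors Unity's `Mathf.Lerp`, while Unity's `Mathf.LerpUnclamped` skips the clamp:

```csharp
using System;

public static class LerpDemo
{
    // Linear interpolation between a and b: t = 0 returns a, t = 1 returns b,
    // t = 0.5 returns the midpoint. t is clamped to [0, 1] first.
    public static float Lerp(float a, float b, float t)
    {
        t = Math.Clamp(t, 0f, 1f);
        return a + (b - a) * t;
    }
}
```

If you call it every frame and feed the current value back in as `a` with a small `t`, the value eases toward `b` rather than moving at a constant speed.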

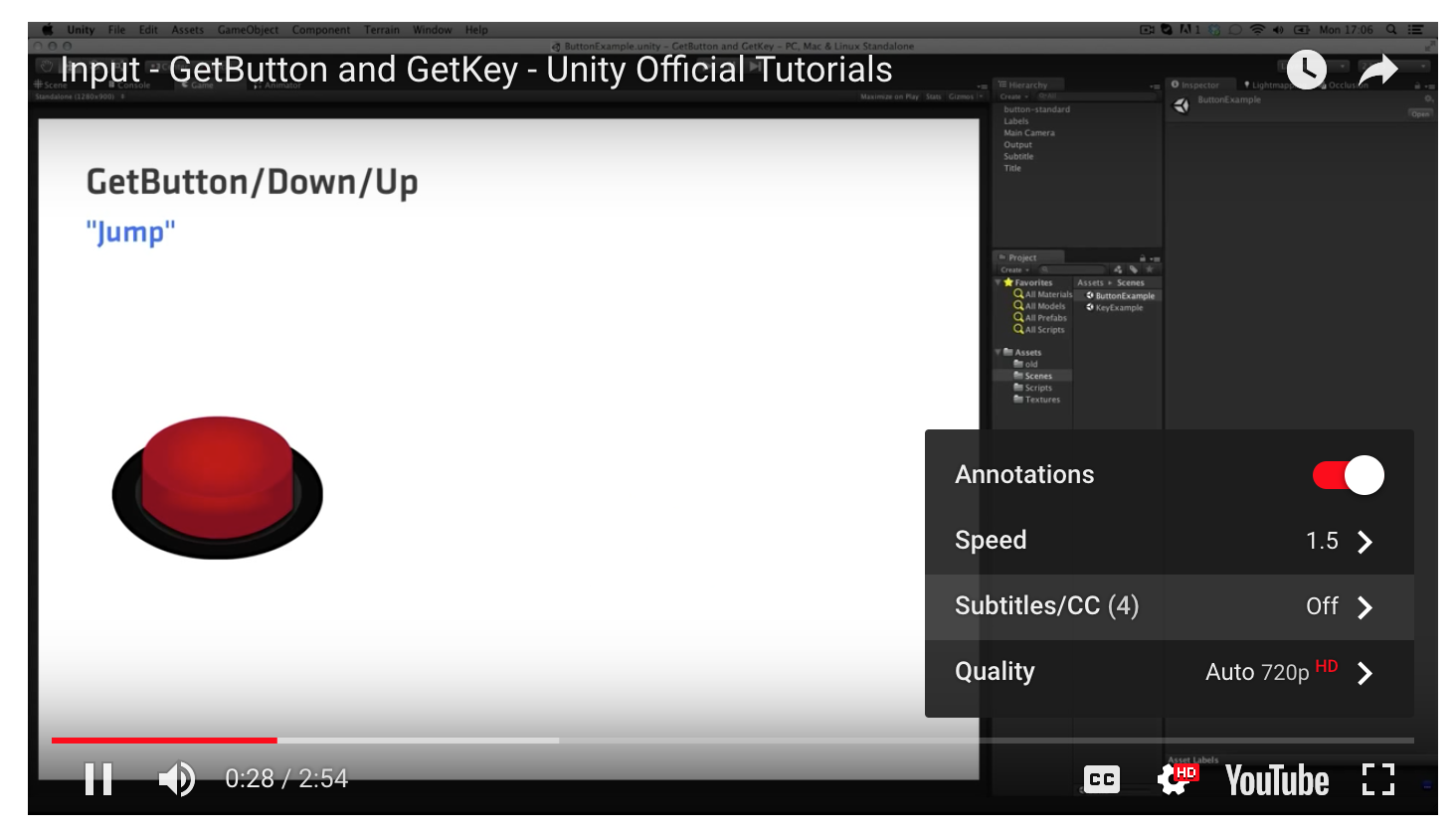

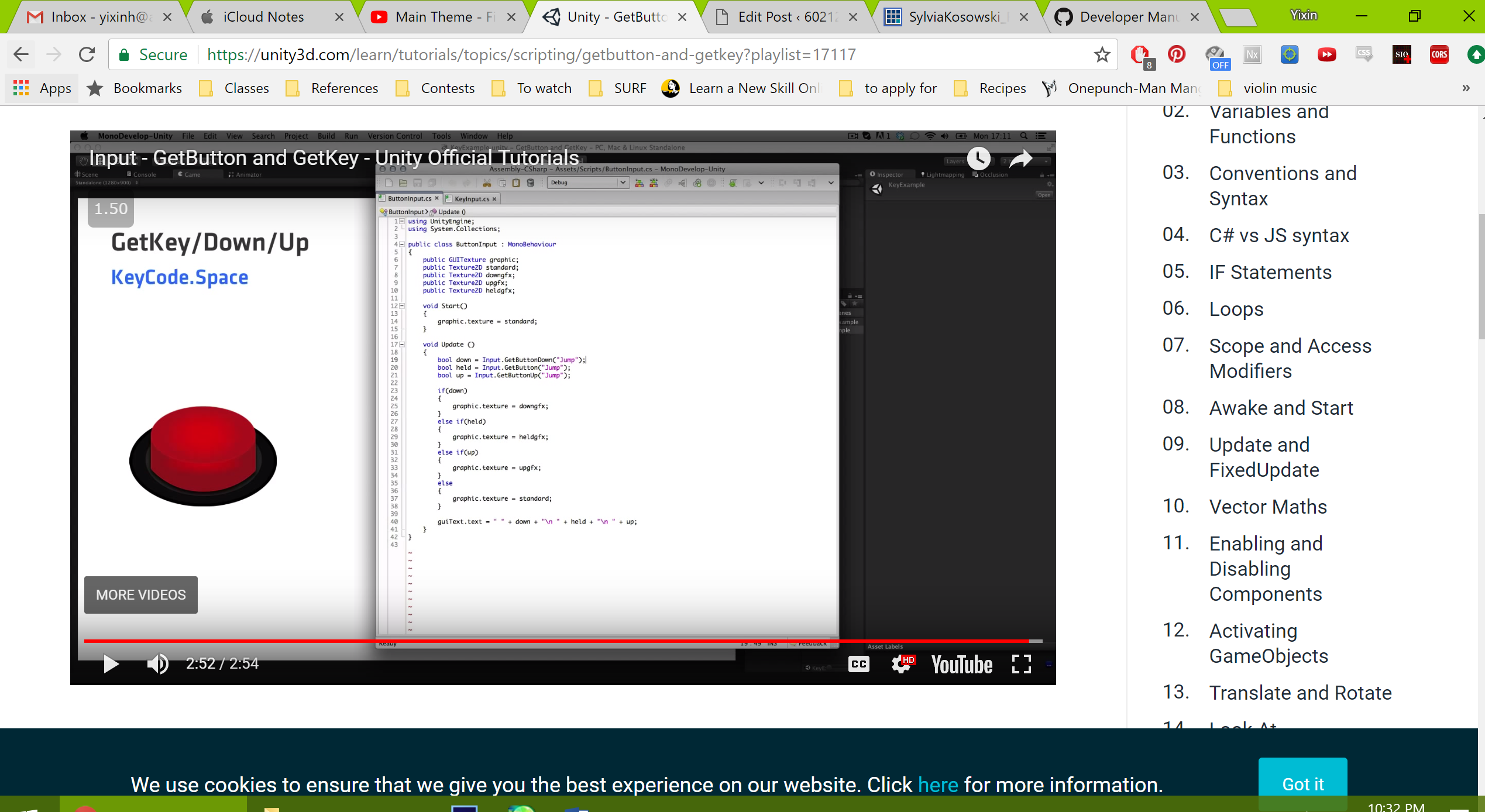

-GetButton and GetKey are probably things I’ll have to reference later.

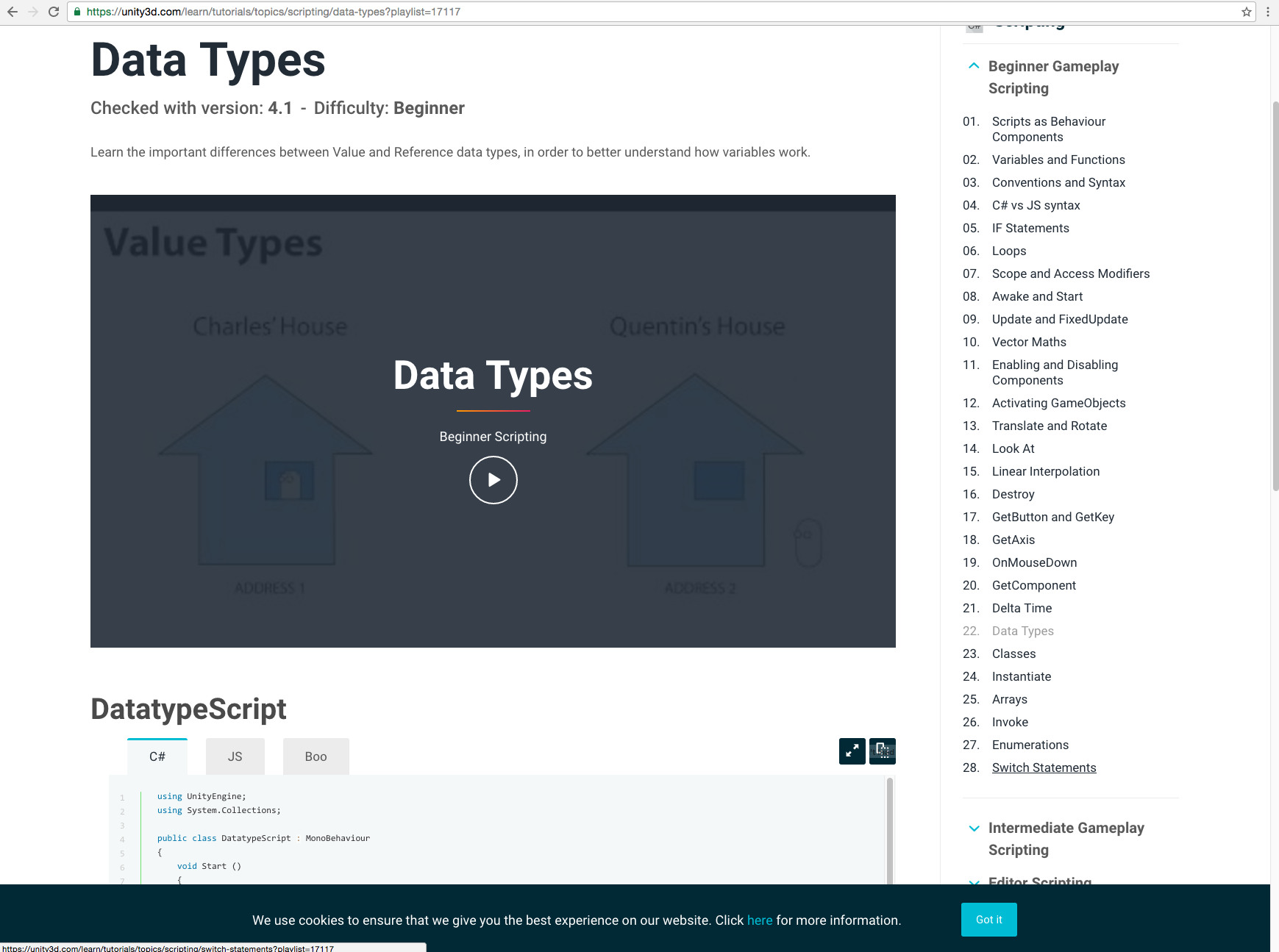

-The analogy for value vs reference types is very easy to understand and memorable haha
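The difference shows up as soon as you copy a variable. Copying a `struct` copies the data, while copying a `class` variable copies a reference to the same object. Here is a minimal plain-C# sketch, with hypothetical type names. In Unity, `Vector3` is a struct and `Transform` is a class, which is why editing a copy of `transform.position` does not move the object:

```csharp
public struct PointValue { public int X; }
public class PointRef { public int X; }

public static class ValueVsReference
{
    // Copies a struct and a class instance, mutates each copy,
    // and reports what the originals look like afterwards.
    public static (int structOriginal, int classOriginal) Demo()
    {
        var v1 = new PointValue { X = 1 };
        var v2 = v1;   // copies the whole value
        v2.X = 99;     // v1 is untouched

        var r1 = new PointRef { X = 1 };
        var r2 = r1;   // copies the reference; both names point at one object
        r2.X = 99;     // so r1 sees the change

        return (v1.X, r1.X);
    }
}
```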

-The use of the colon to change the type is like in SML

Part 1

Part 2

Part 3

Part 4

Part 5

Part 6

Part 7

Part 8

Part 9

Part 10

Part 11

Part 12

Part 13

Part 14

Part 15

Part 16

Part 17

Part 18

Part 19

Part 20

Part 21

Part 22

Part 23

Part 24

Part 25

Part 26

Part 27

Part 28

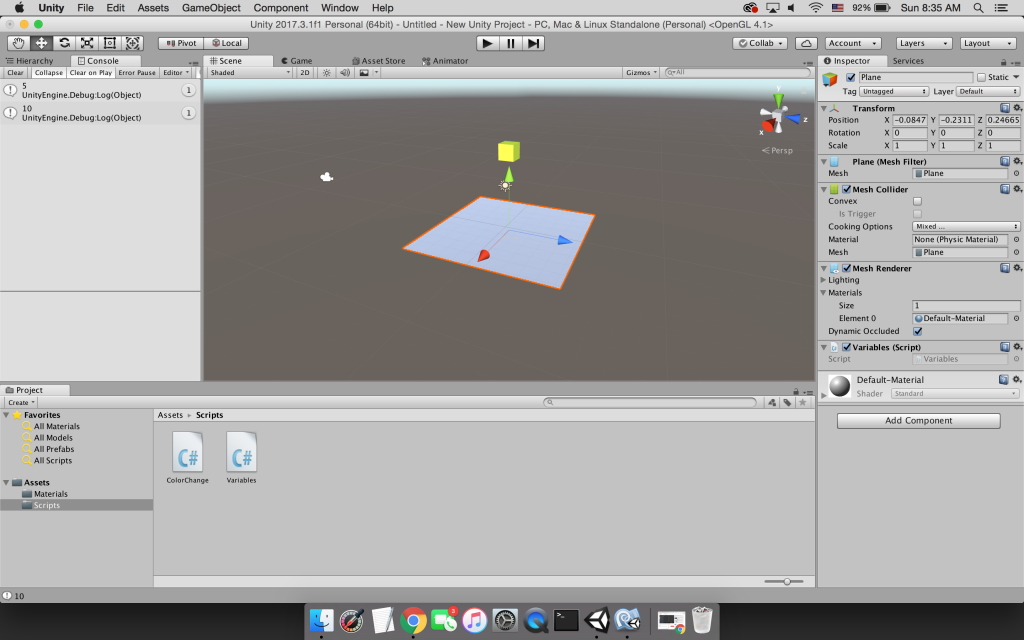

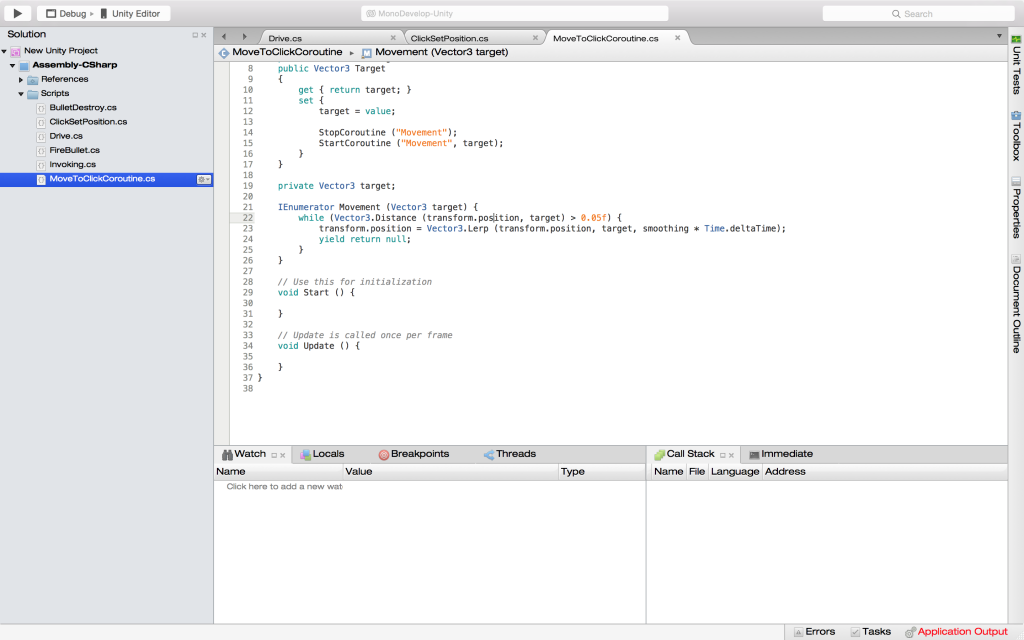

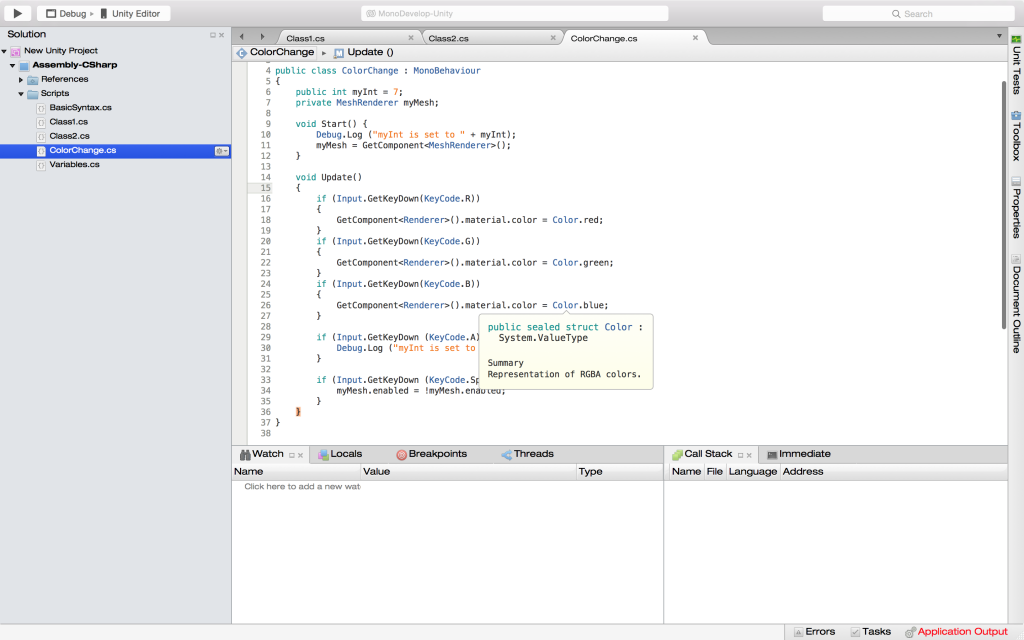

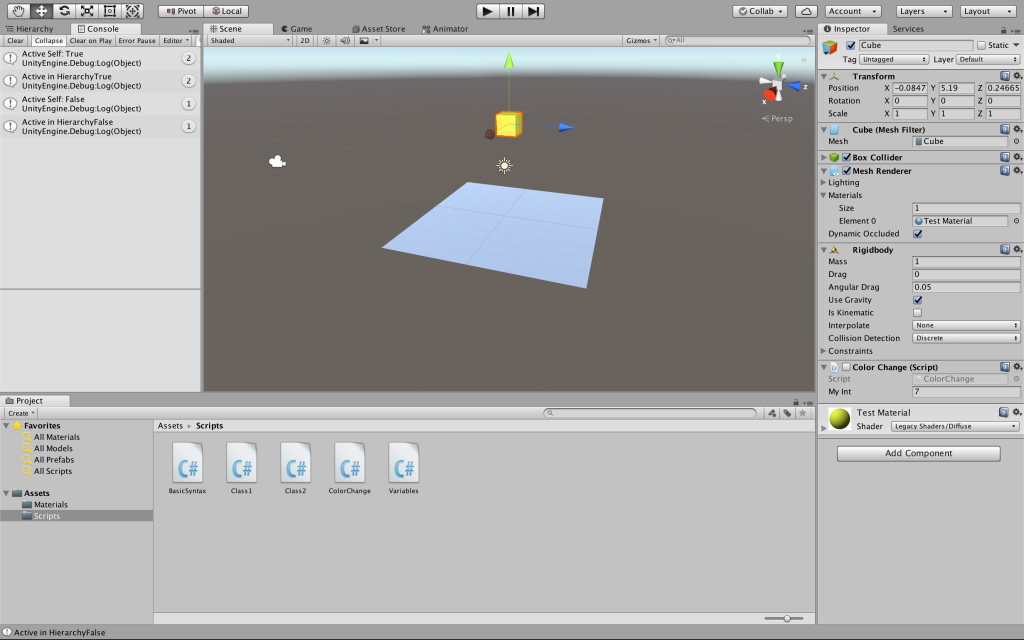

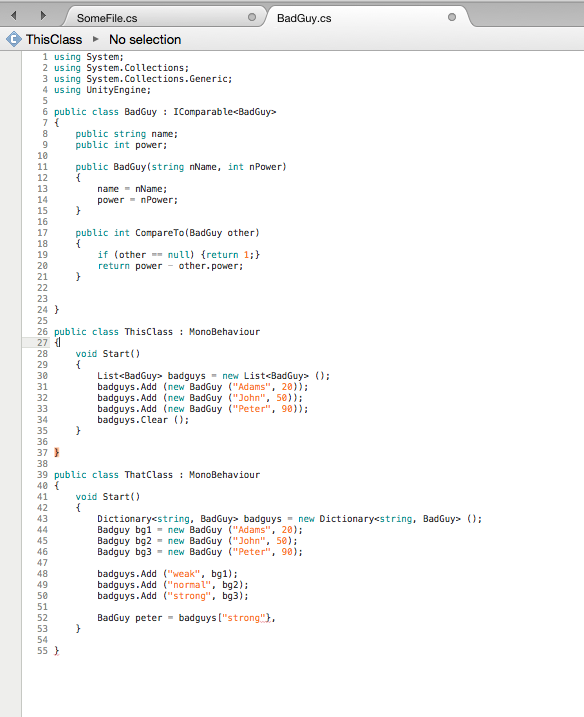

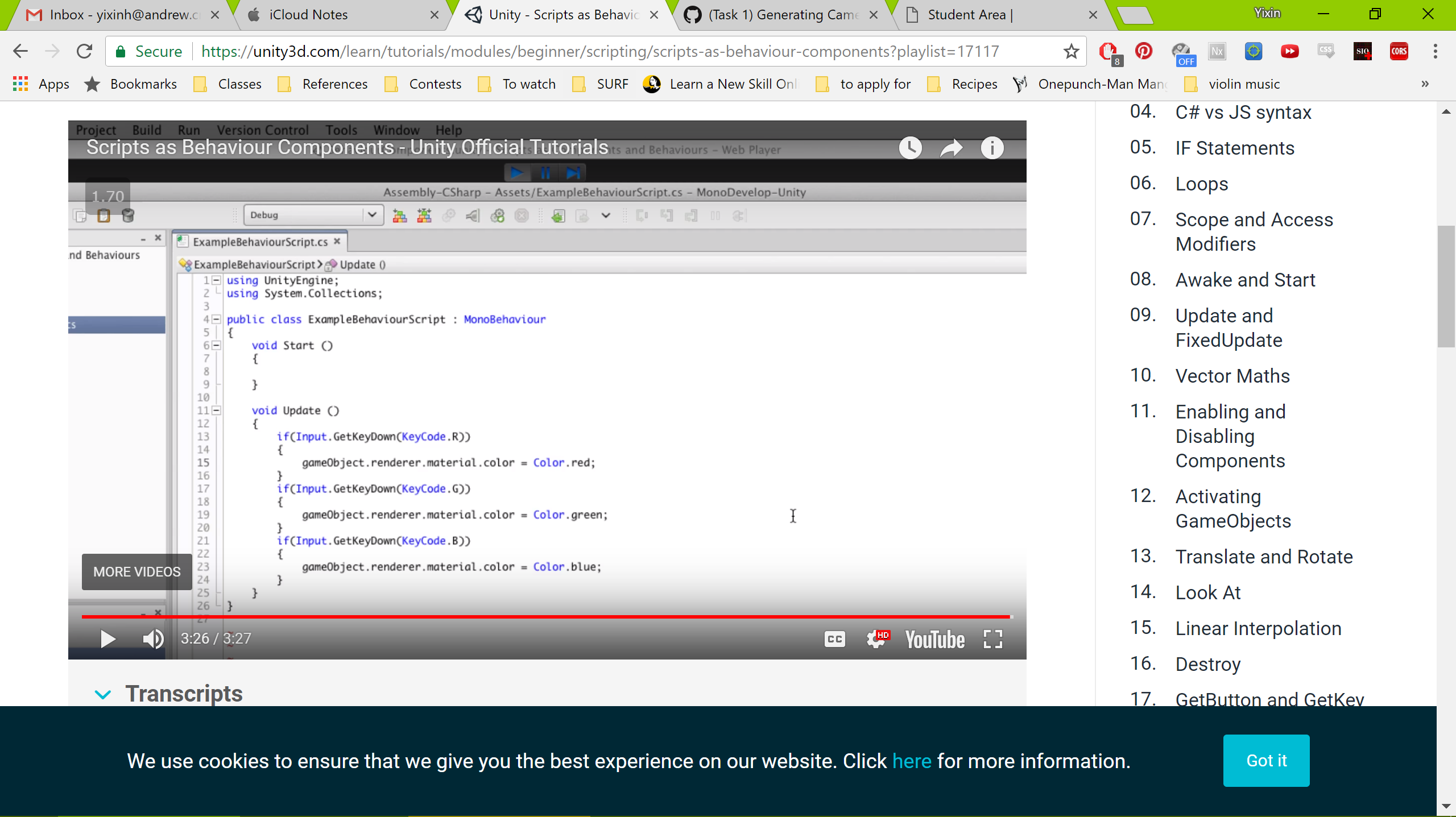

ookey-Unity2

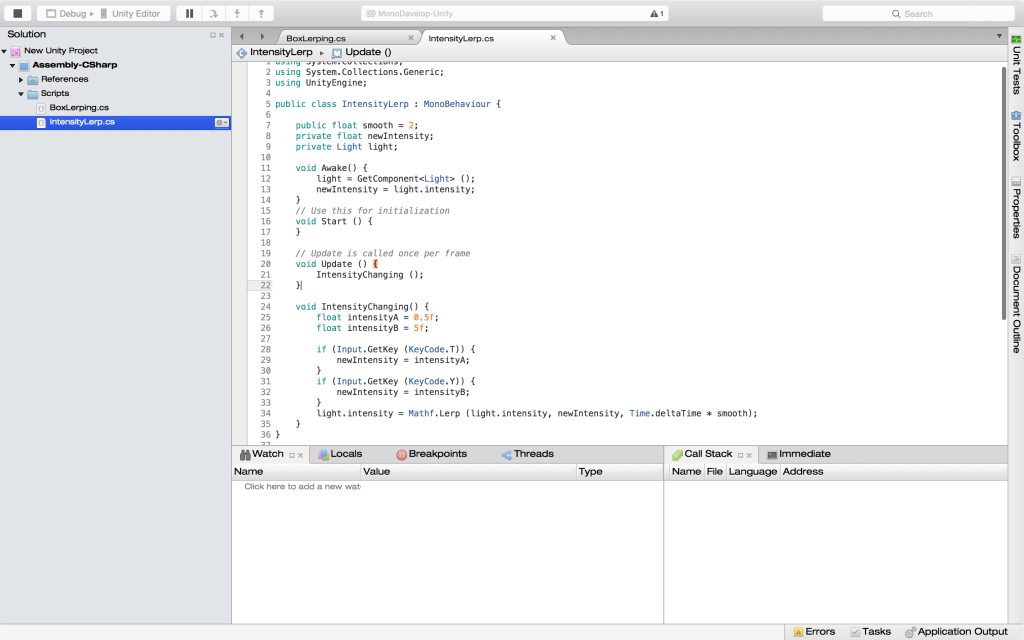

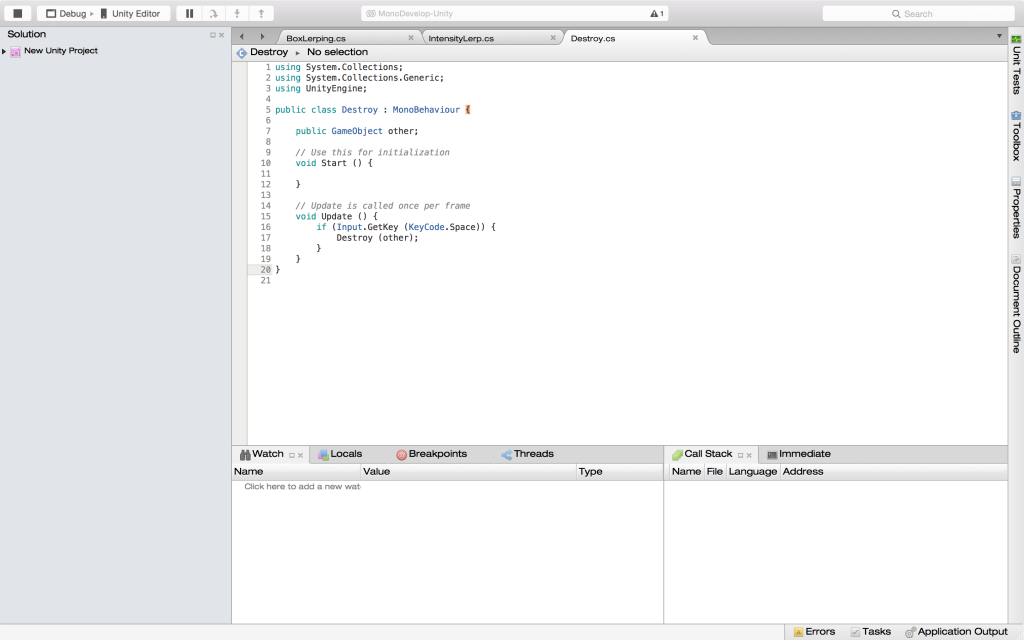

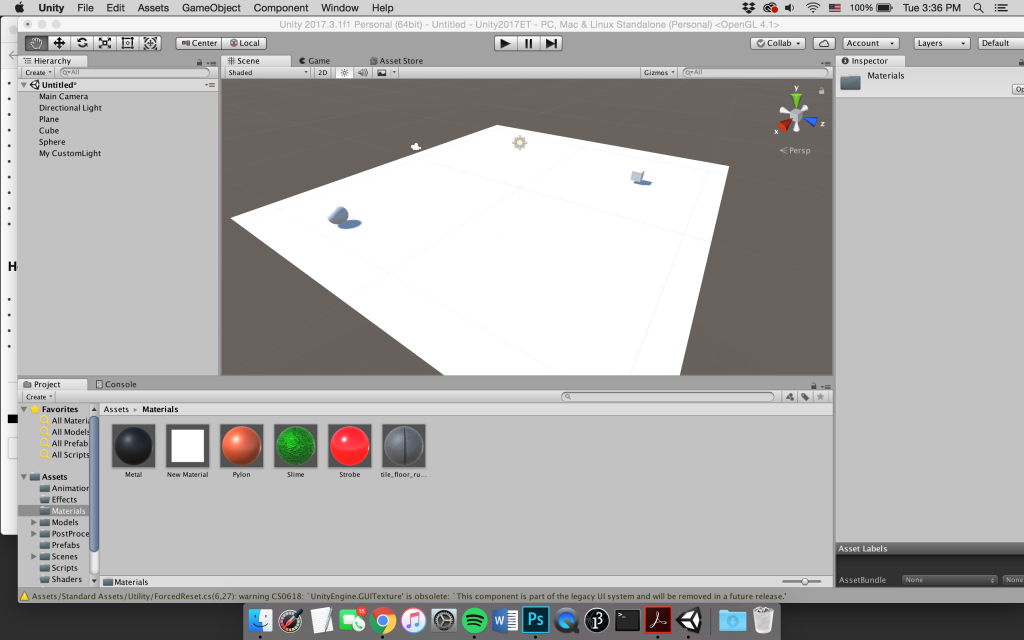

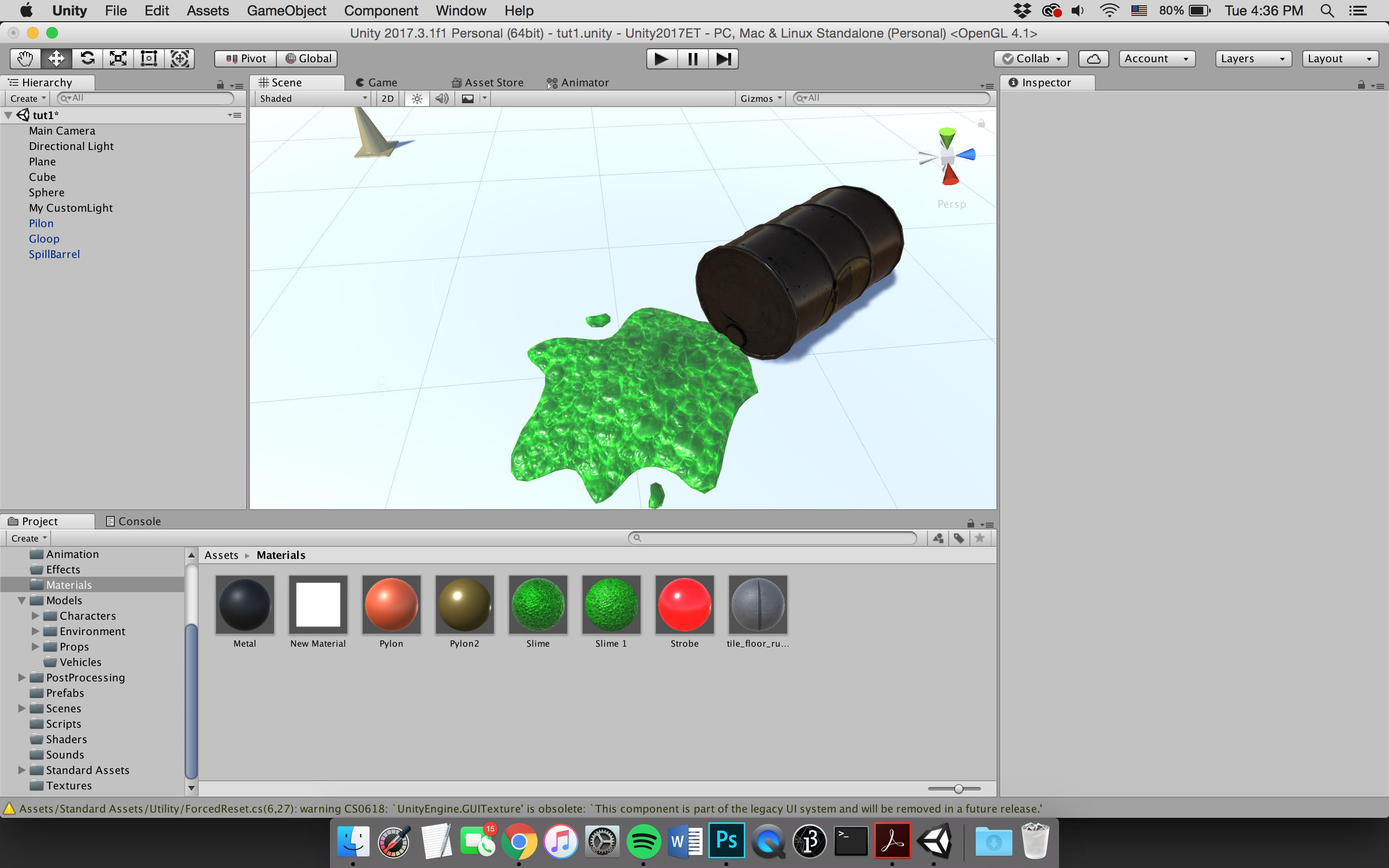

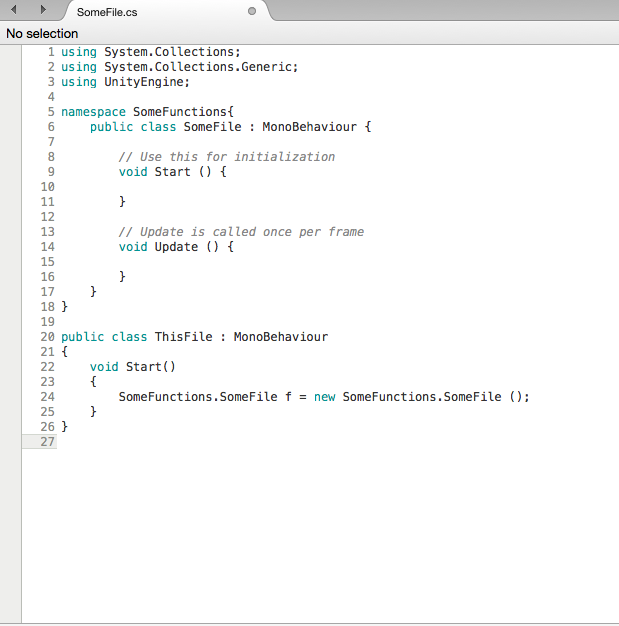

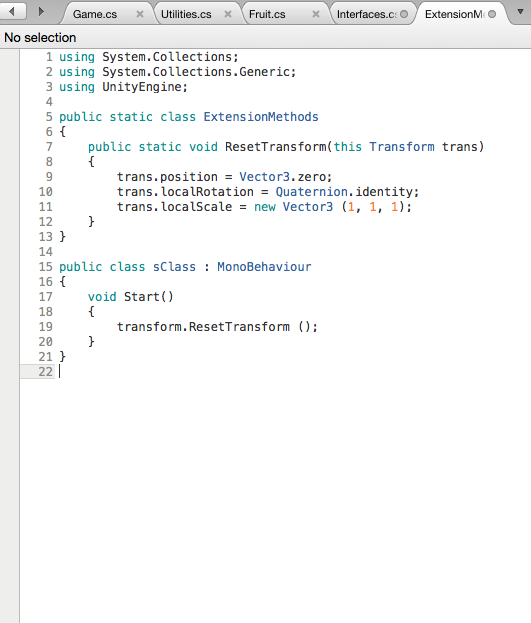

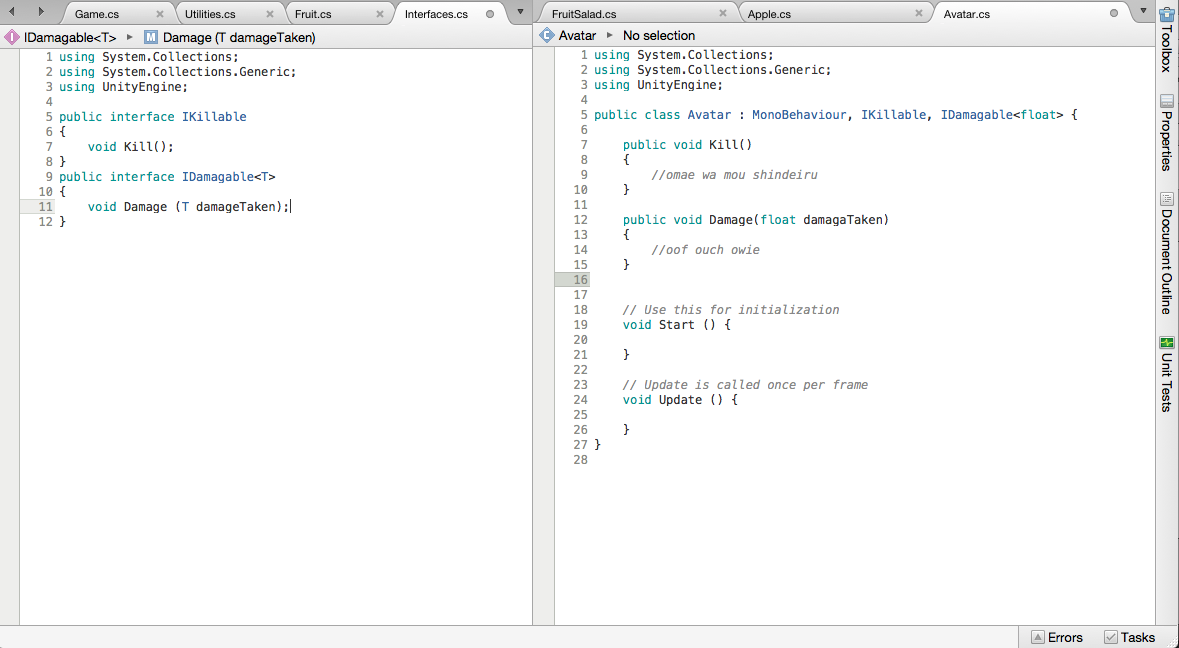

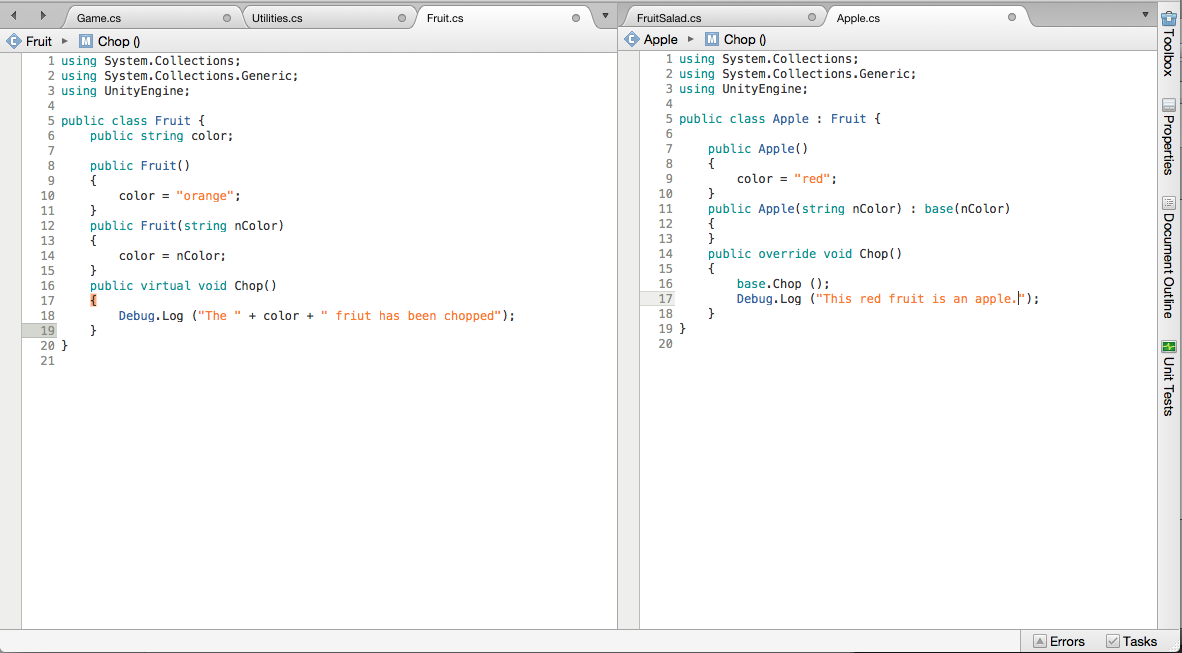

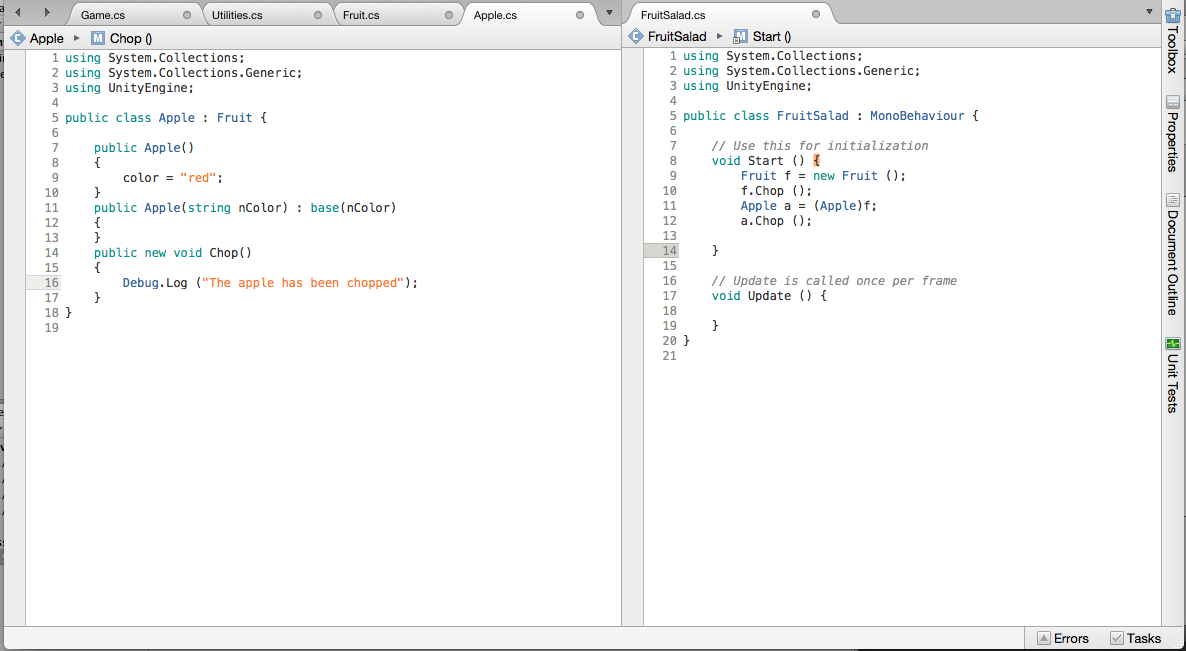

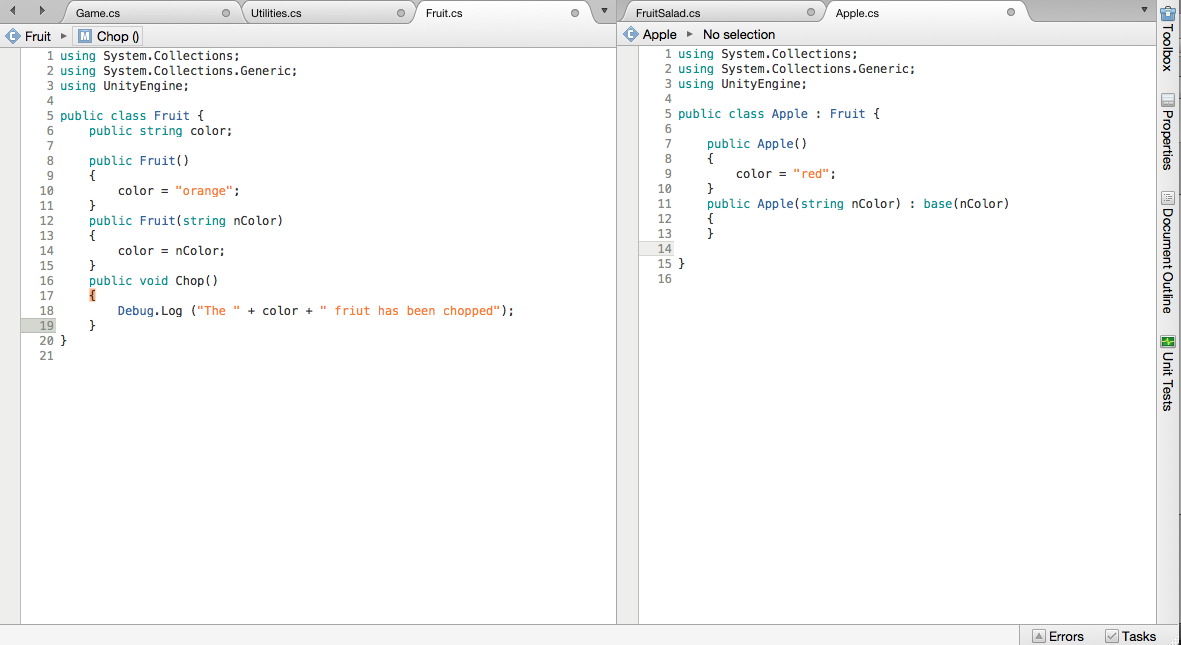

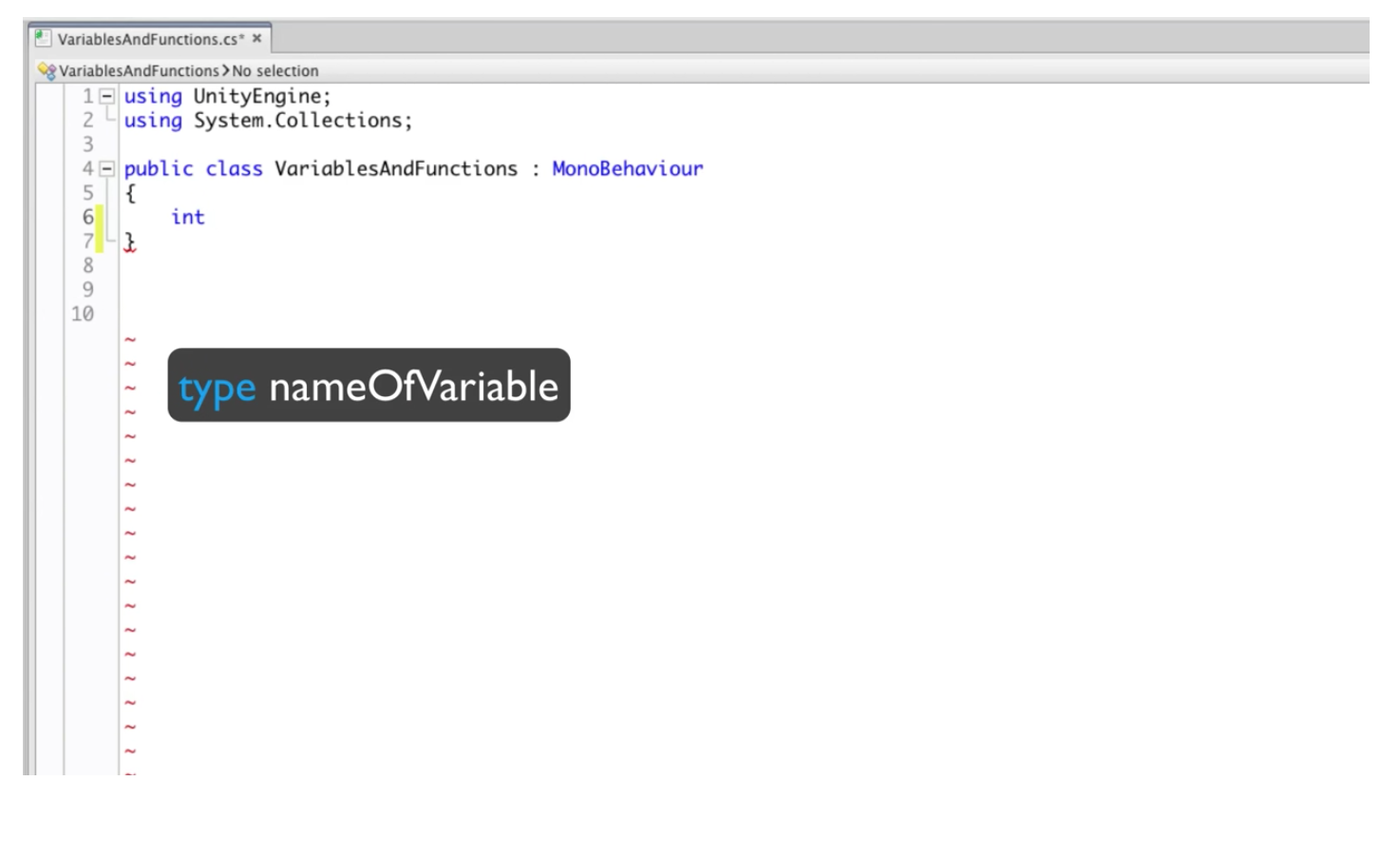

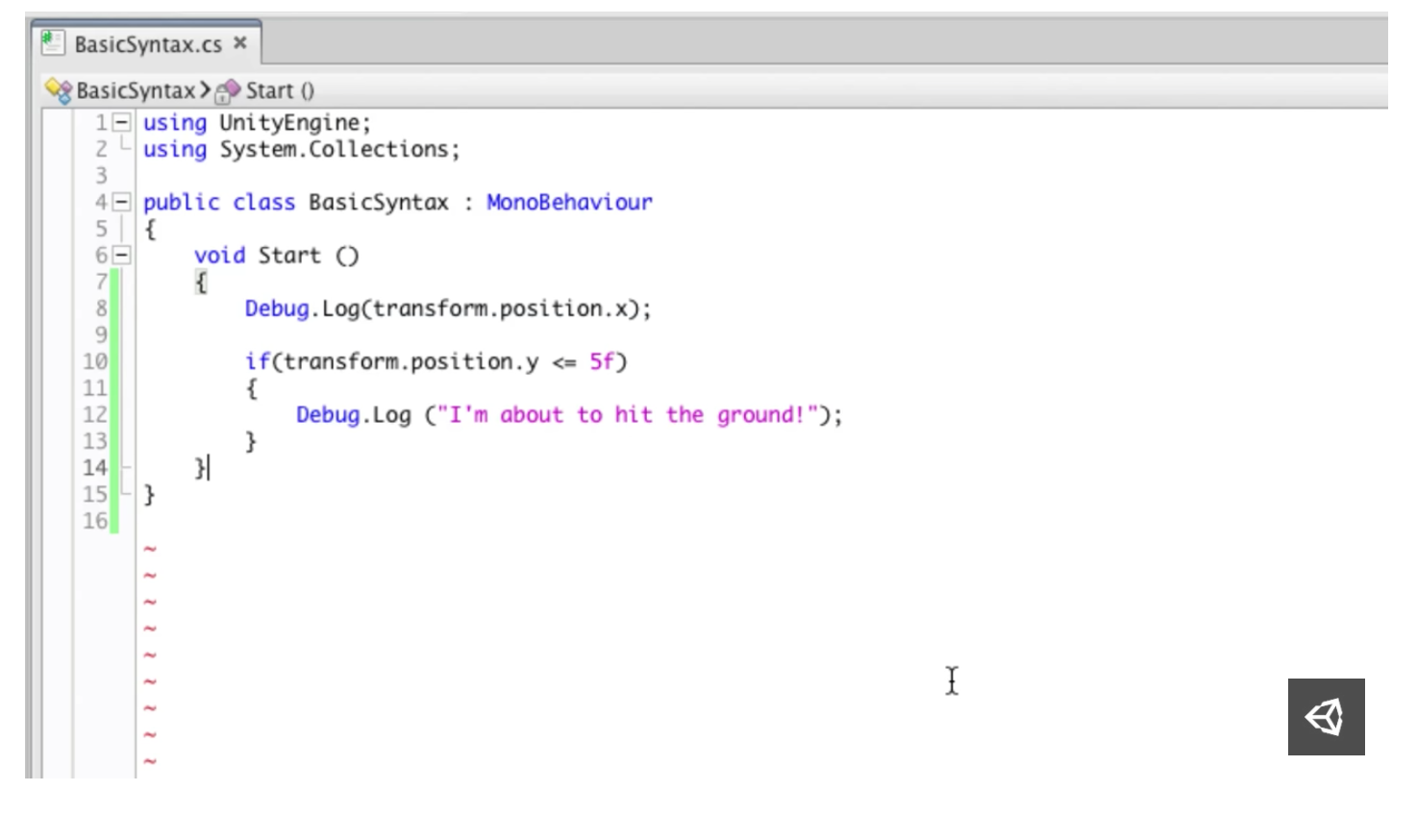

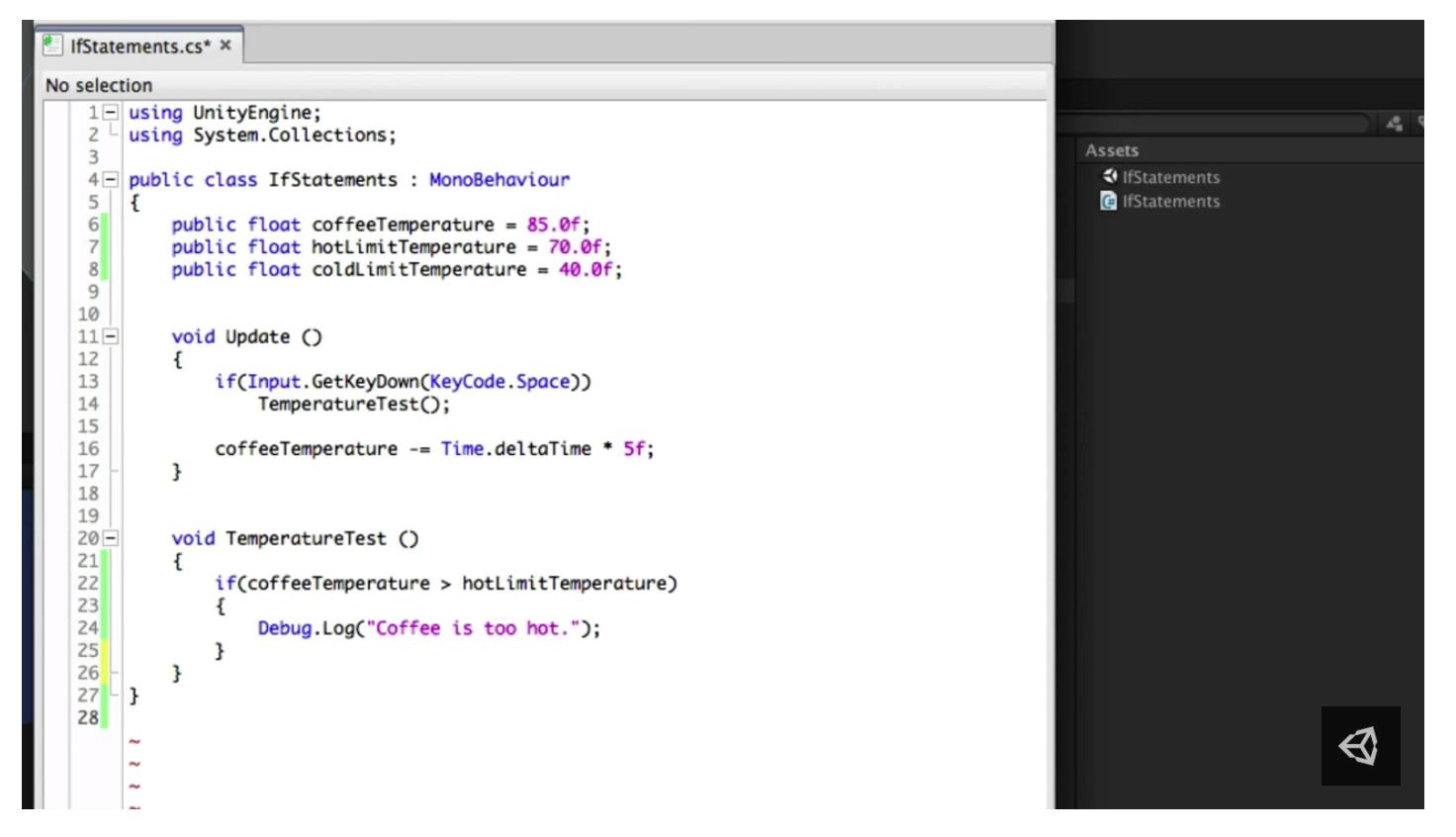

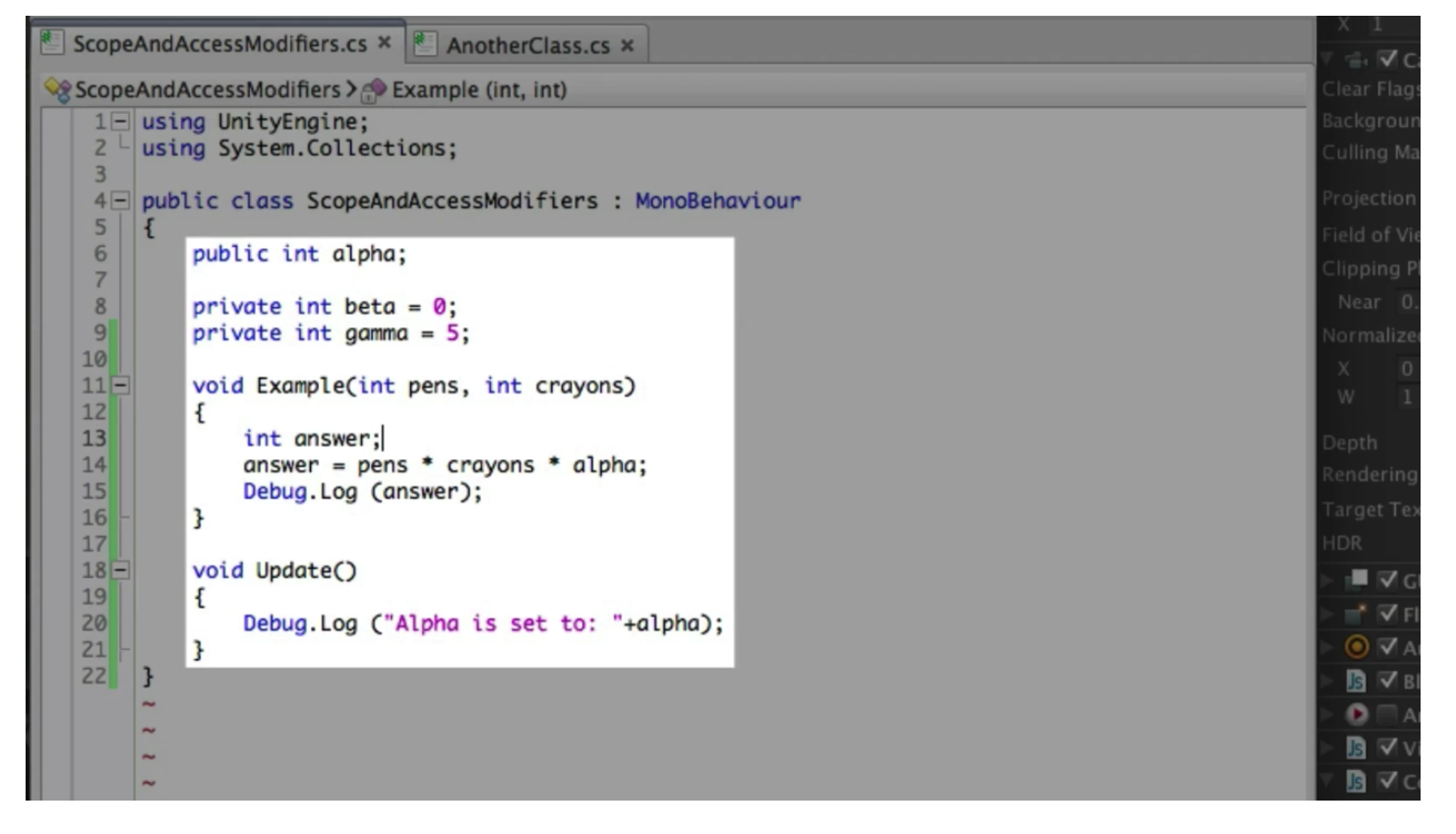

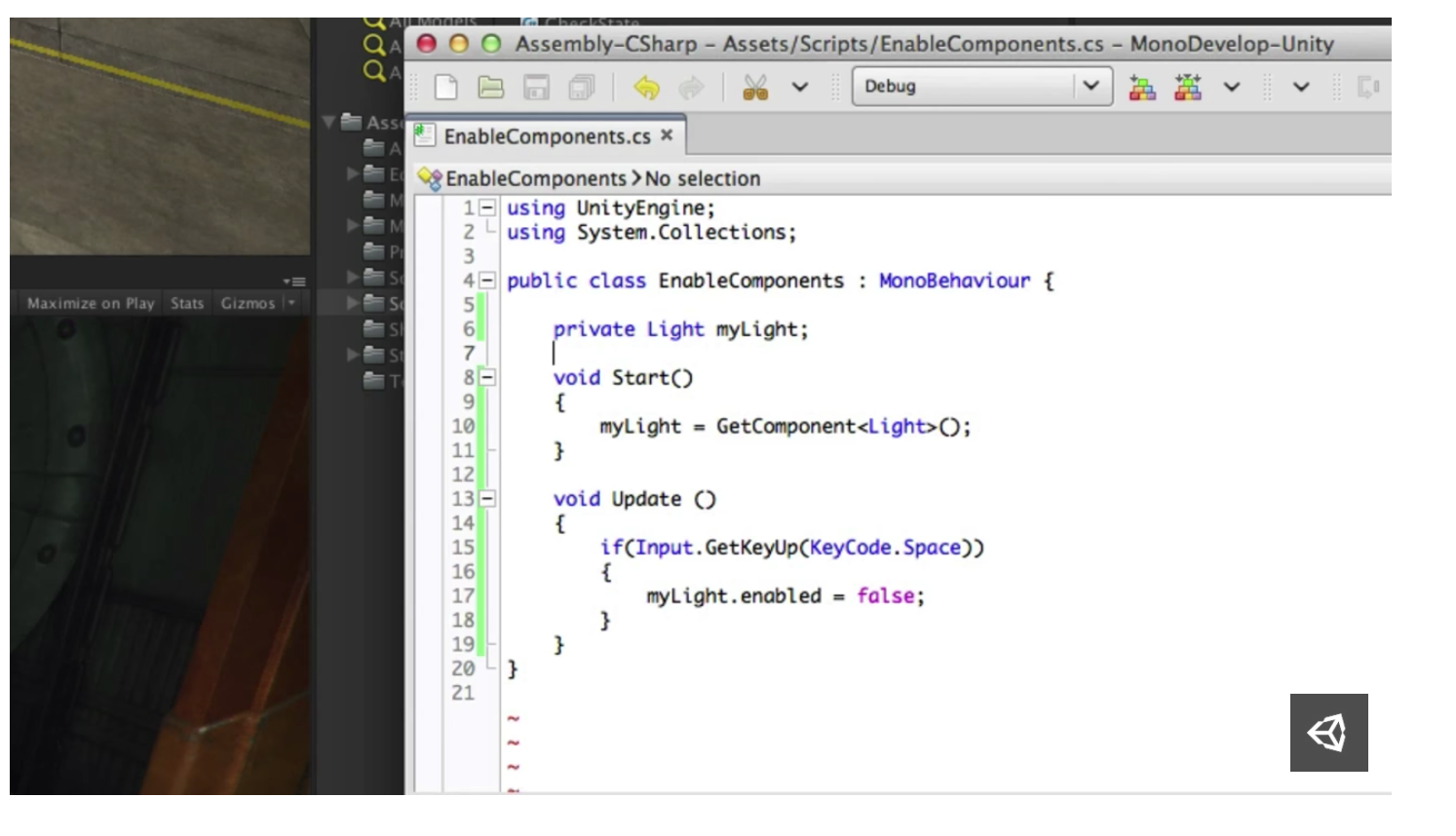

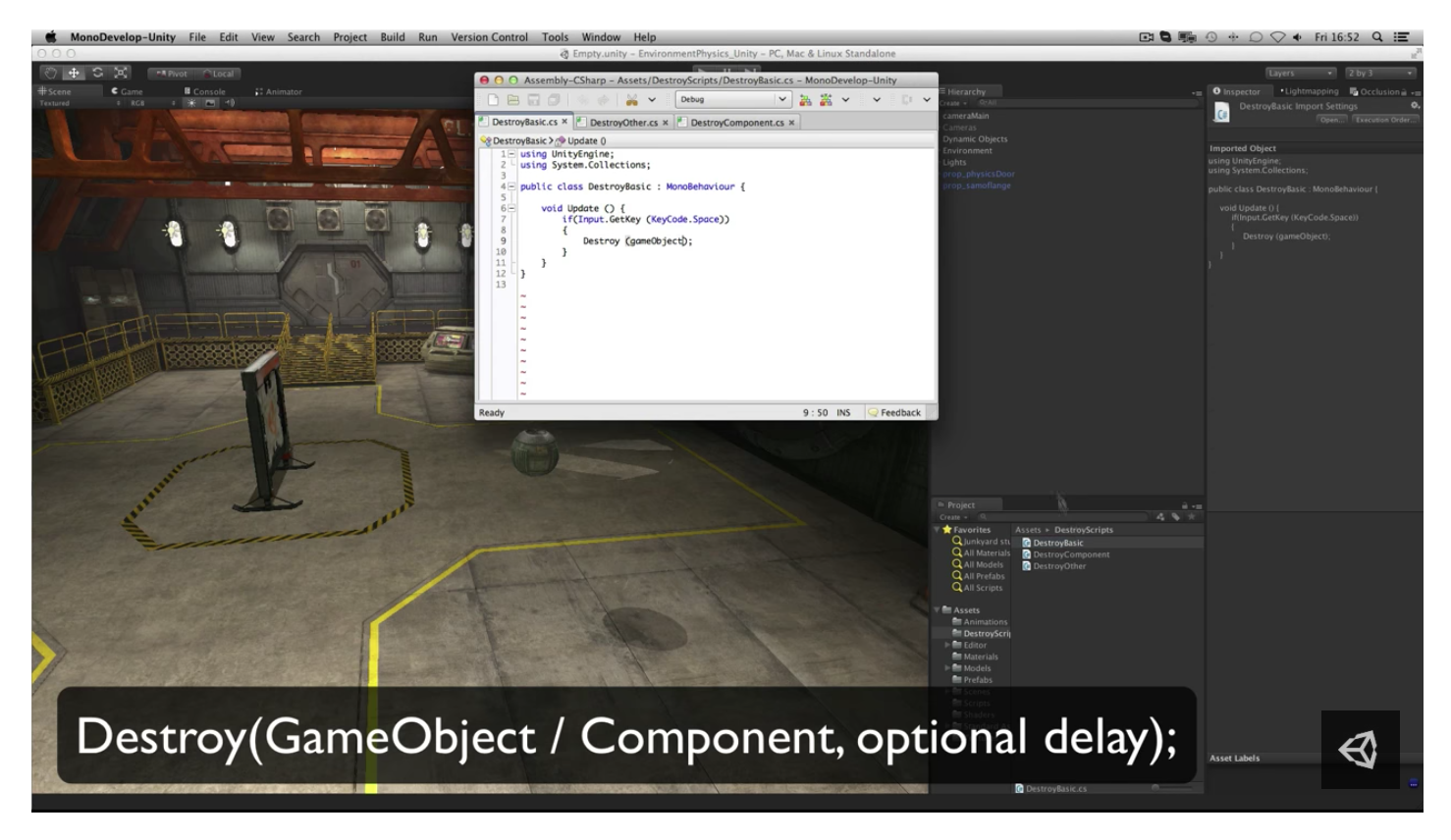

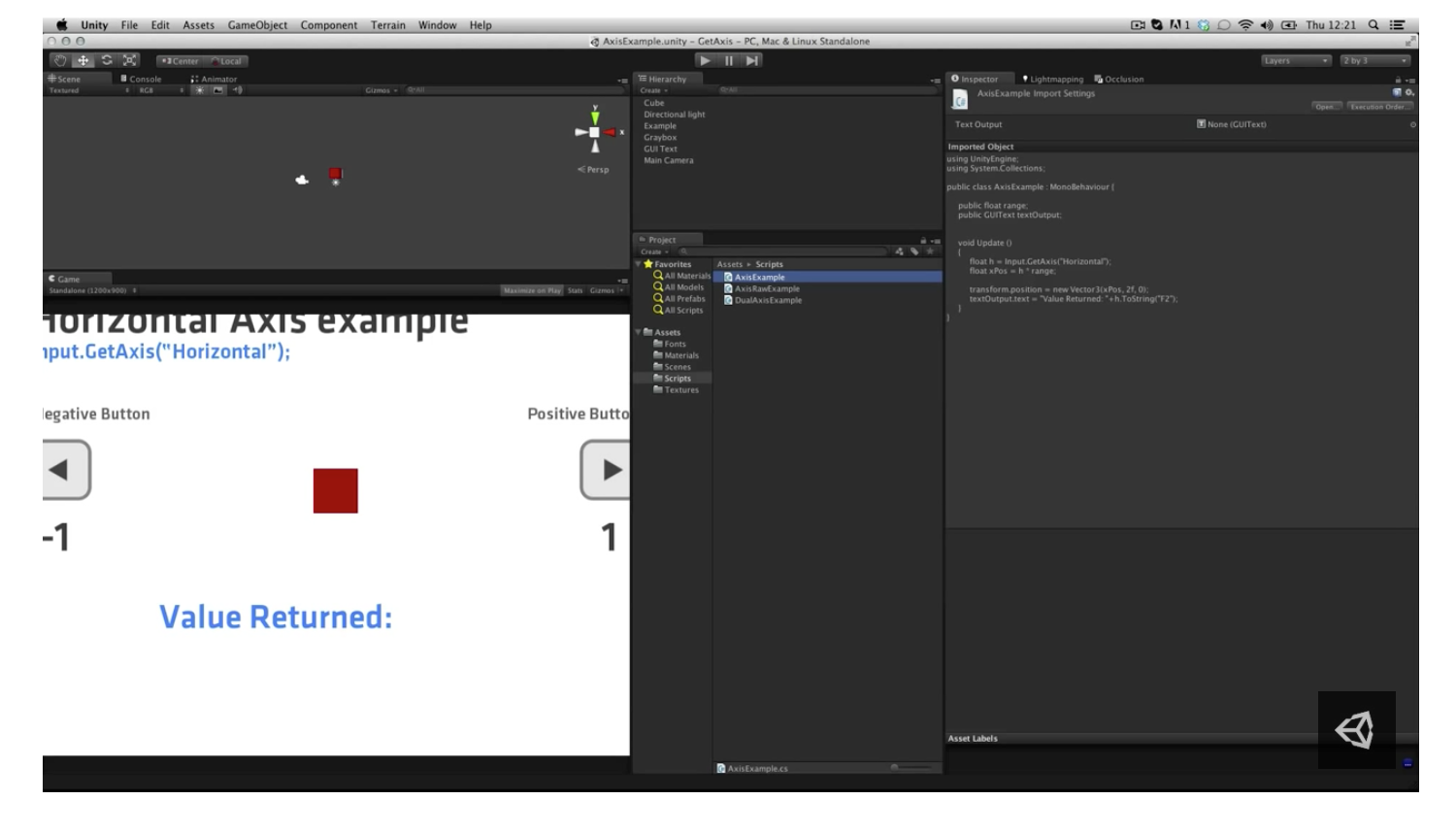

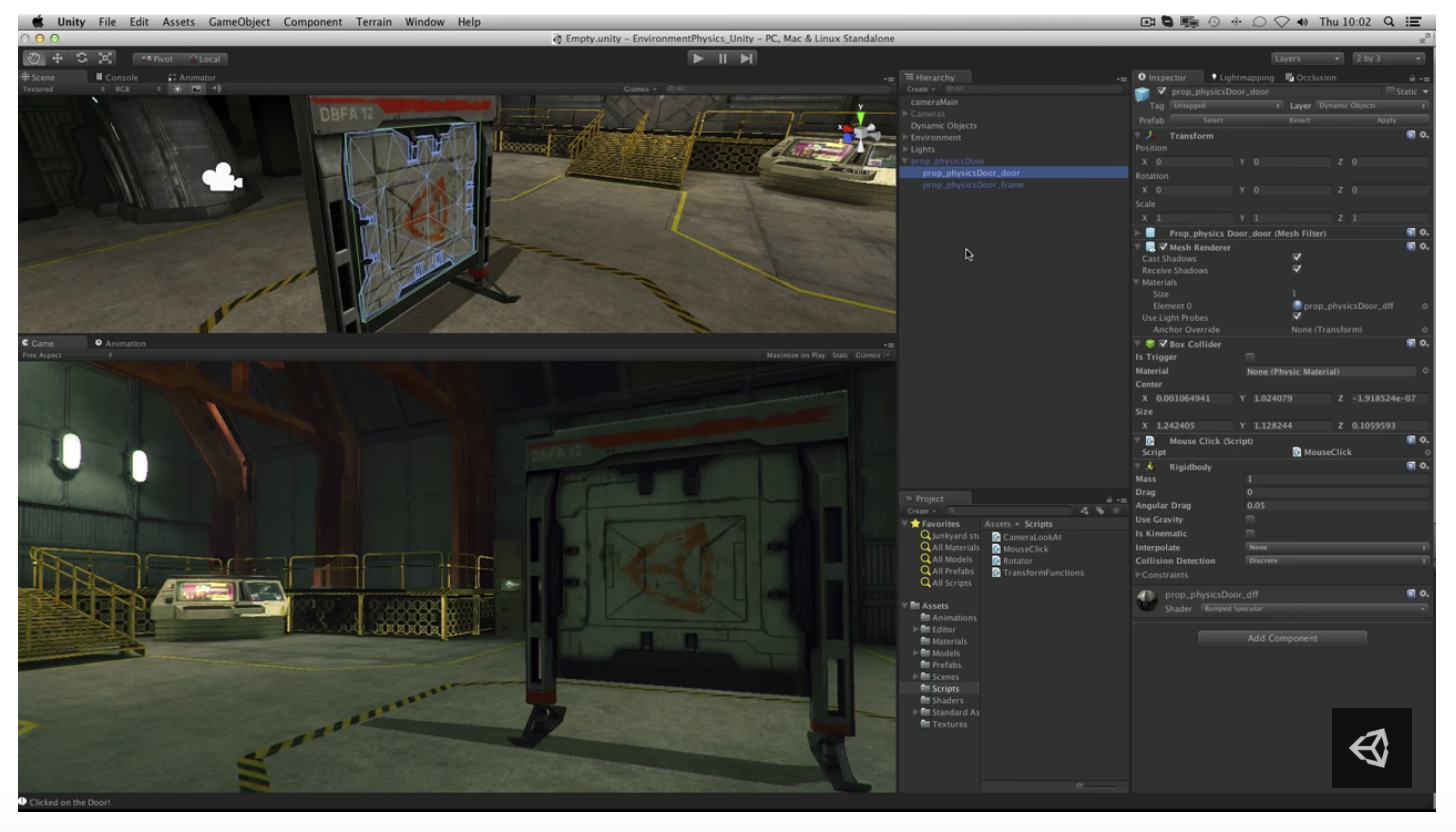

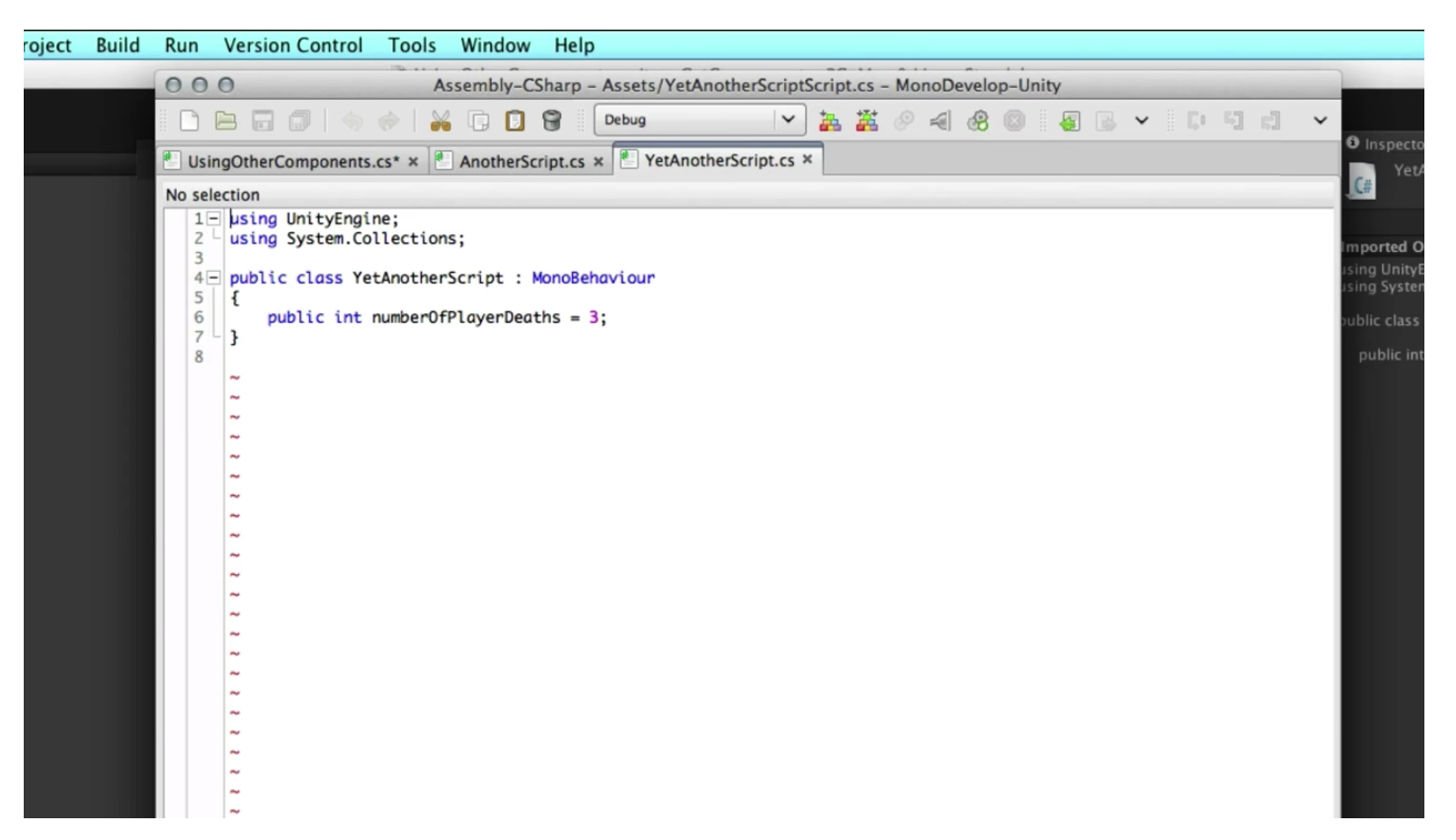

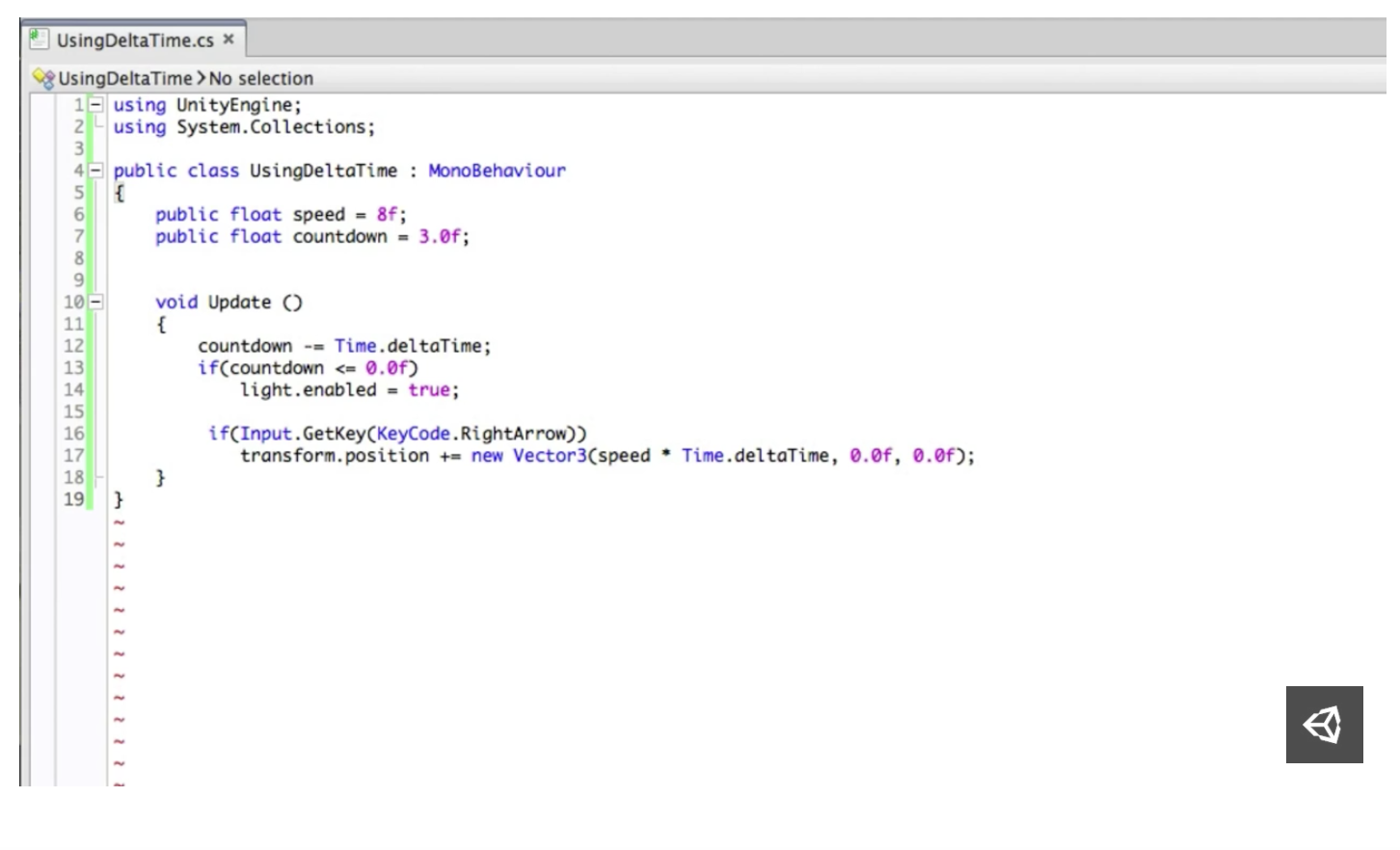

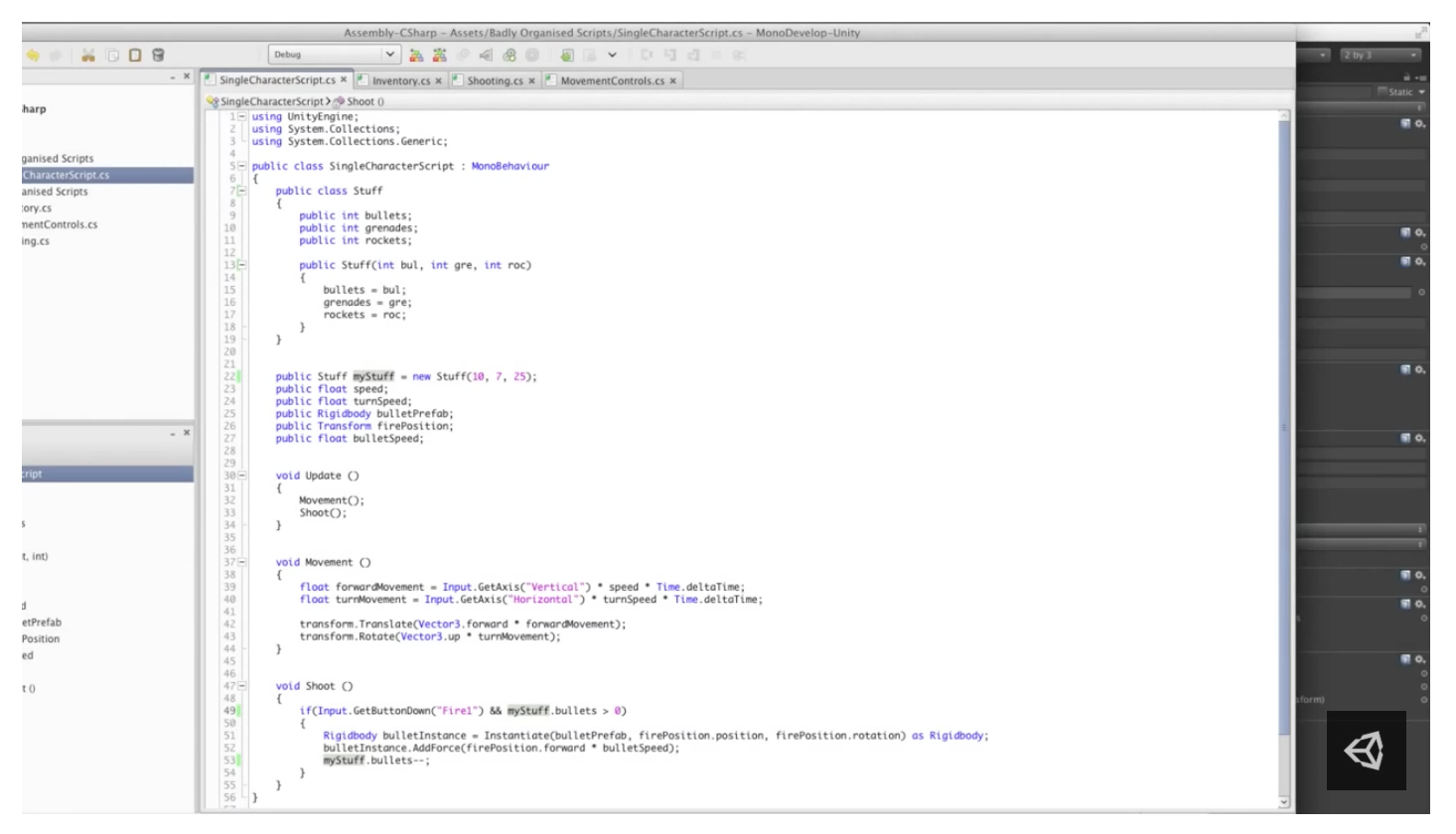

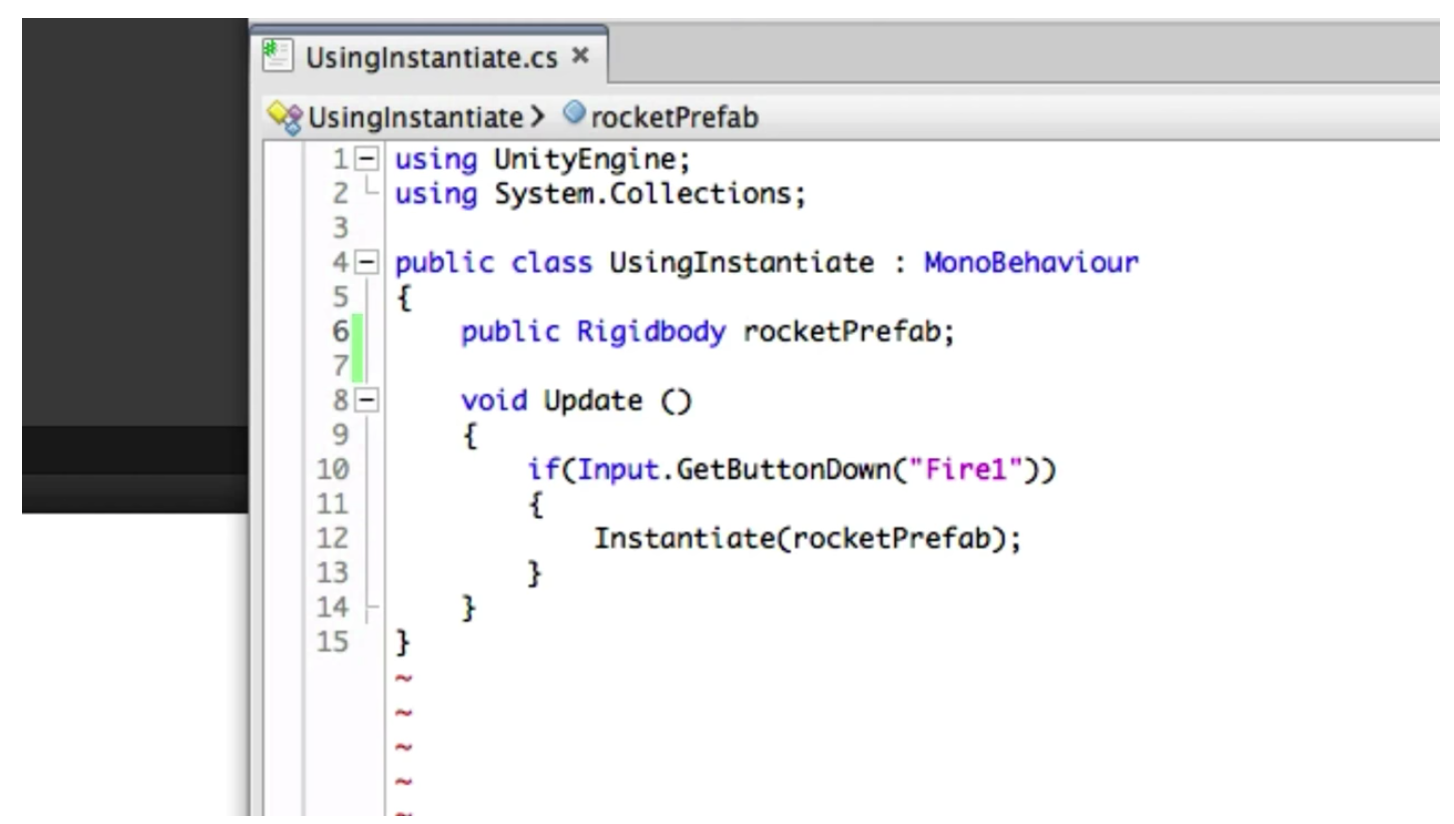

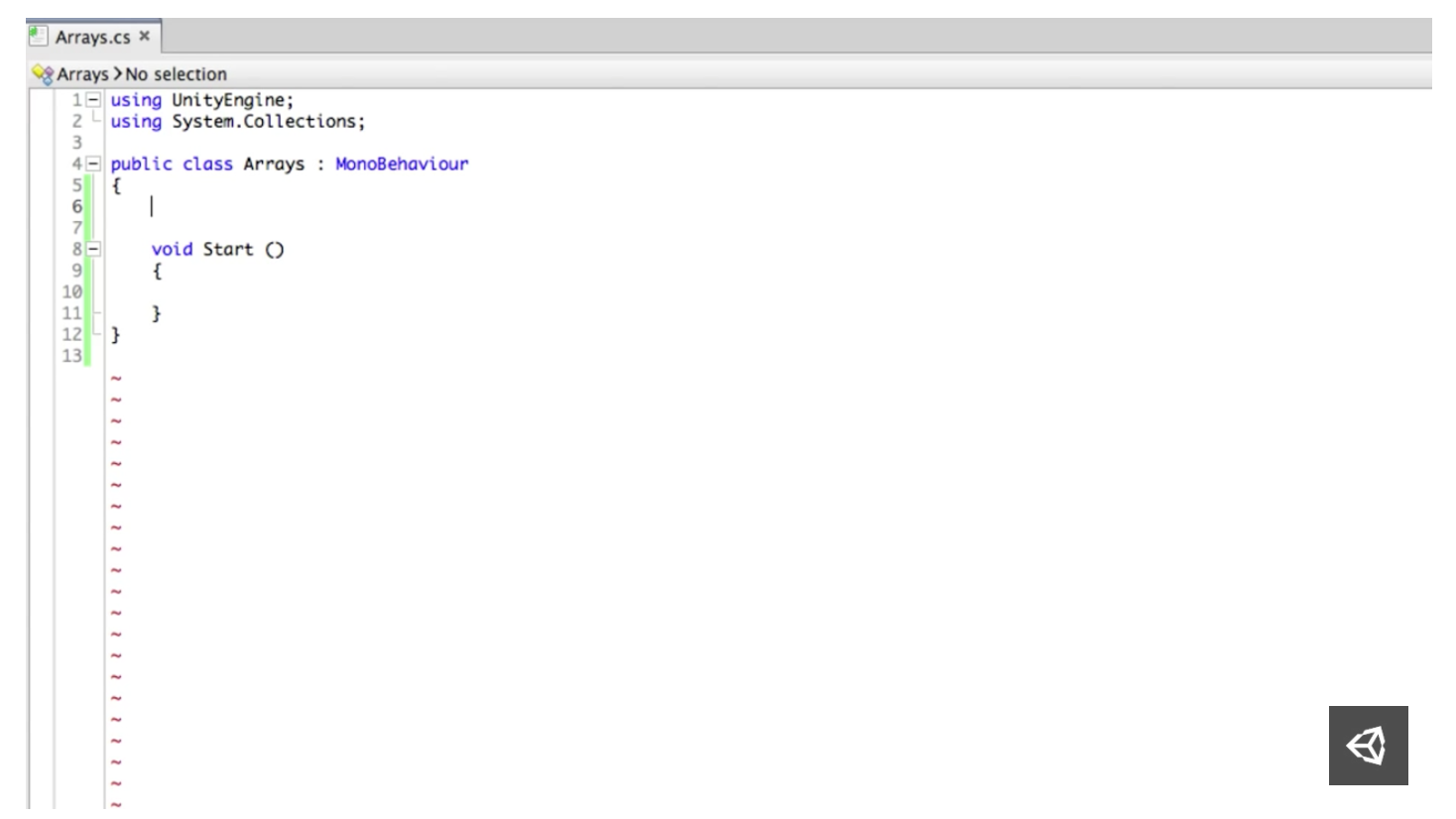

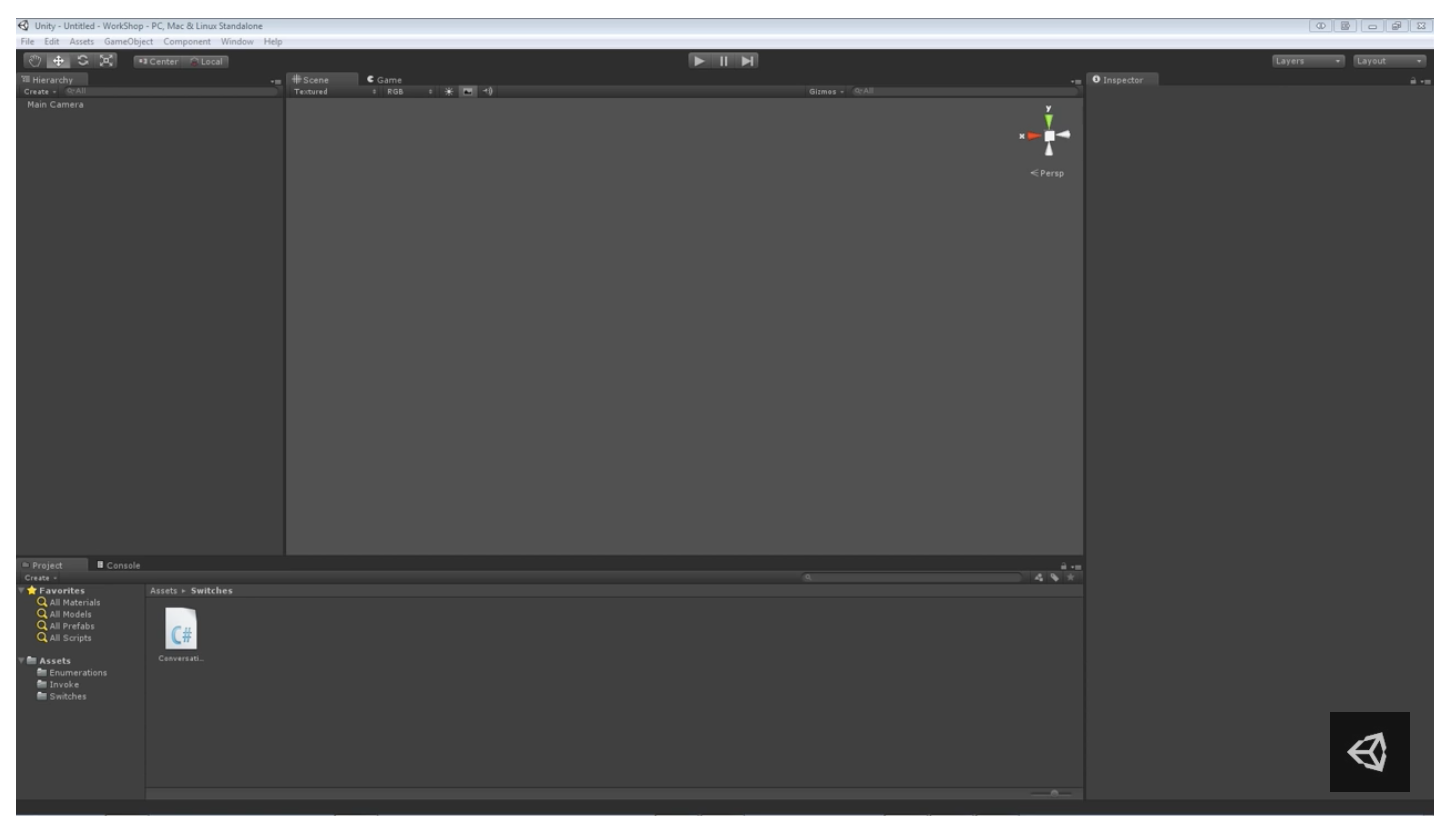

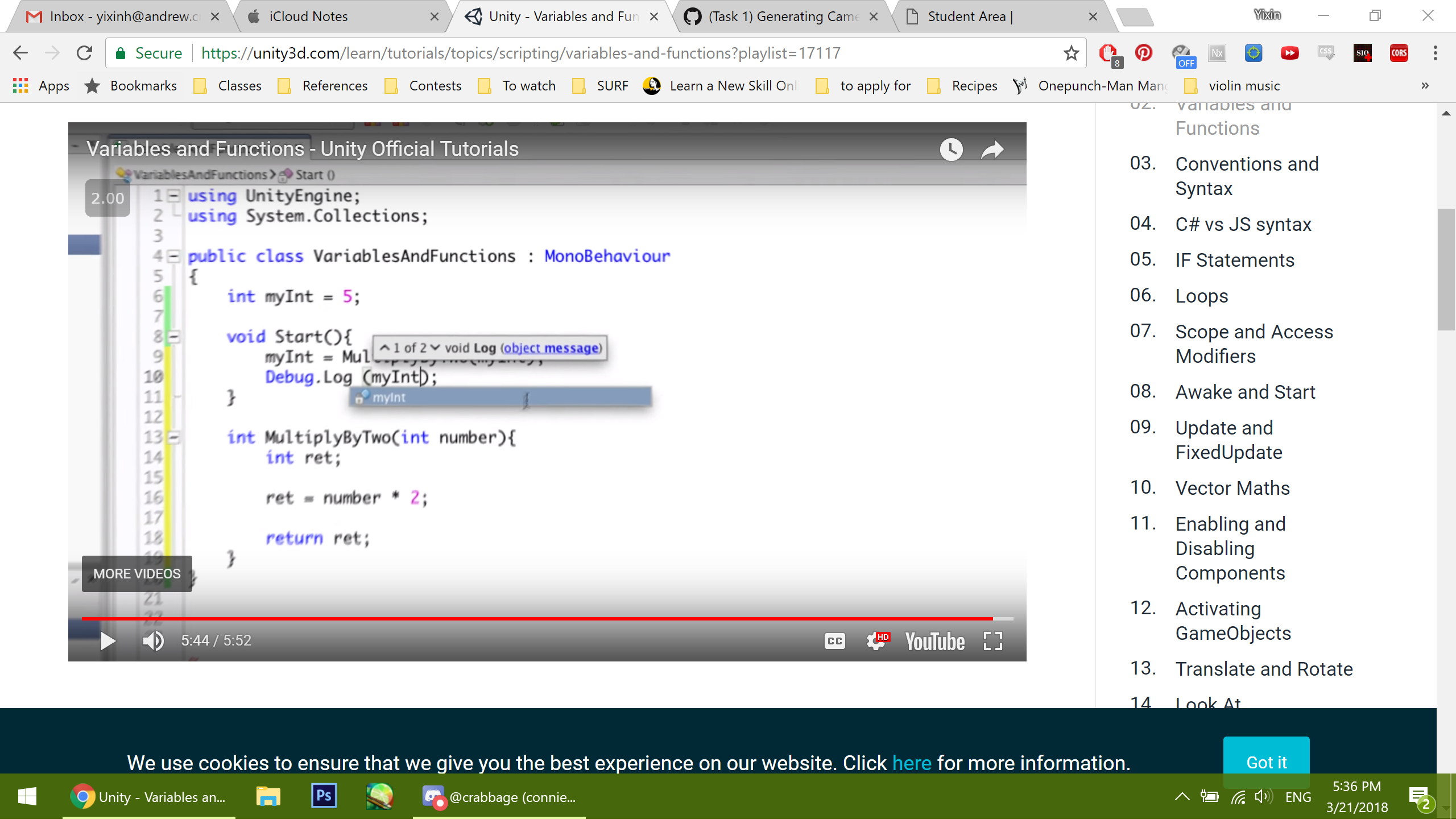

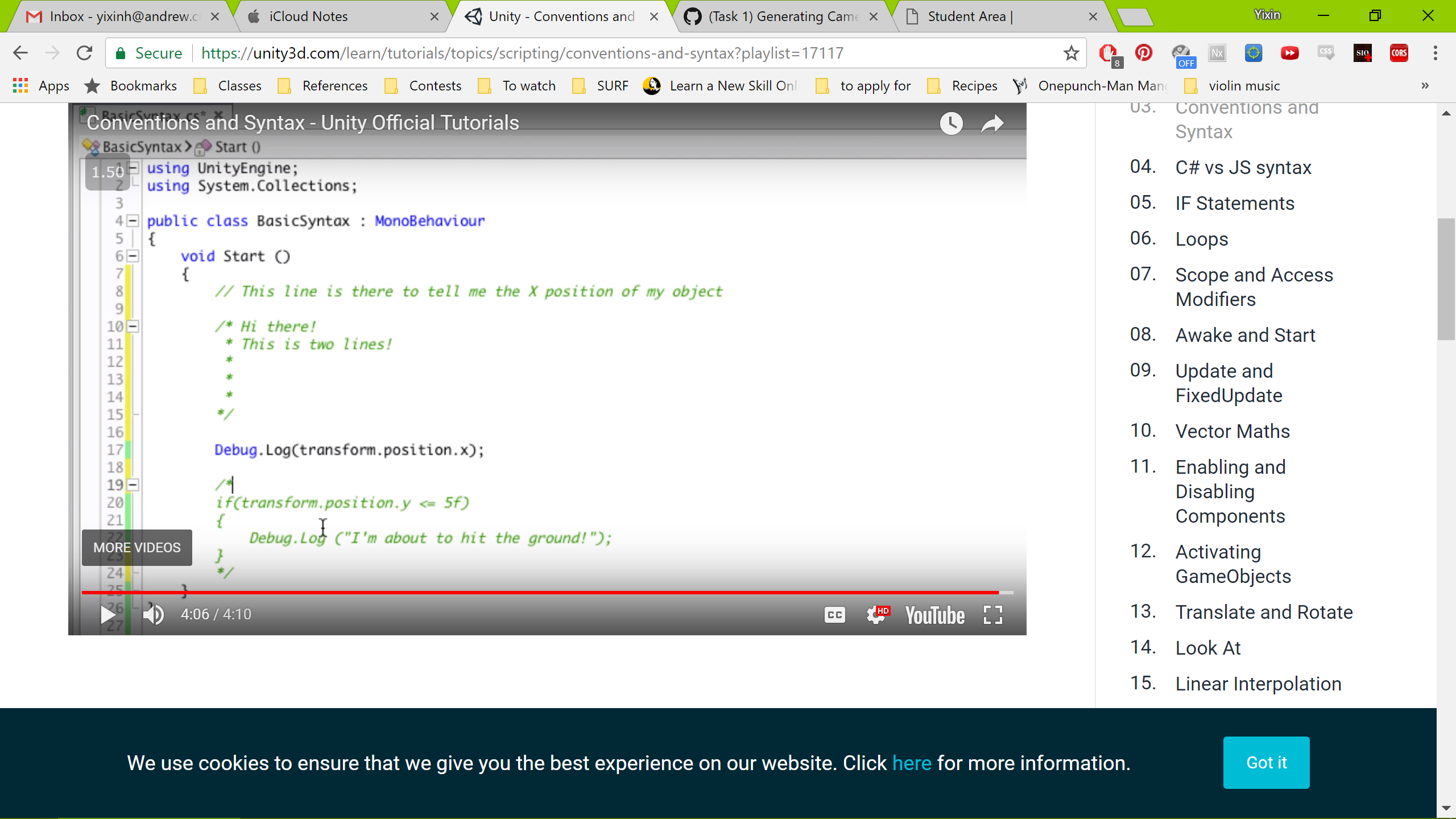

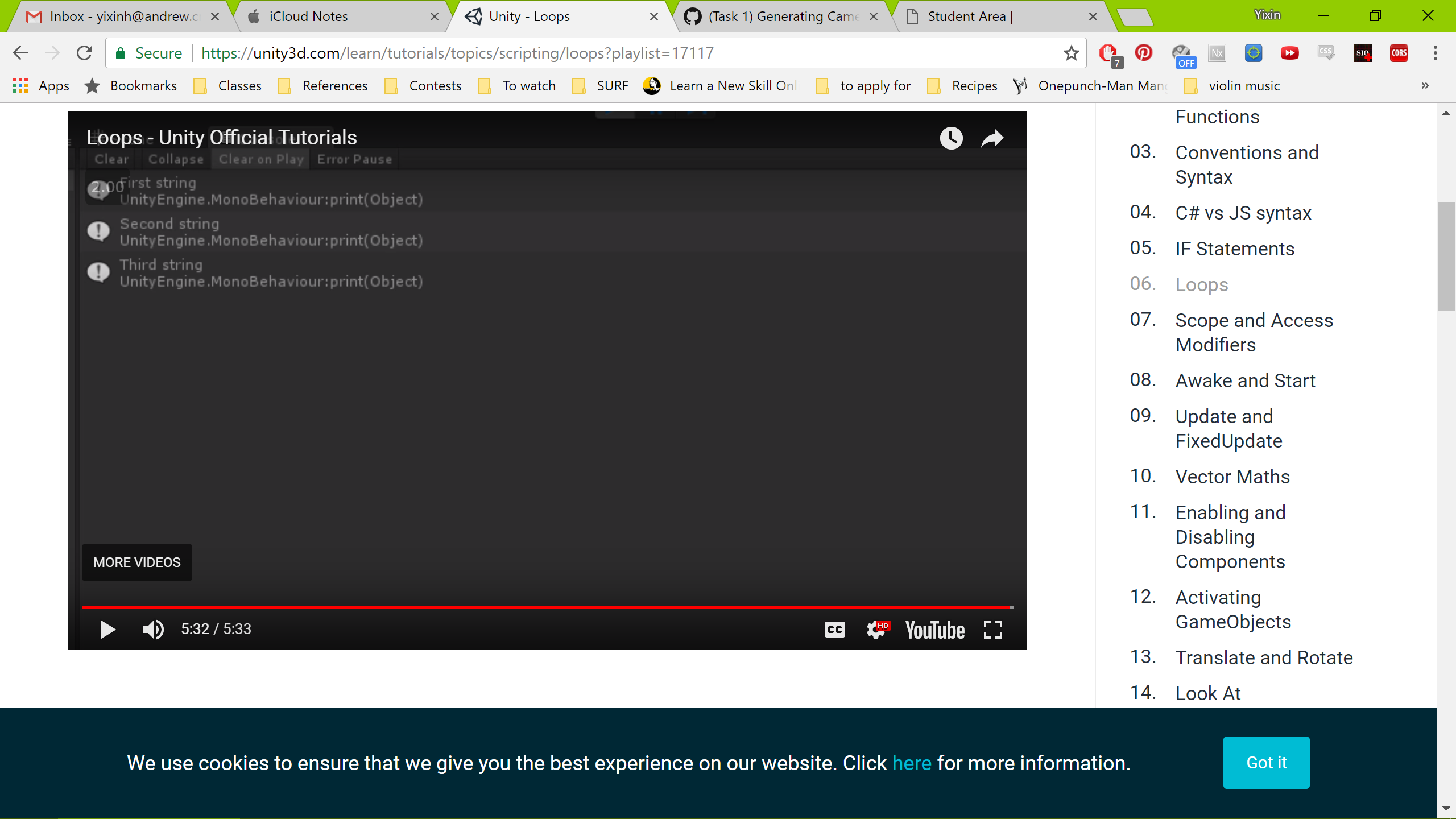

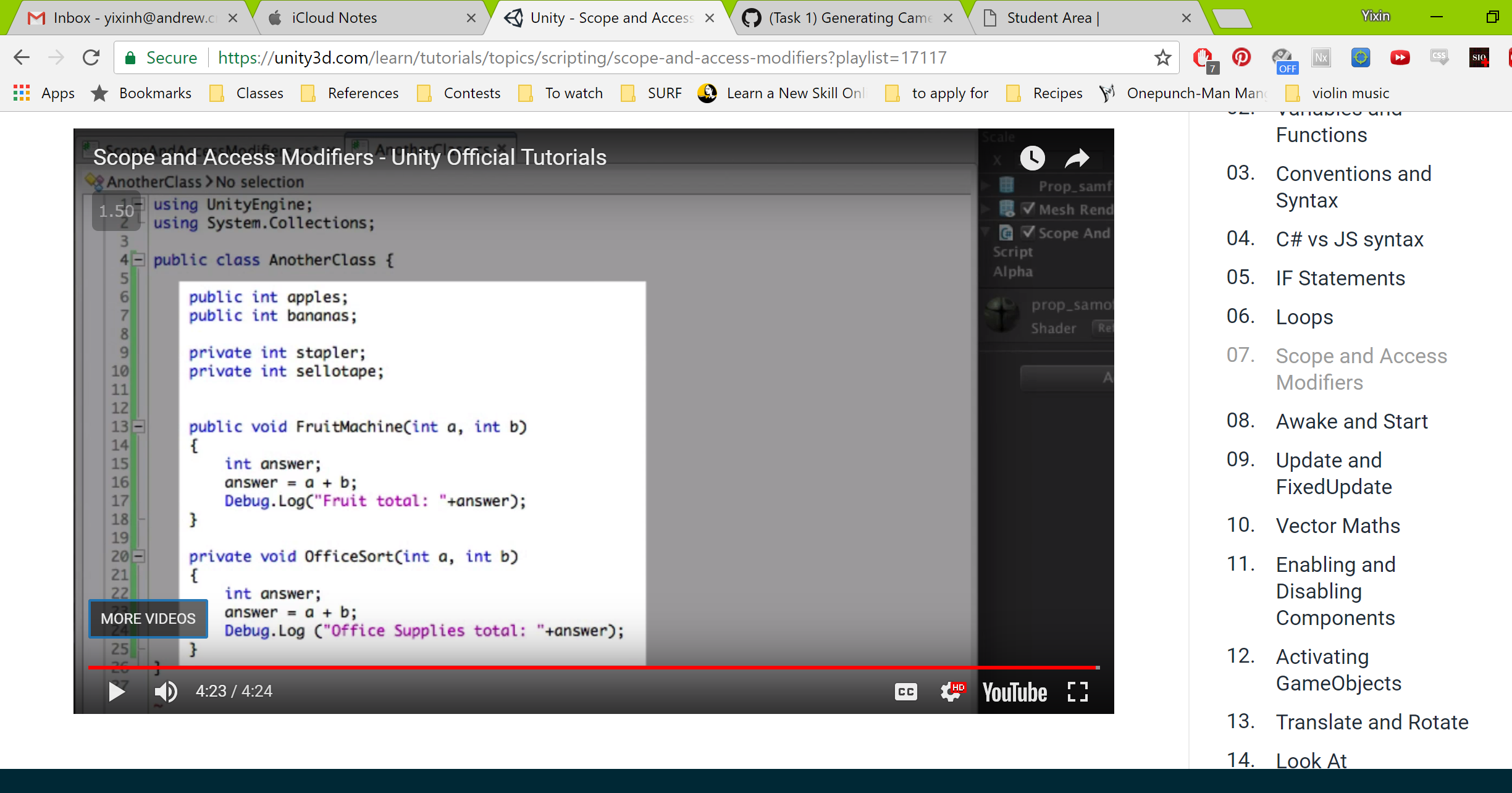

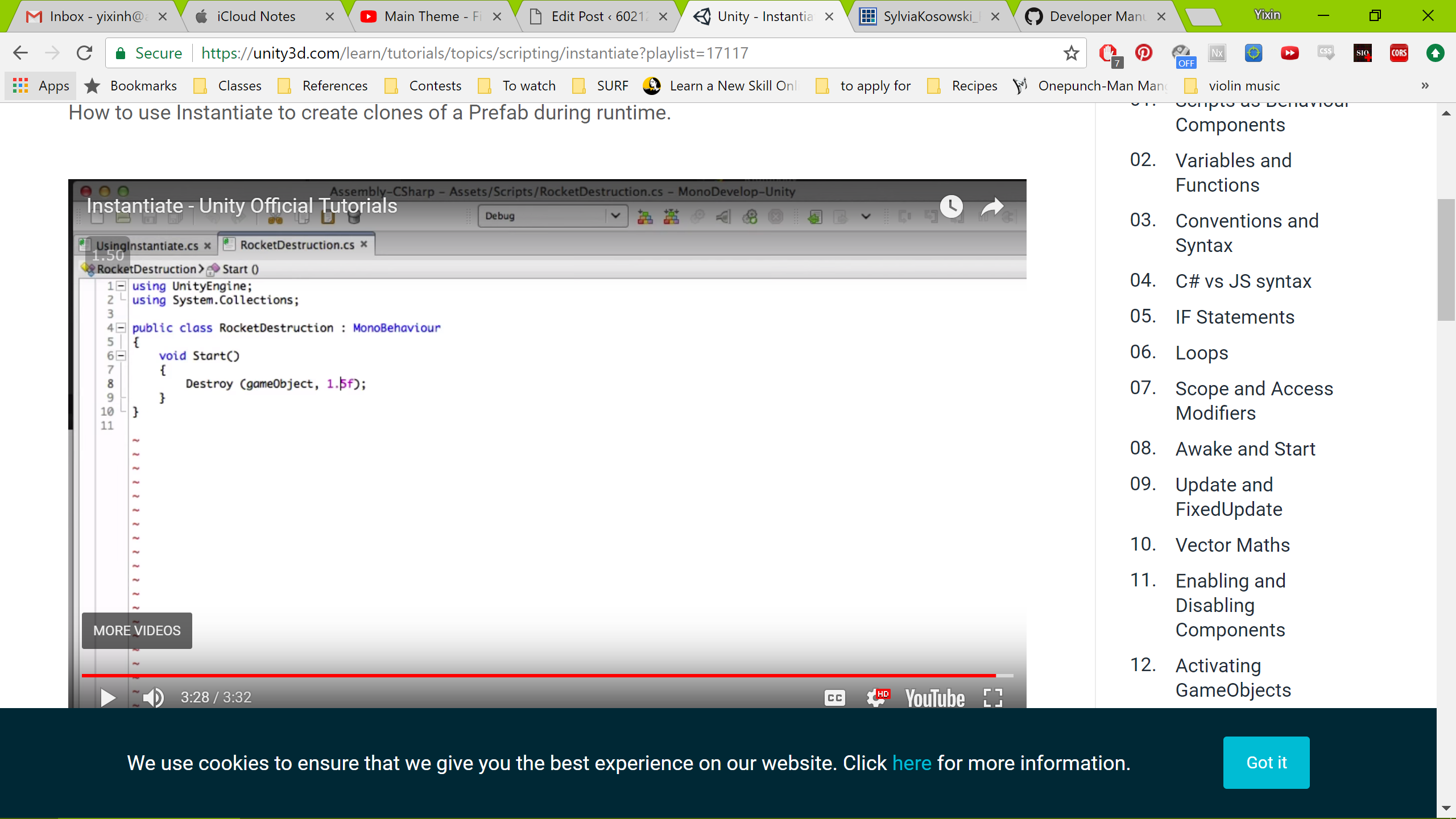

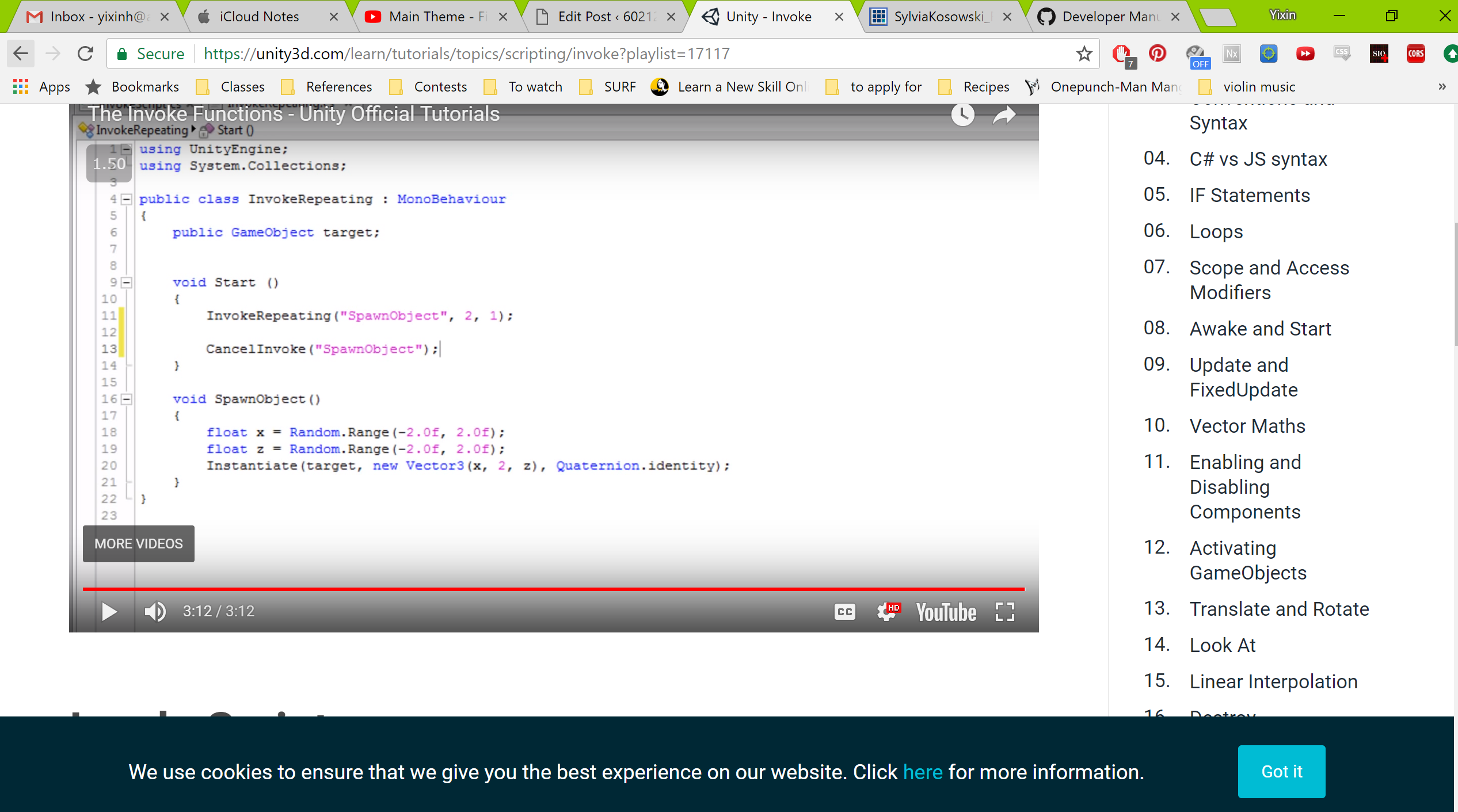

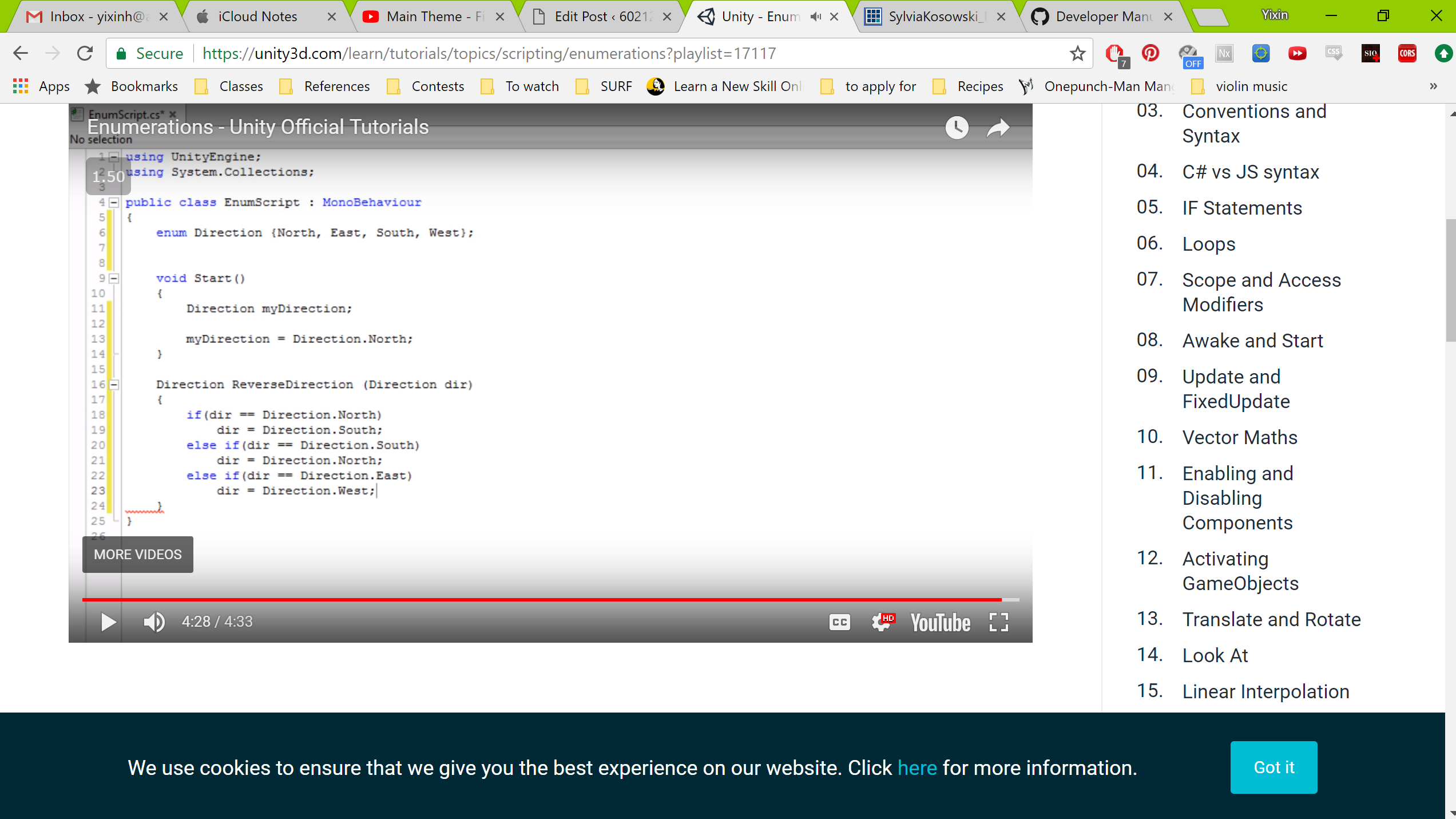

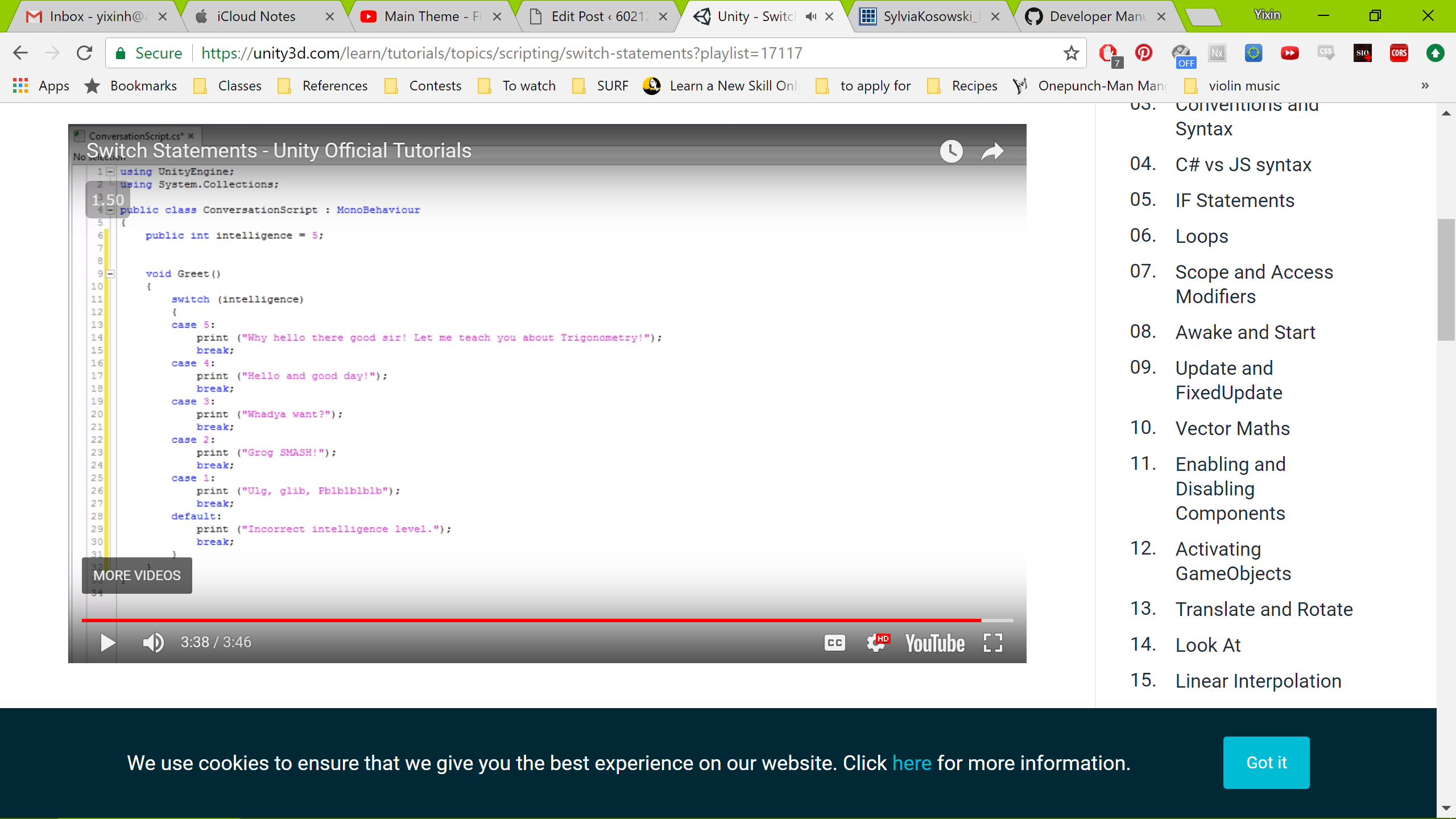

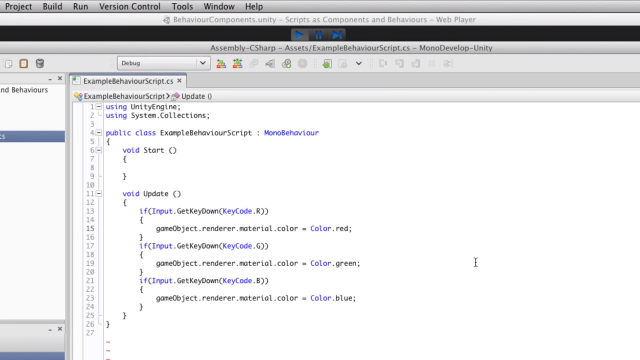

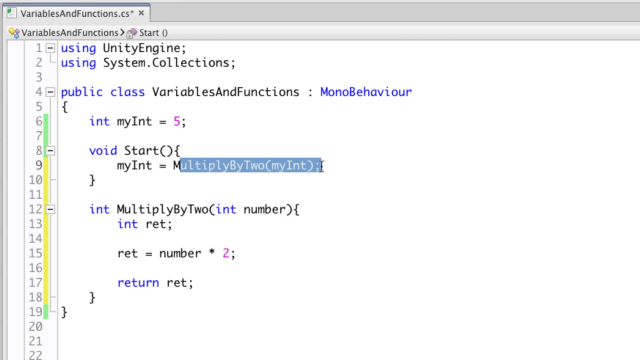

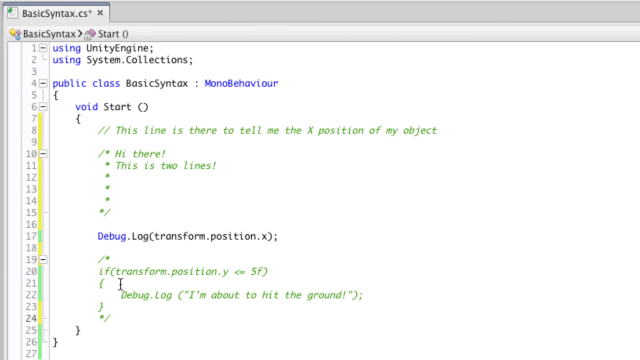

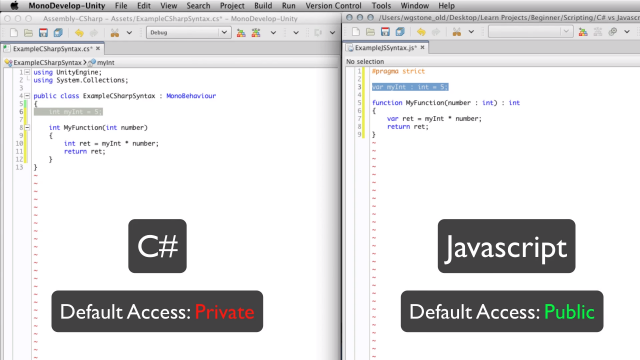

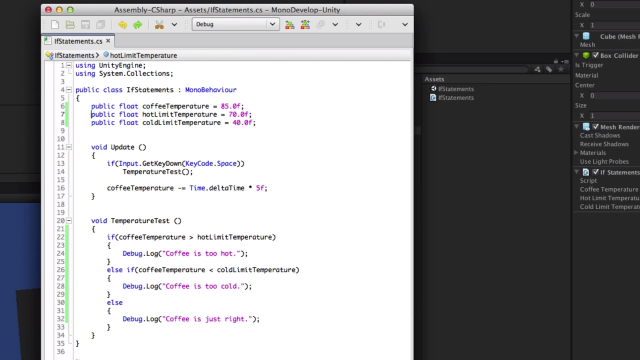

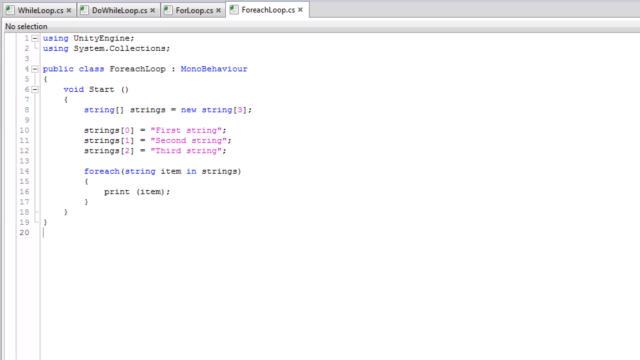

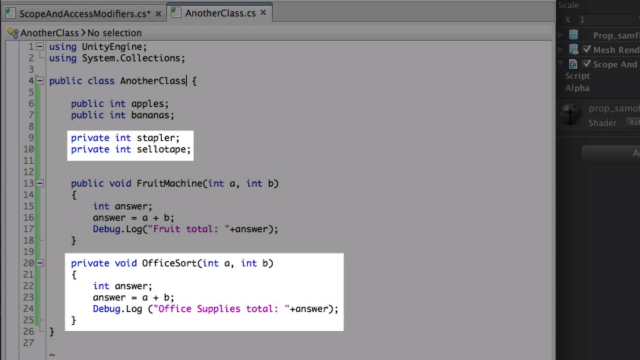

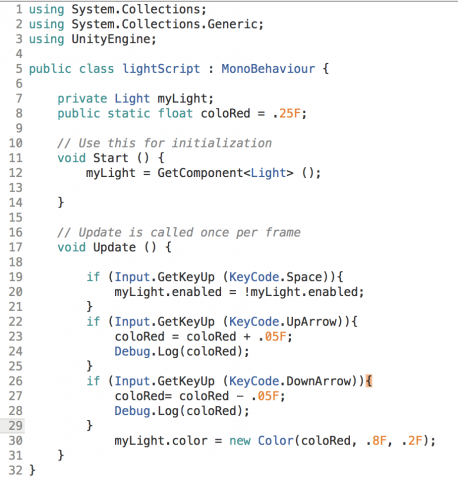

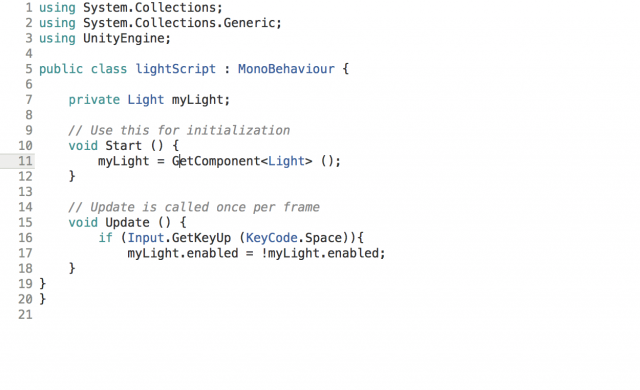

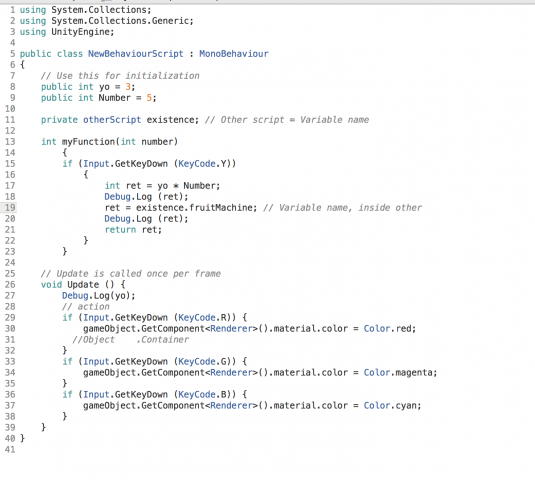

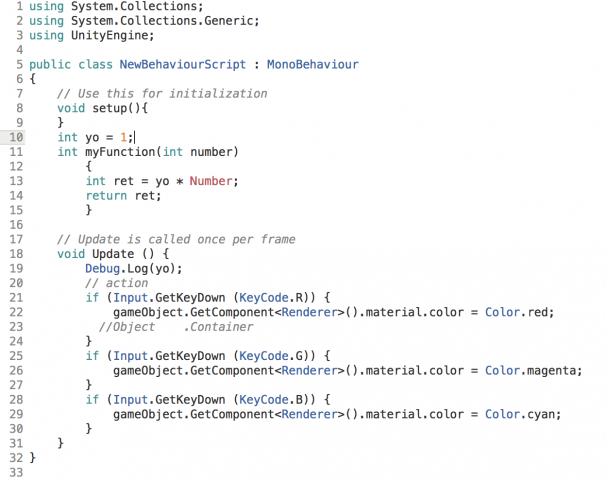

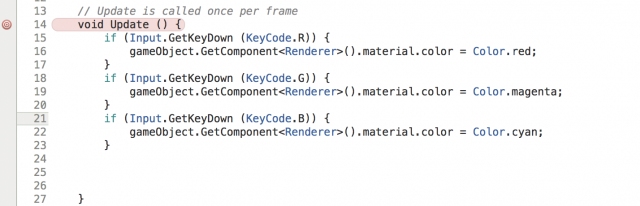

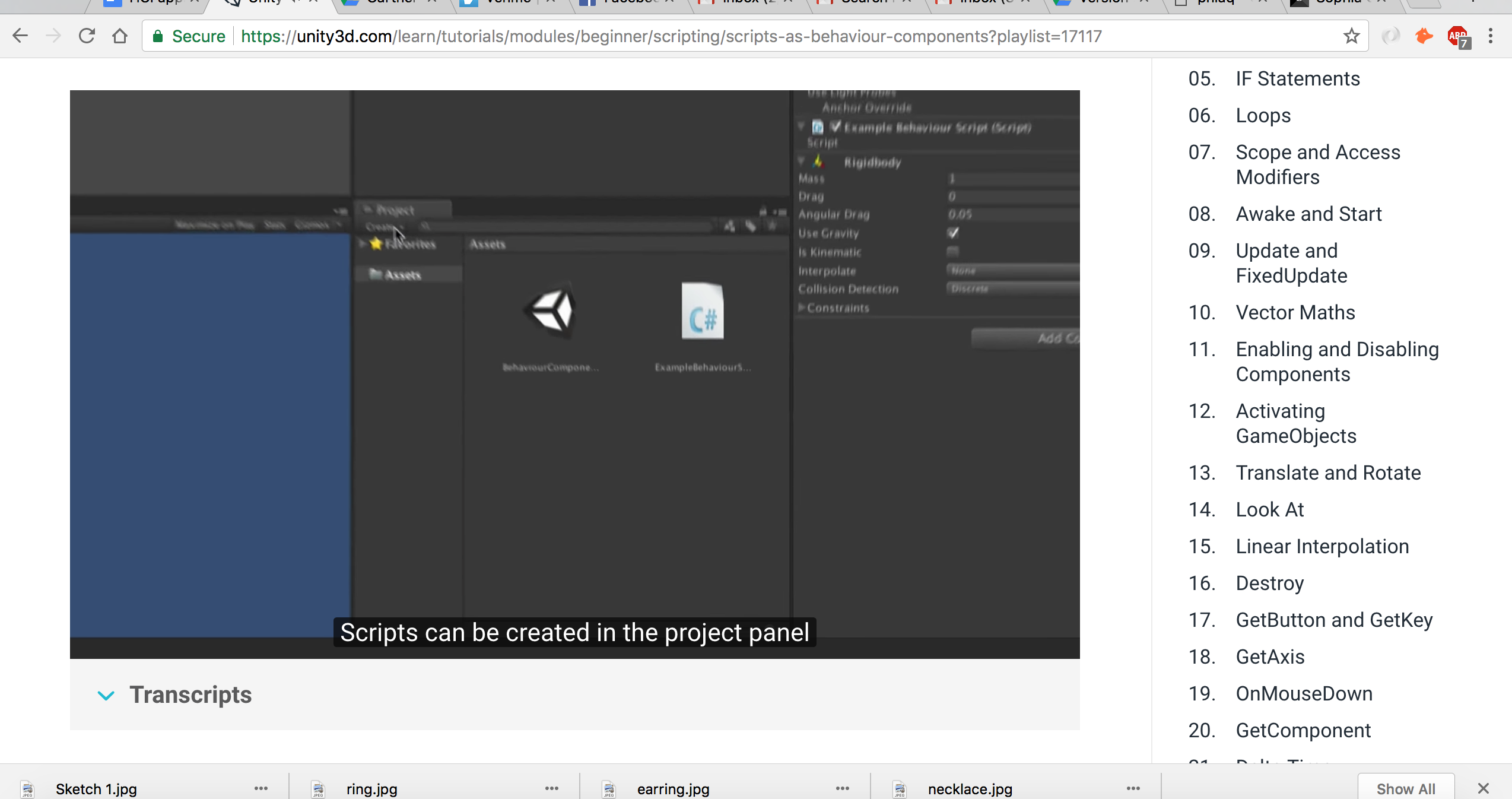

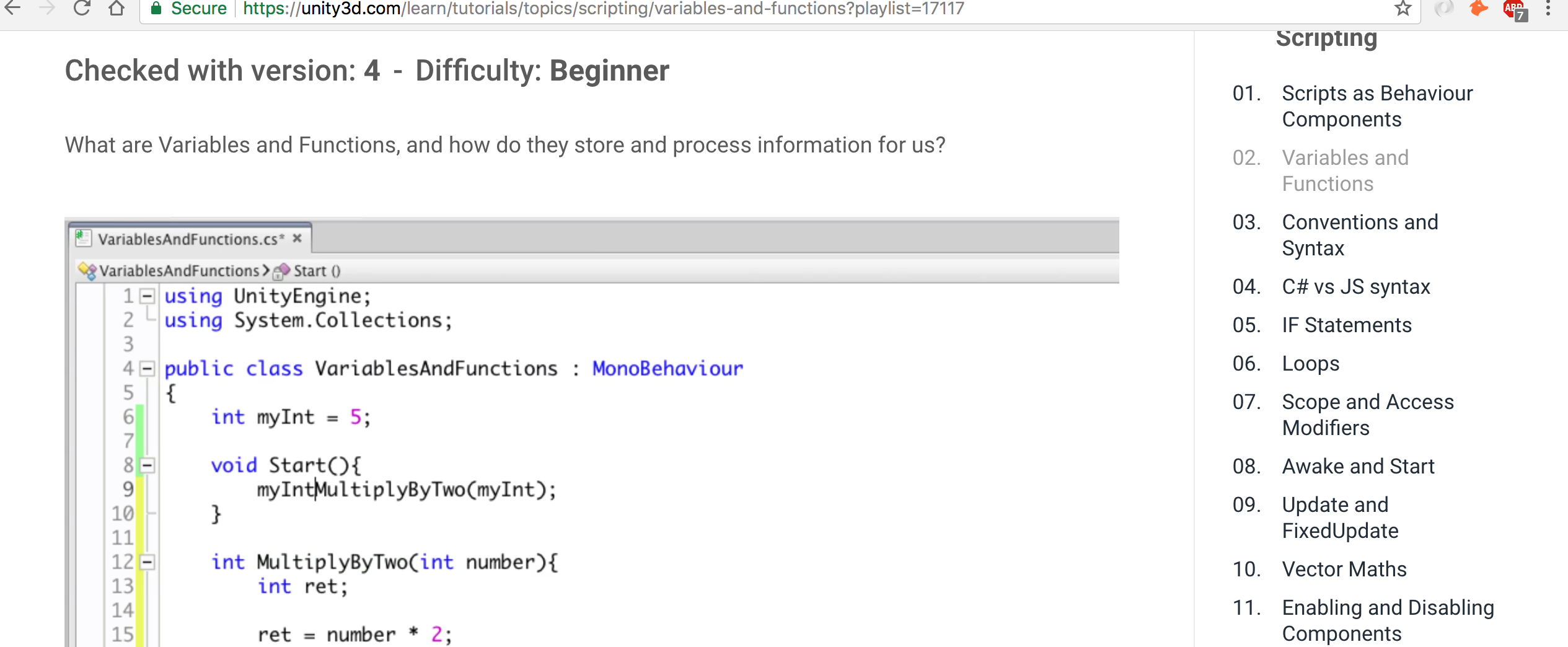

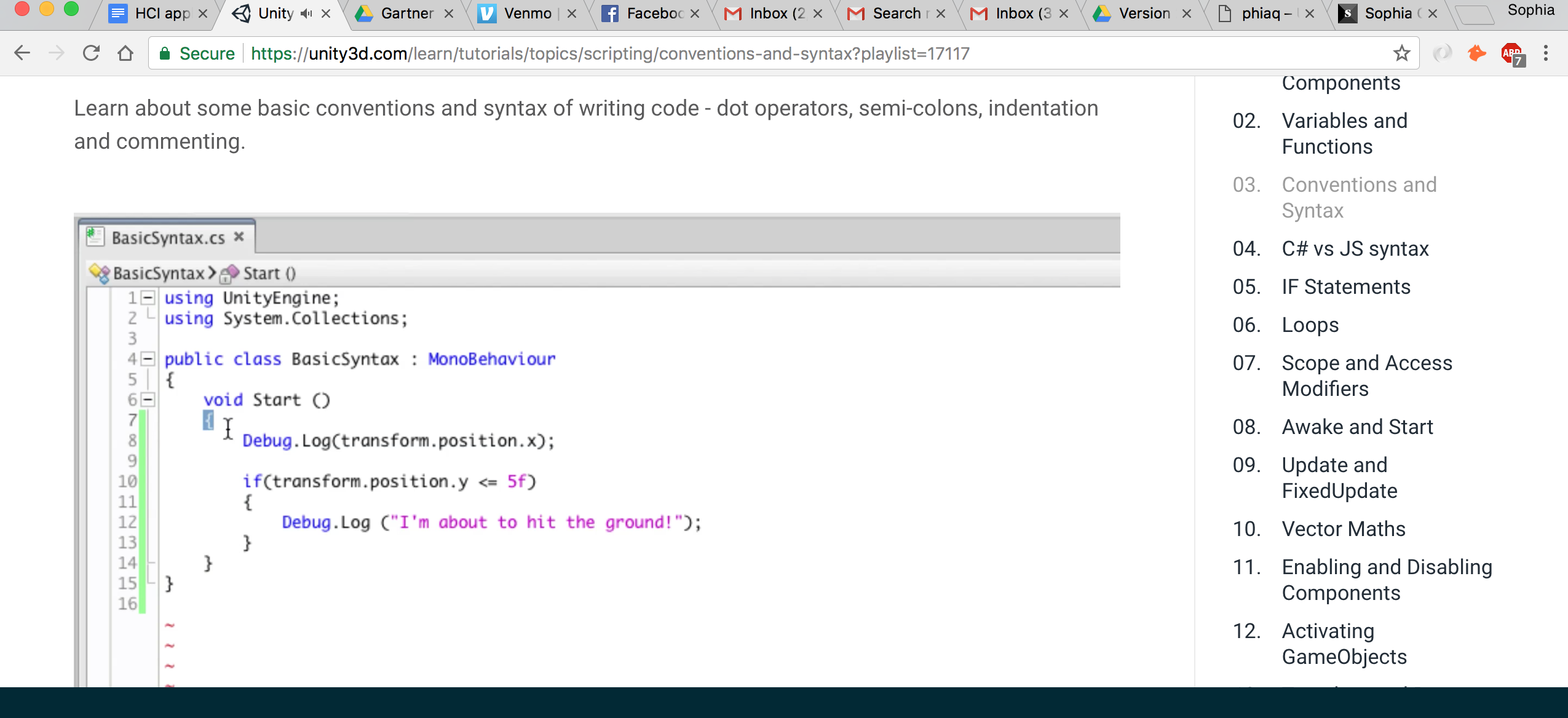

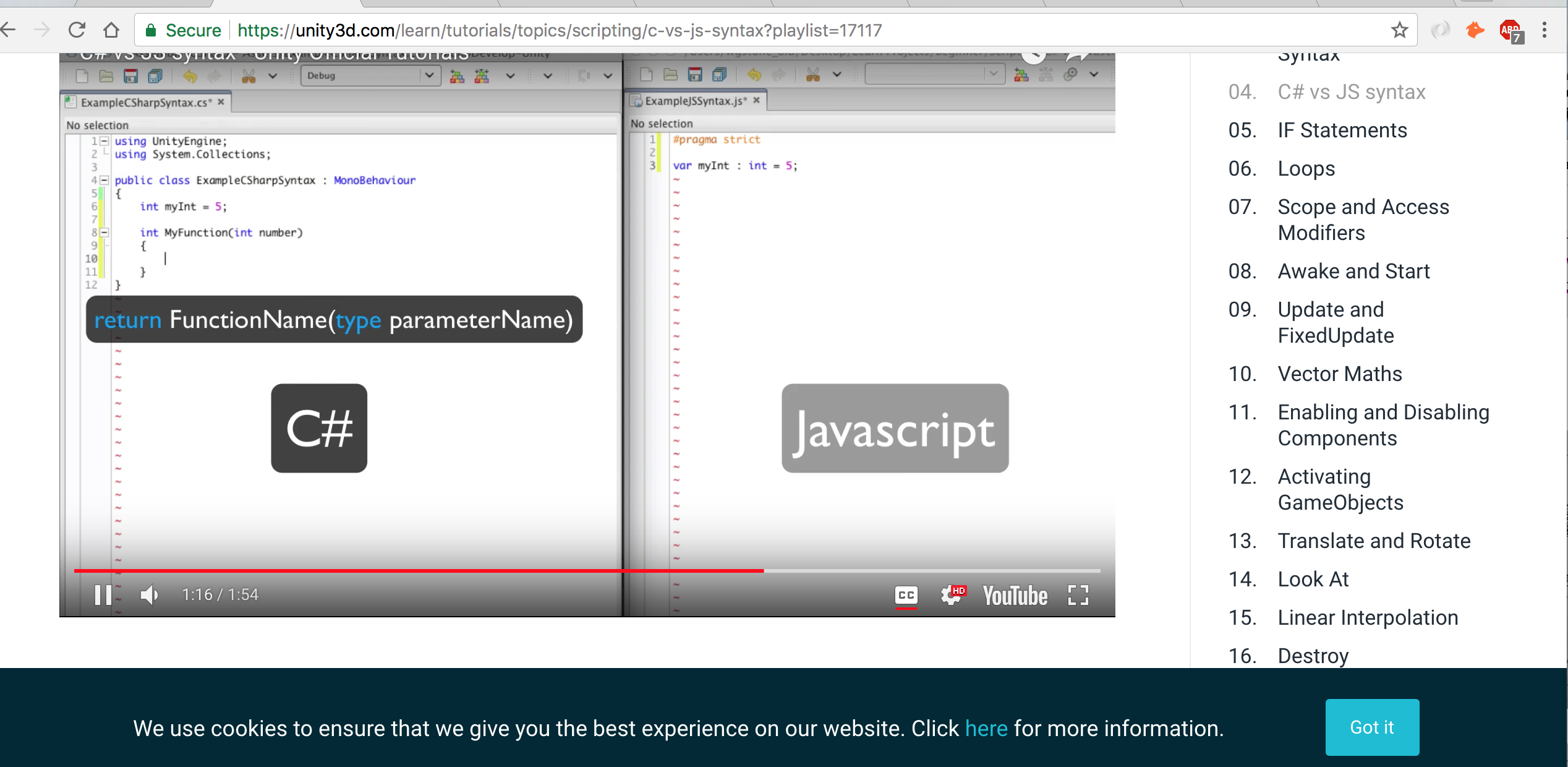

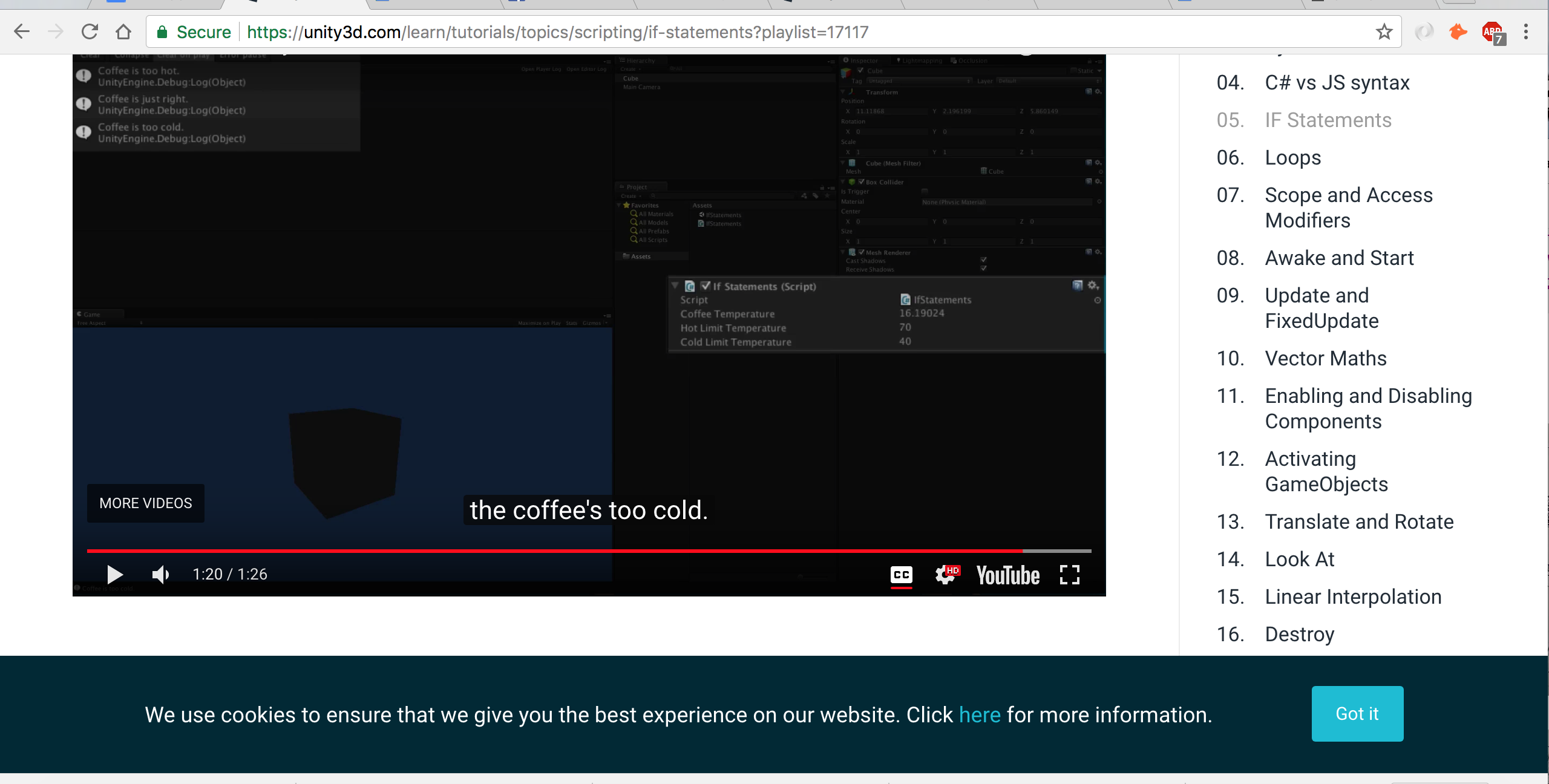

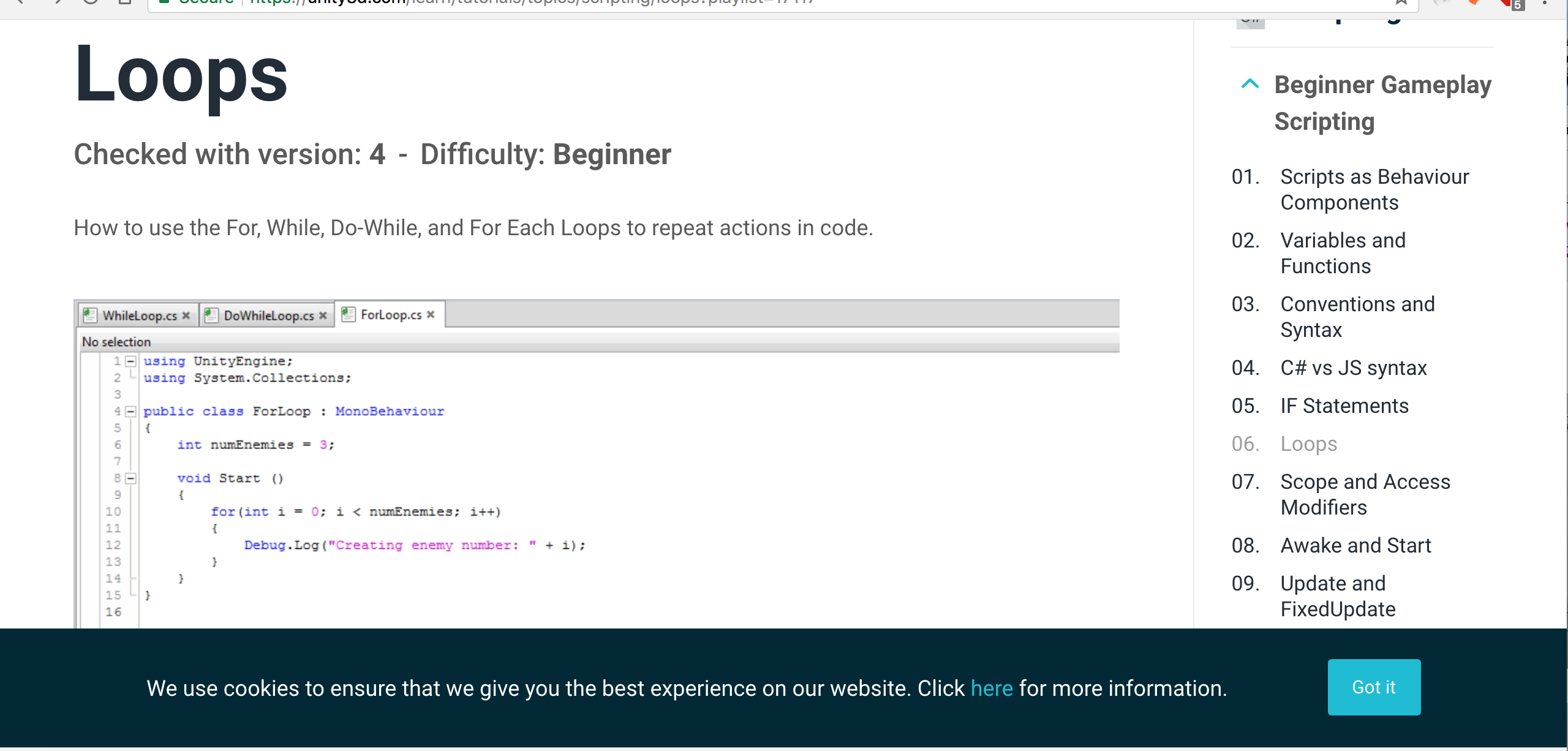

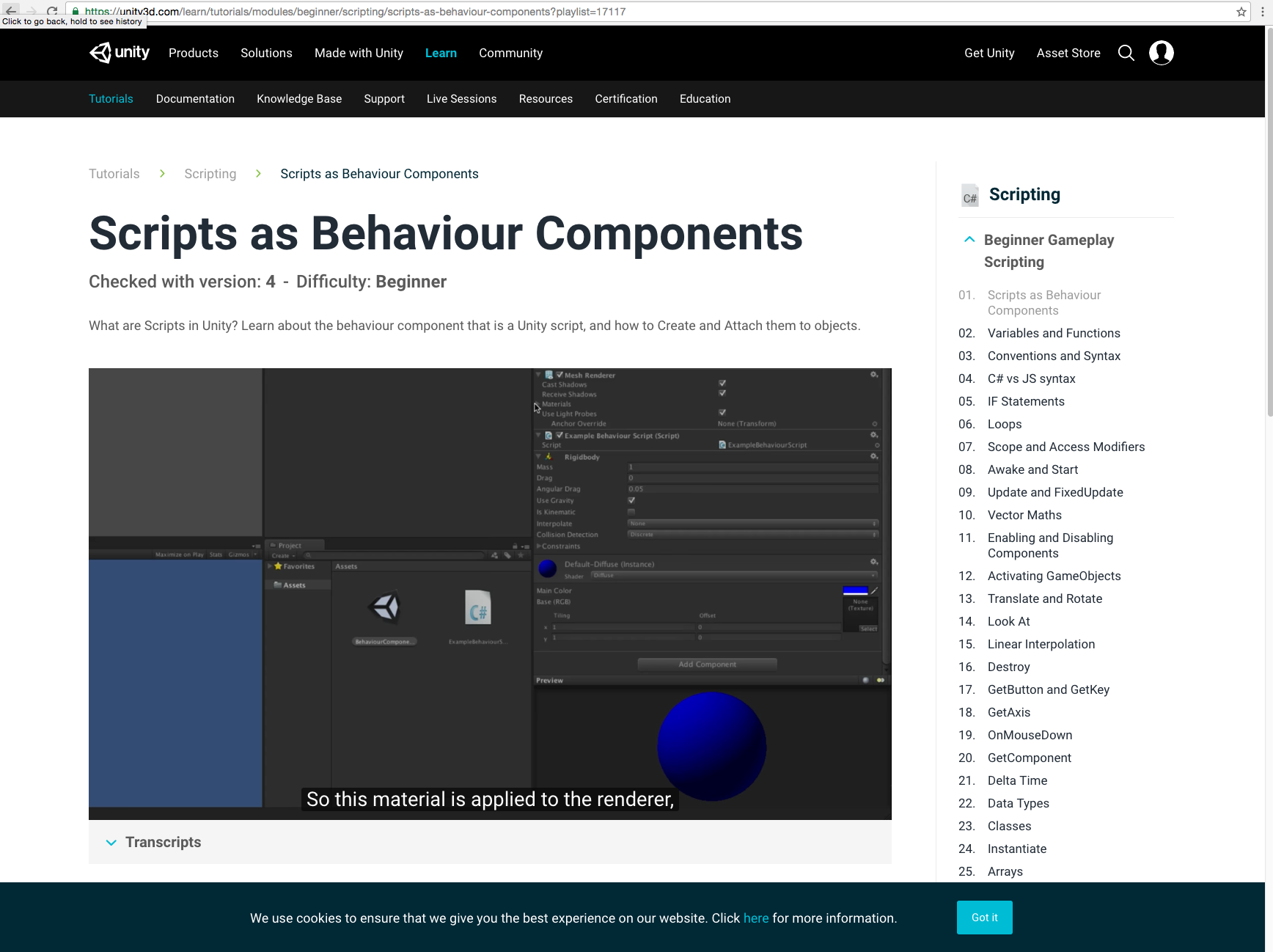

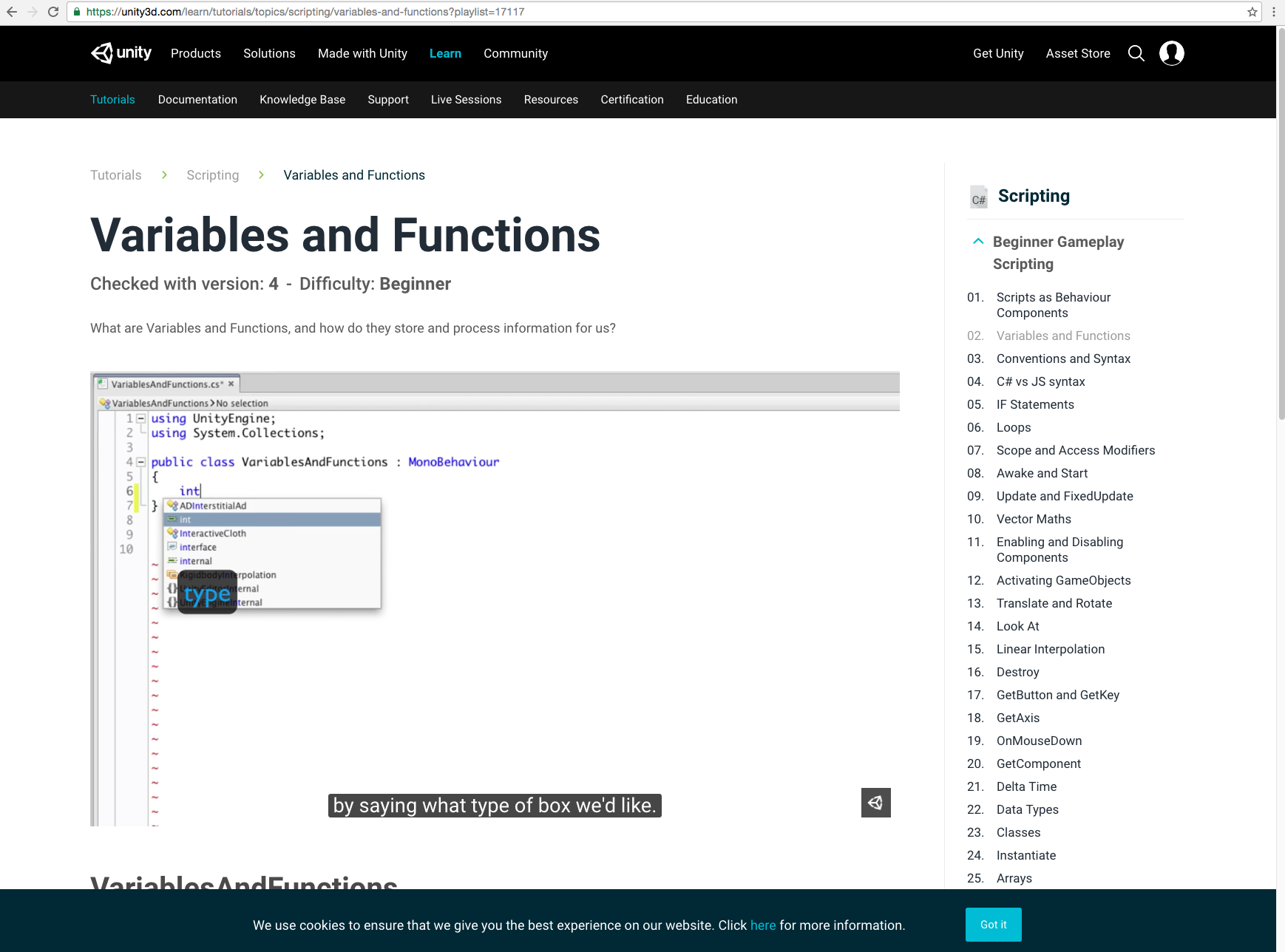

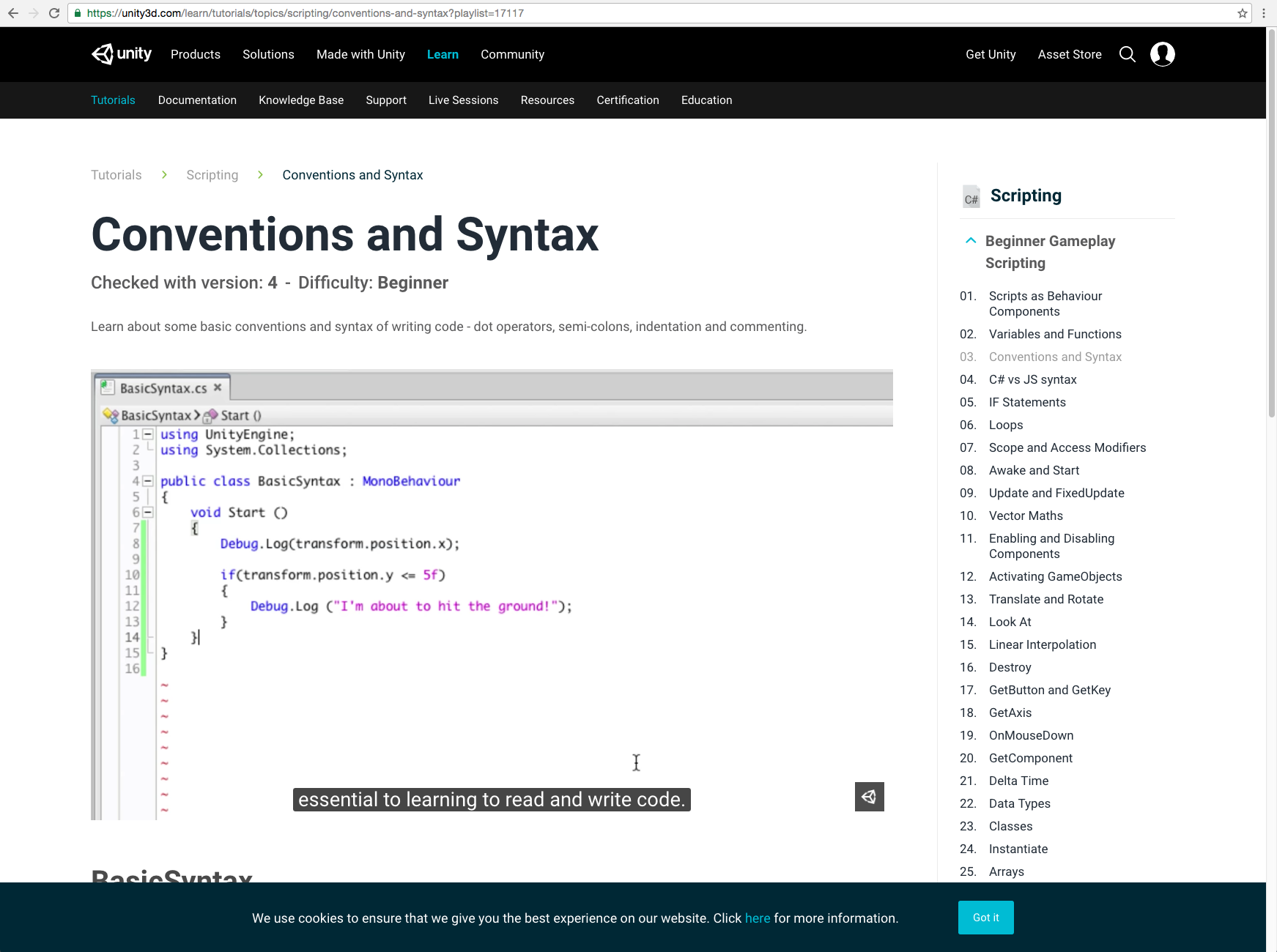

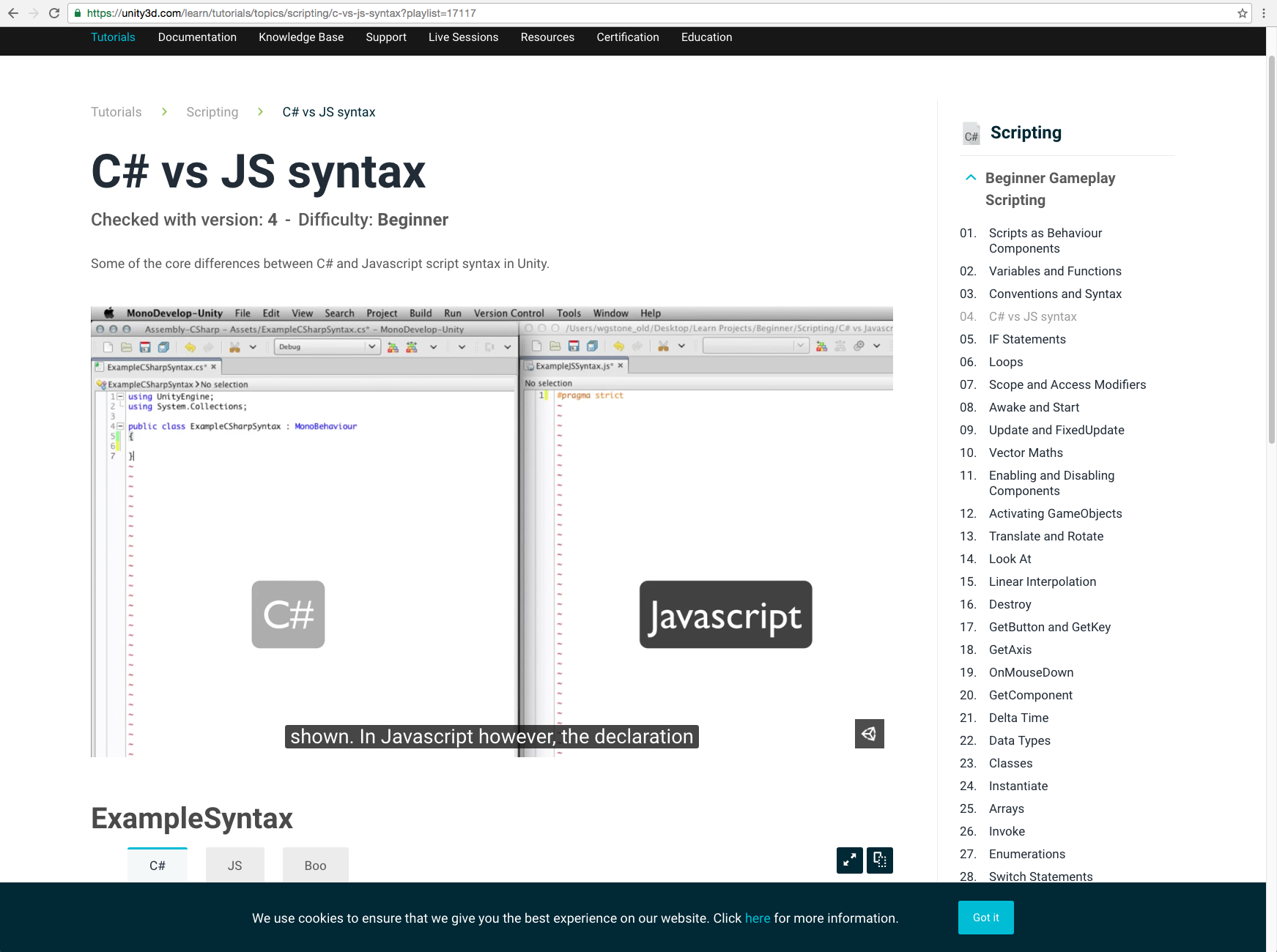

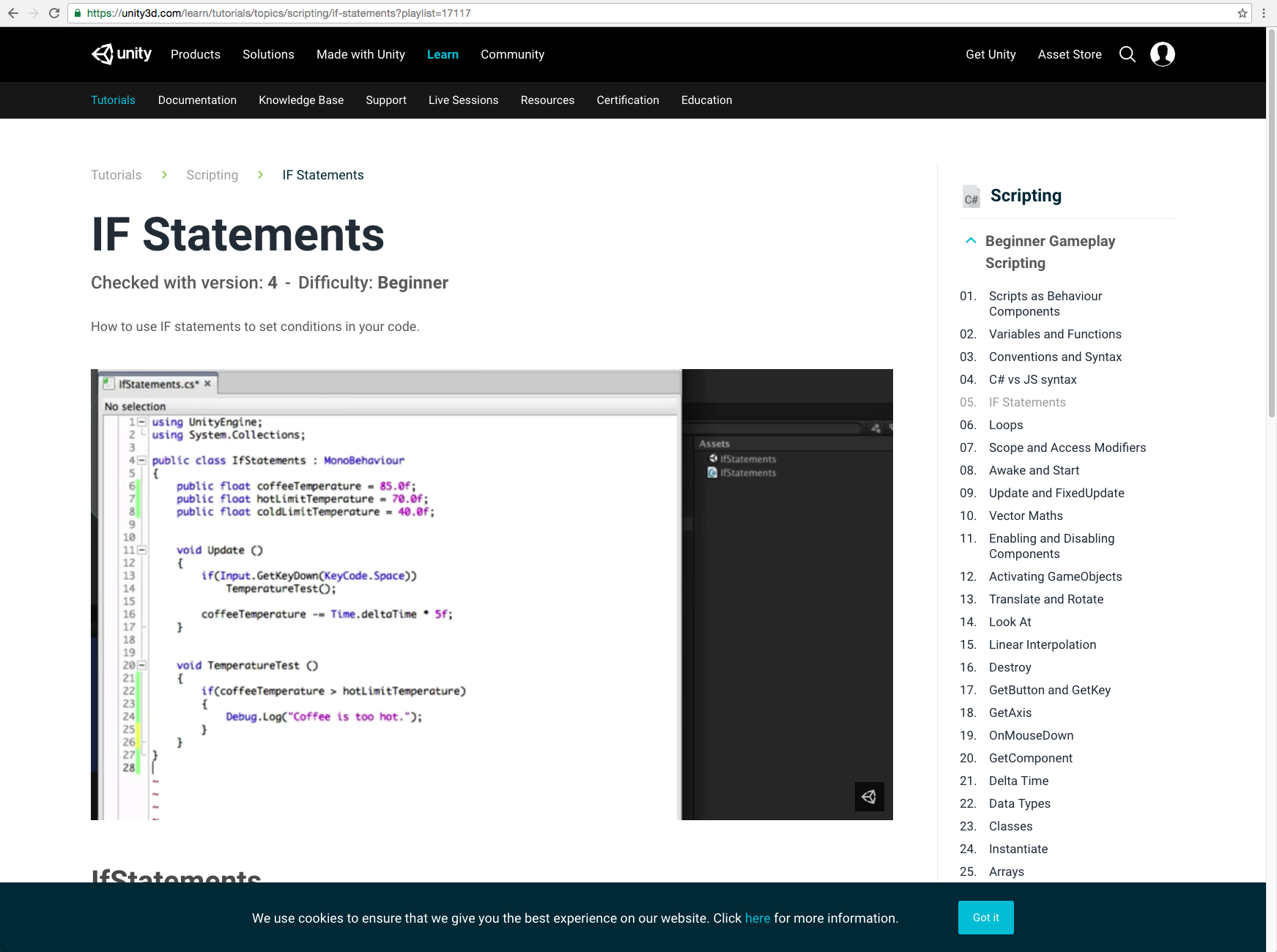

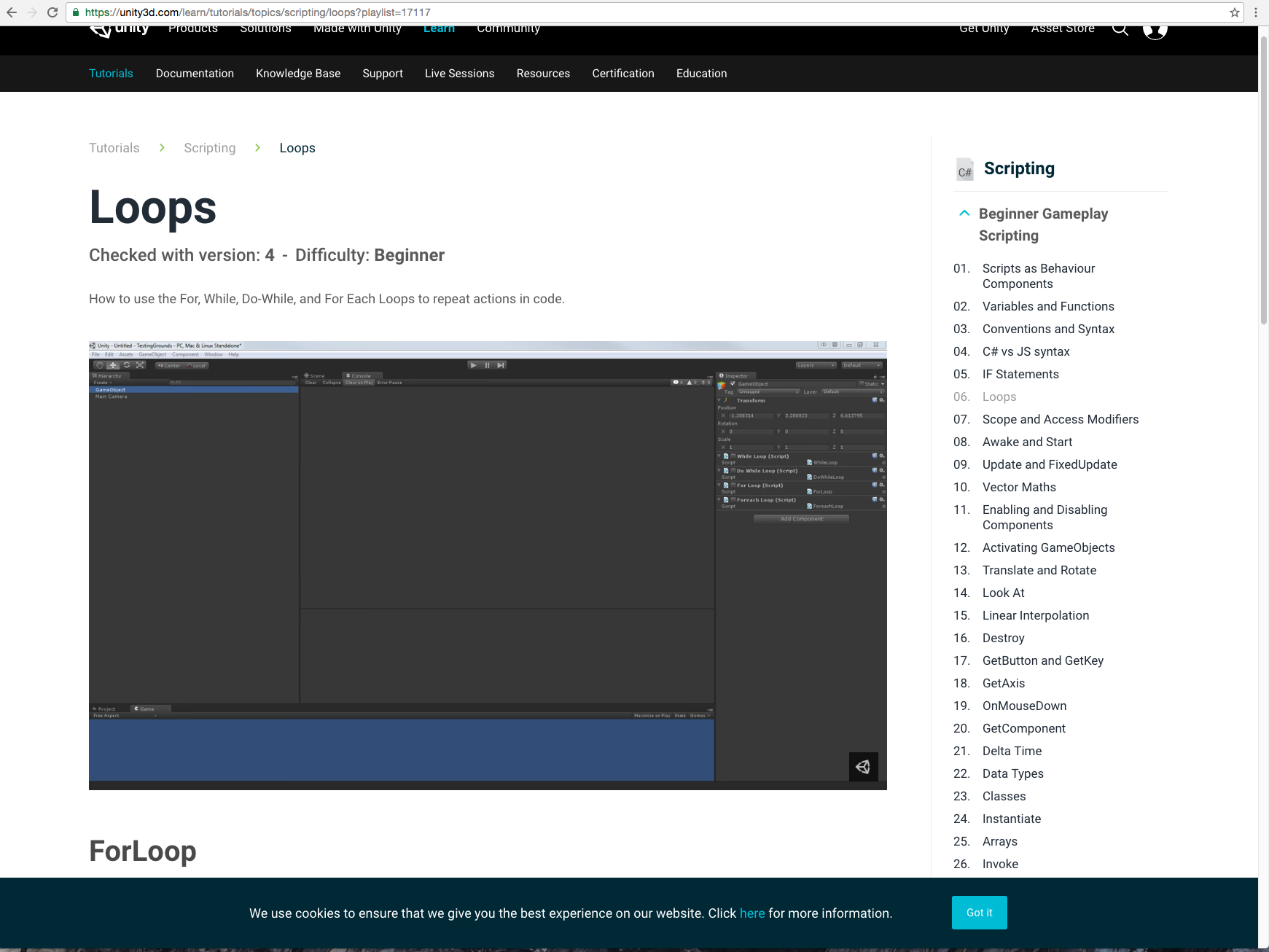

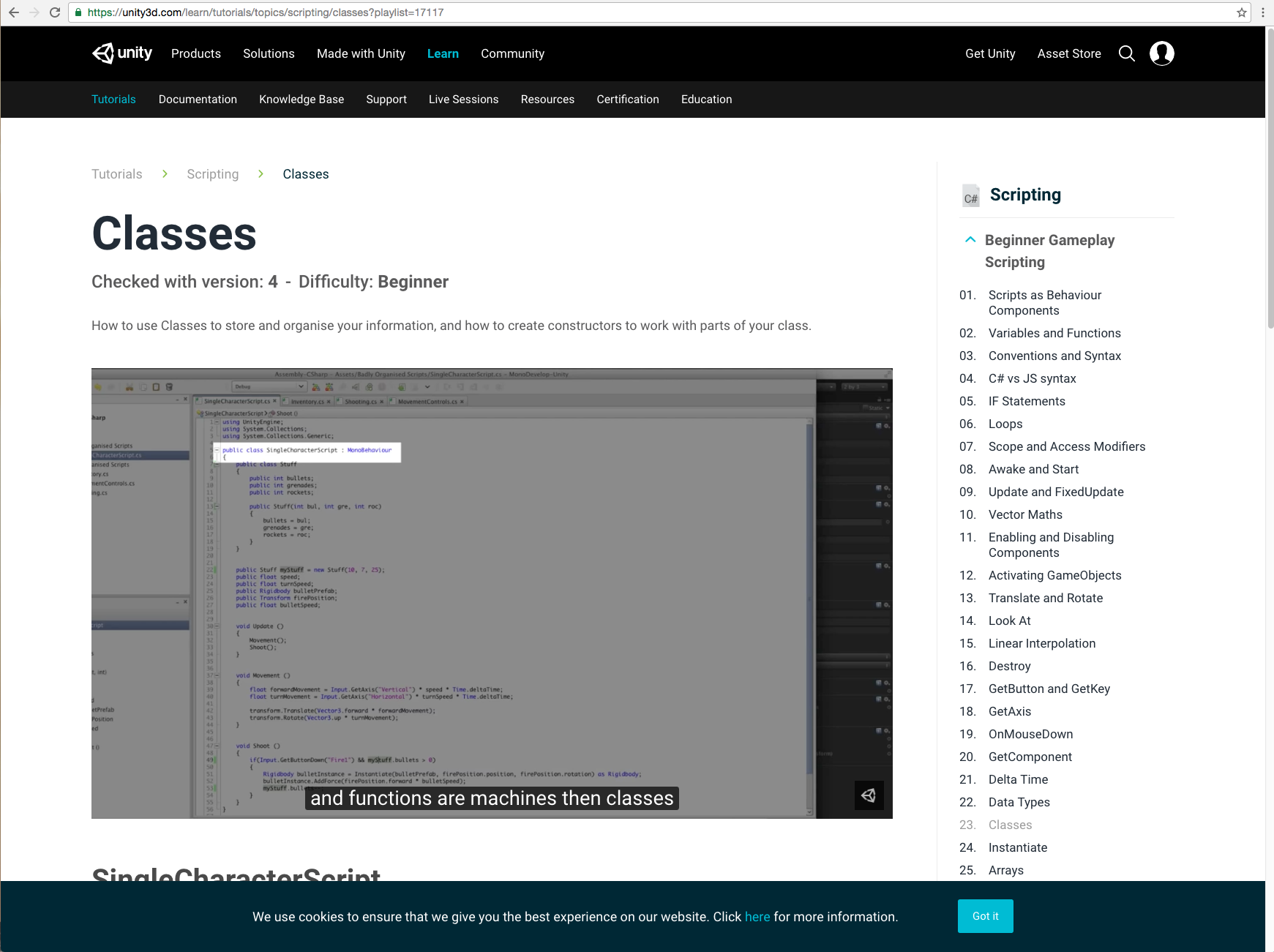

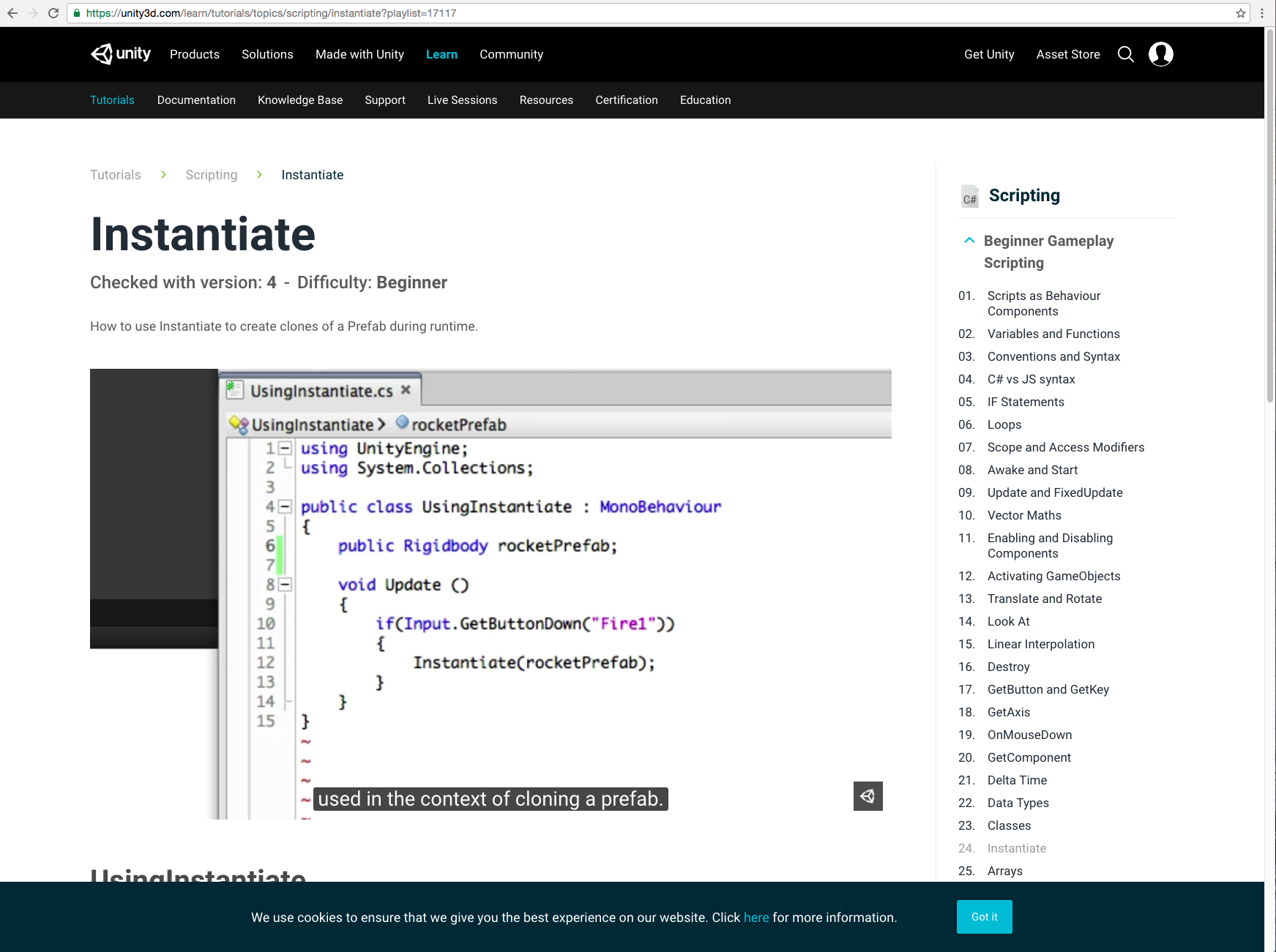

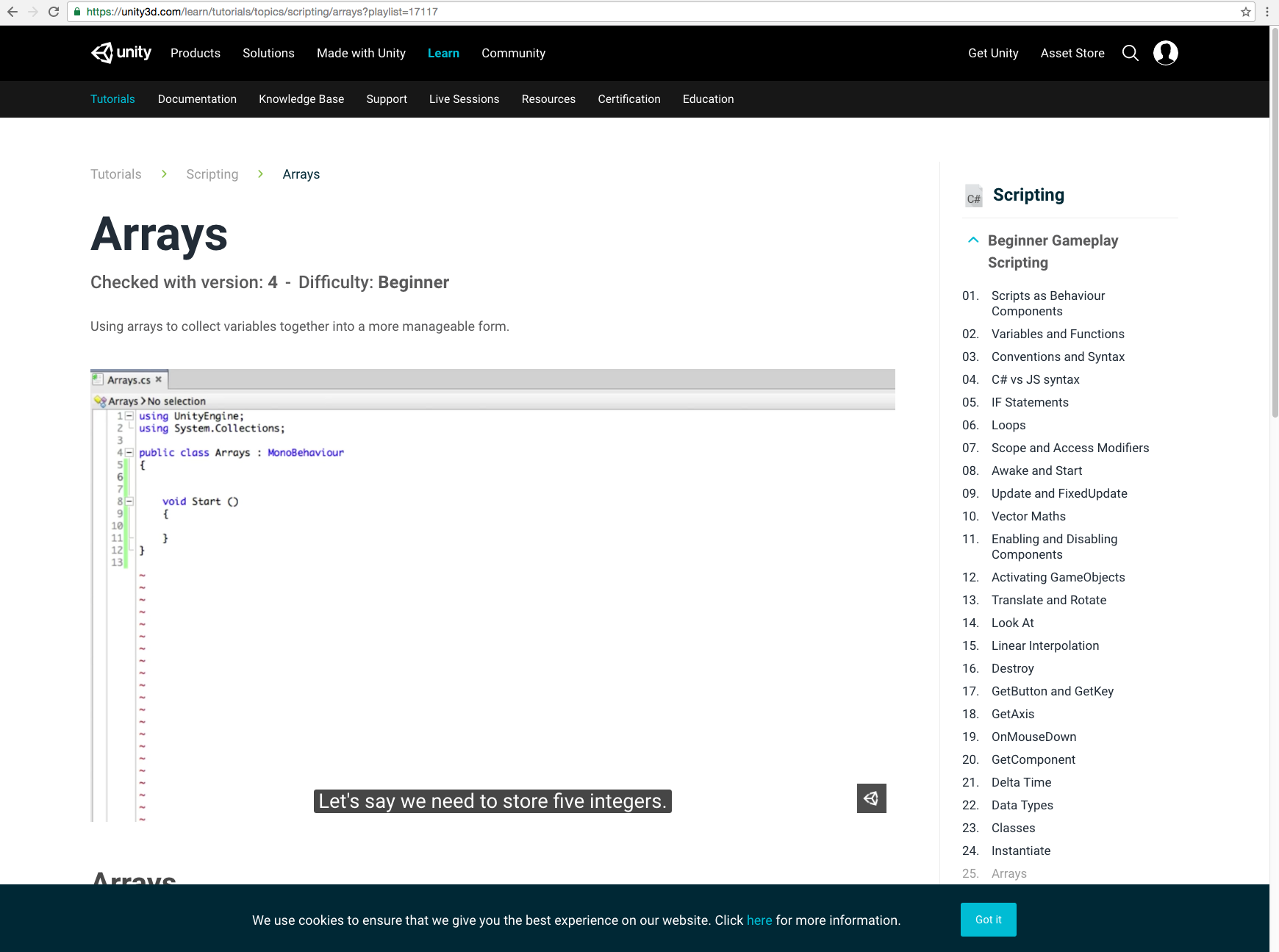

I watched the first few Beginner Scripting tutorials, documented here:

ookey-UnityEssentials

tyvan-Unity2

kerjos-lookingoutwards07

Memo Akten: “Learning to See”: You Are What You See. 2017.

On Tuesday, Chelsea Manning called on programmers bringing new algorithmic tools into the world to adopt an ethics of engineering. This came during her conversation with Heather Dewey-Hagborg, part of the School of Art’s spring lecture series, which also focused on the machine-learning-based models of Manning’s face on which the two had collaborated.

It’s fortunate that artists like Dewey-Hagborg are among the earliest to help articulate the warnings of activists against the predictive algorithms that are quickly becoming integrated into all of our daily lives, because the task of representing something as esoteric and shrouded in fantasy as machine learning is a difficult one at best.

This is what Memo Akten claims to do with his “Learning to See” project: by visualizing the limits of the machine-learning algorithms that in other contexts are deployed to sell us products and identify enemies in occupied countries, Akten suggests that they are just as capable of error as human operators in these contexts.

But I think it’s a bit disappointing that, with such a powerful tool at his disposal (in particular one that is now live), Akten chooses to turn his algorithm on the same subject-dataset pairings that Google is using: the programmer and clouds, or flowers and something as banal as his headphones and keys. If Akten claims to use his live-action, neural-network predictions to address the flaws of these algorithms, he’s doing just the opposite by generating beautiful AR visions of himself and the view from his office window. Why not blog about applying his work to different subjects?

tyvan-UnityEssentials

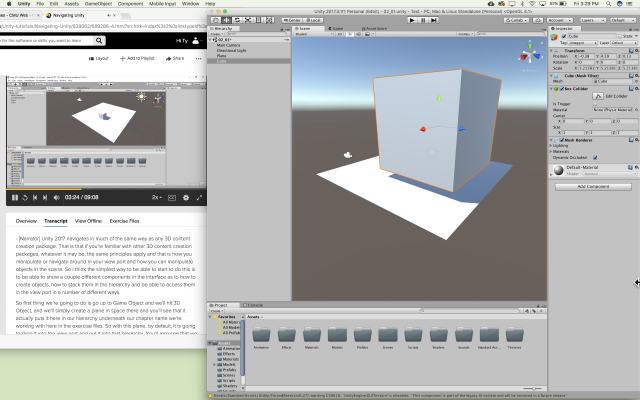

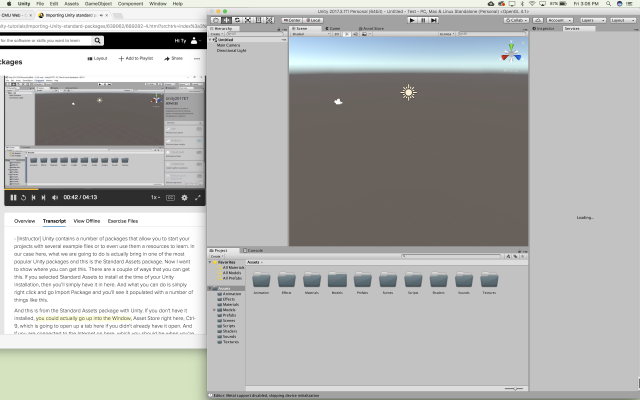

phiaq – Unity2

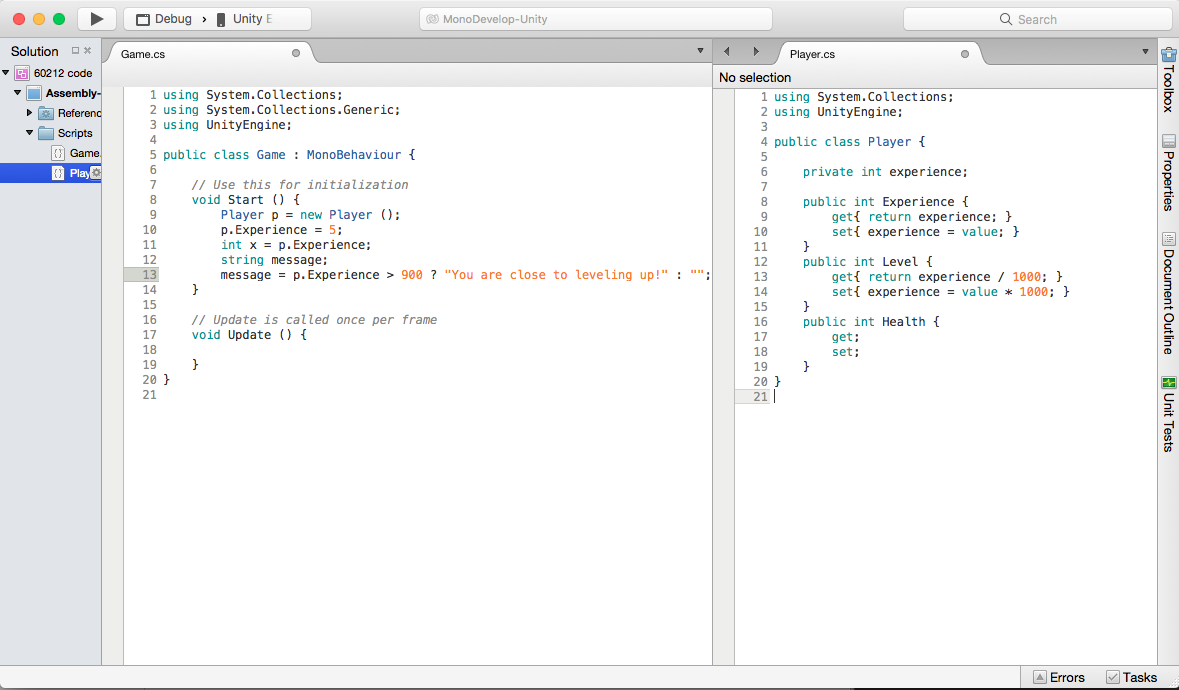

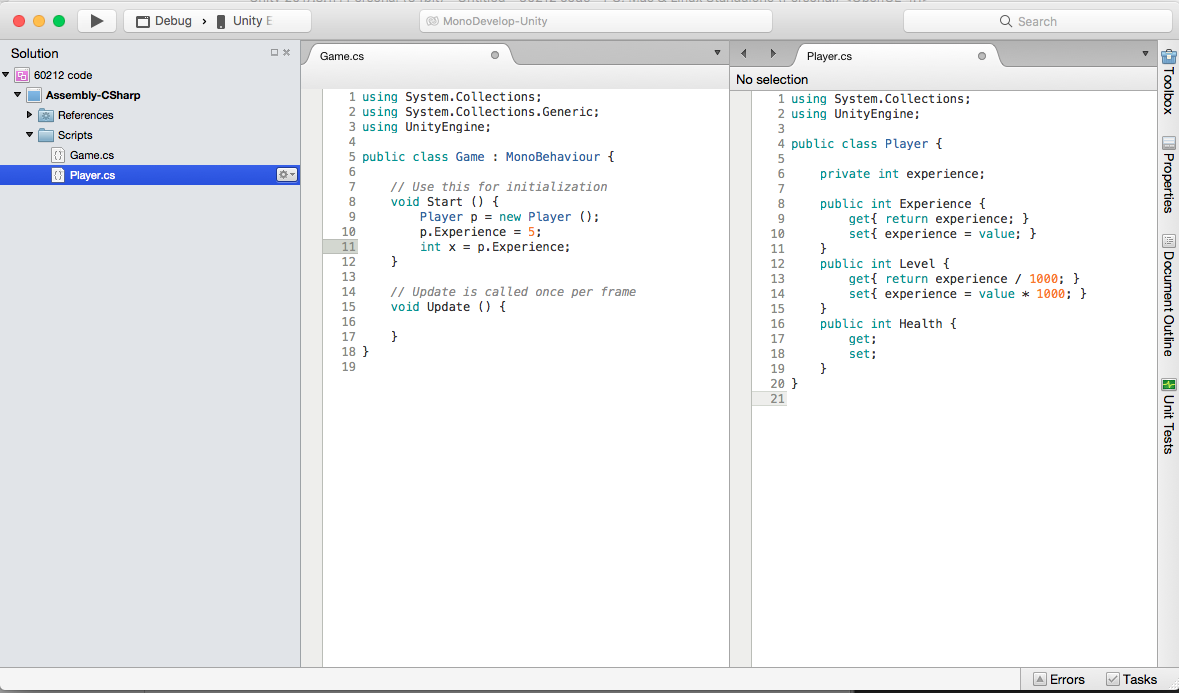

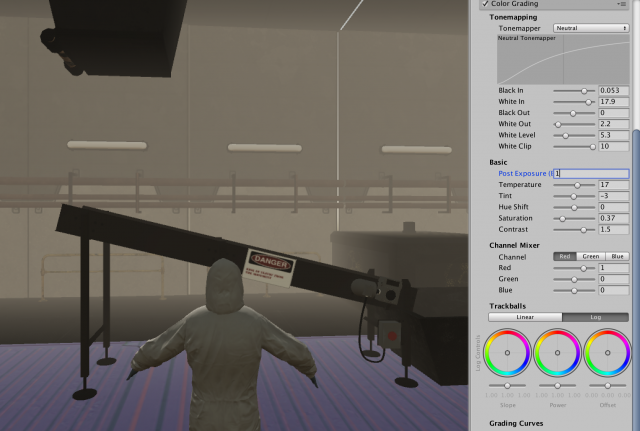

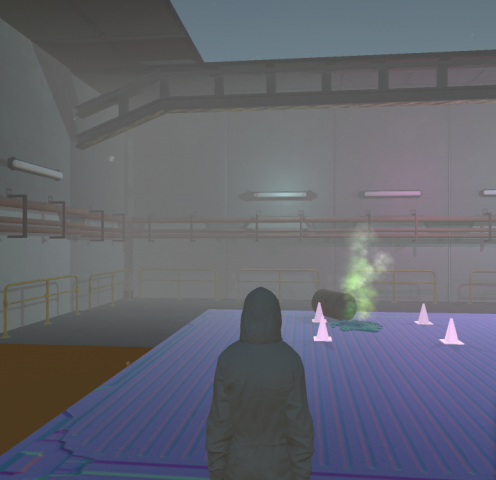

I started the beginner tutorials for Unity 2 and got through the first few. Here are screenshots of the videos I watched:

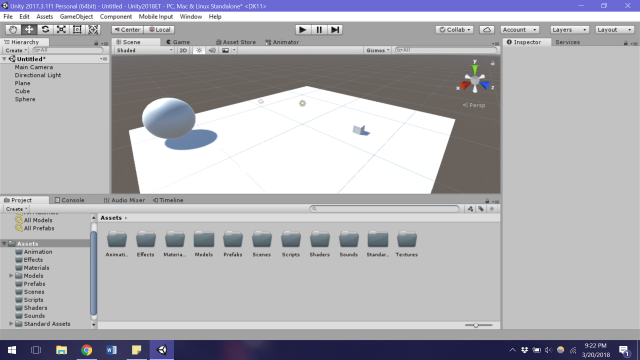

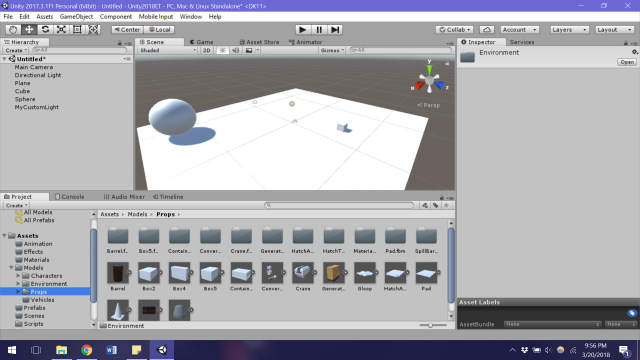

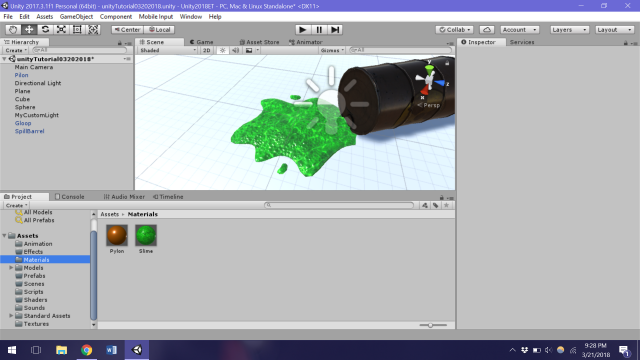

kerjos-Unity2

kerjos-UnityEssentials

rolerman-LookingOutwards07

An AR project I love is ARQUA!, by Cabbibo. It’s a psychedelic AR aquarium where you draw on your surroundings and turn them into a sort of rainbow fish tank.

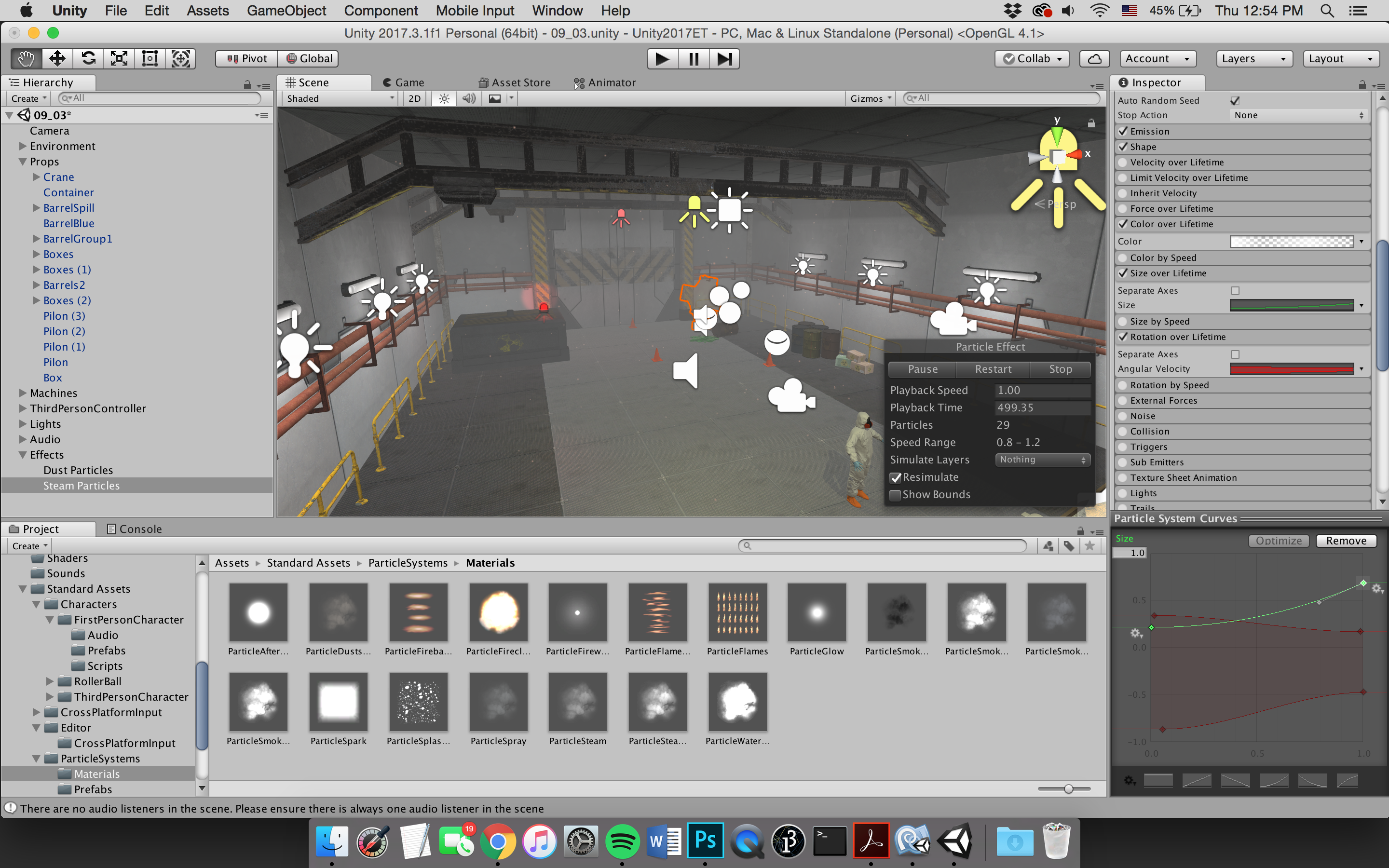

The project is really fun to play with, and reminds me of particle effects, animations, and shader stuff I’ve learned in Unity tutorials.

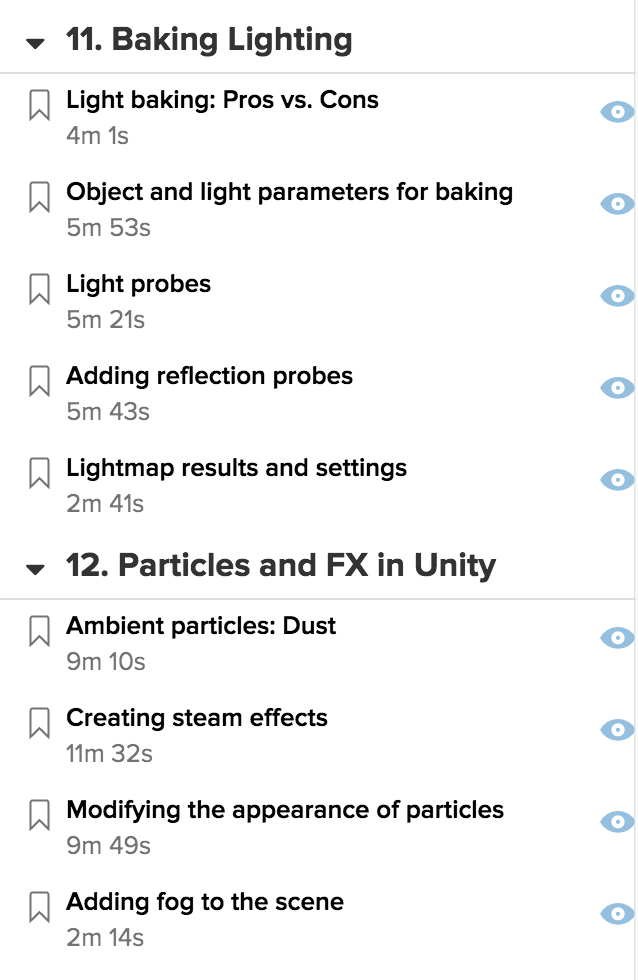

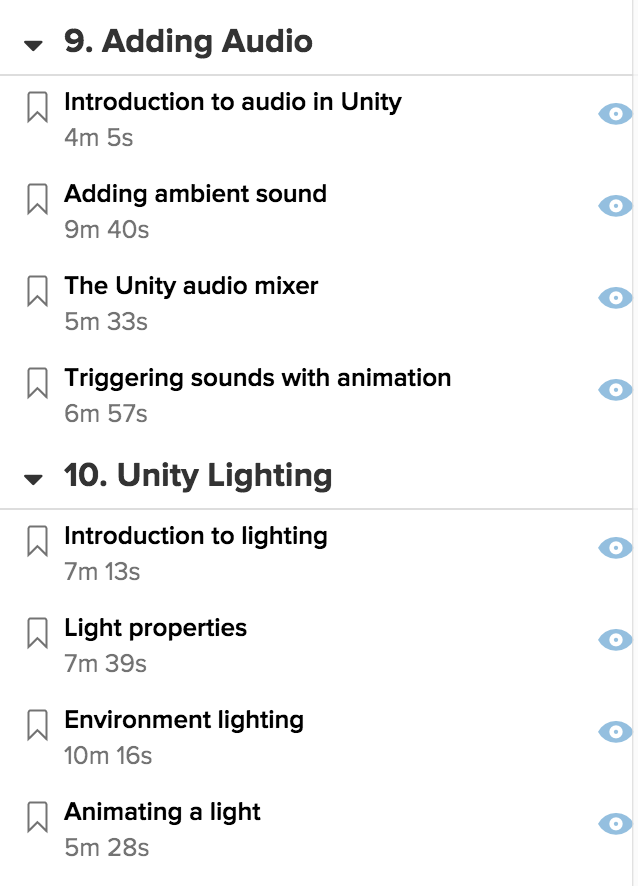

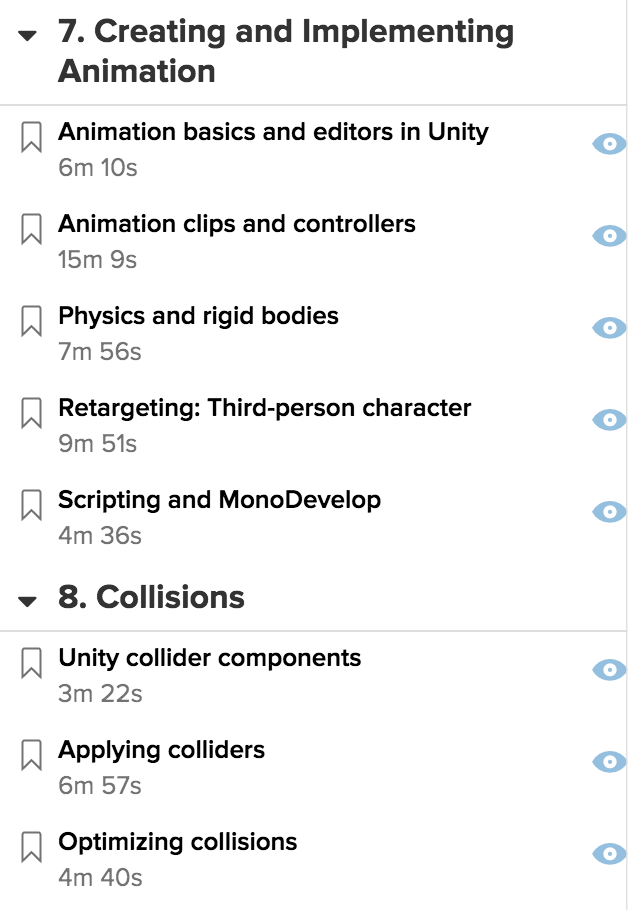

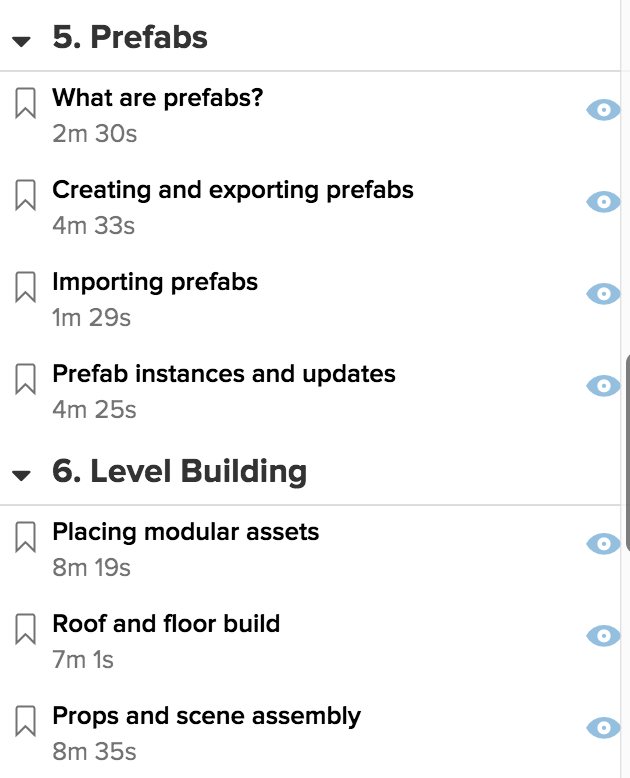

rolerman-UnityEssentials

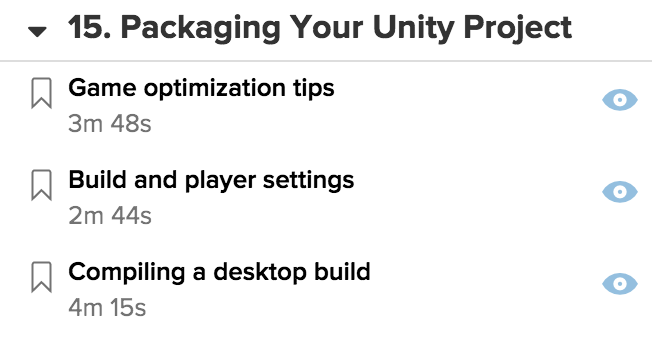

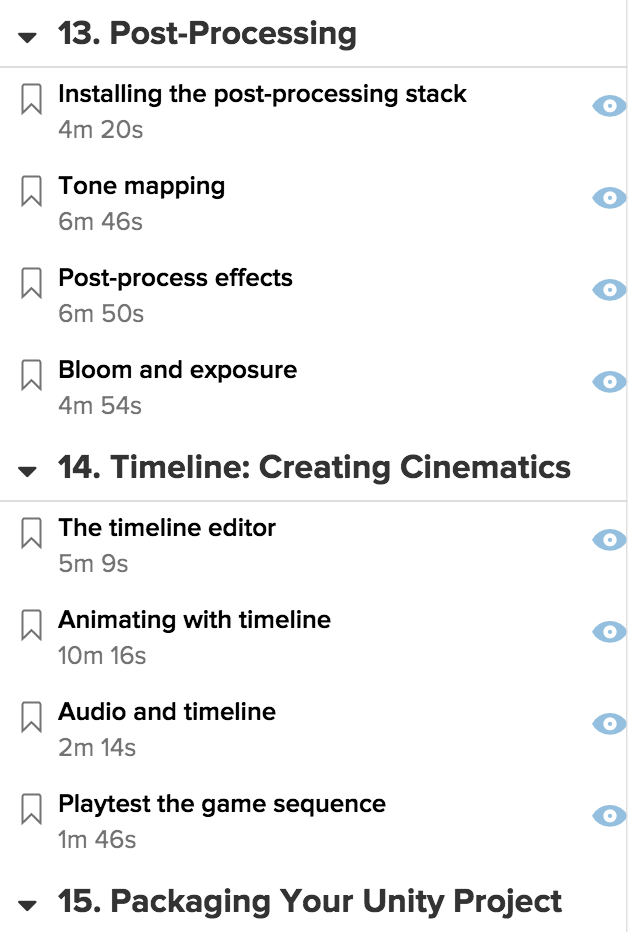

I did the tutorials! I thought the screenshots we were supposed to take were of us having watched all the videos (the little blue eyeball means the video was watched), but here is a screenshot of the completed game as well:

aahdee-Unity2

sheep – 3/22 Unity2

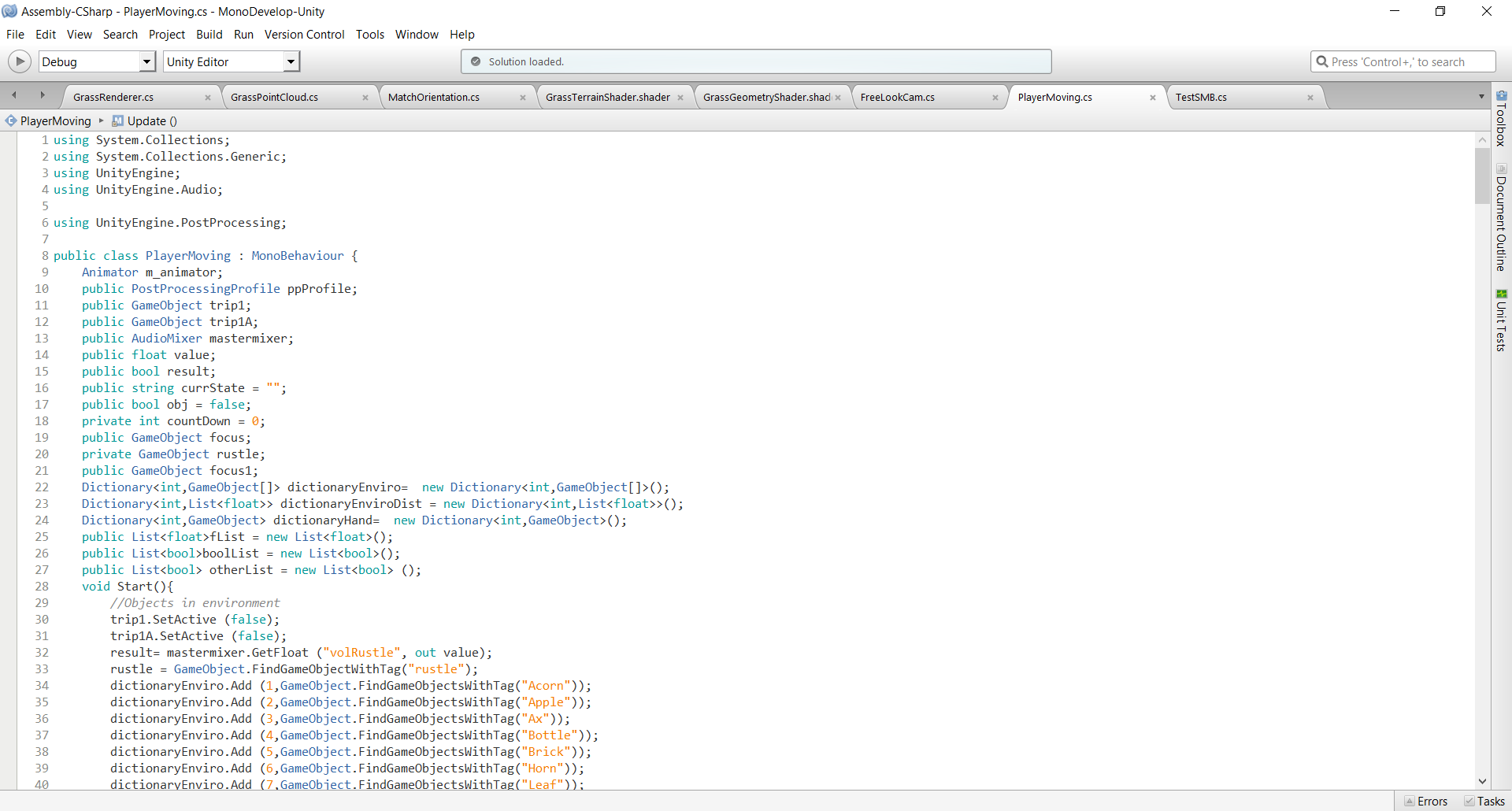

Notes:

You can make a dictionary of Lists by declaring

`Dictionary<int, List<float>> varname = new Dictionary<int, List<float>>();` (this needs `using System.Collections.Generic;` at the top of the script)
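One catch with this pattern: the outer dictionary does not create the inner lists, so you have to make each key's `List<float>` before the first `Add`. Here is a minimal sketch. `DictOfLists` and `AddValue` are hypothetical helper names:

```csharp
using System.Collections.Generic;

public static class DictOfLists
{
    // Appends a value to the list stored under `key`,
    // creating that list the first time the key is used.
    public static void AddValue(Dictionary<int, List<float>> map, int key, float value)
    {
        if (!map.TryGetValue(key, out List<float> list))
        {
            list = new List<float>();
            map[key] = list;
        }
        list.Add(value);
    }
}
```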

joxin-lookingoutwards07

KFC WOW@25 Campaign – An Augmented Reality App

March 2013, Blink Digital (Mumbai, India)

When I was researching augmented reality projects broadly on the web, most projects I encountered were not ‘art’ but instead, in the words of Alibaba AR lead Jiang Jiayi, “gimmicks to generating more users and engagement” for profit. On Vimeo, I ran into this project, an augmented reality app for KFC’s WOW@25 campaign in India, created by Mumbai-based ad agency Blink Digital. I was very amused by this project, and I find it the most literal representation I have ever seen of AR’s use as a marketing strategy by corporations. In this app, users literally scan money, and the app tells them what they can buy with that money at KFC. The message couldn’t be more direct. I think this project is quite representative of one kind of relationship that creative technology has with business. Many other companies have done similar AR projects (e.g., Buy+ and Catch a Tmall Cat by Alibaba), which are not as literal as this one but essentially serve the same purpose.

Here is a video that tells you about the app as well as the entire ad campaign. It is pretty entertaining in my opinion.

From this quick research in AR, I realized that this technology seems to be used for two polarized objectives: art and marketing. The former strives to question and deconstruct myths in our society while the latter exploits the lack of critique. This reminds me again of the nature of technology as something completely determined by human beings, and the tendency for technologies like AR to lead us towards a more dystopian future.

sheep – UnityEssentials 3/23

Intro

Part 1

Part 2

Part 2

Part 3

Part 4

Part 5

Part 6

Part 7

Part 8

Part 9

Part 10

Part 11

Part 12

Part 13

Part 14

Part 15

Final Build

phiaq – UnityEssentials

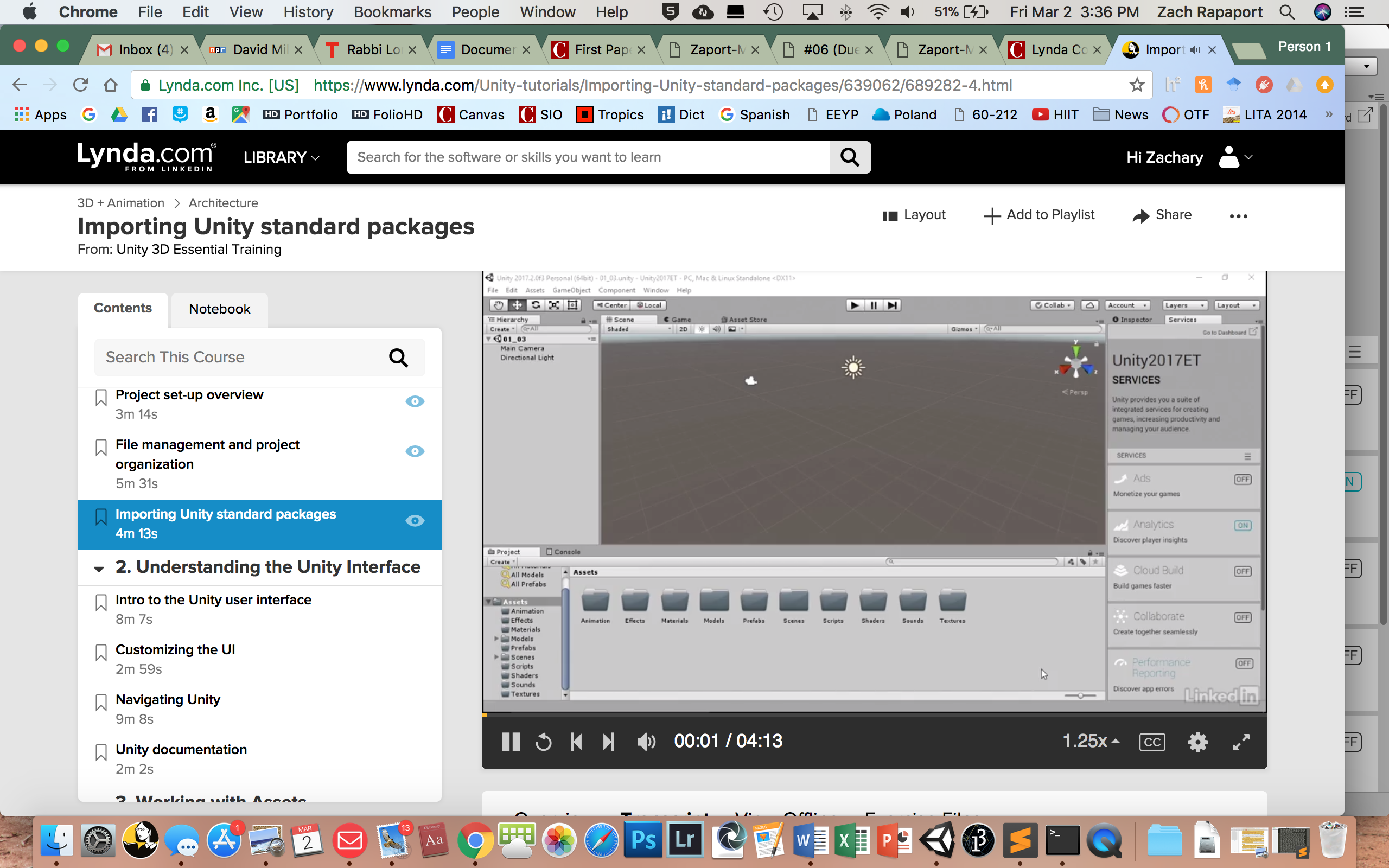

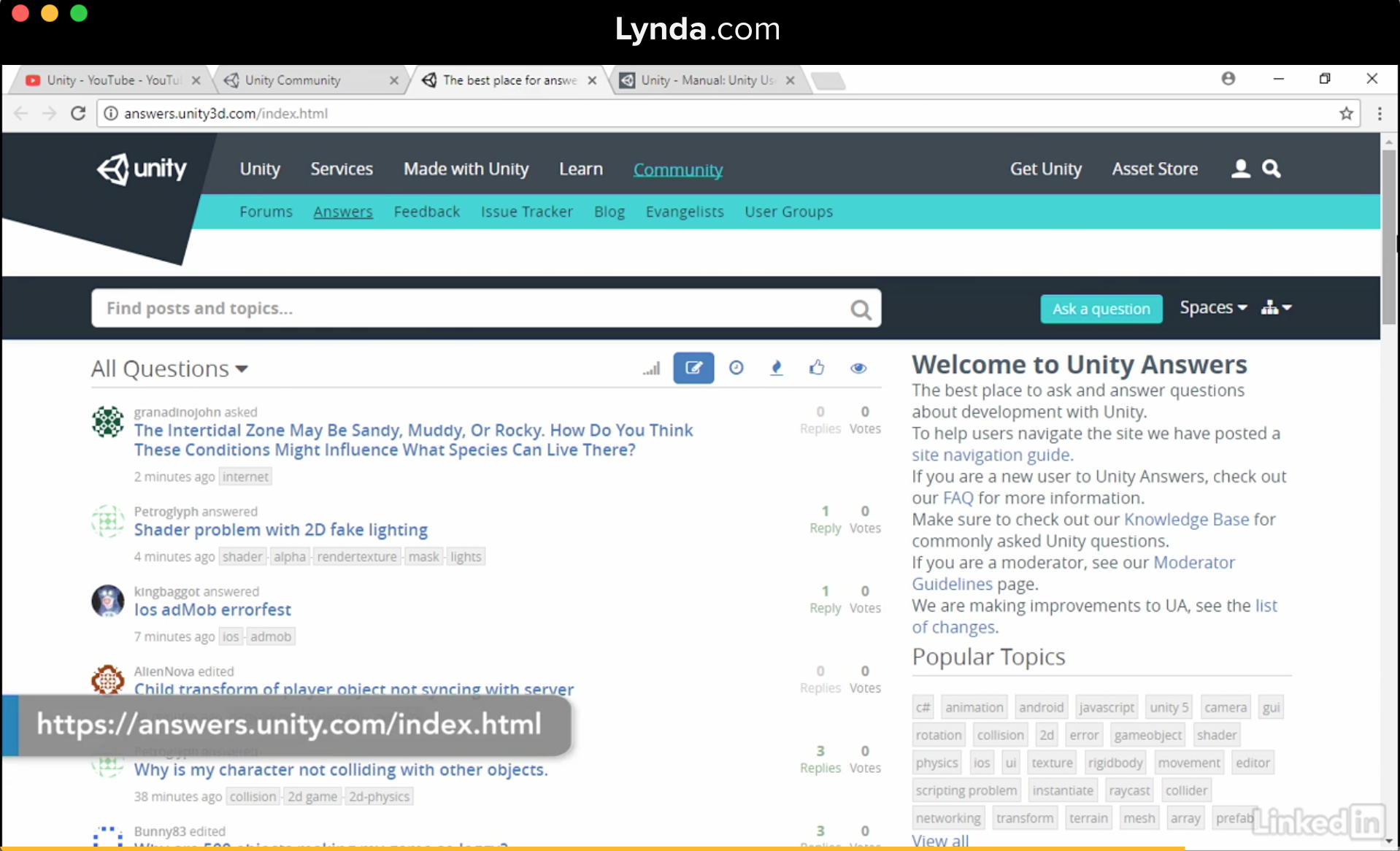

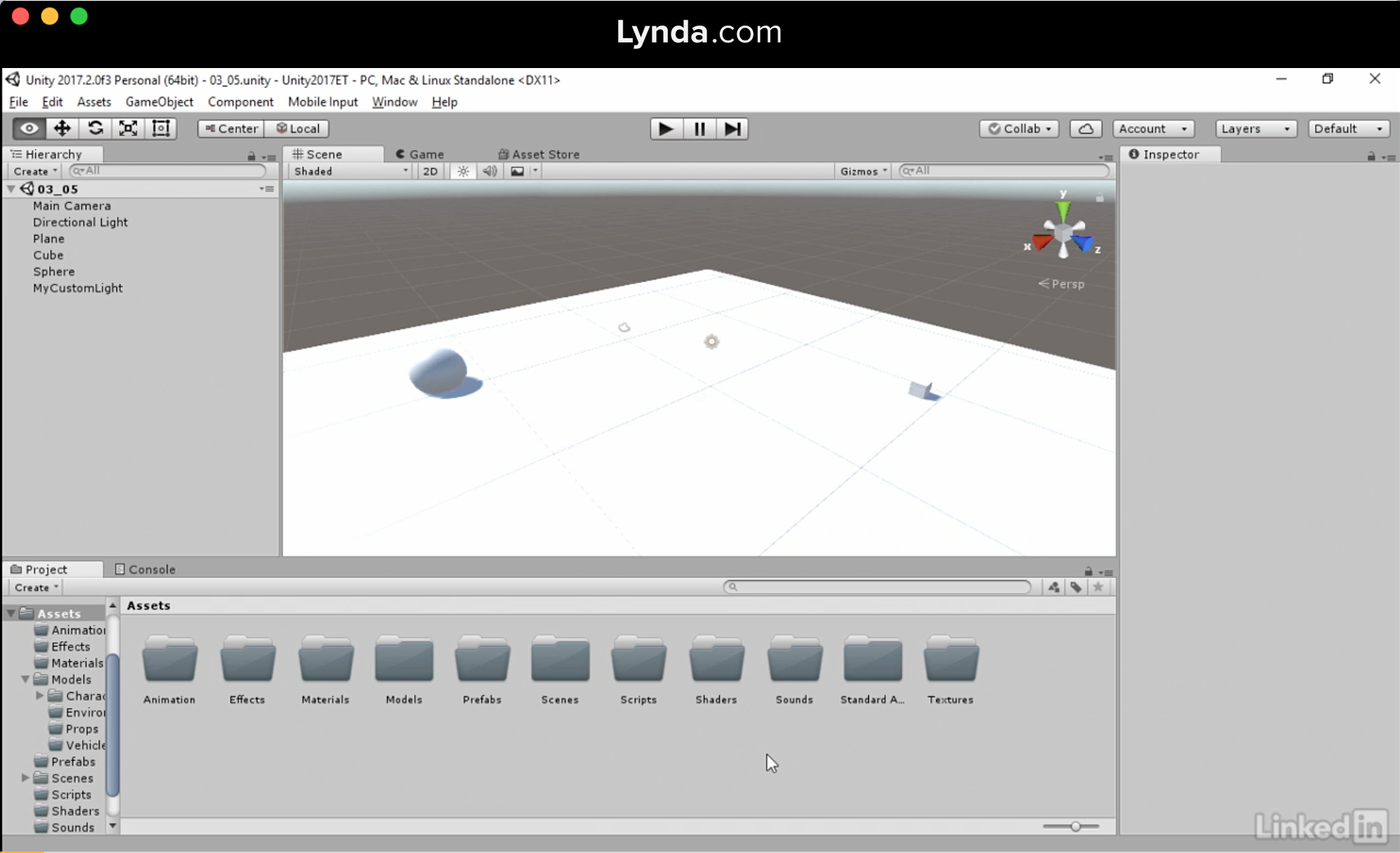

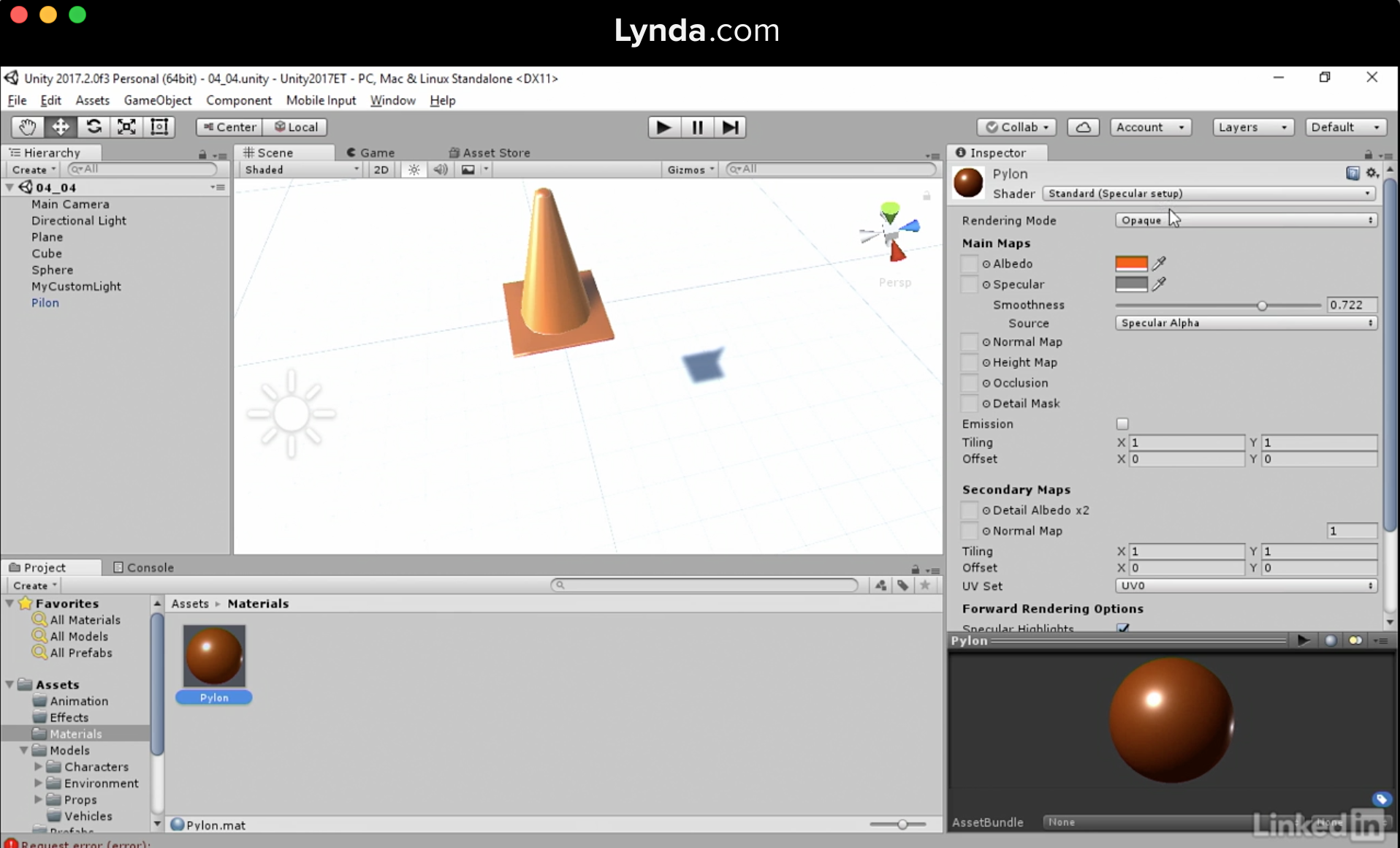

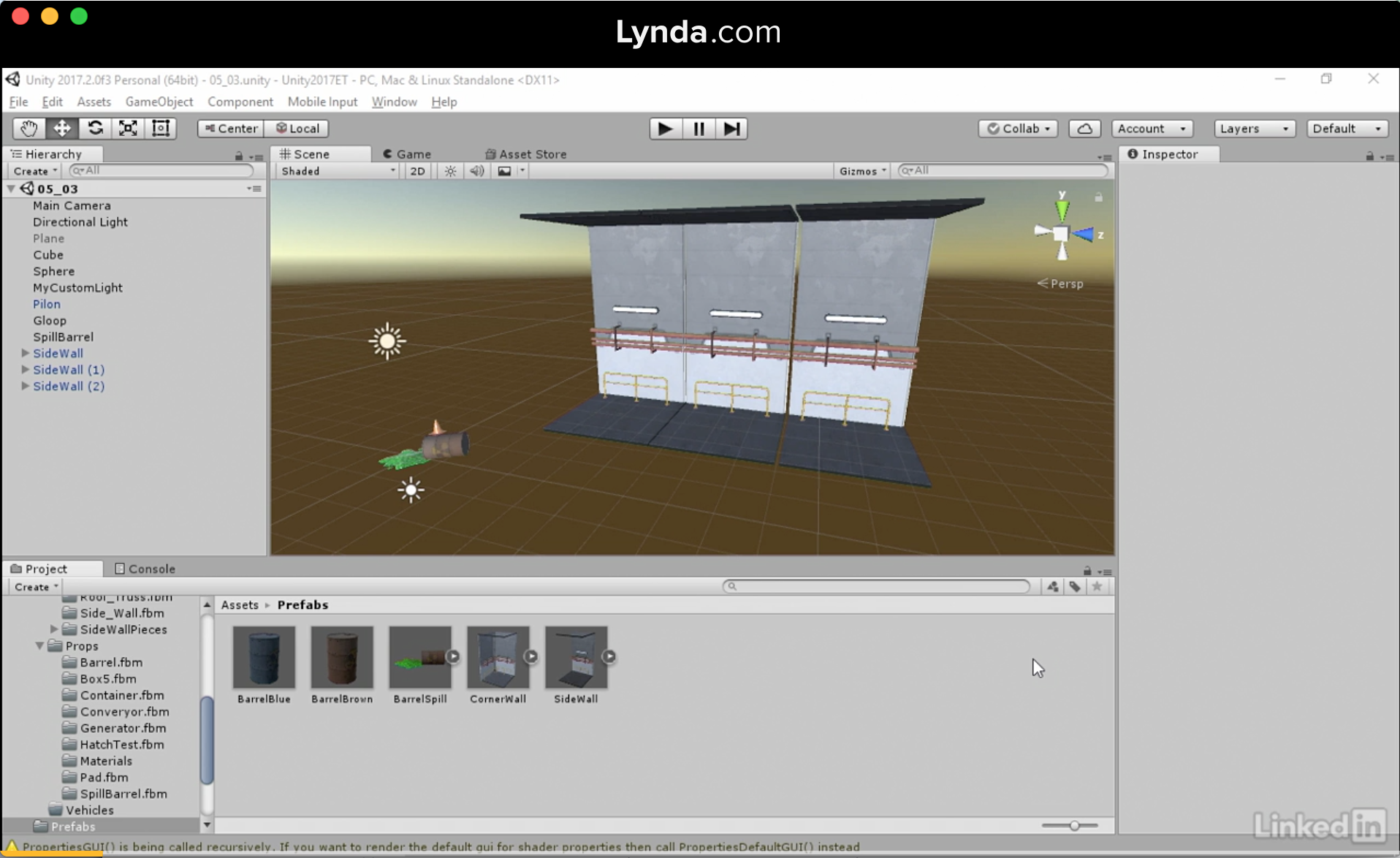

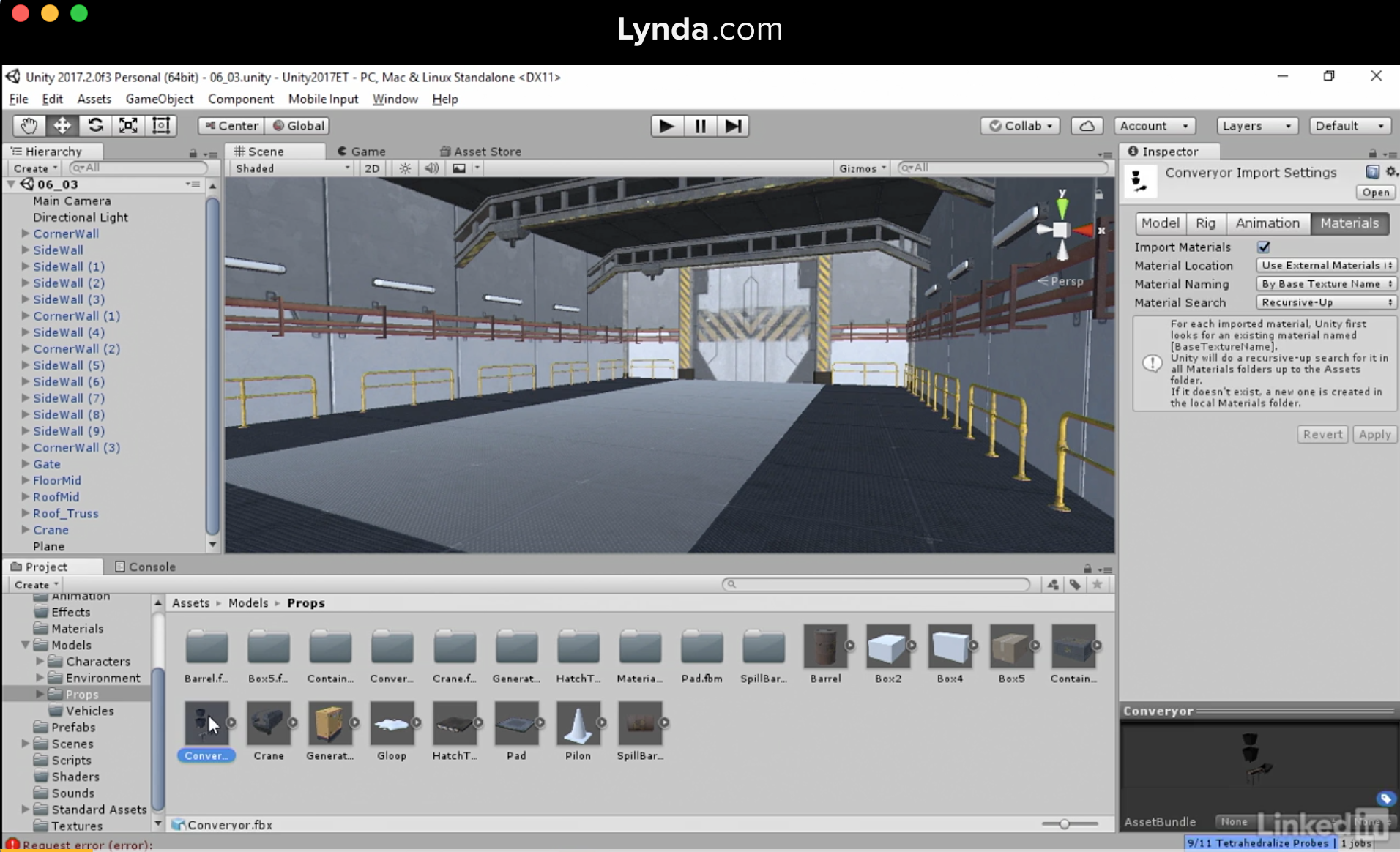

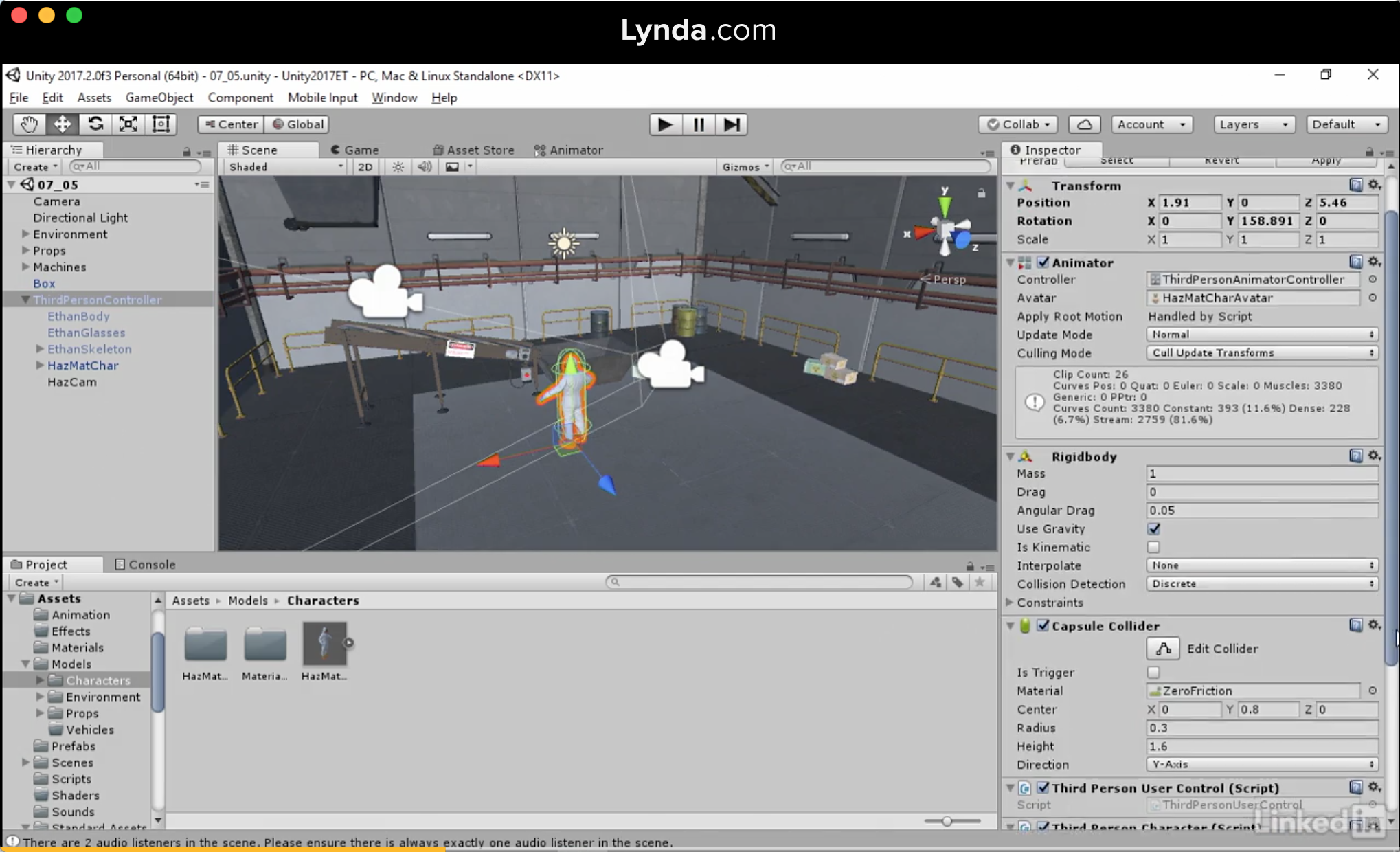

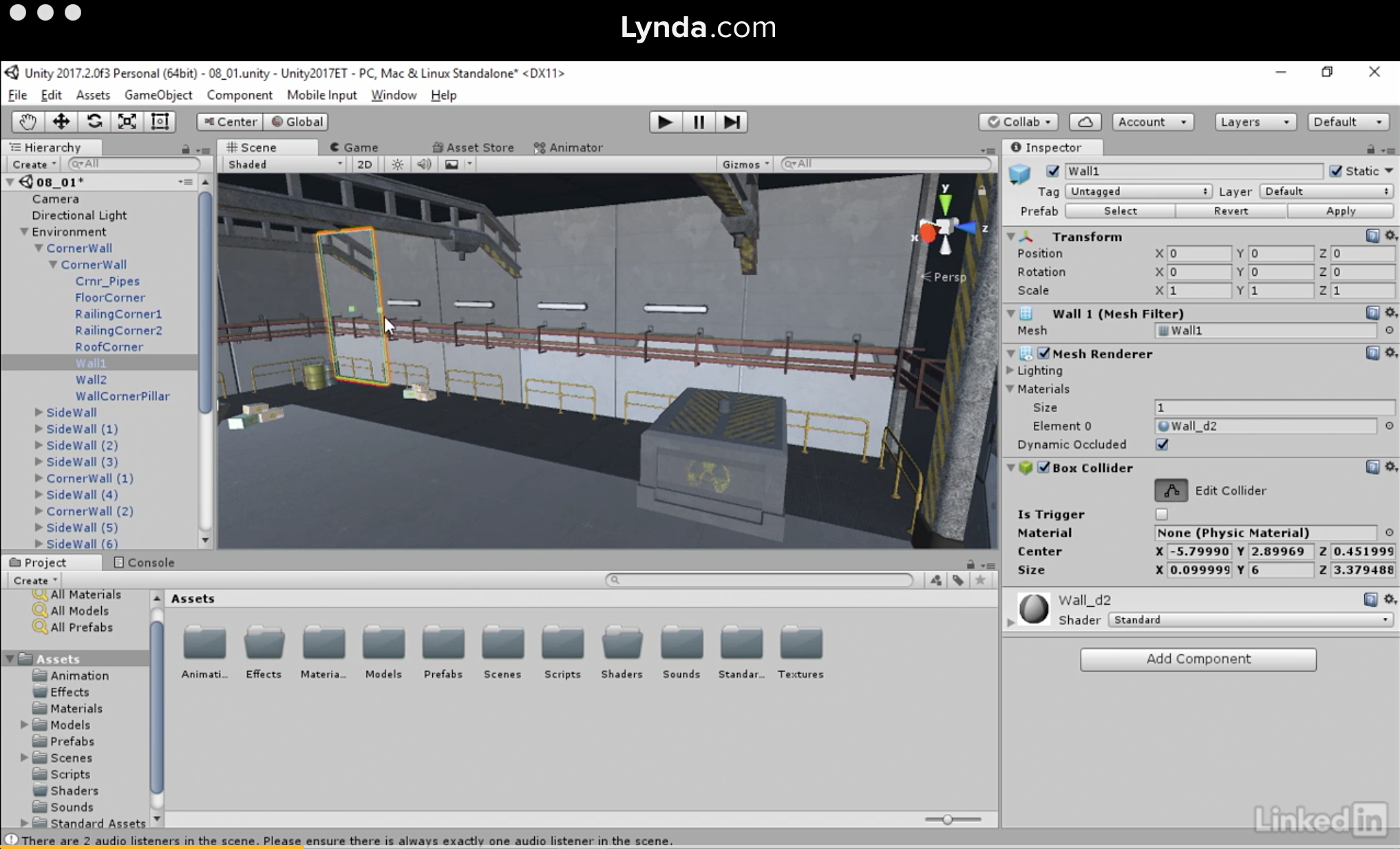

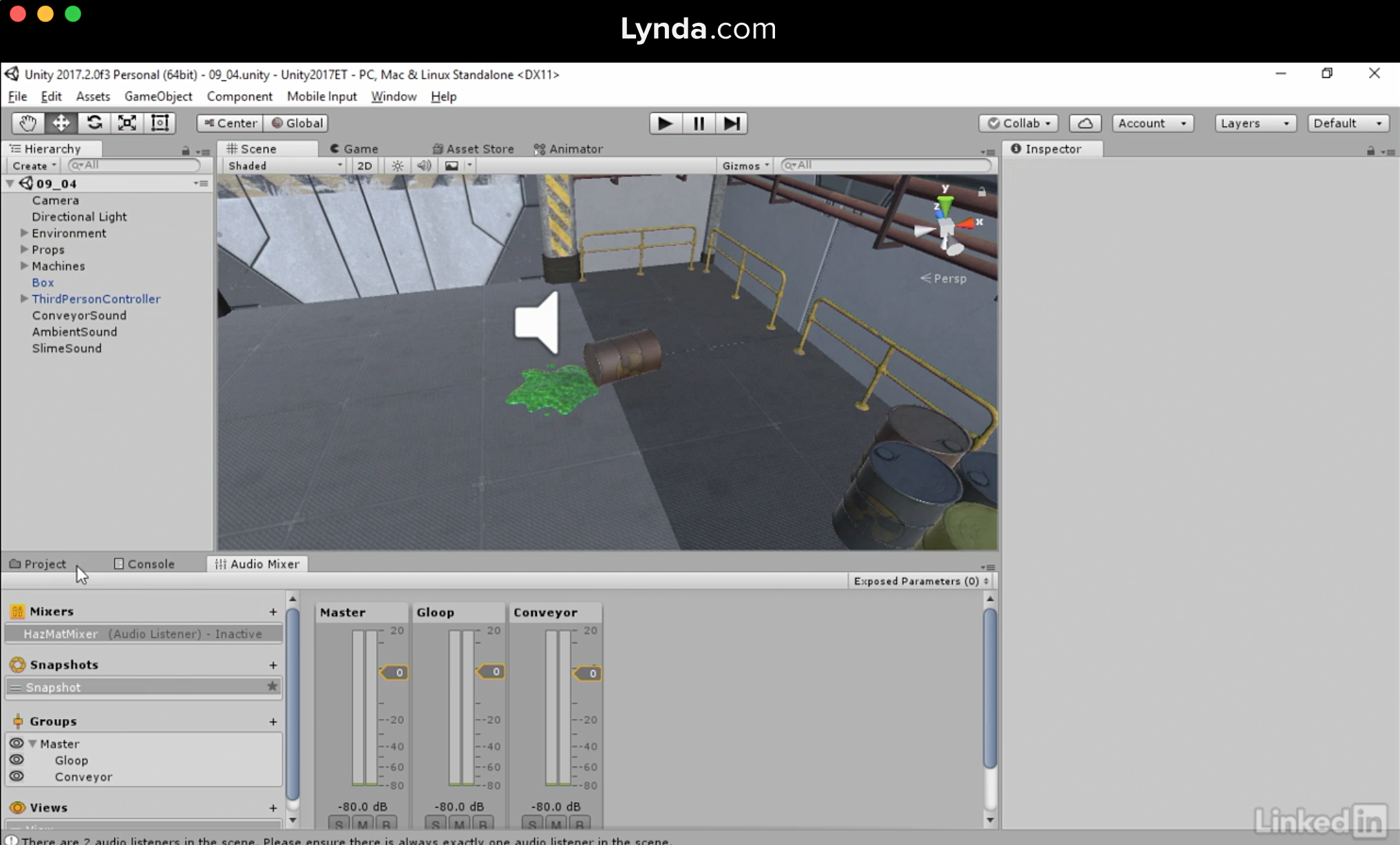

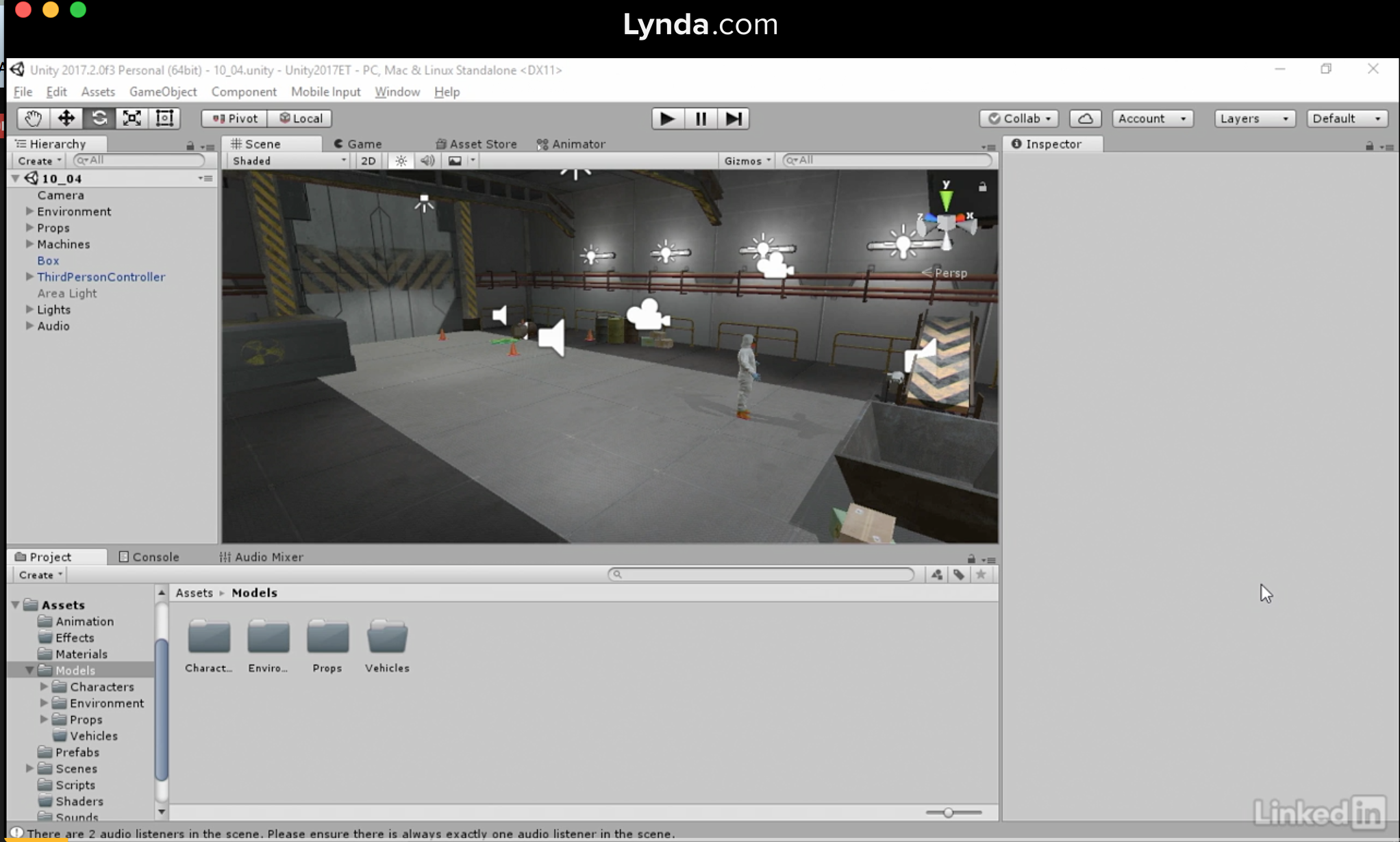

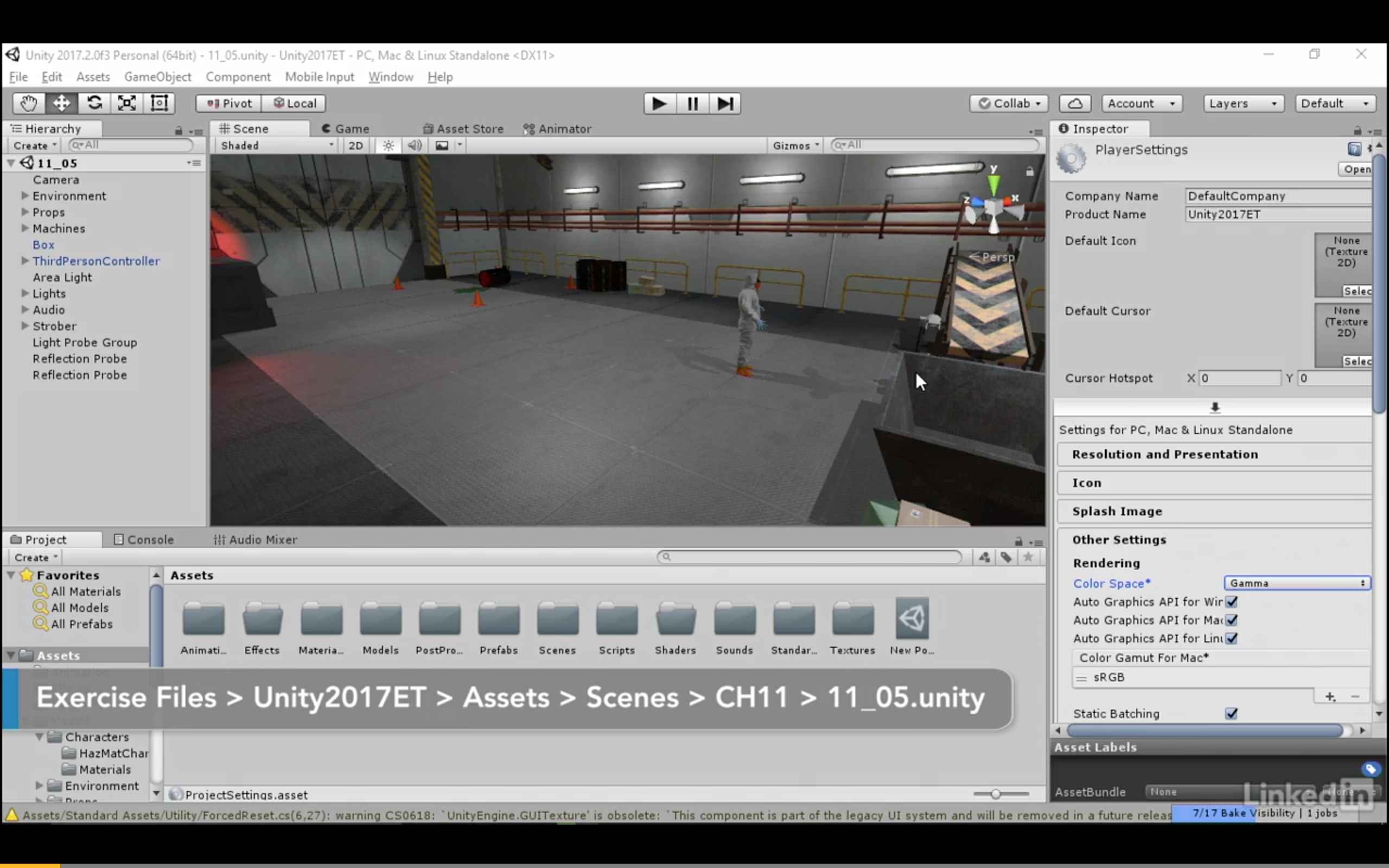

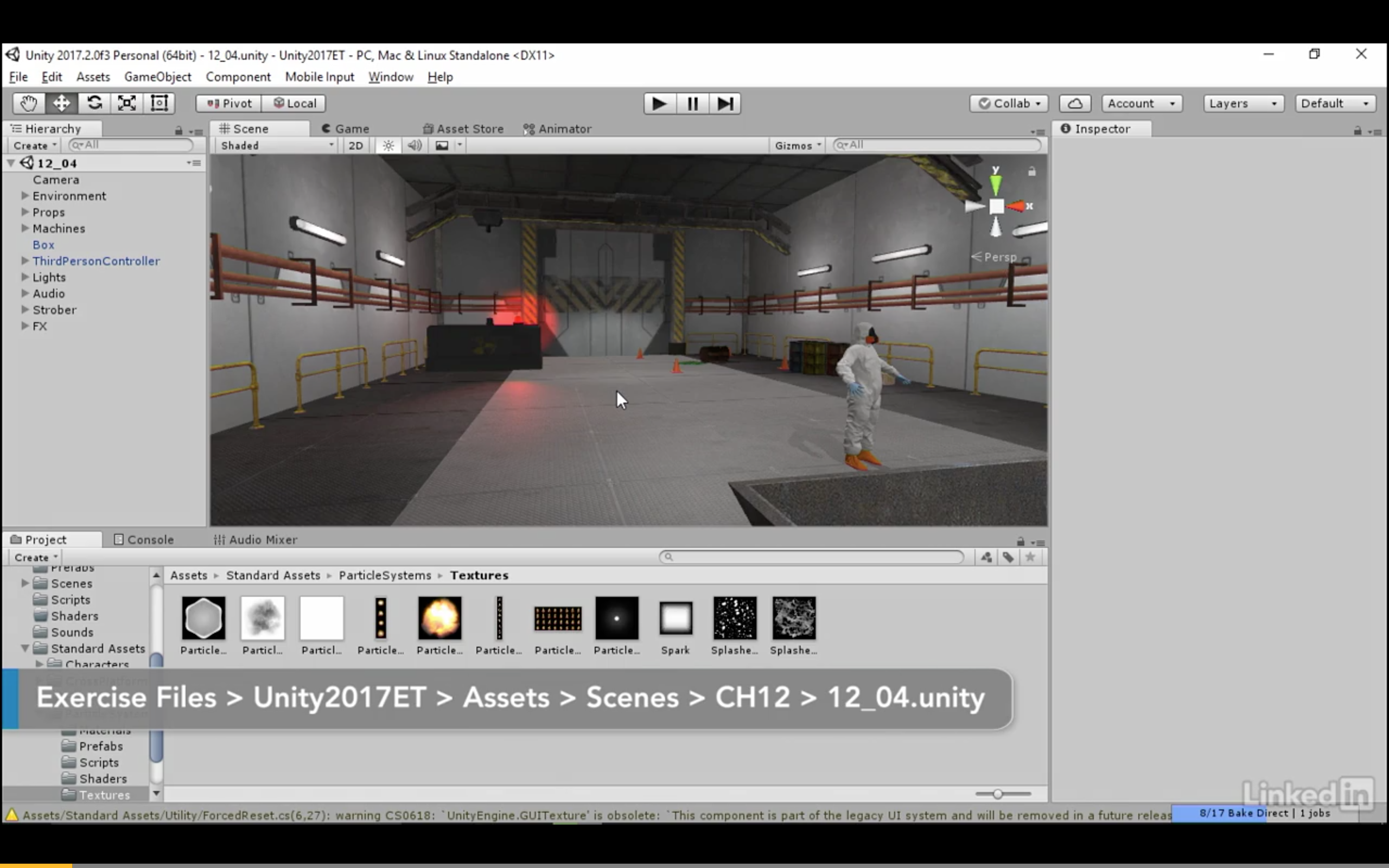

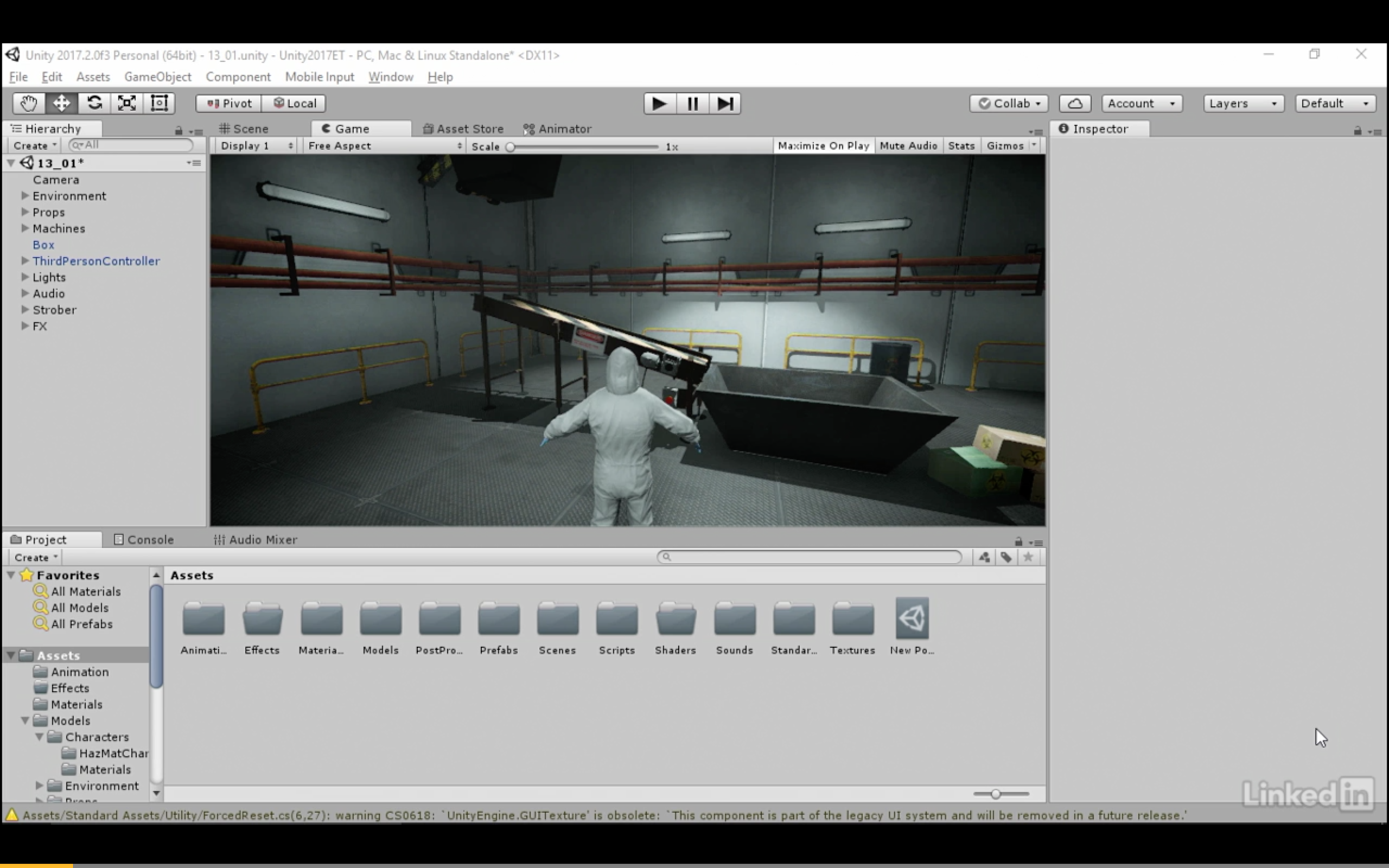

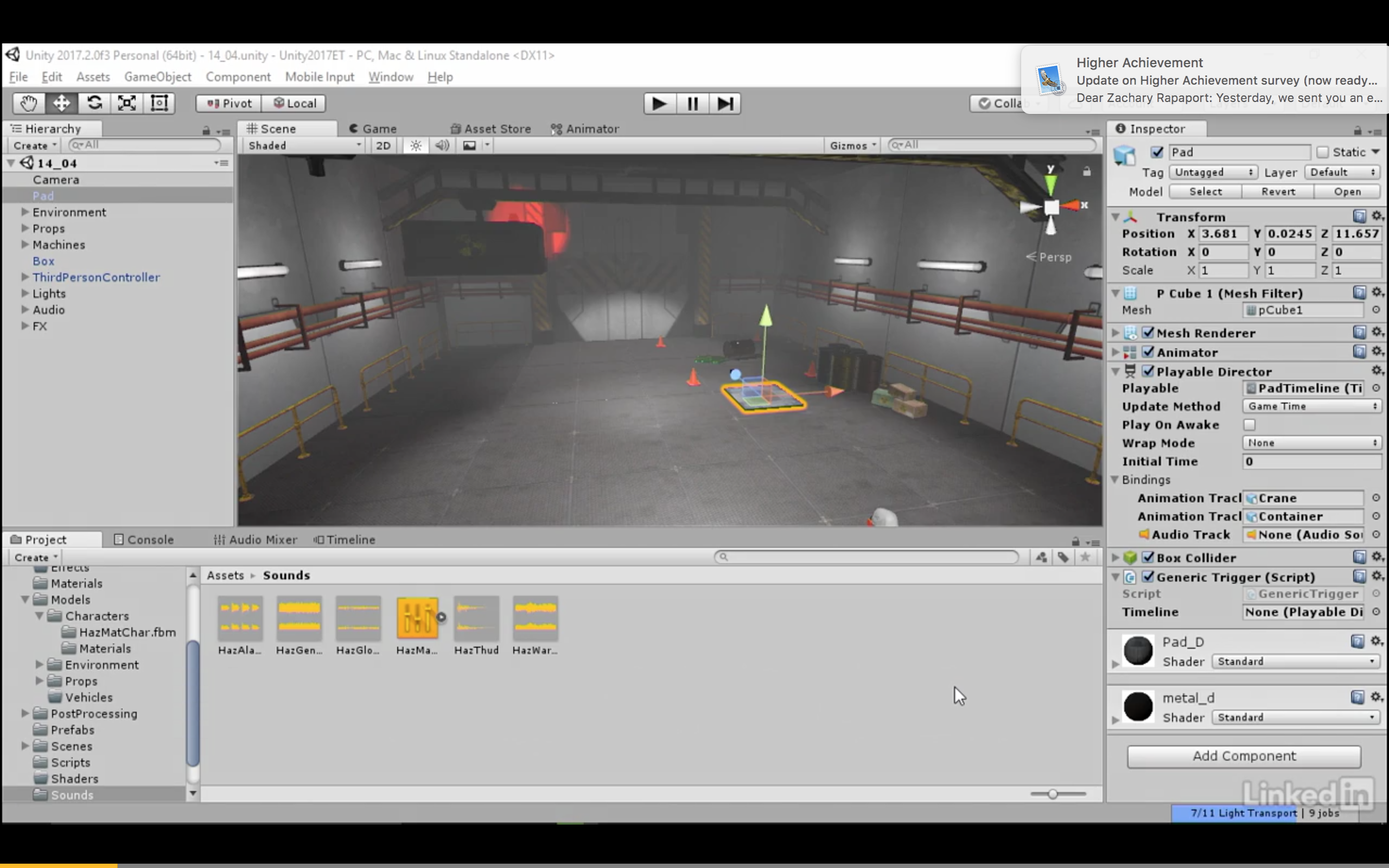

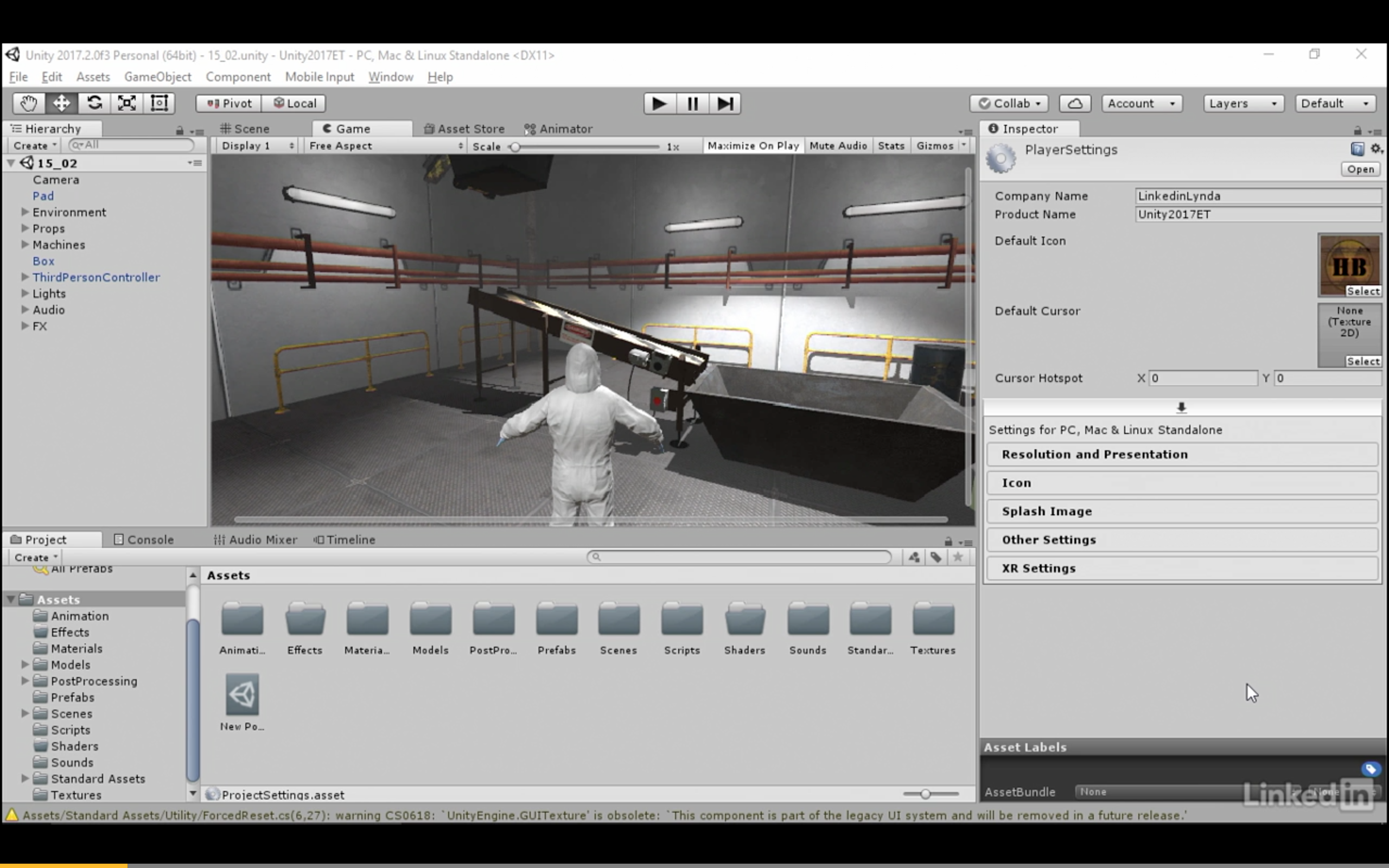

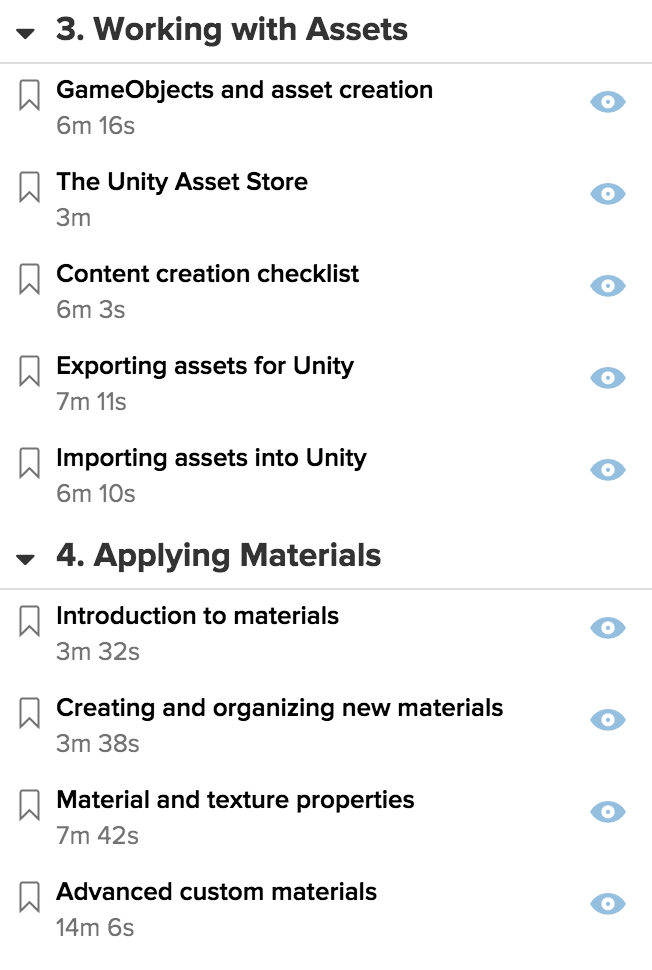

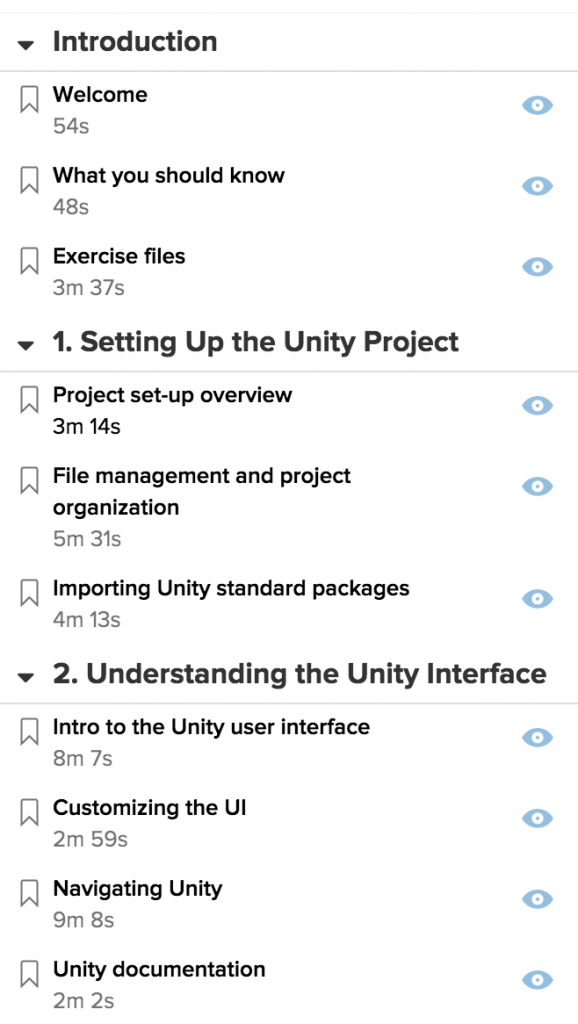

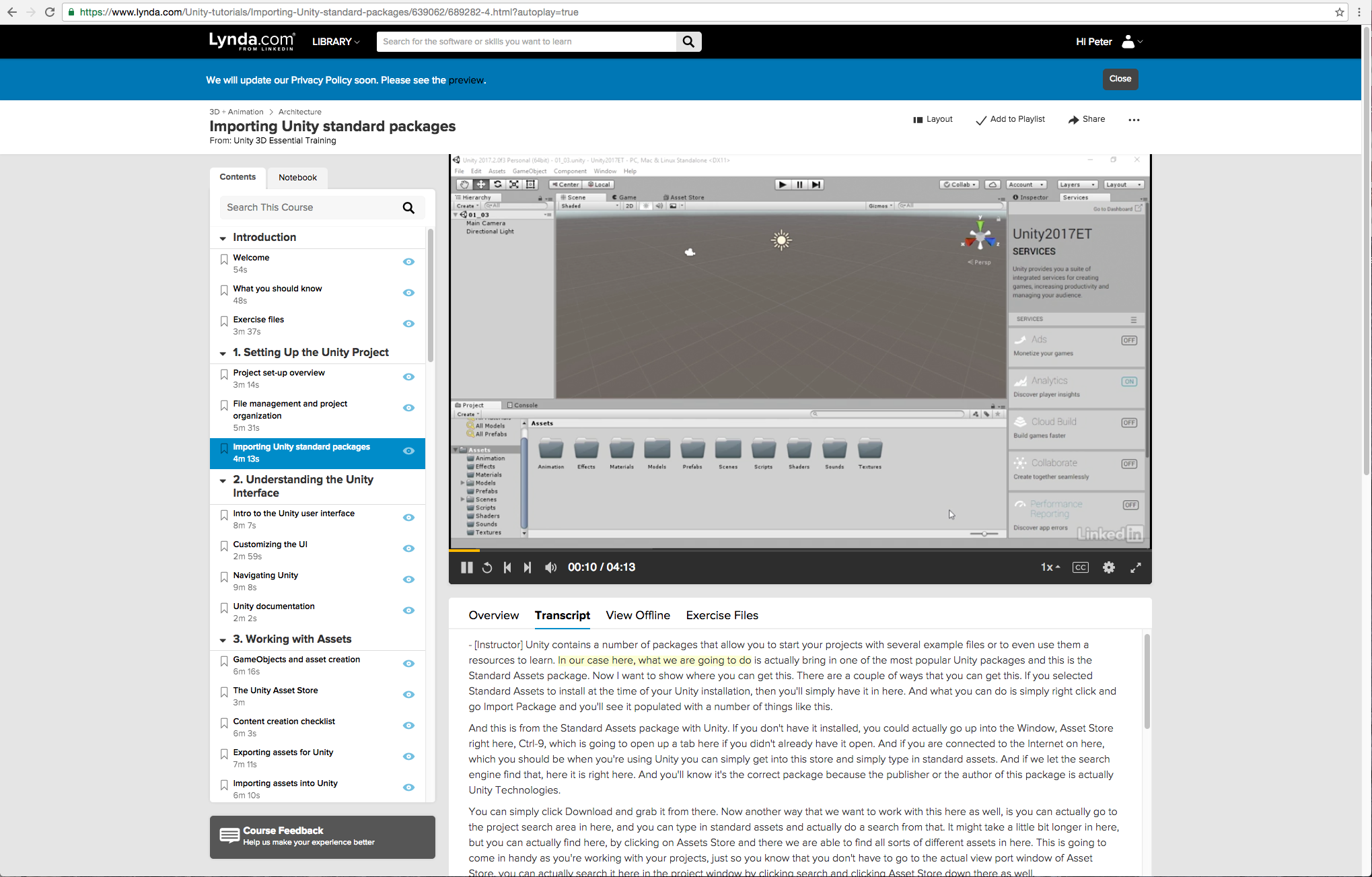

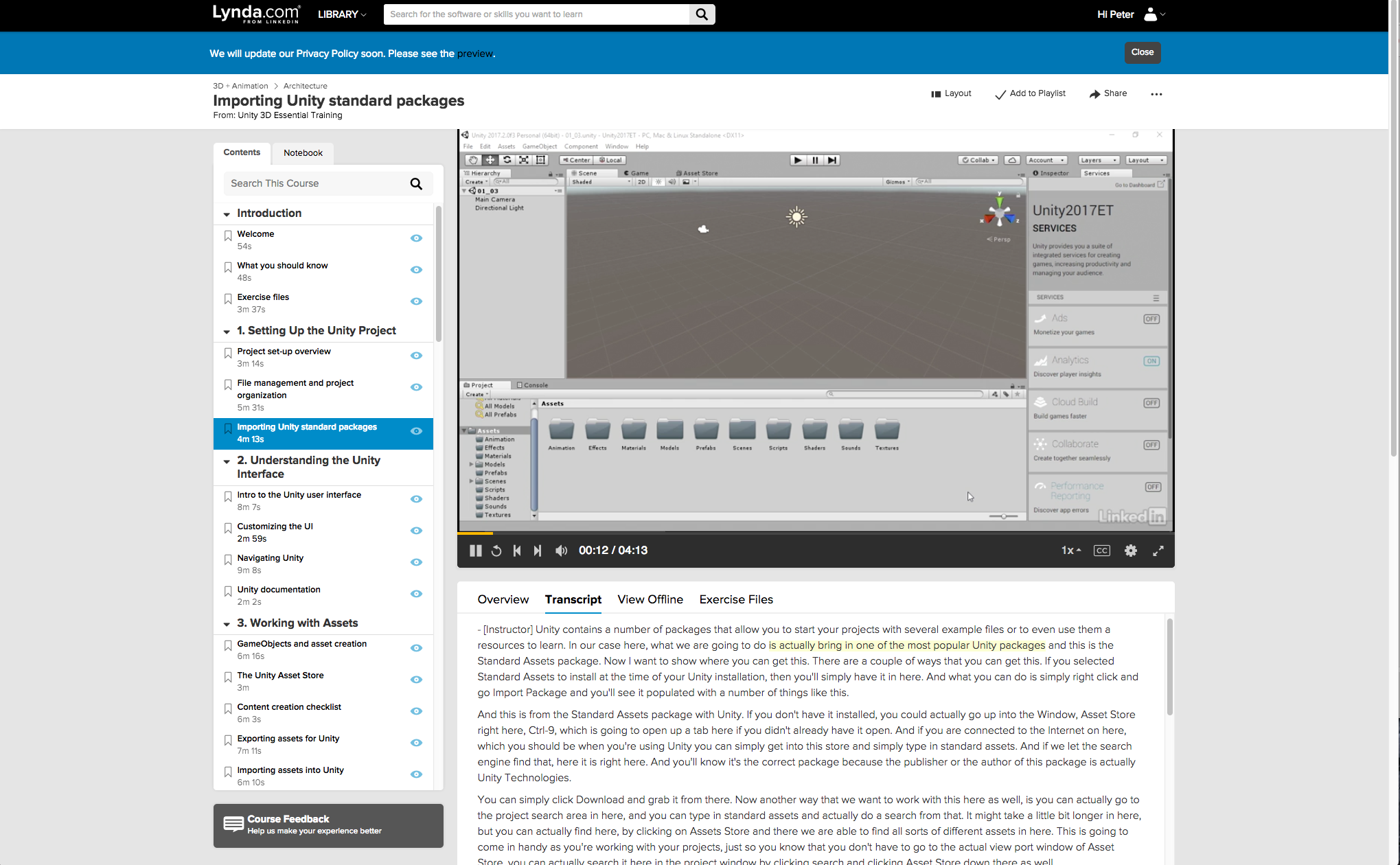

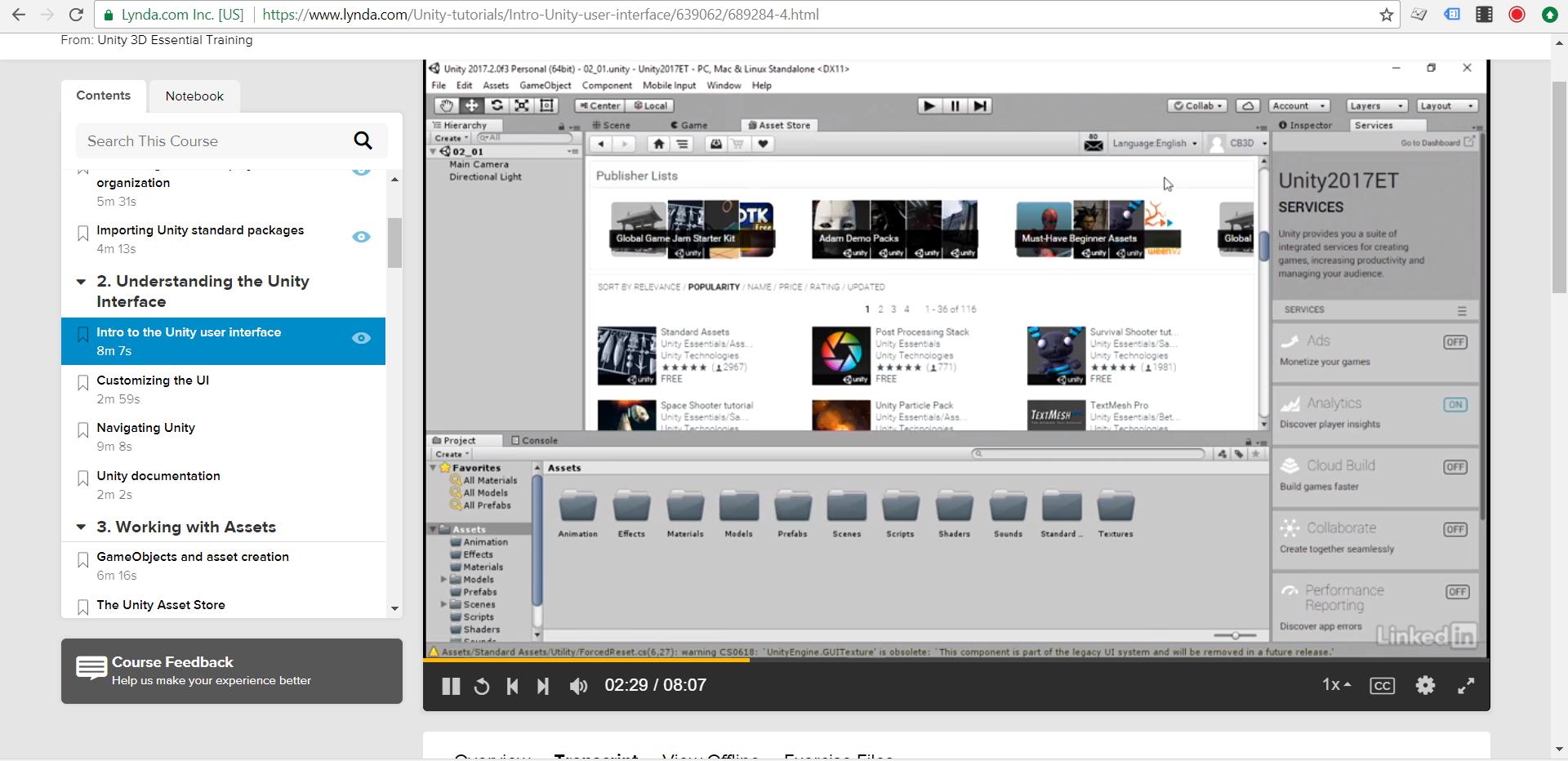

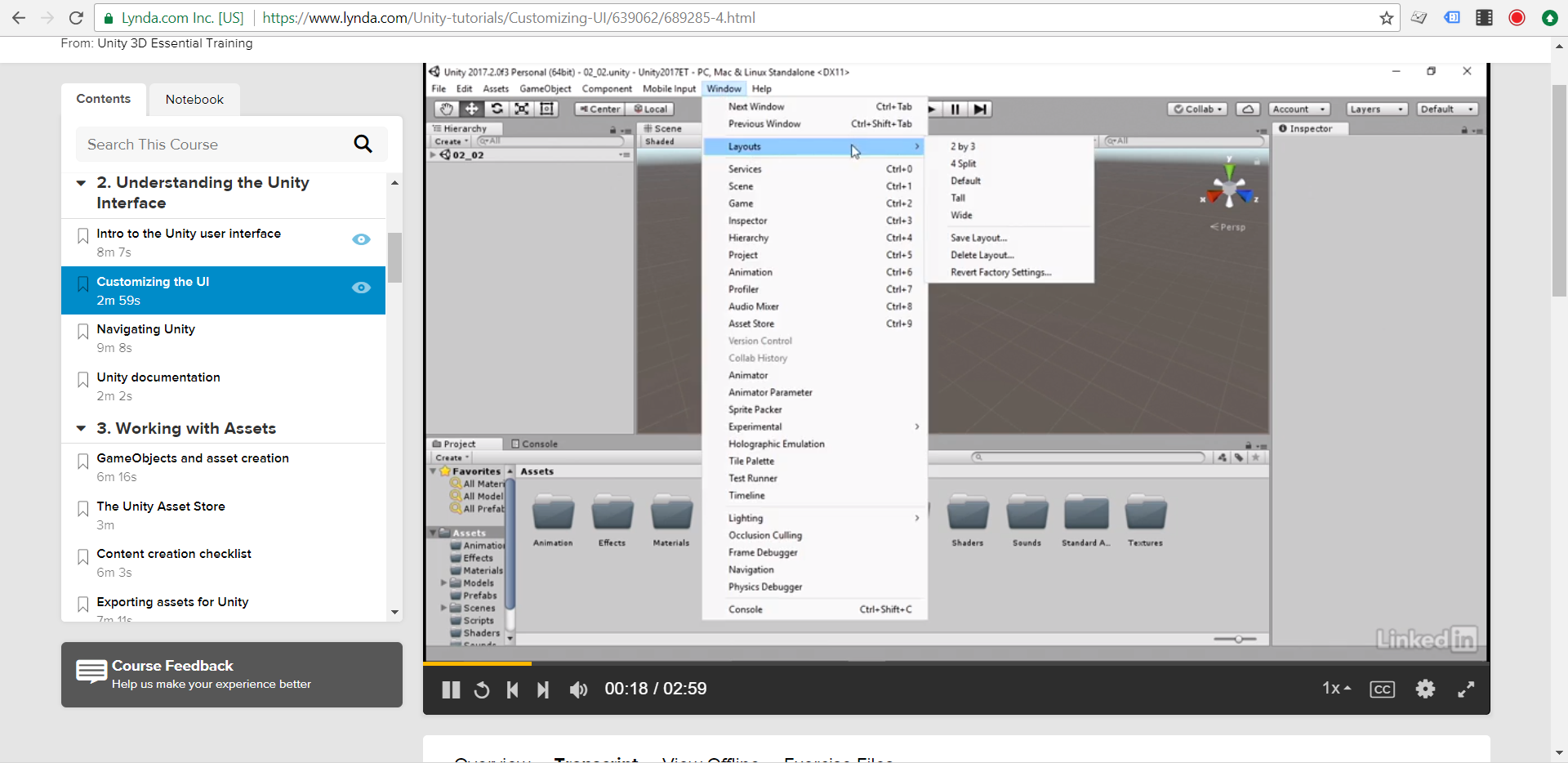

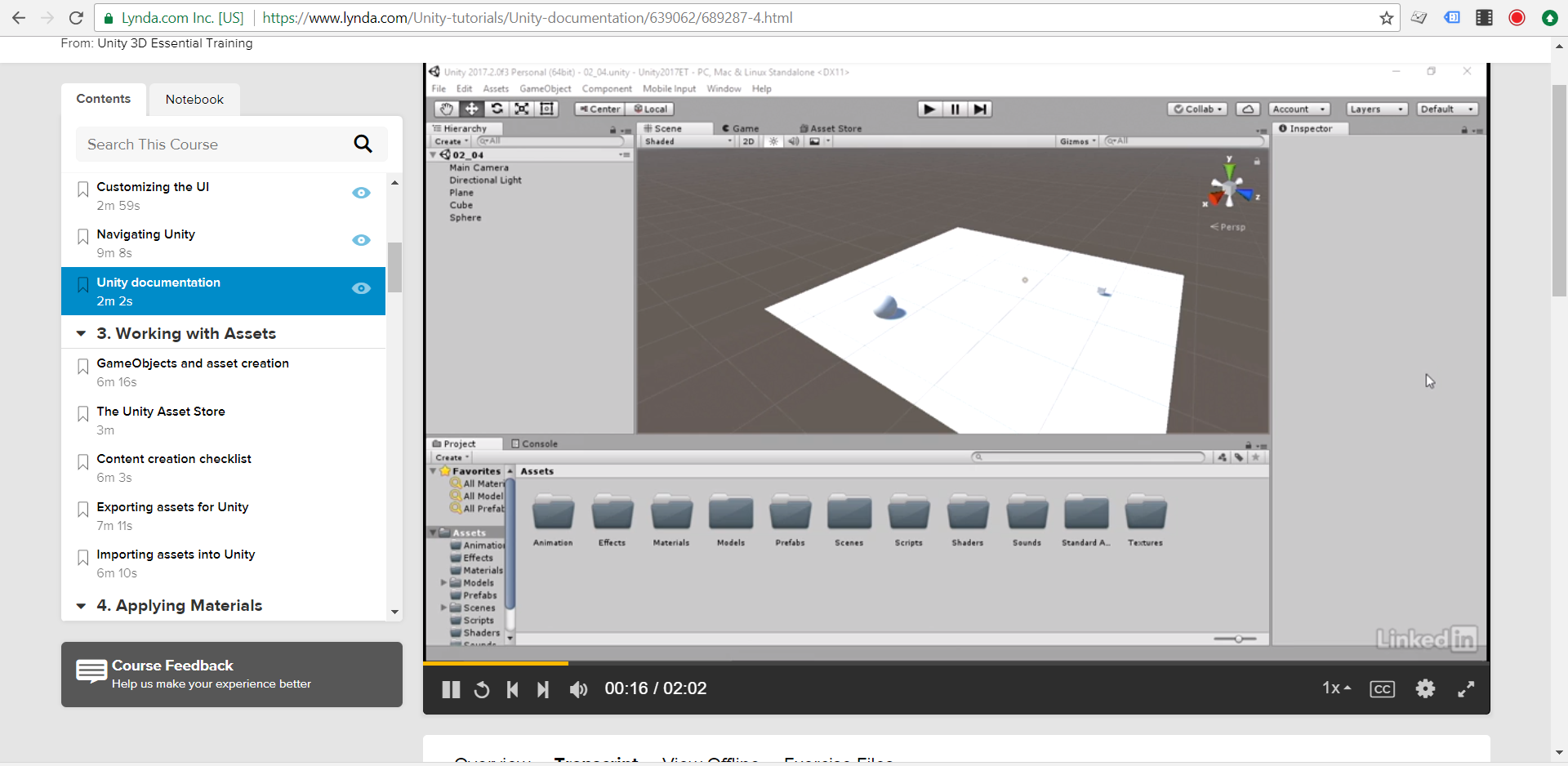

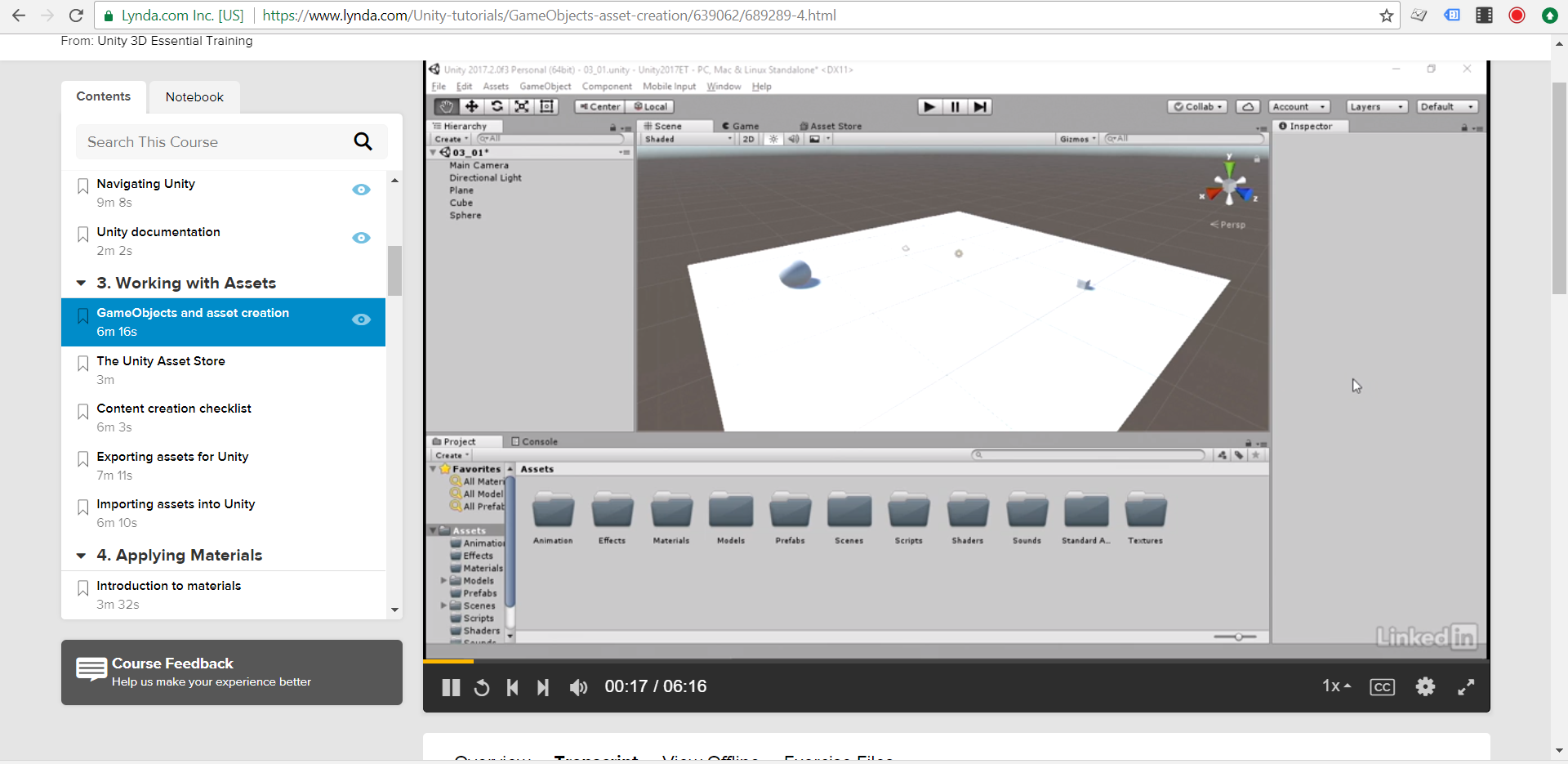

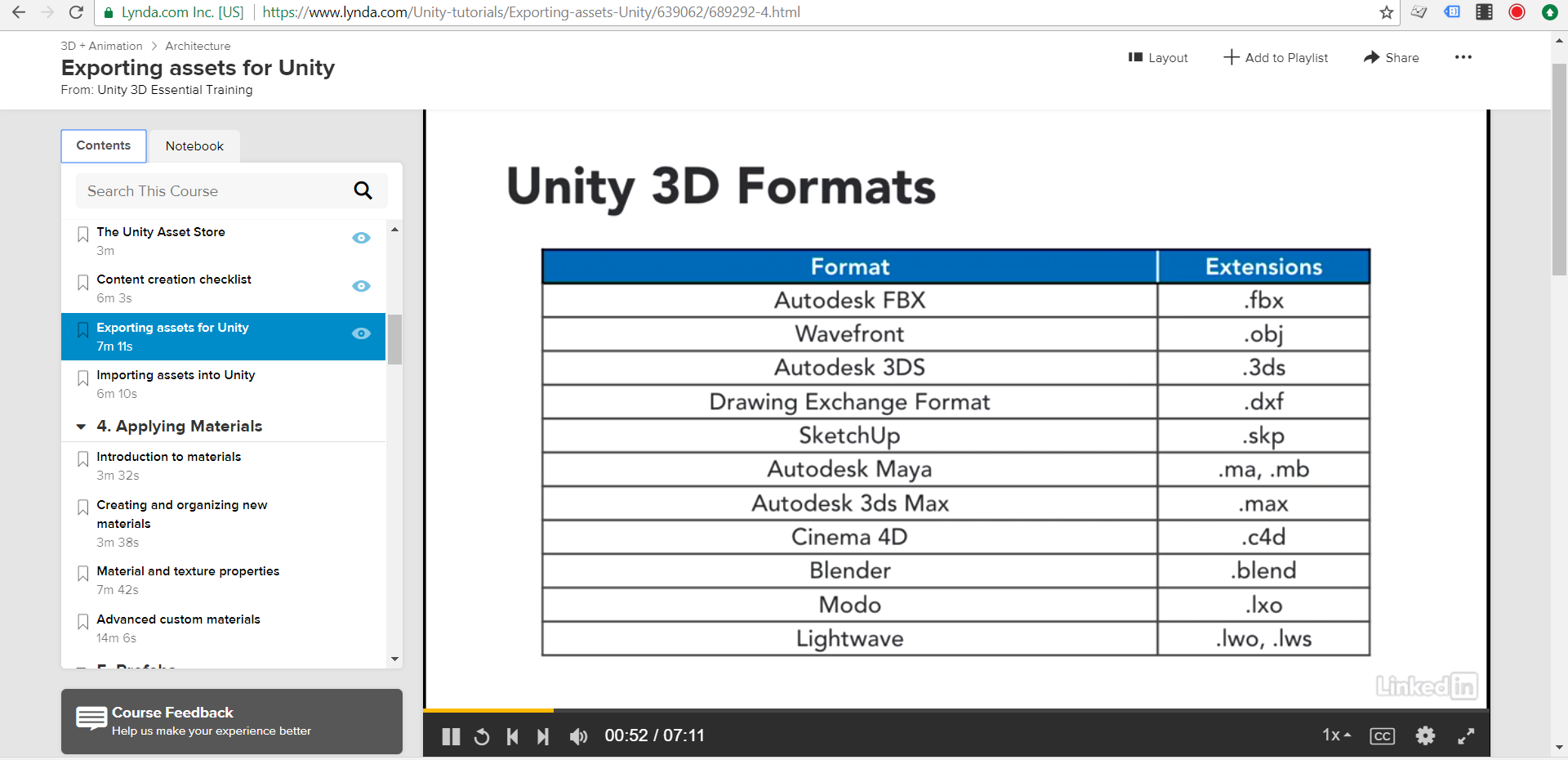

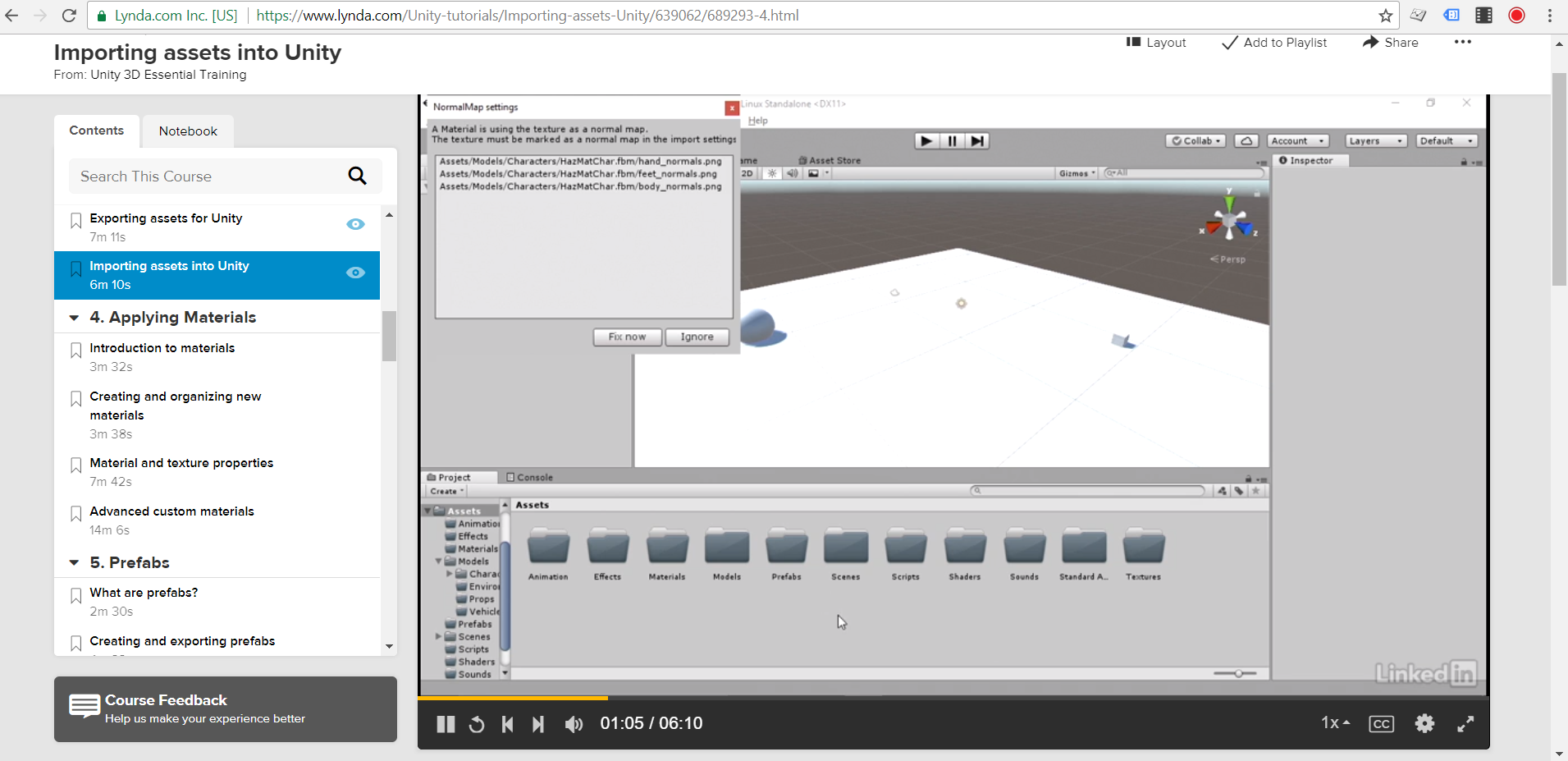

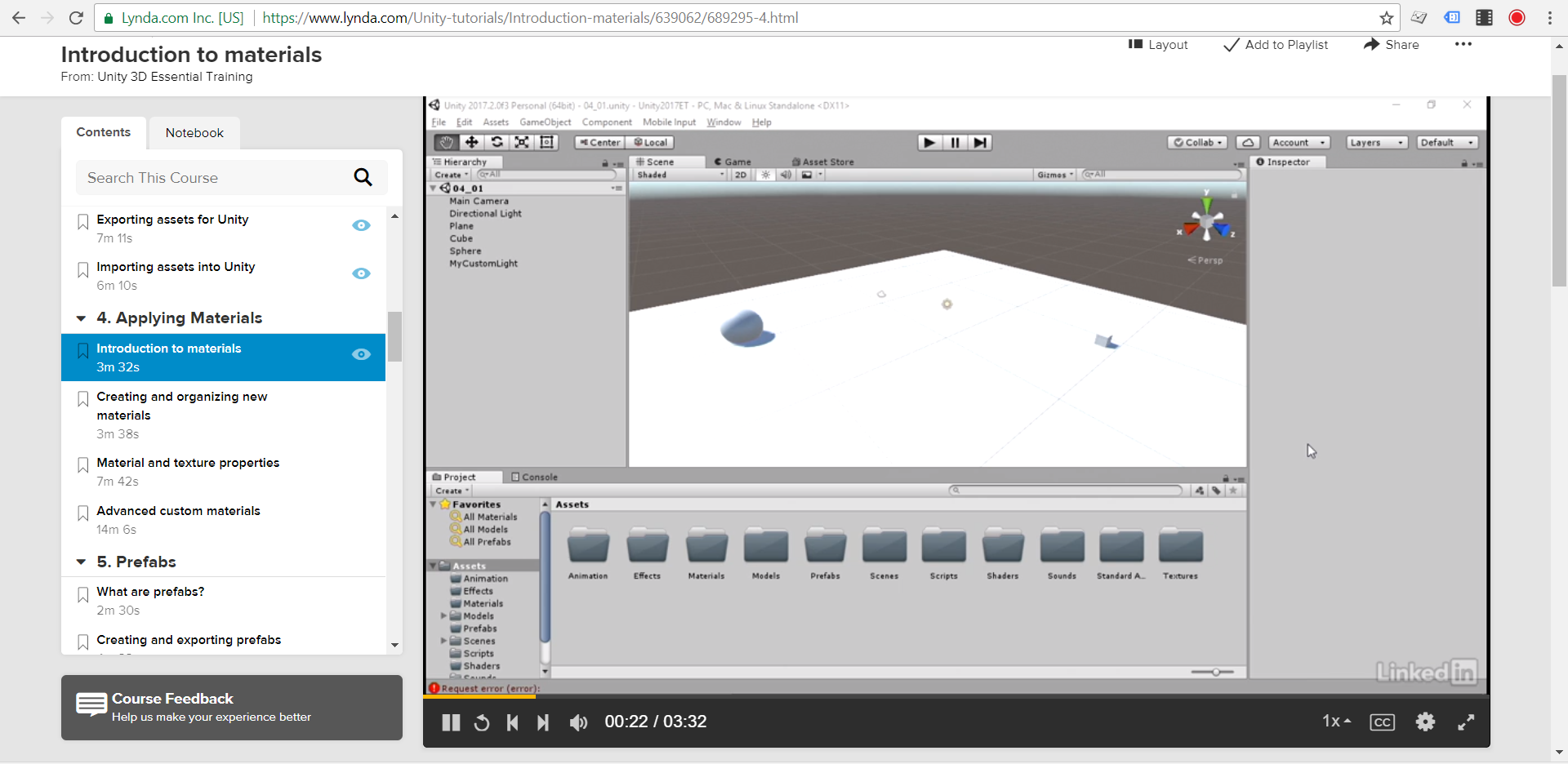

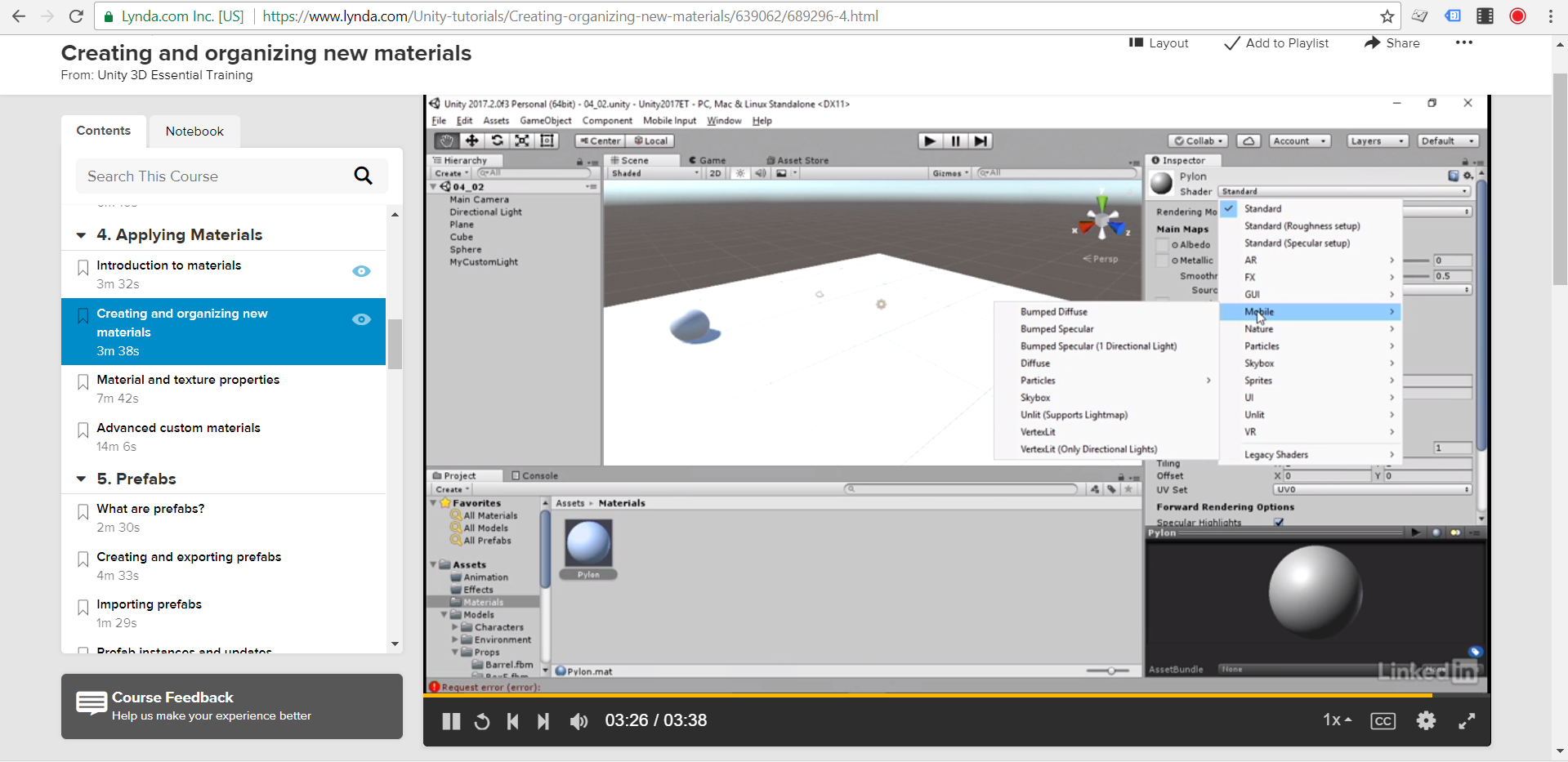

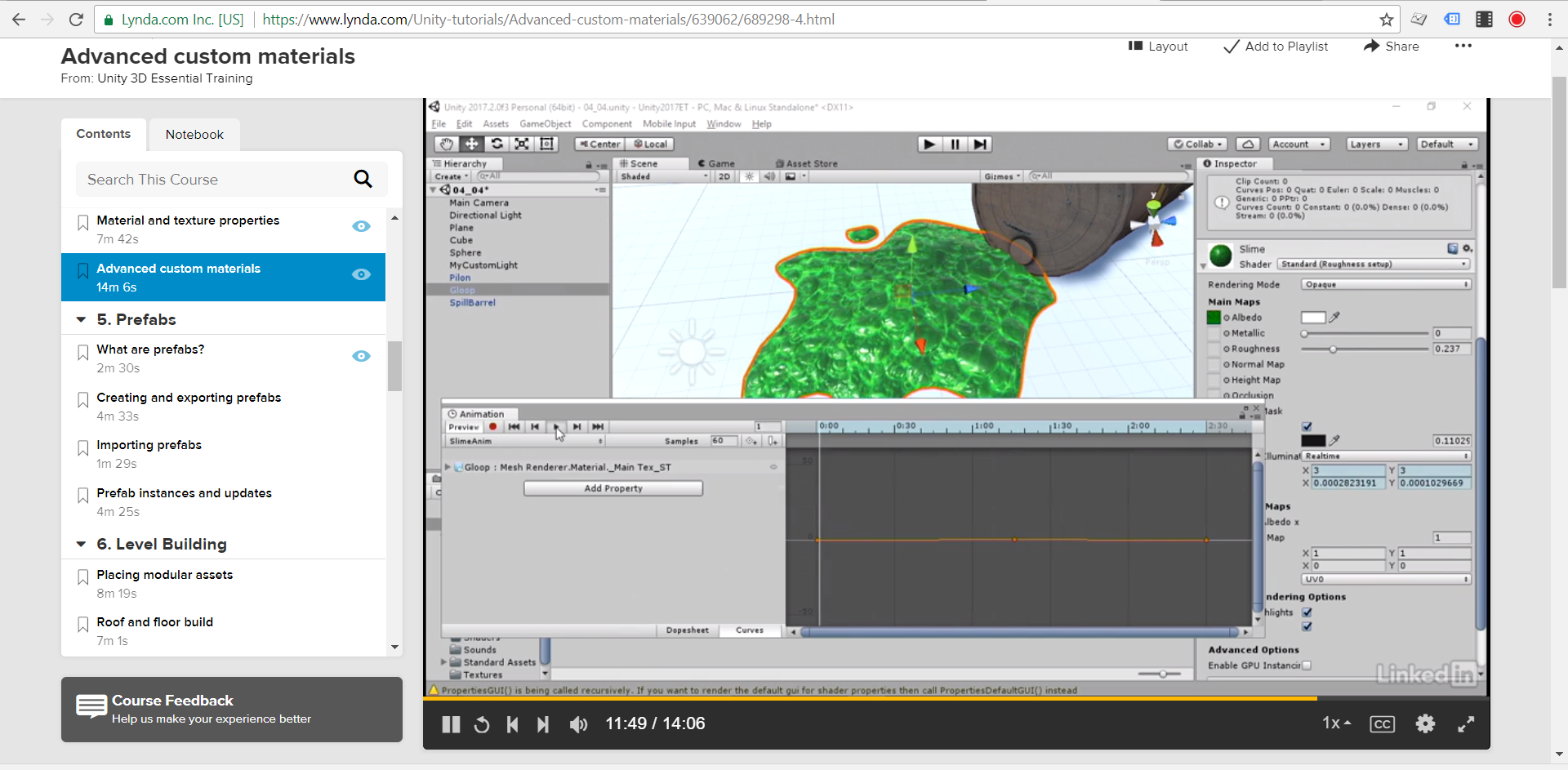

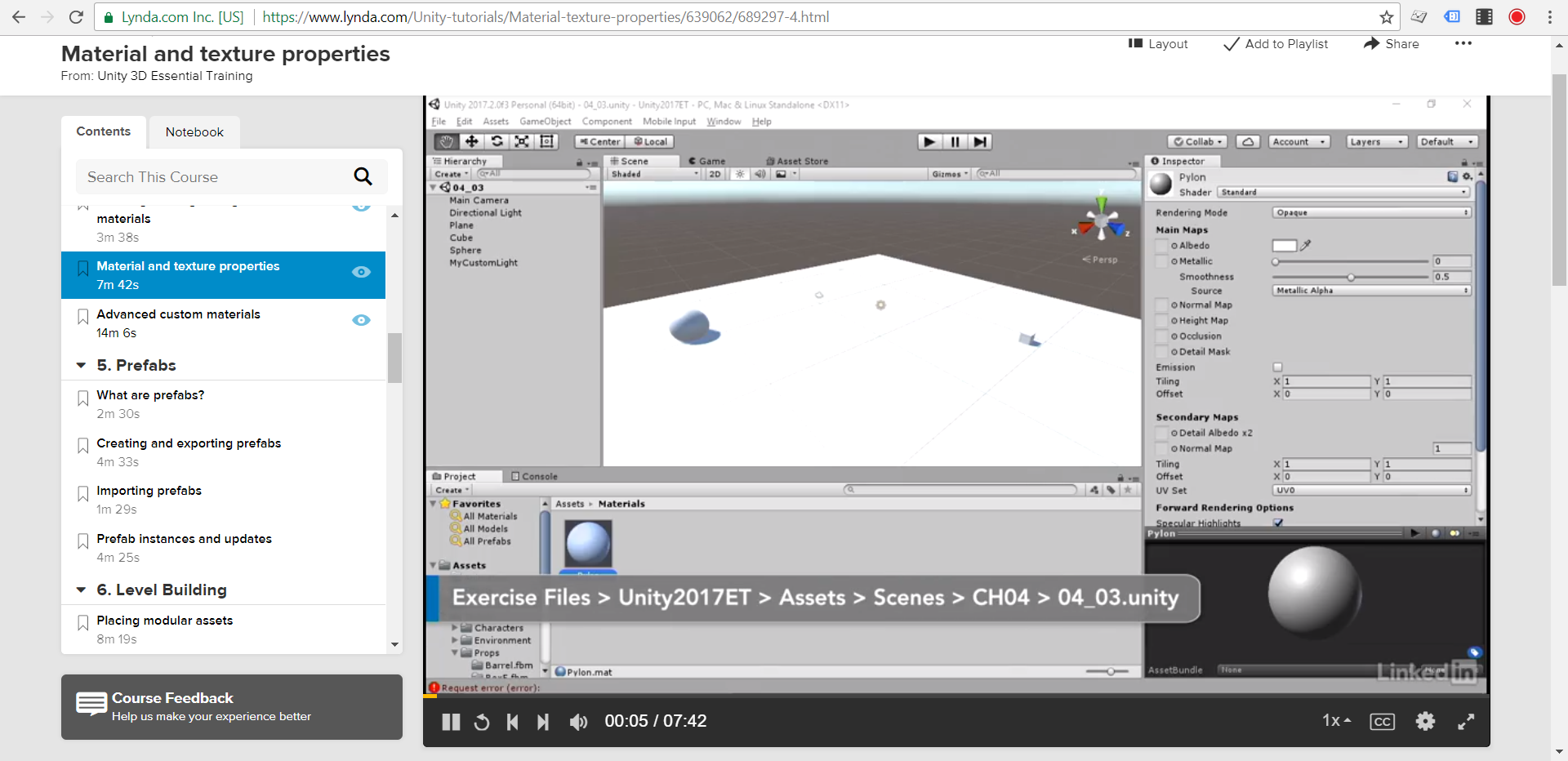

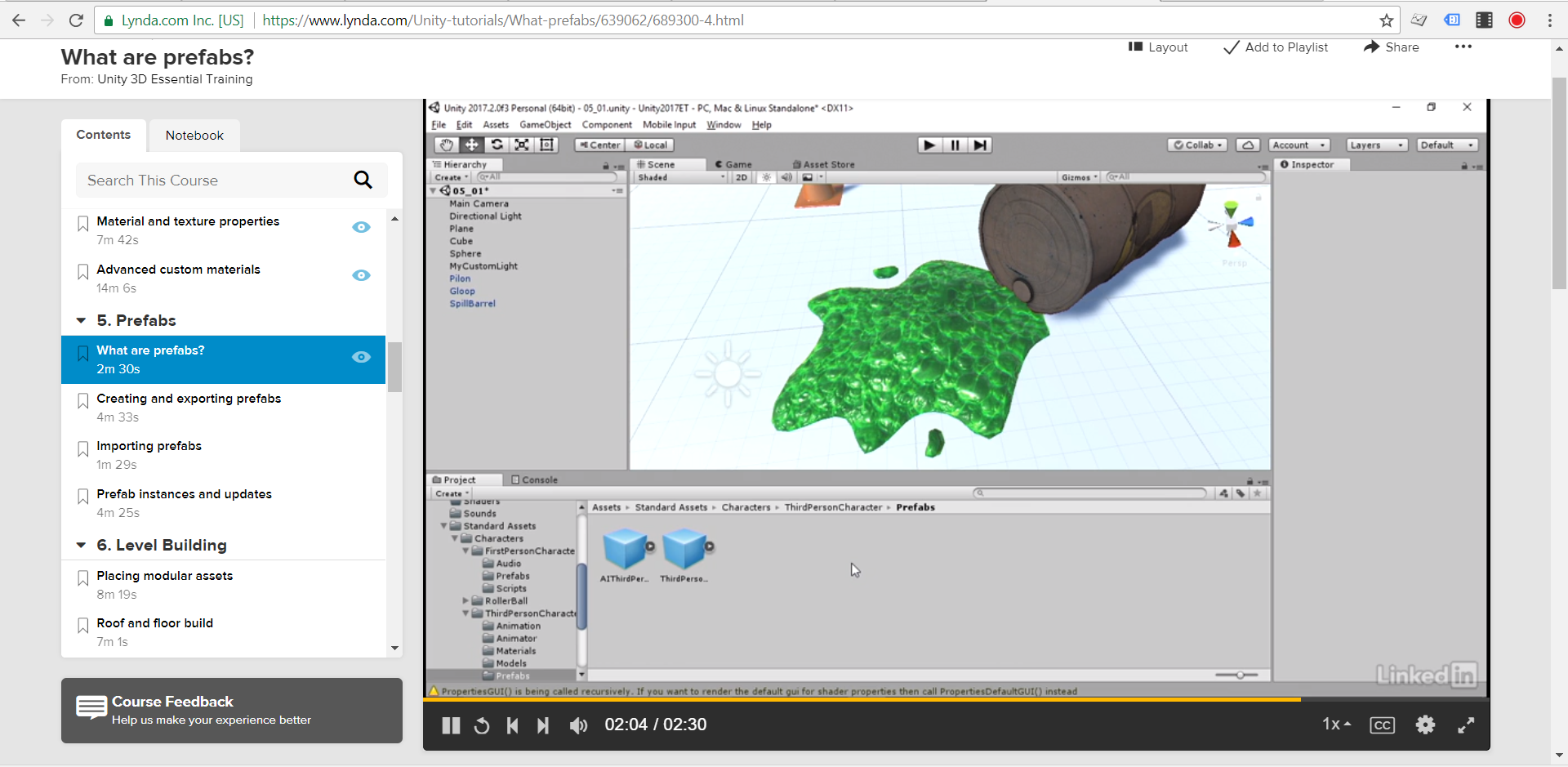

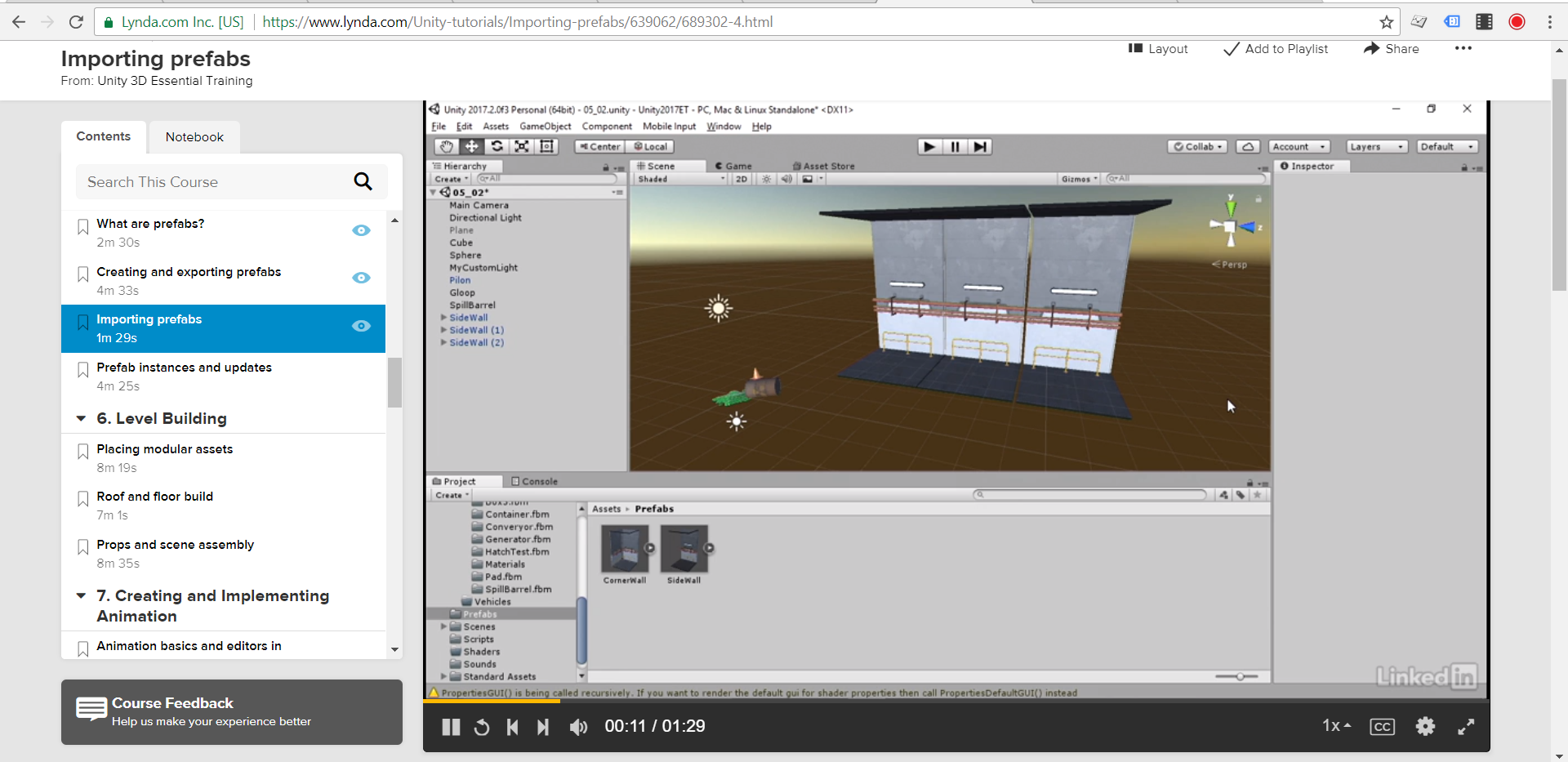

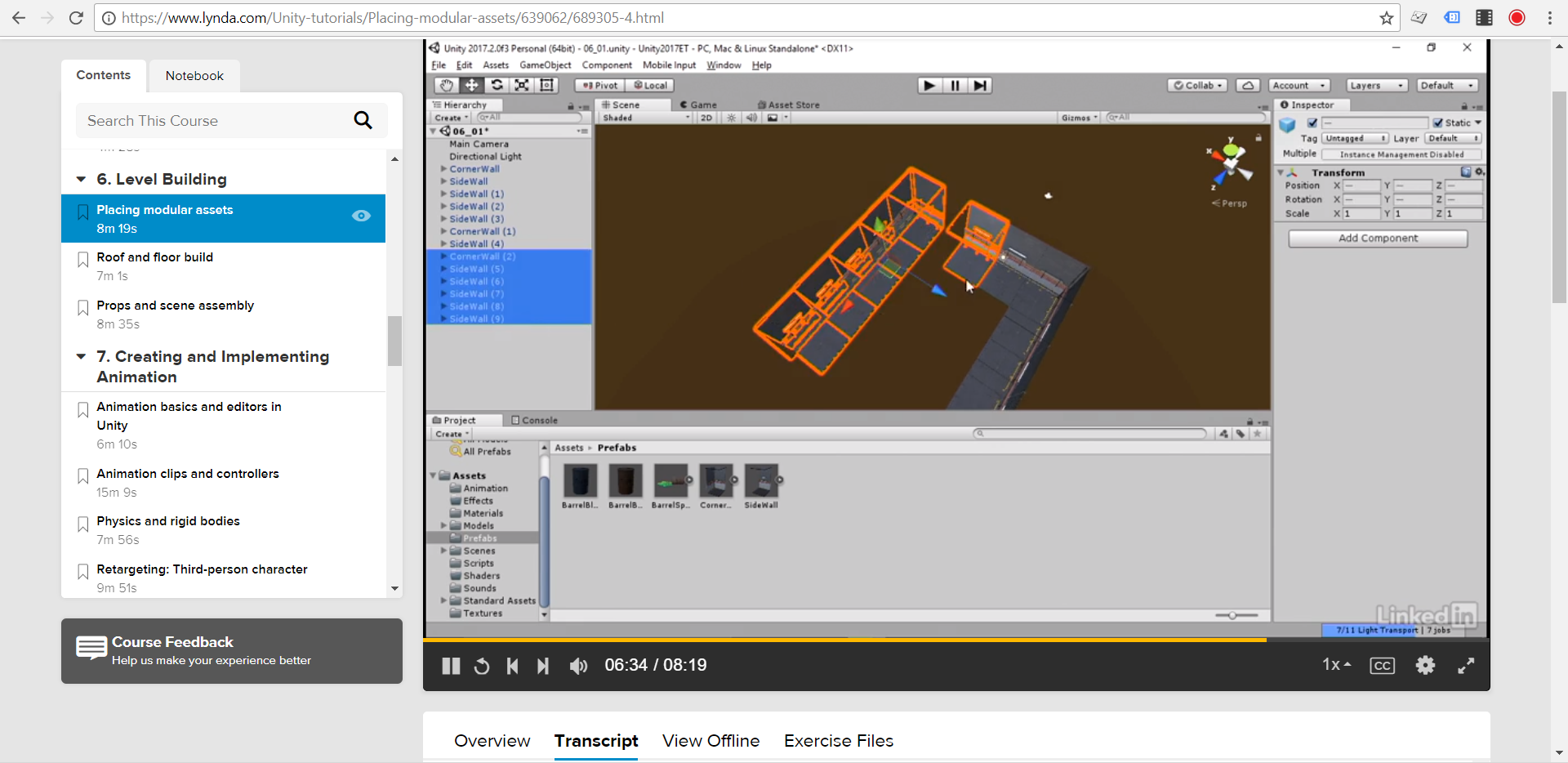

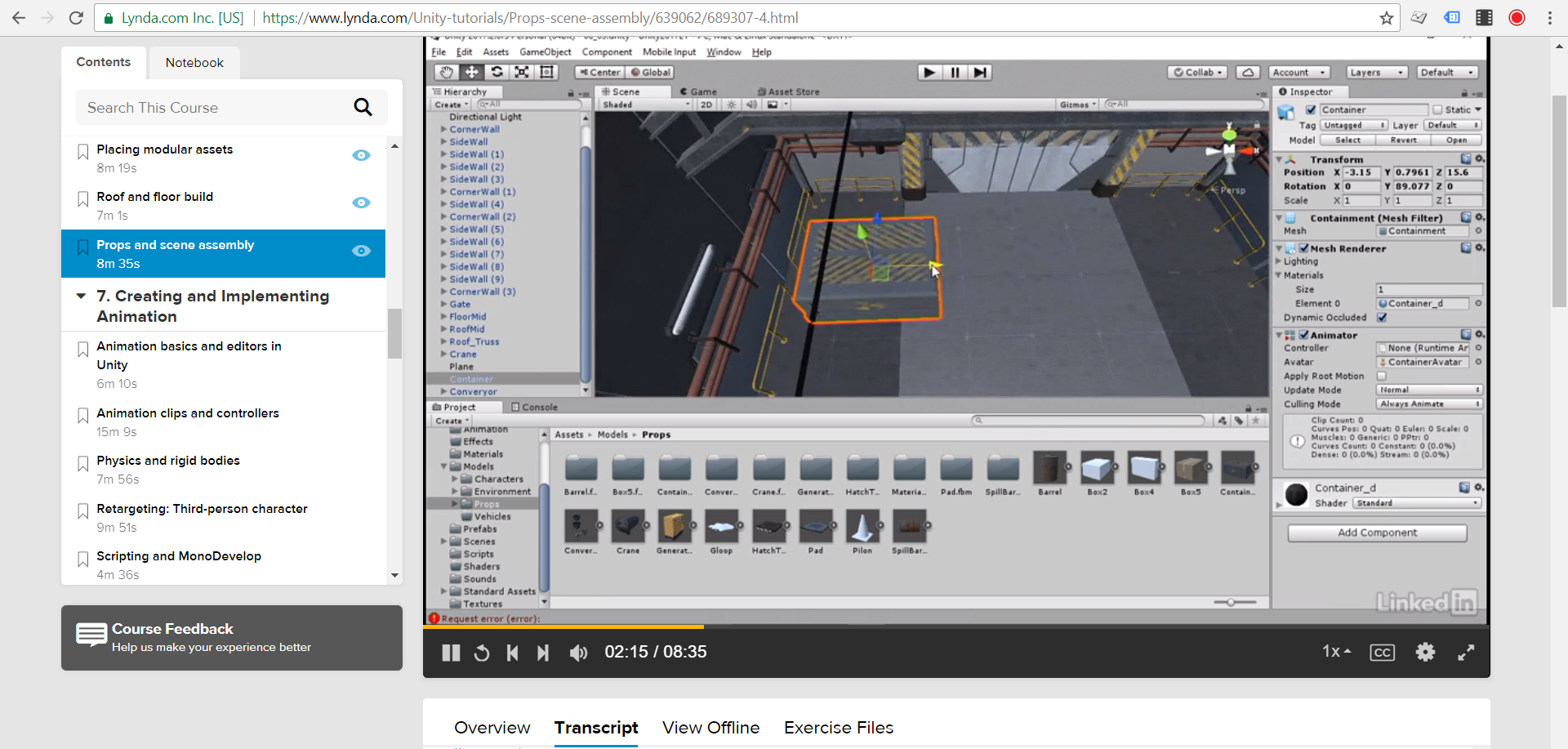

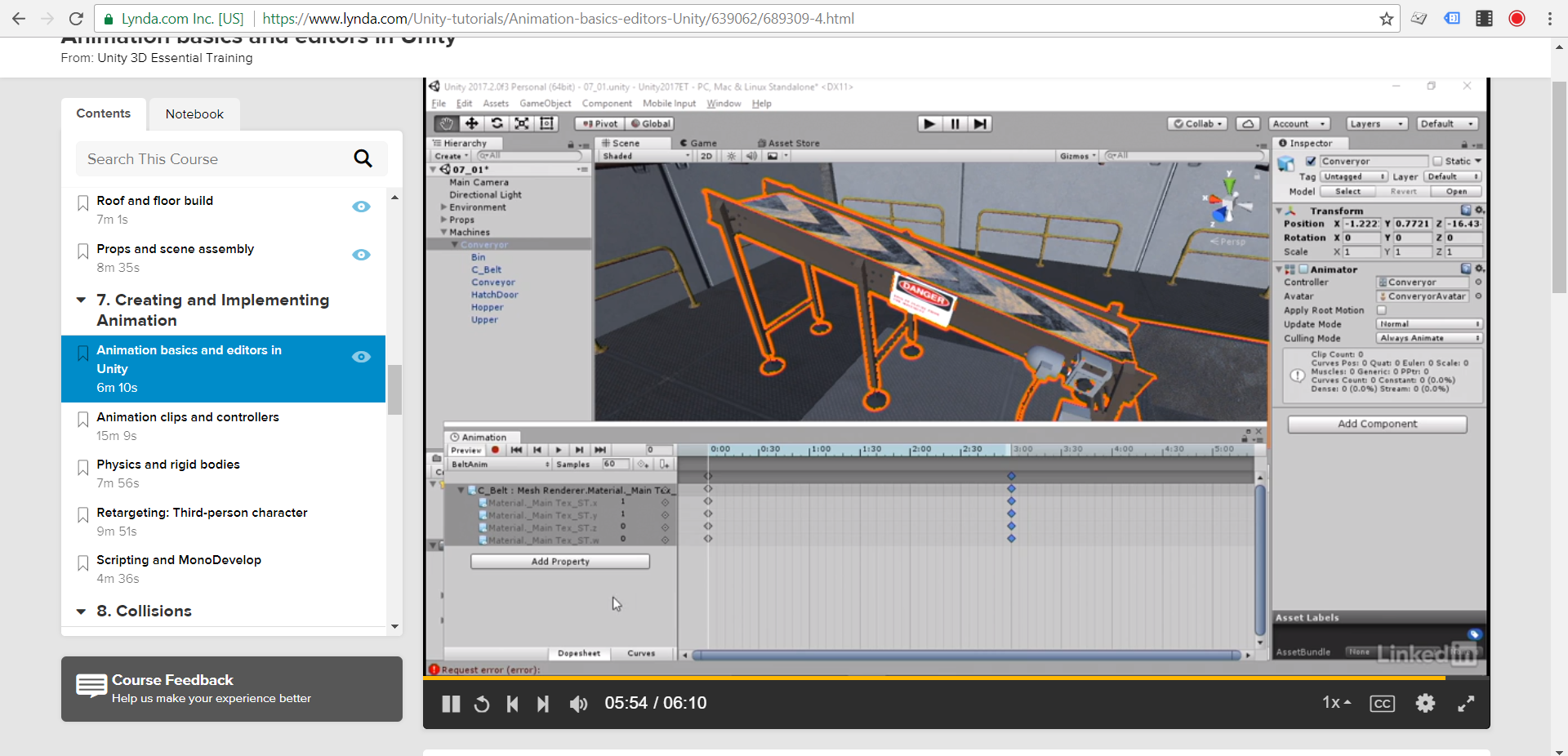

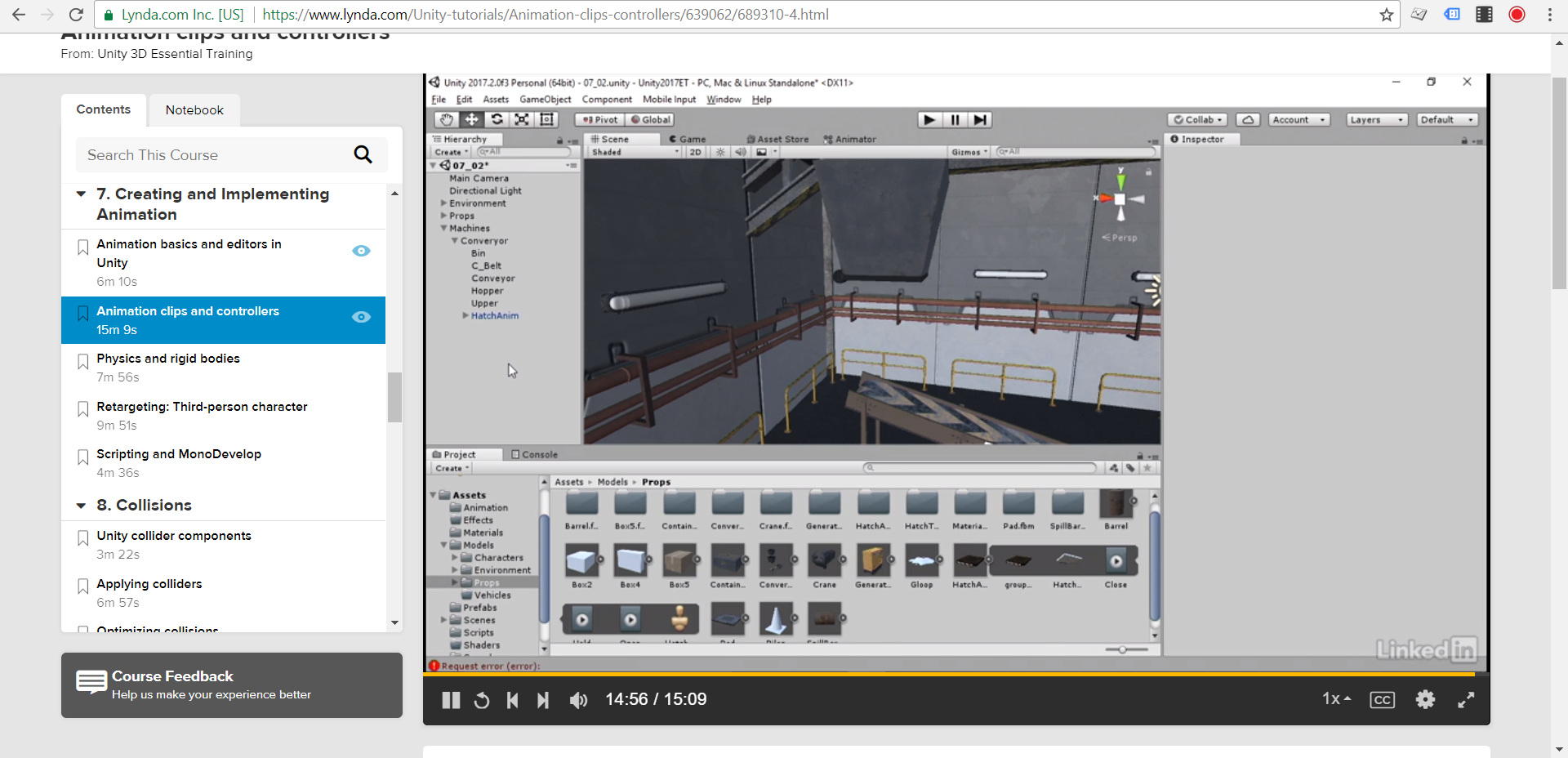

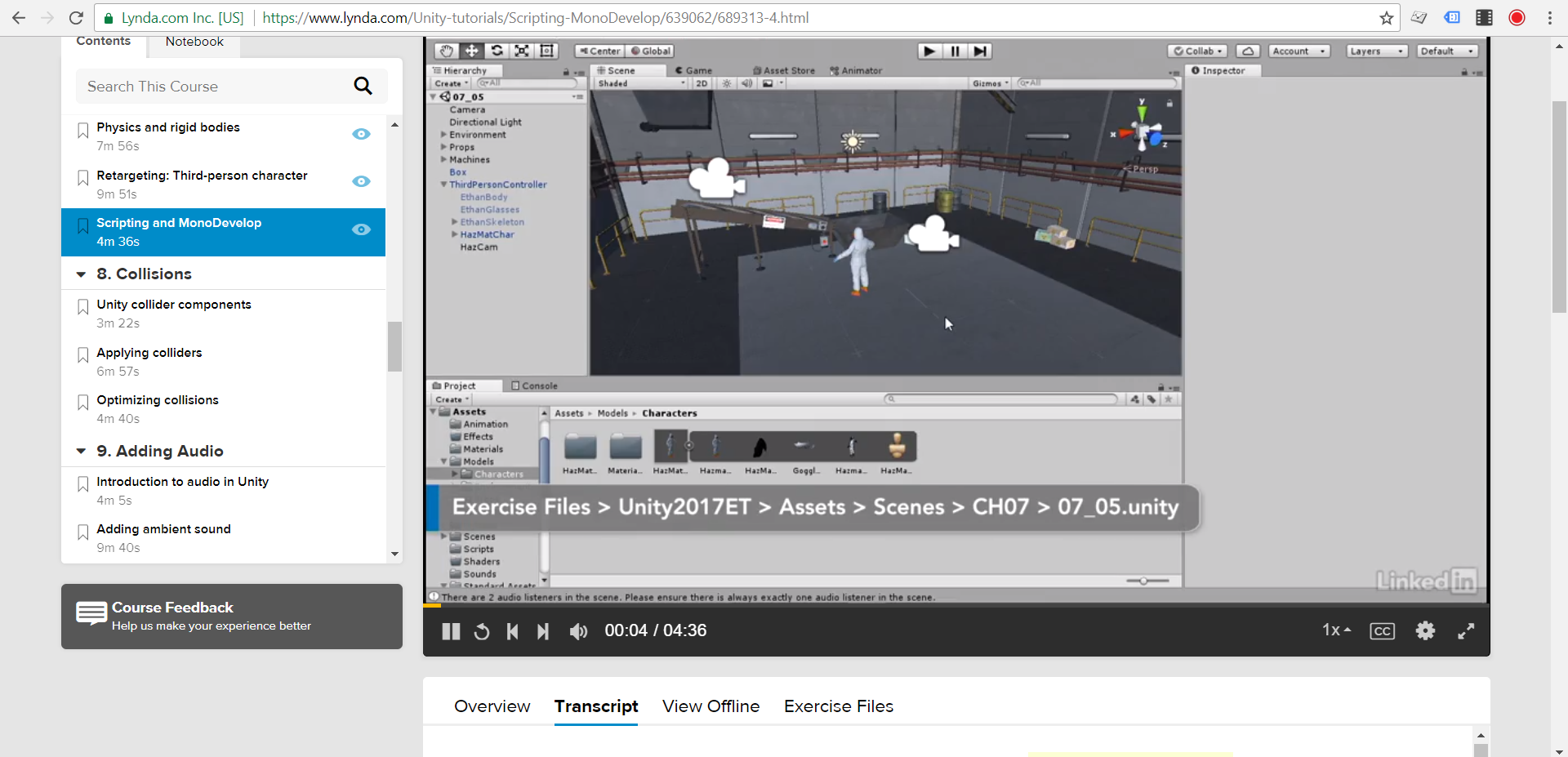

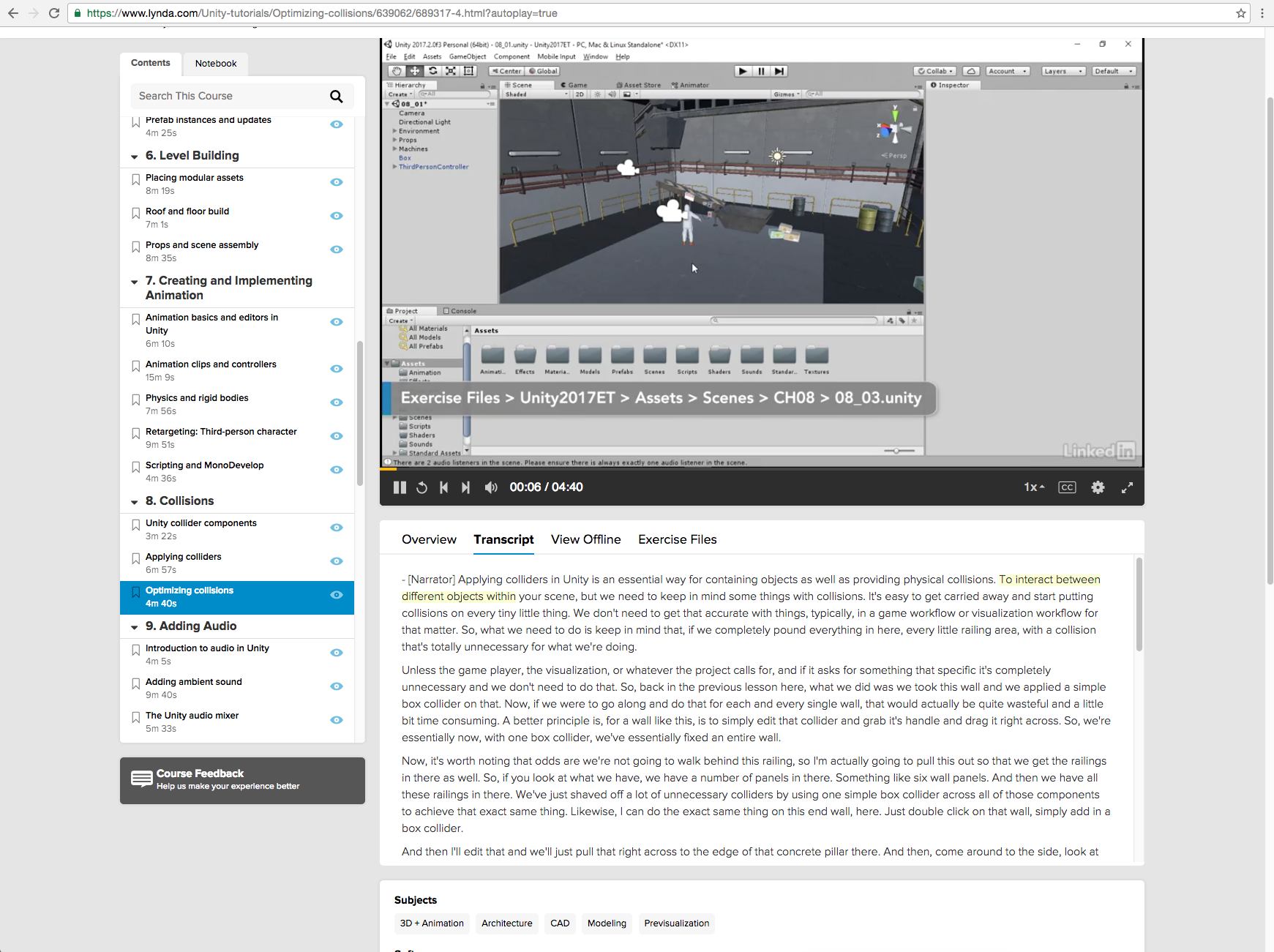

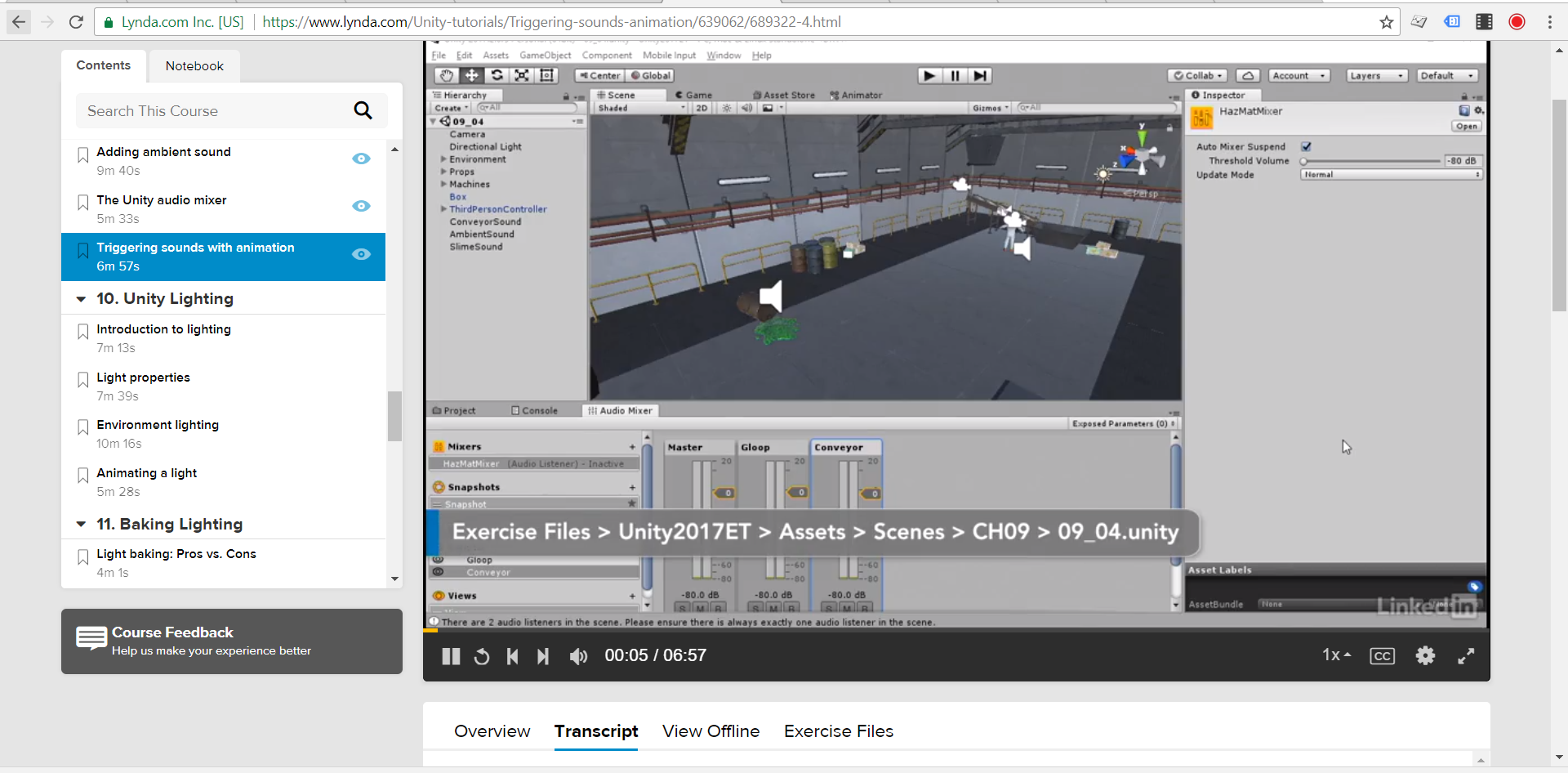

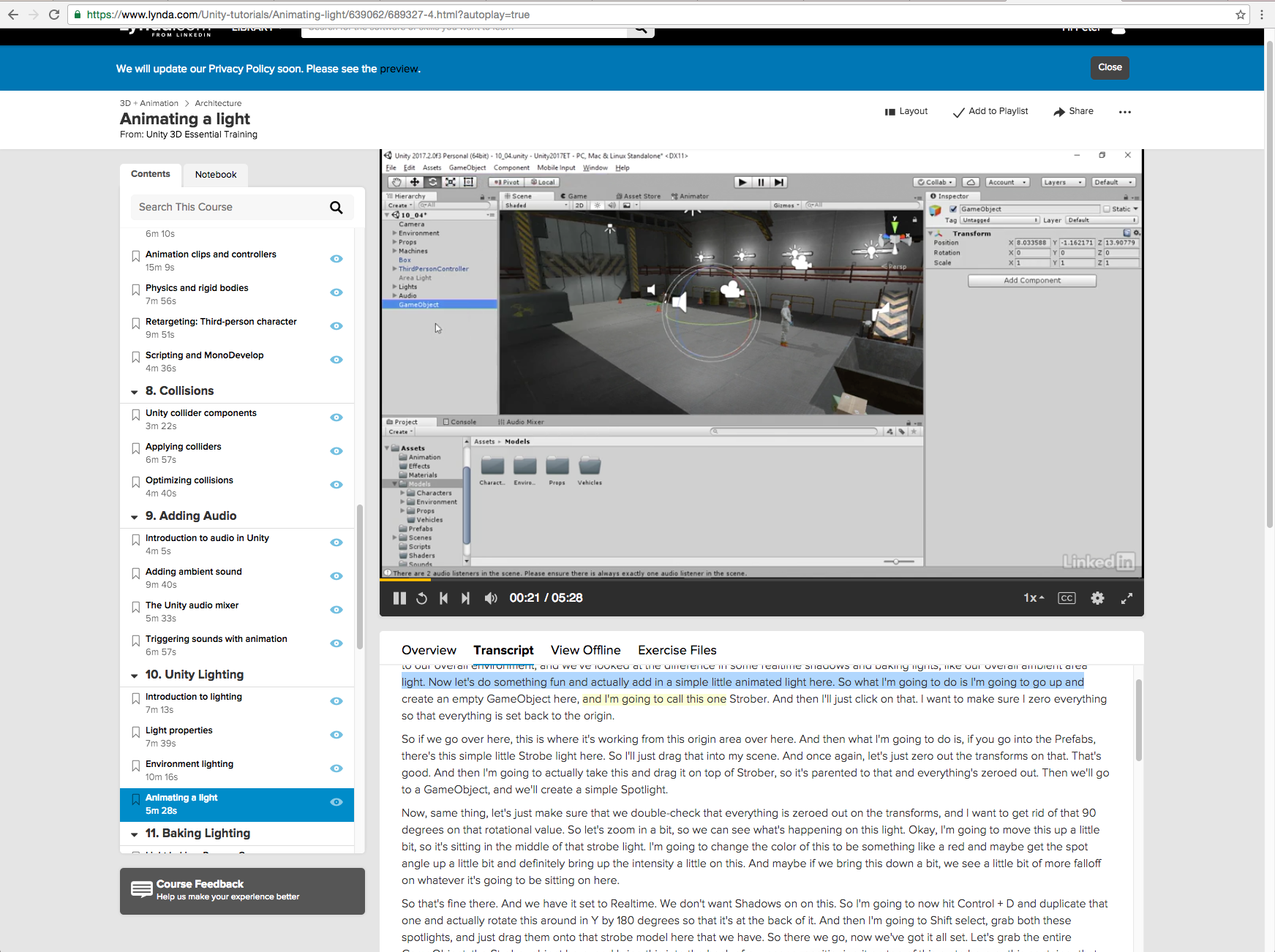

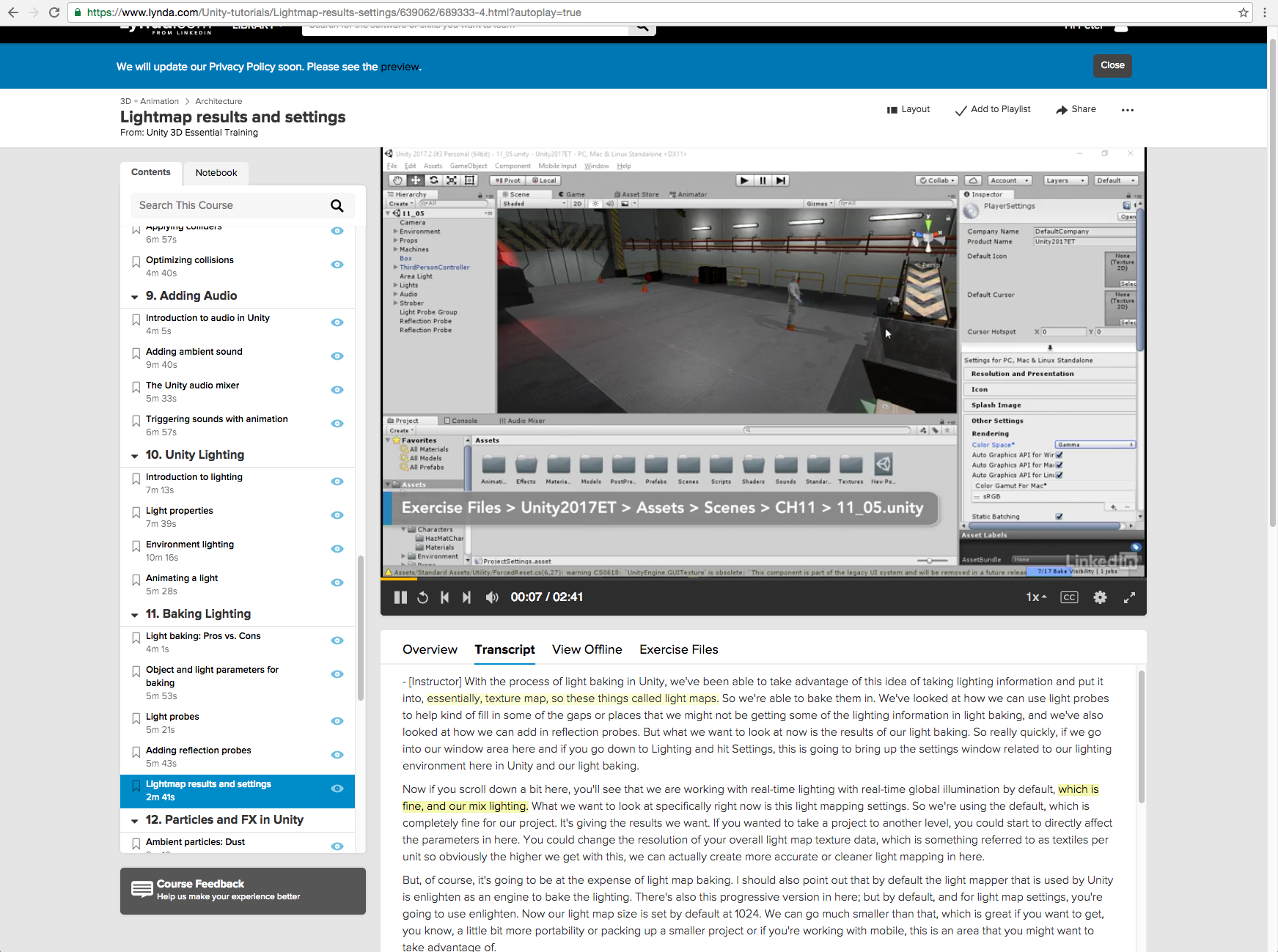

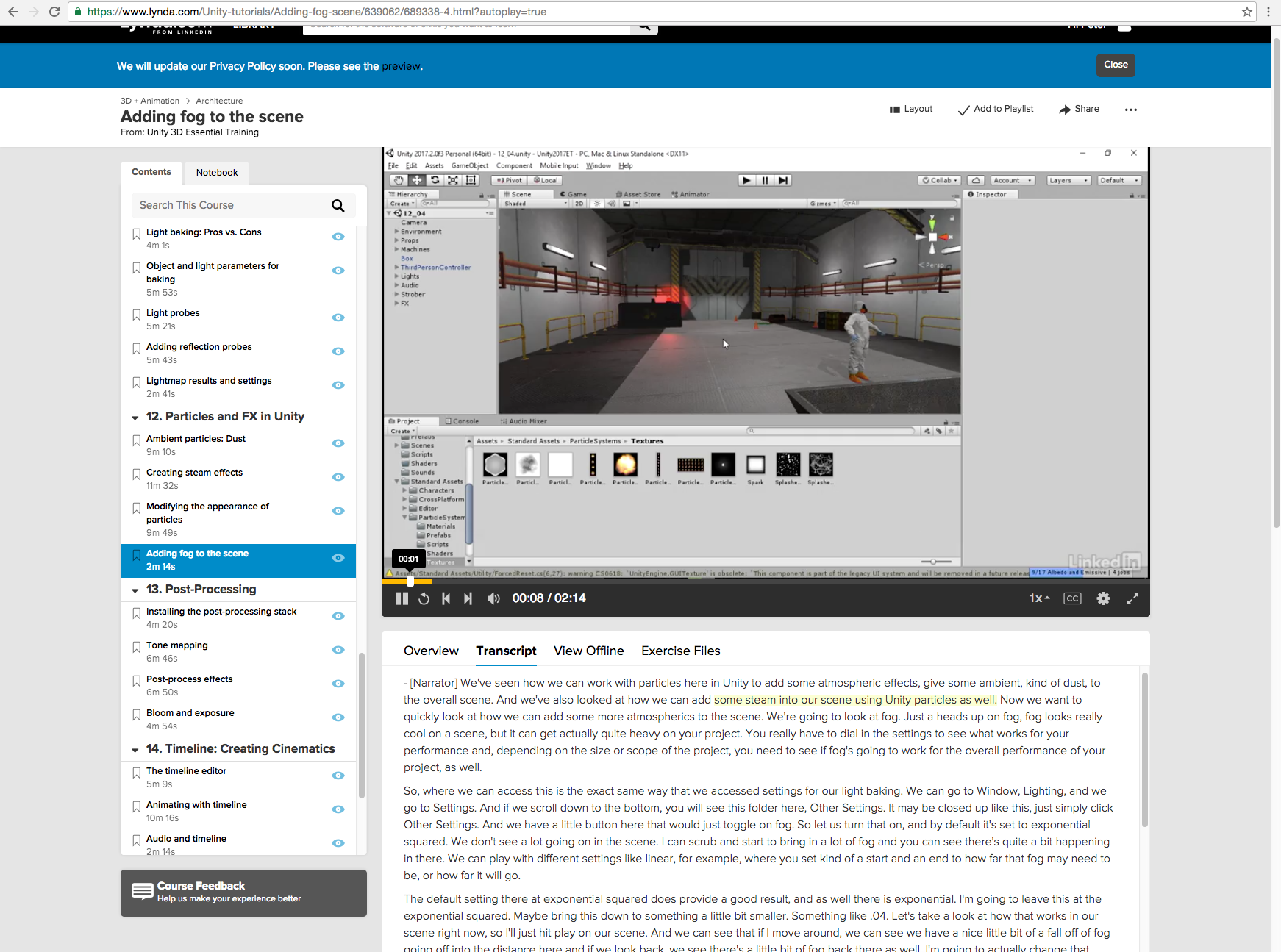

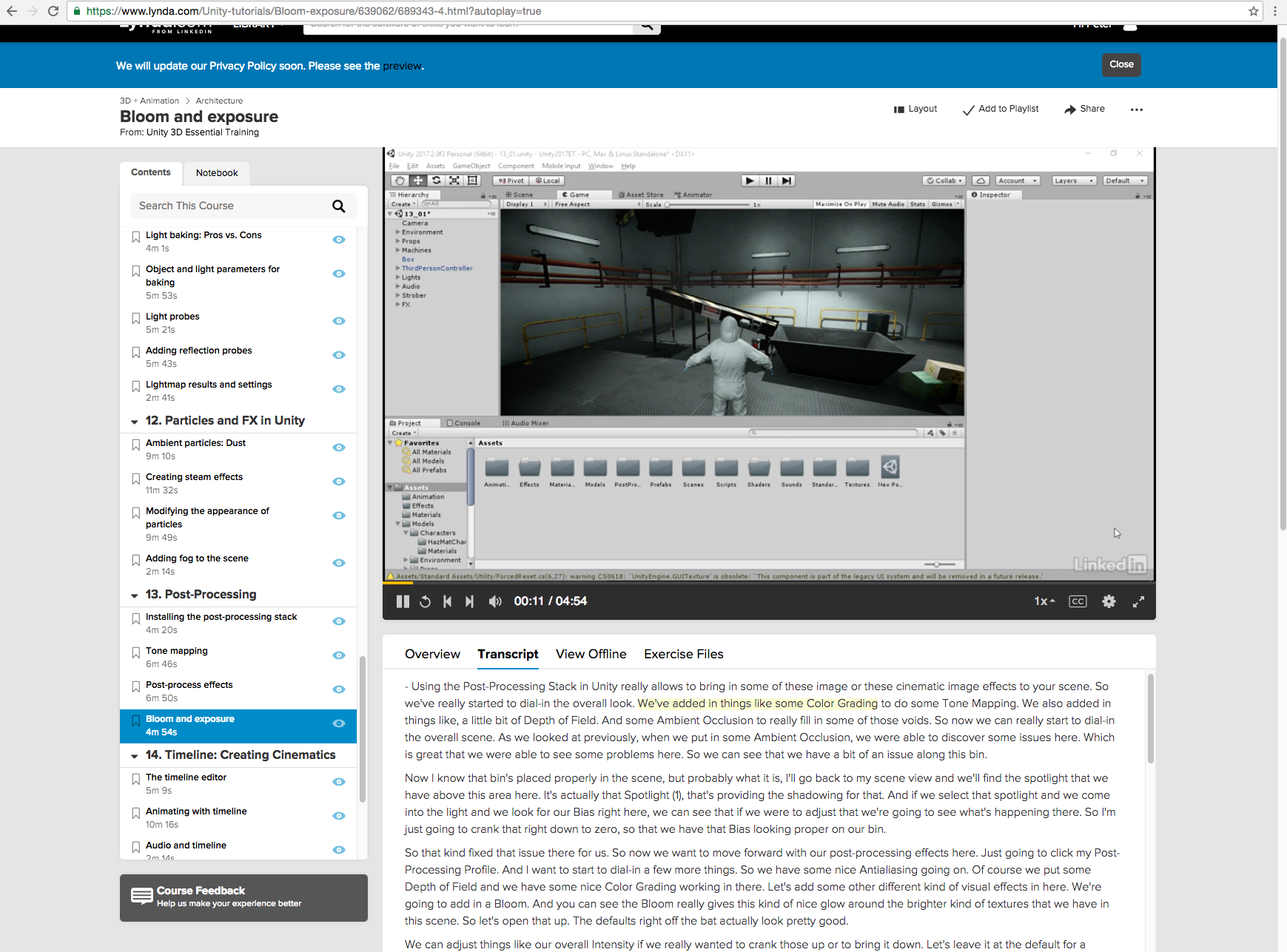

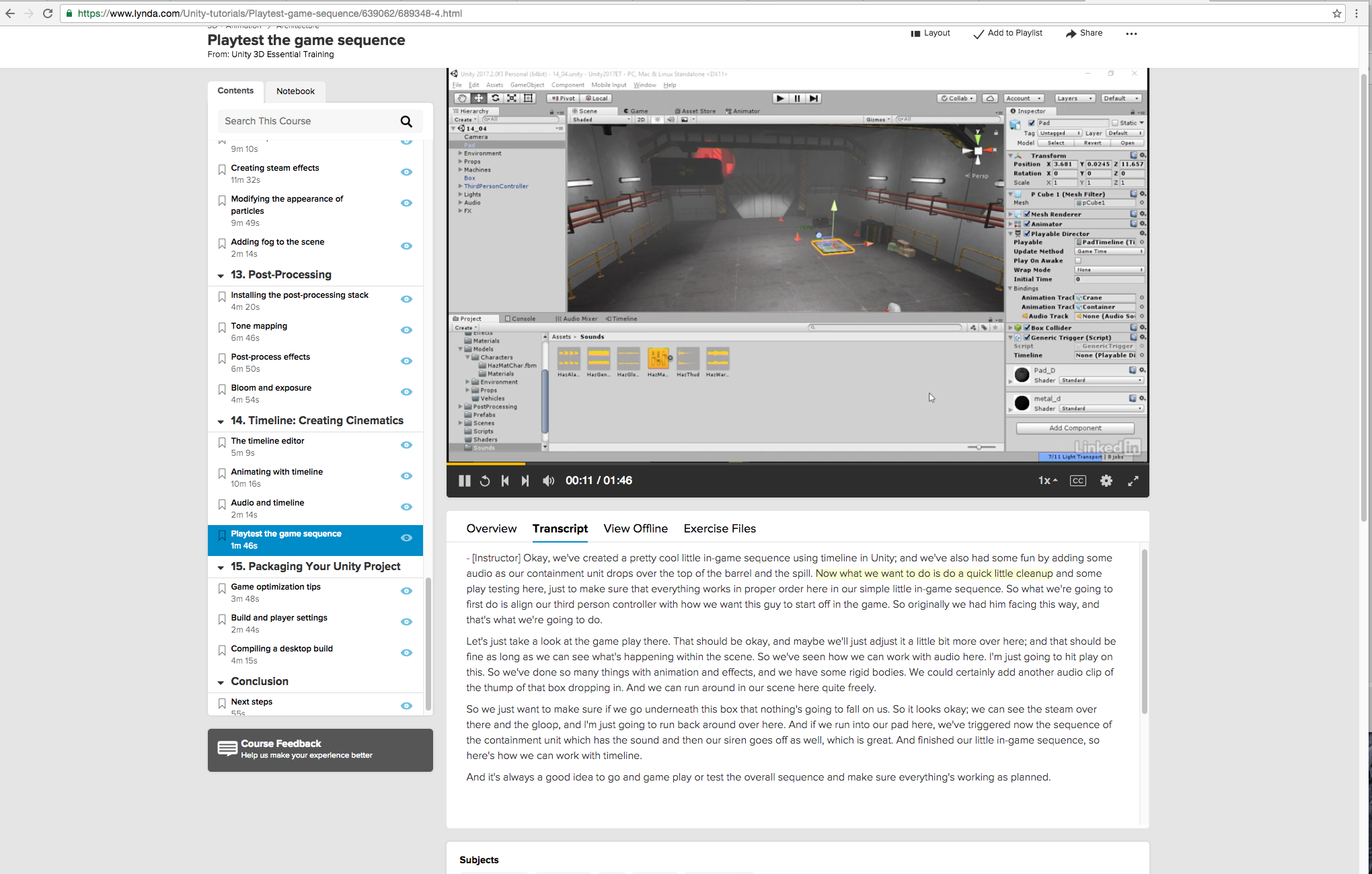

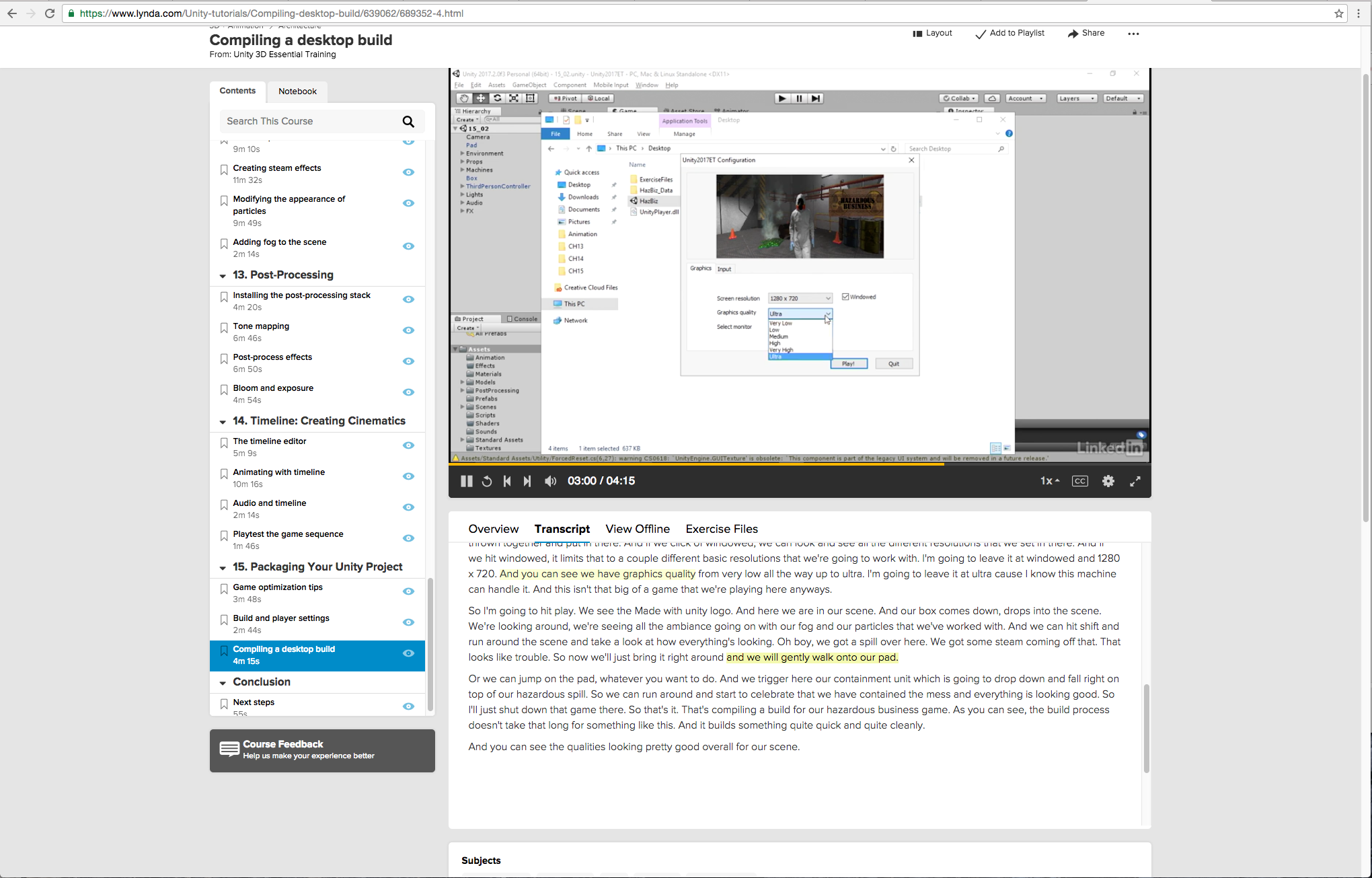

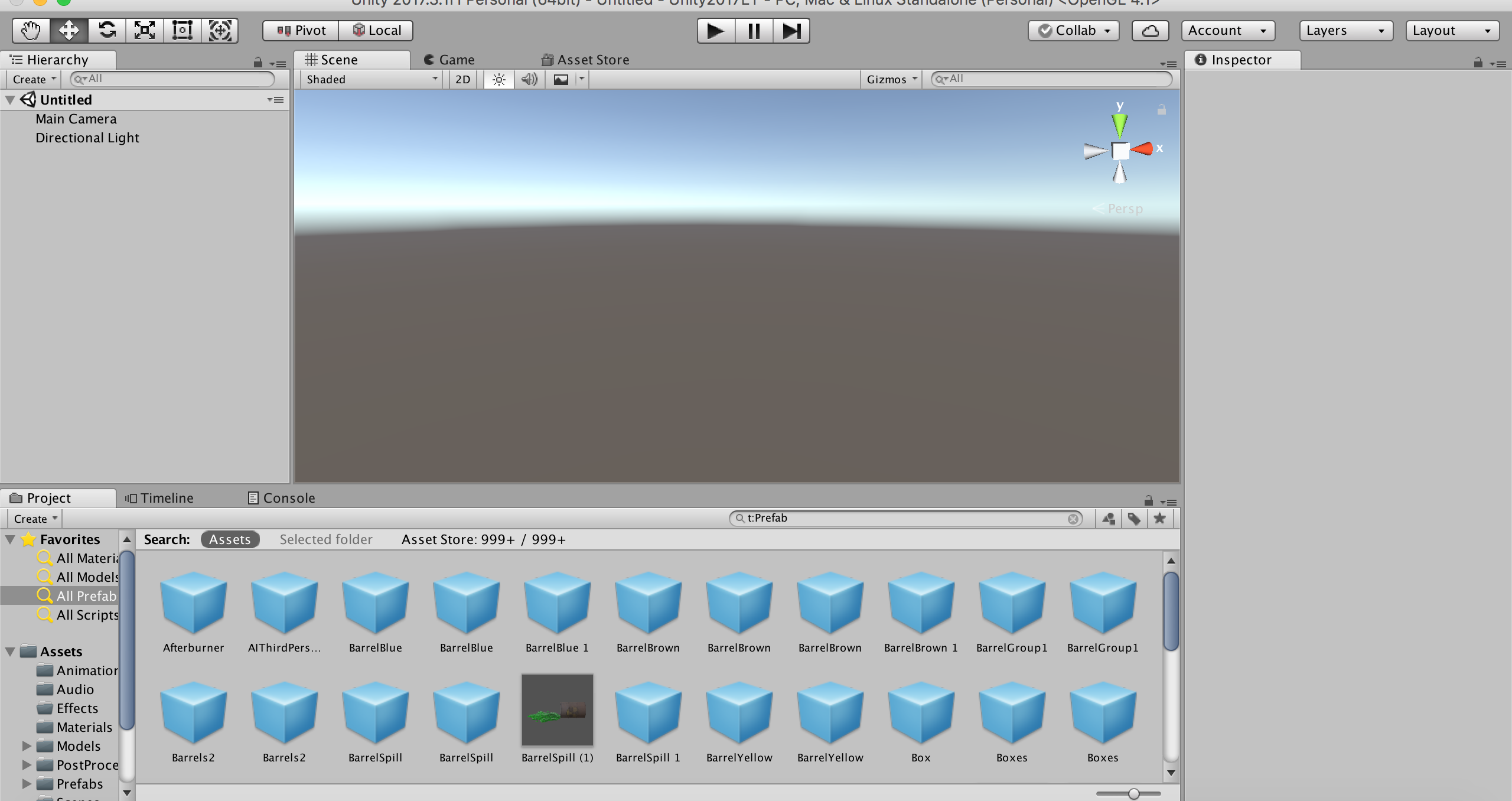

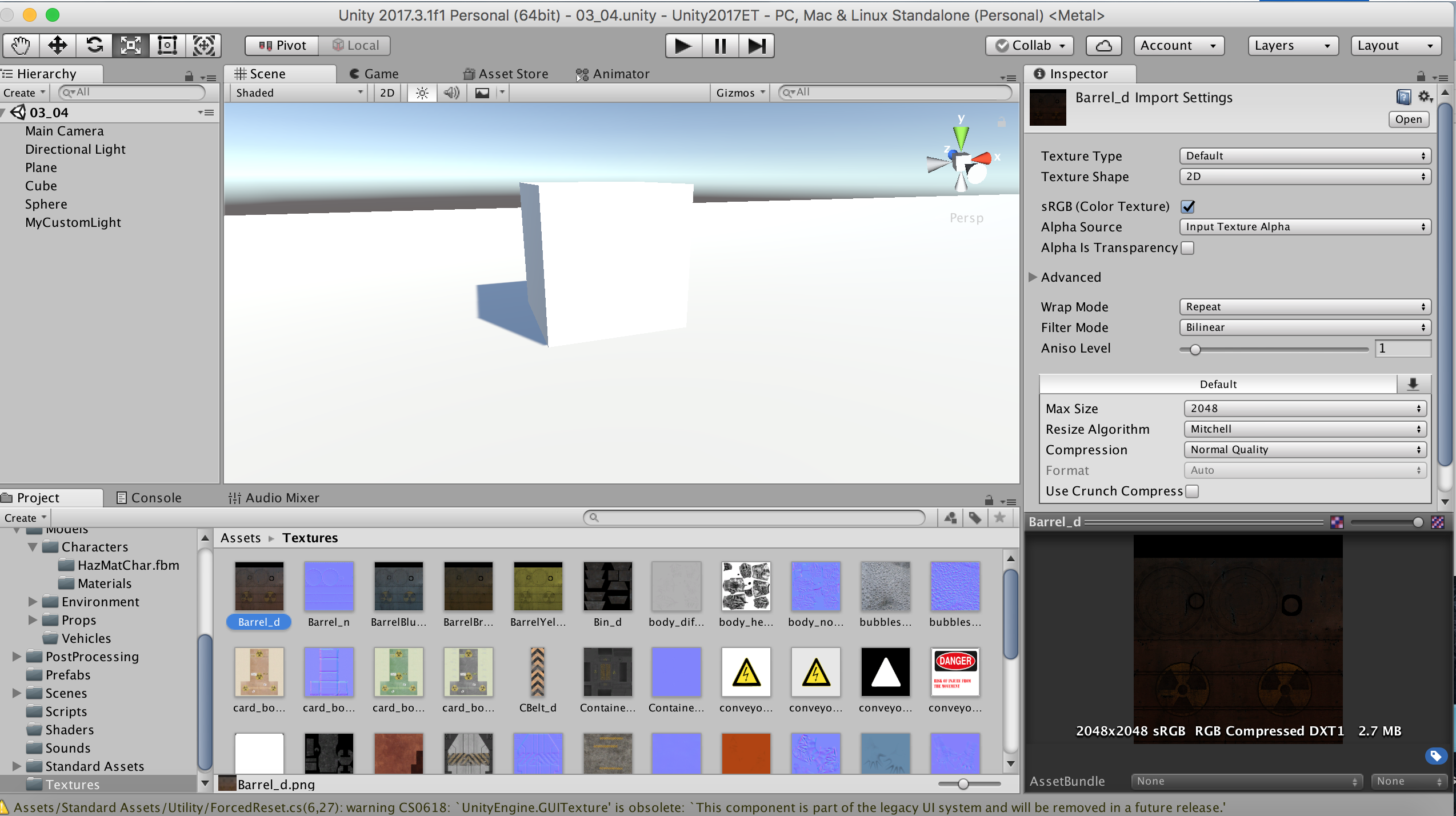

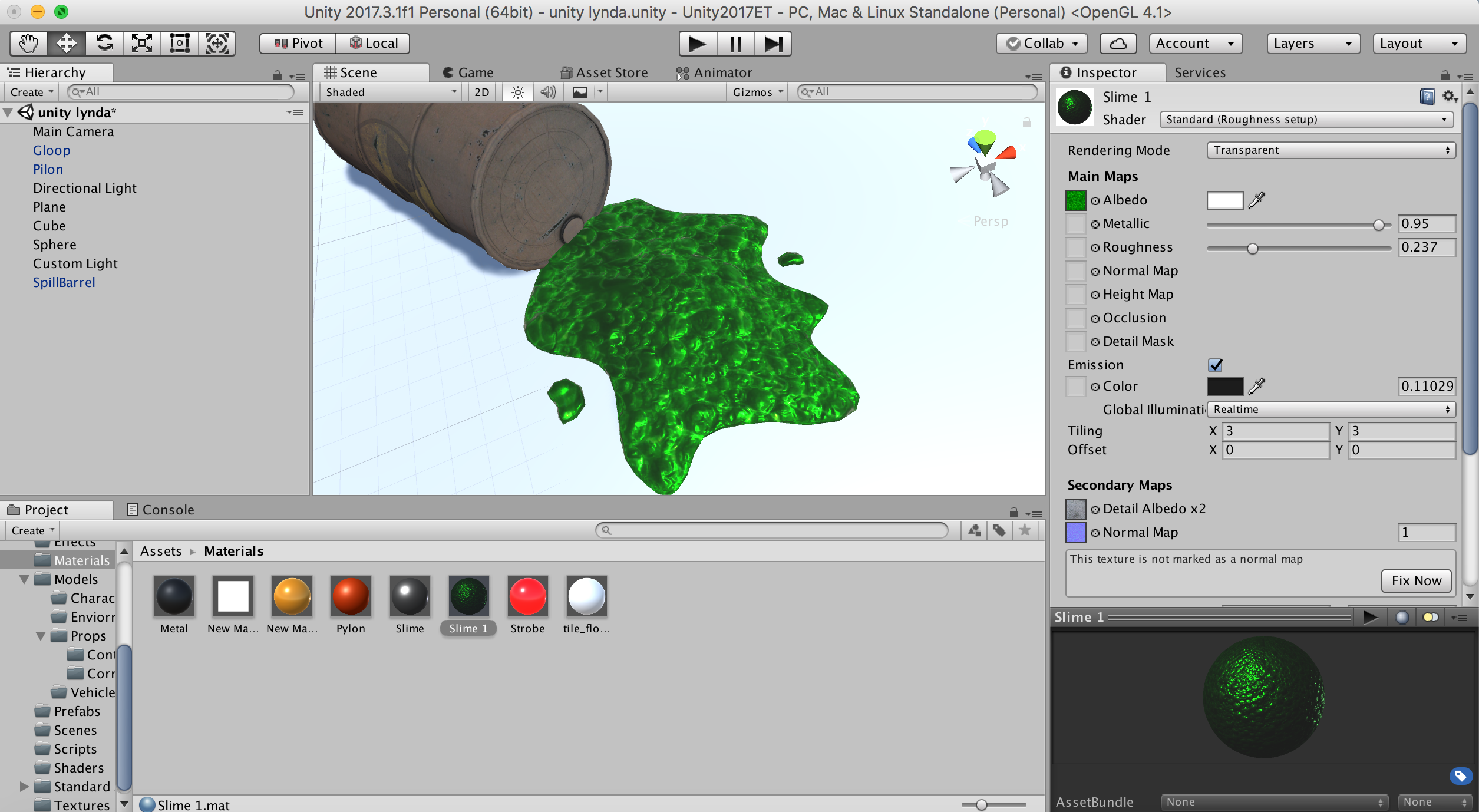

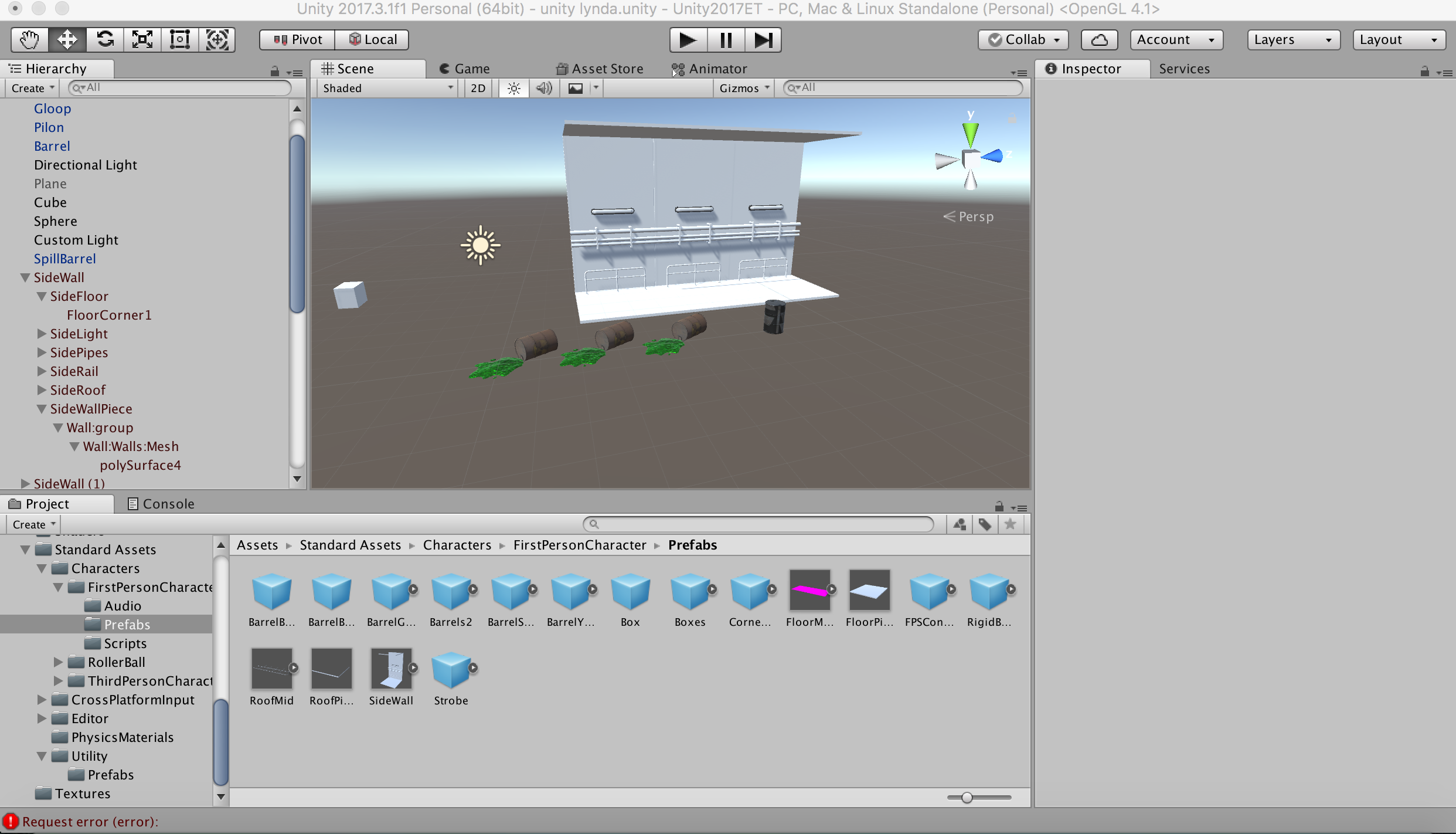

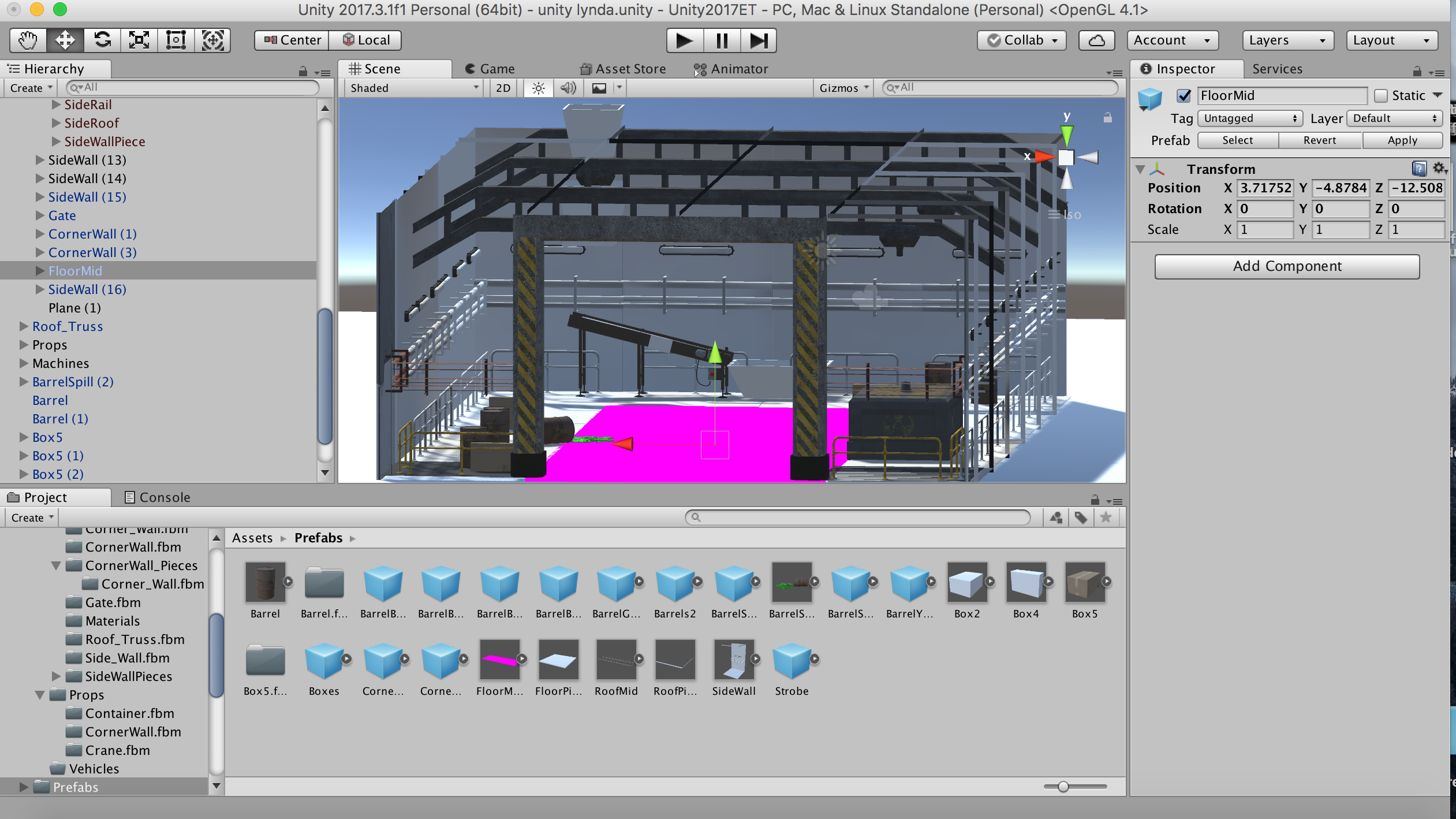

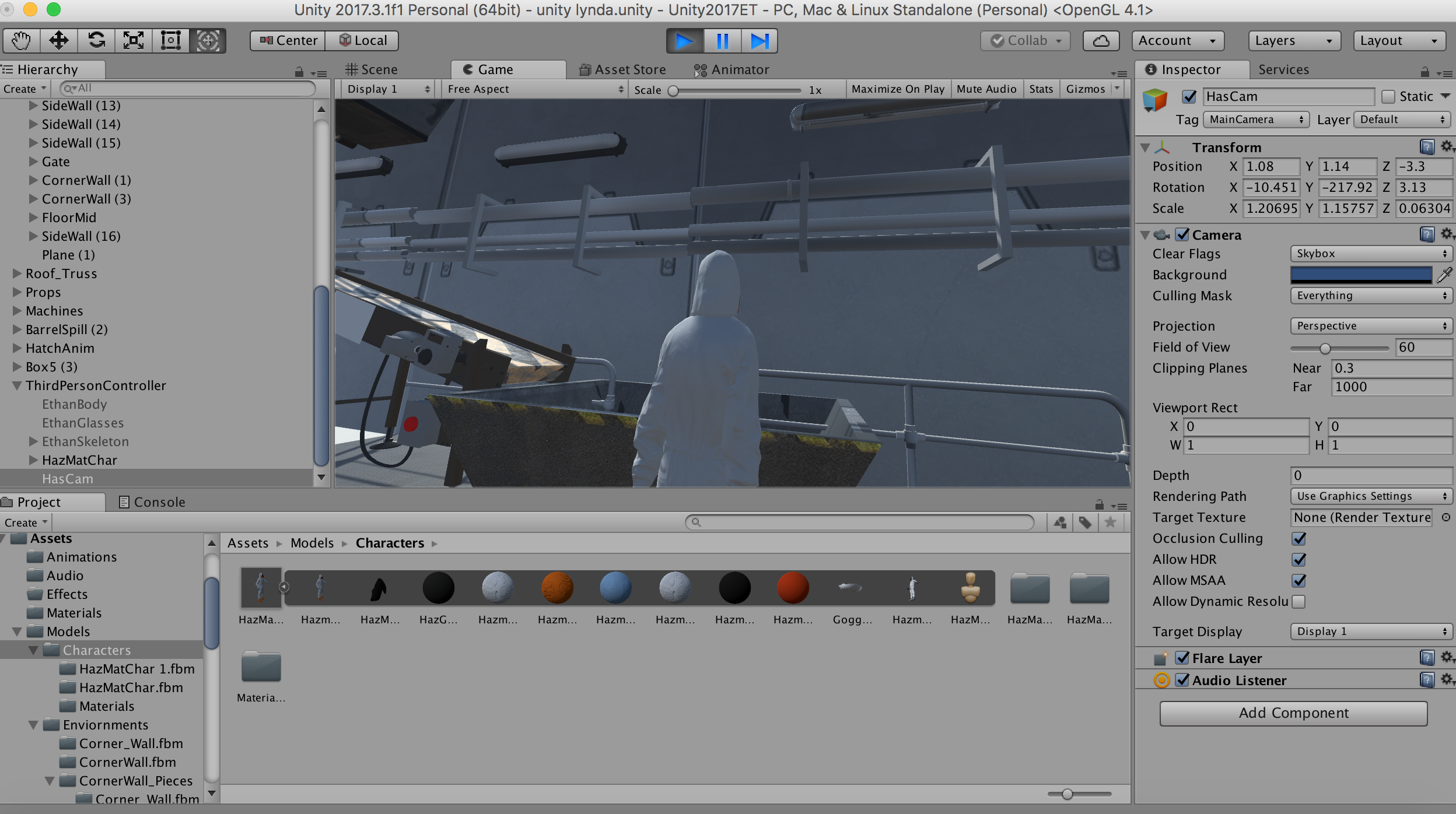

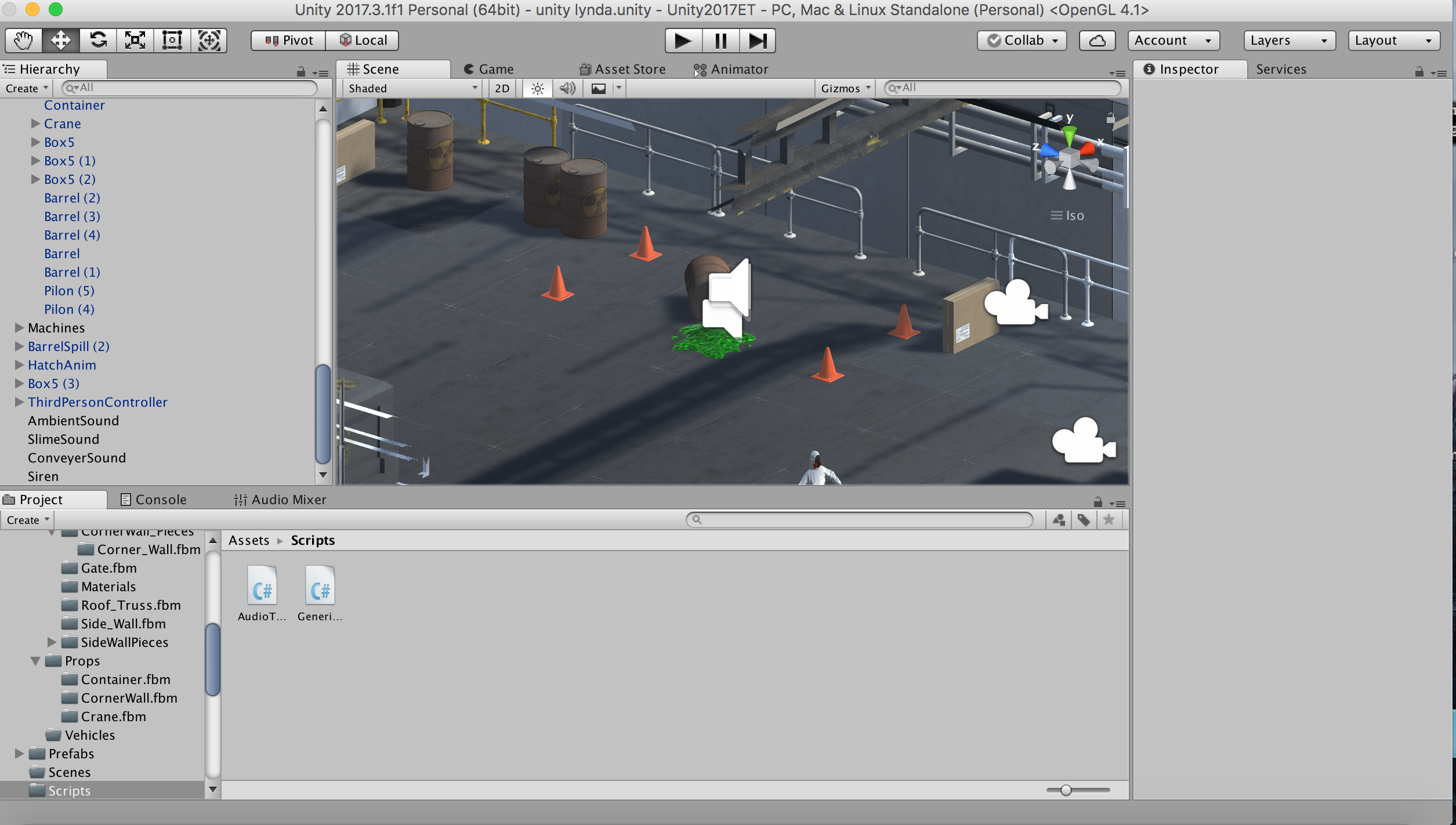

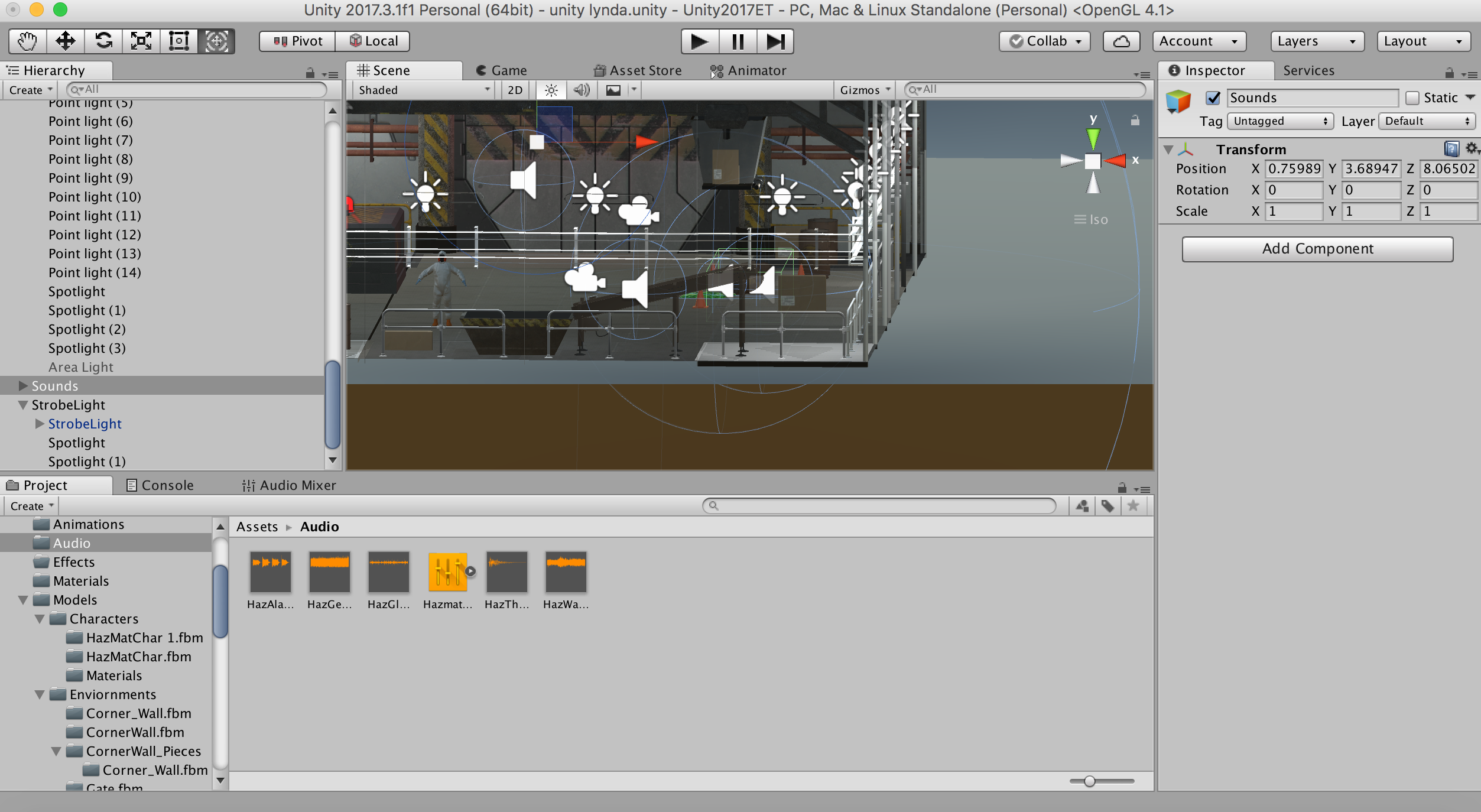

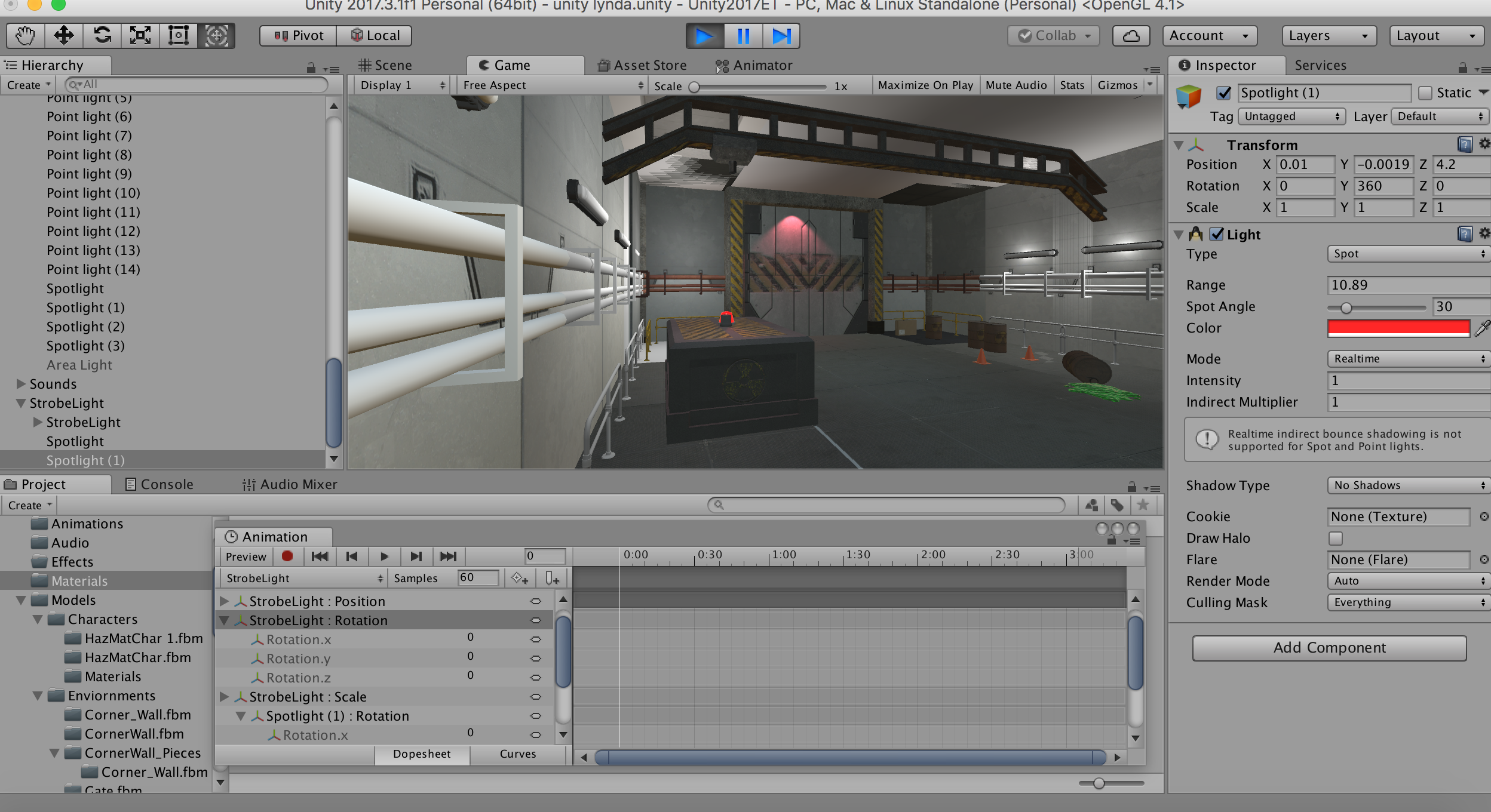

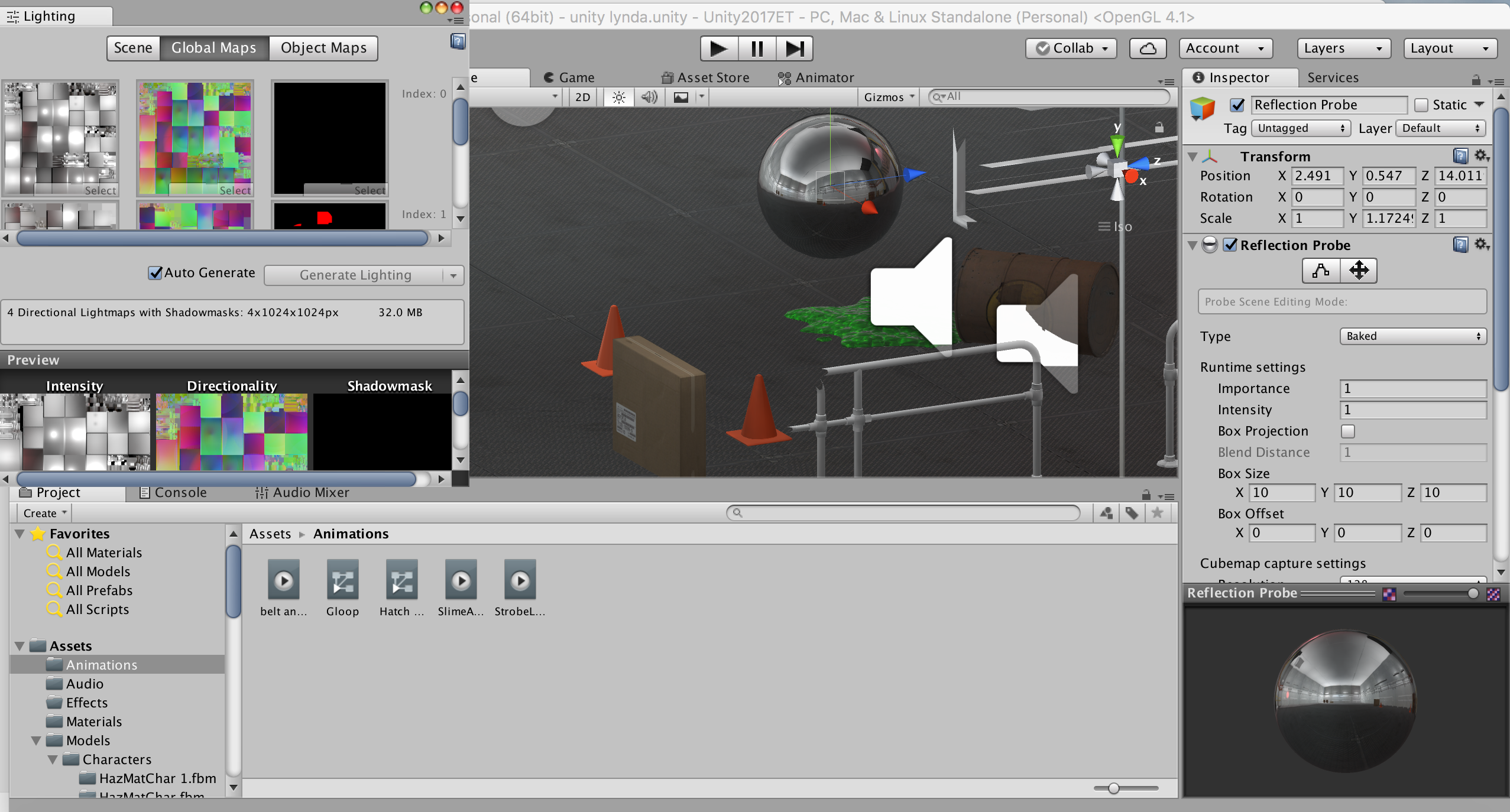

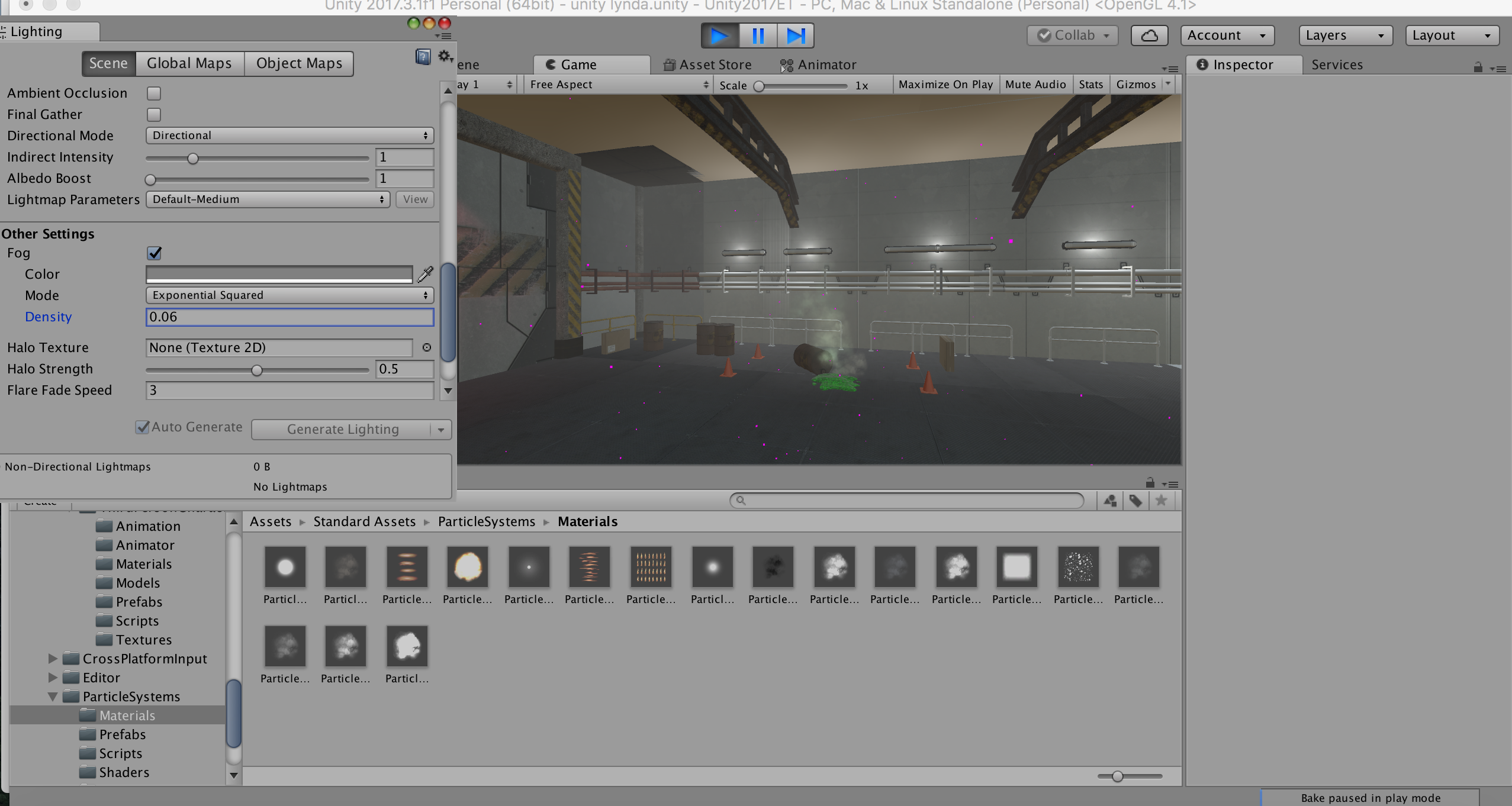

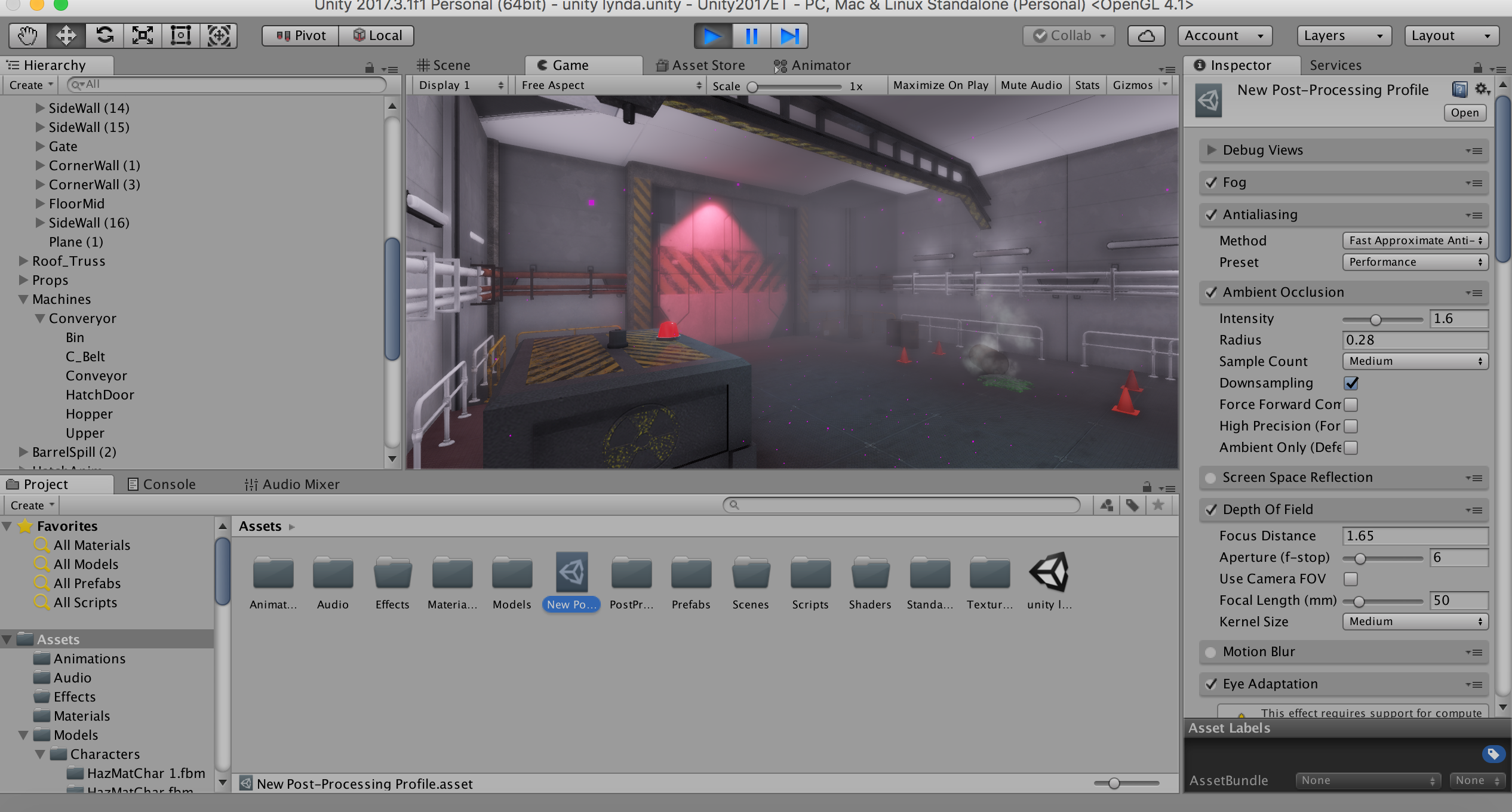

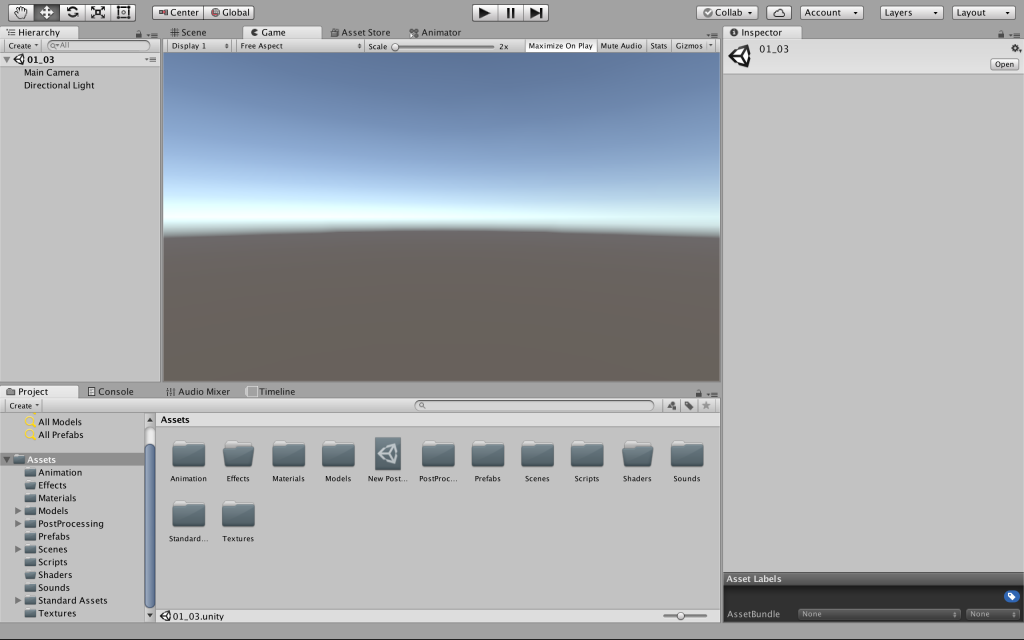

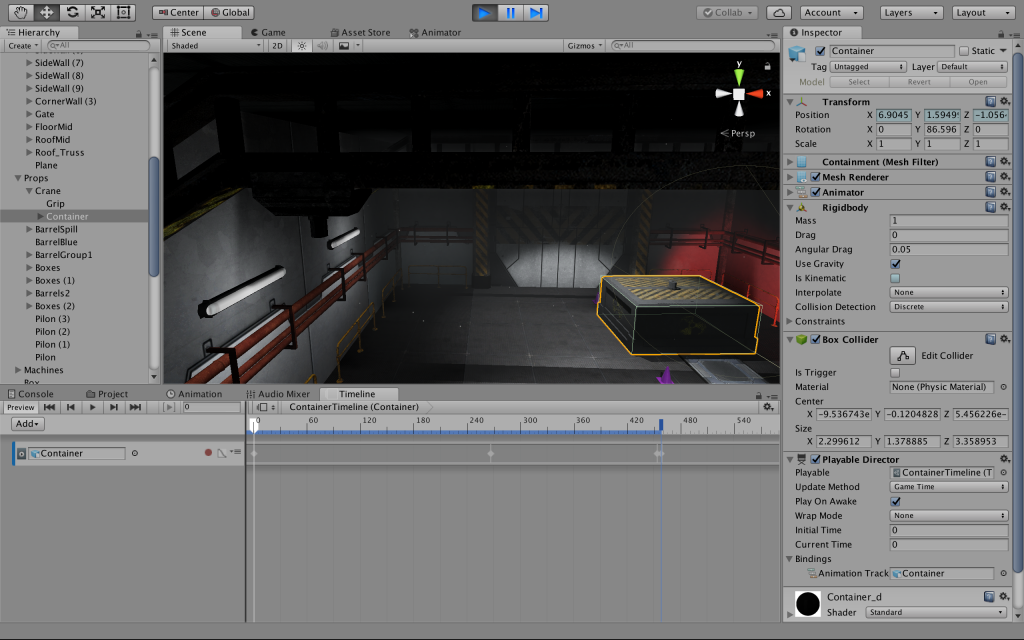

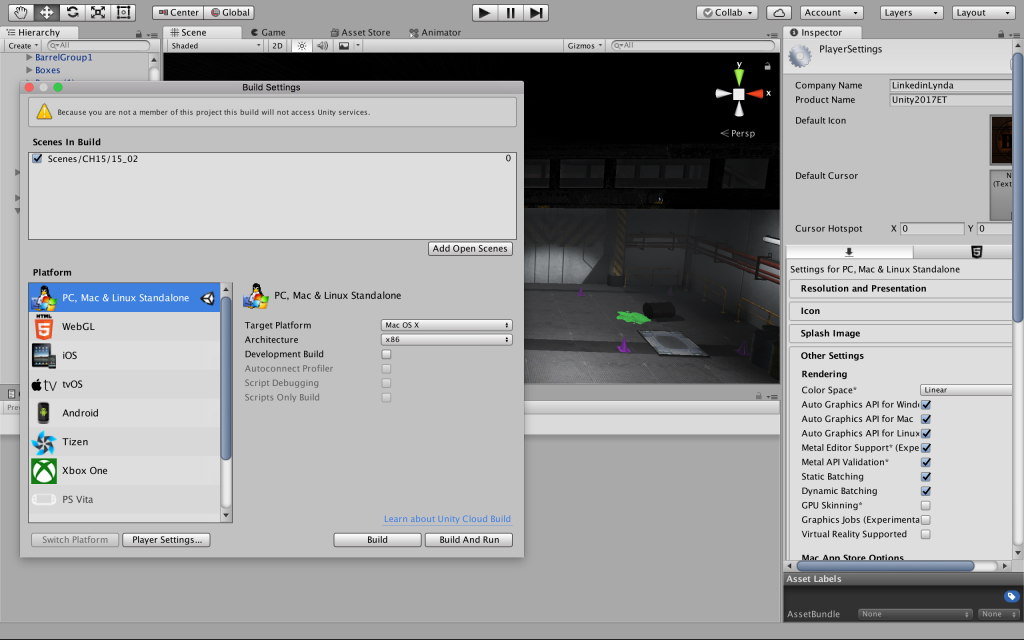

I watched all the Unity tutorials on Lynda. Here are screenshots of the Lynda project I worked on with the provided exercise files, 1 to 15.

1.

2.

3.

4.

5.

6.

7.

8.

9.

10.

11.

12.

13.

14.

15.

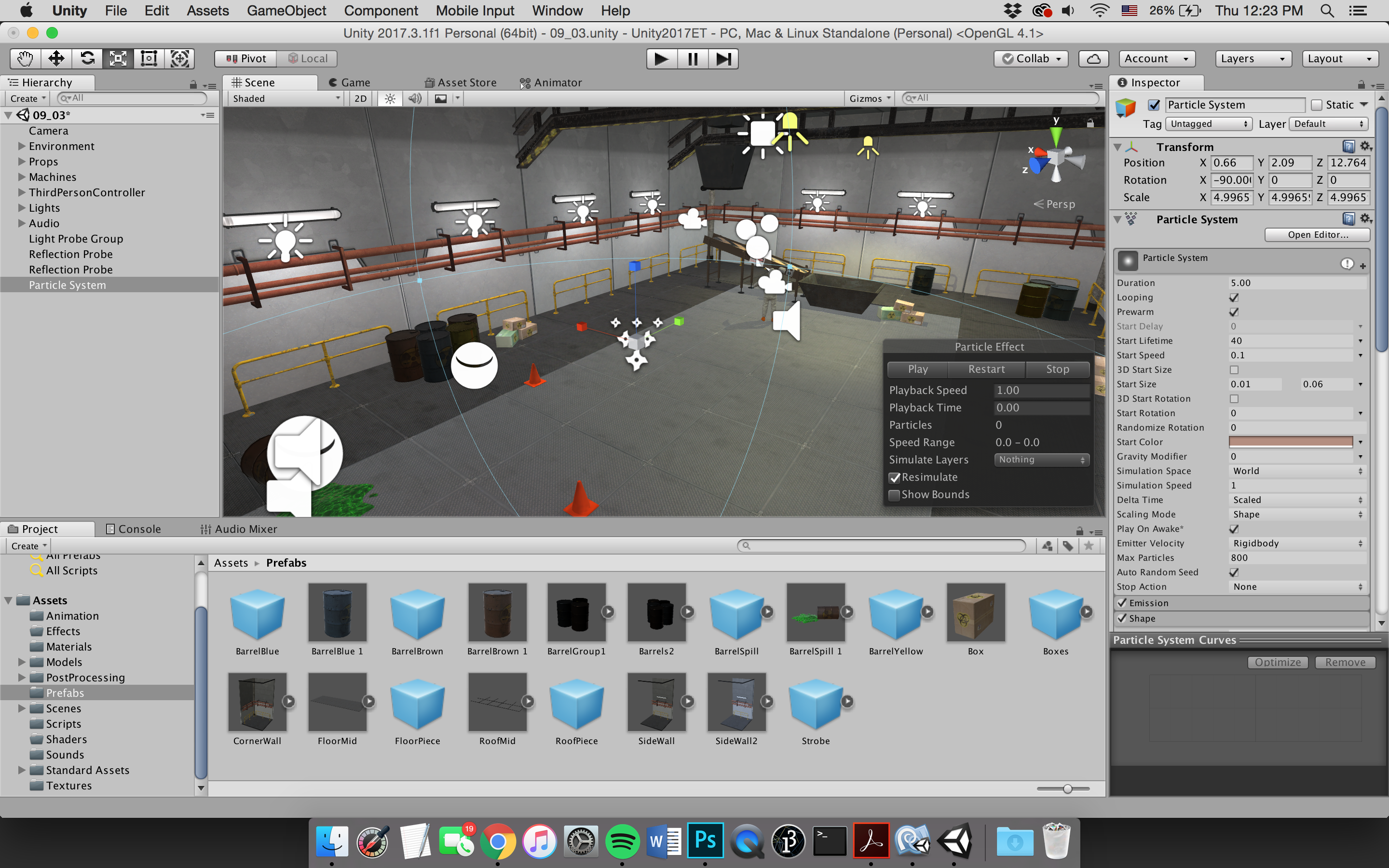

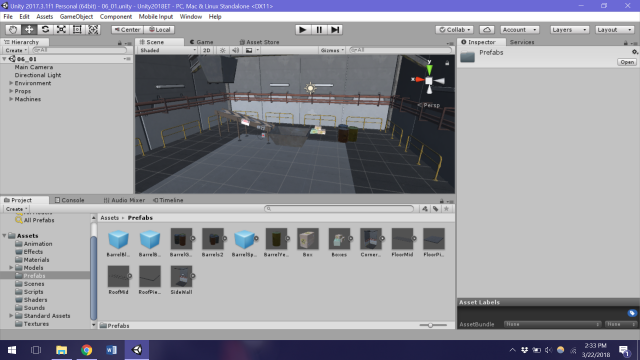

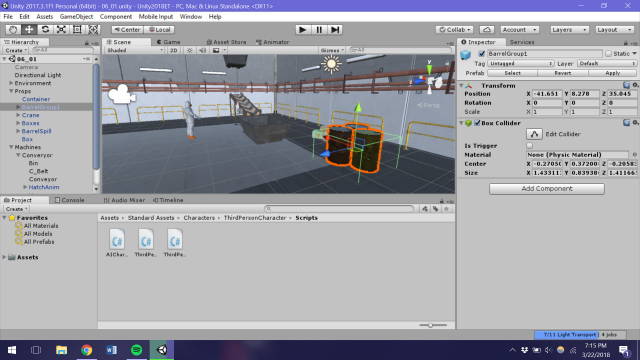

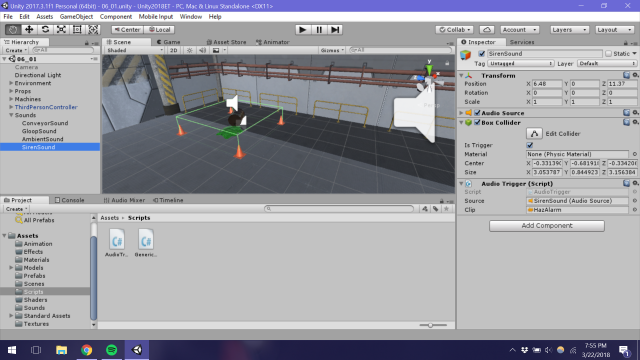

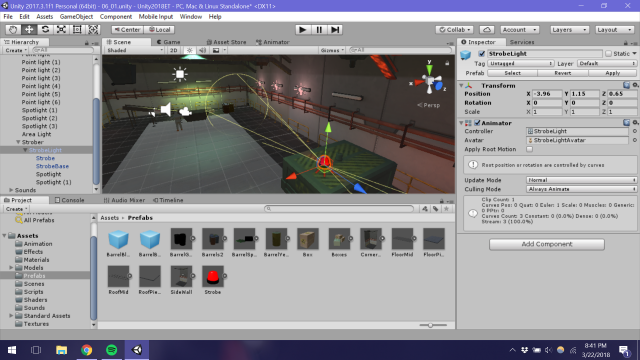

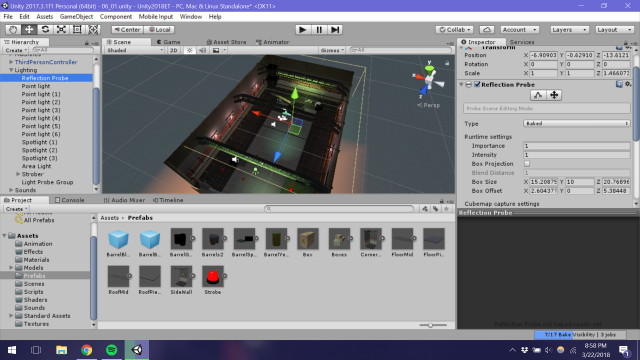

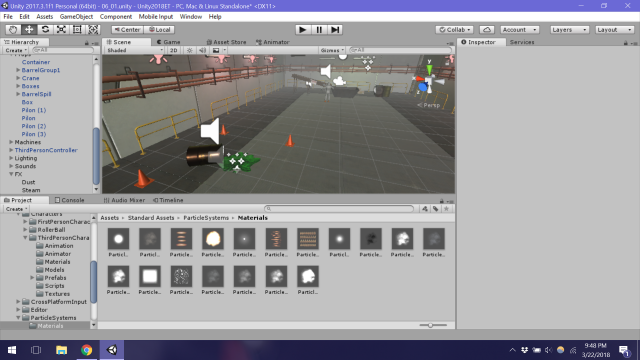

joxin-UnityEssentials

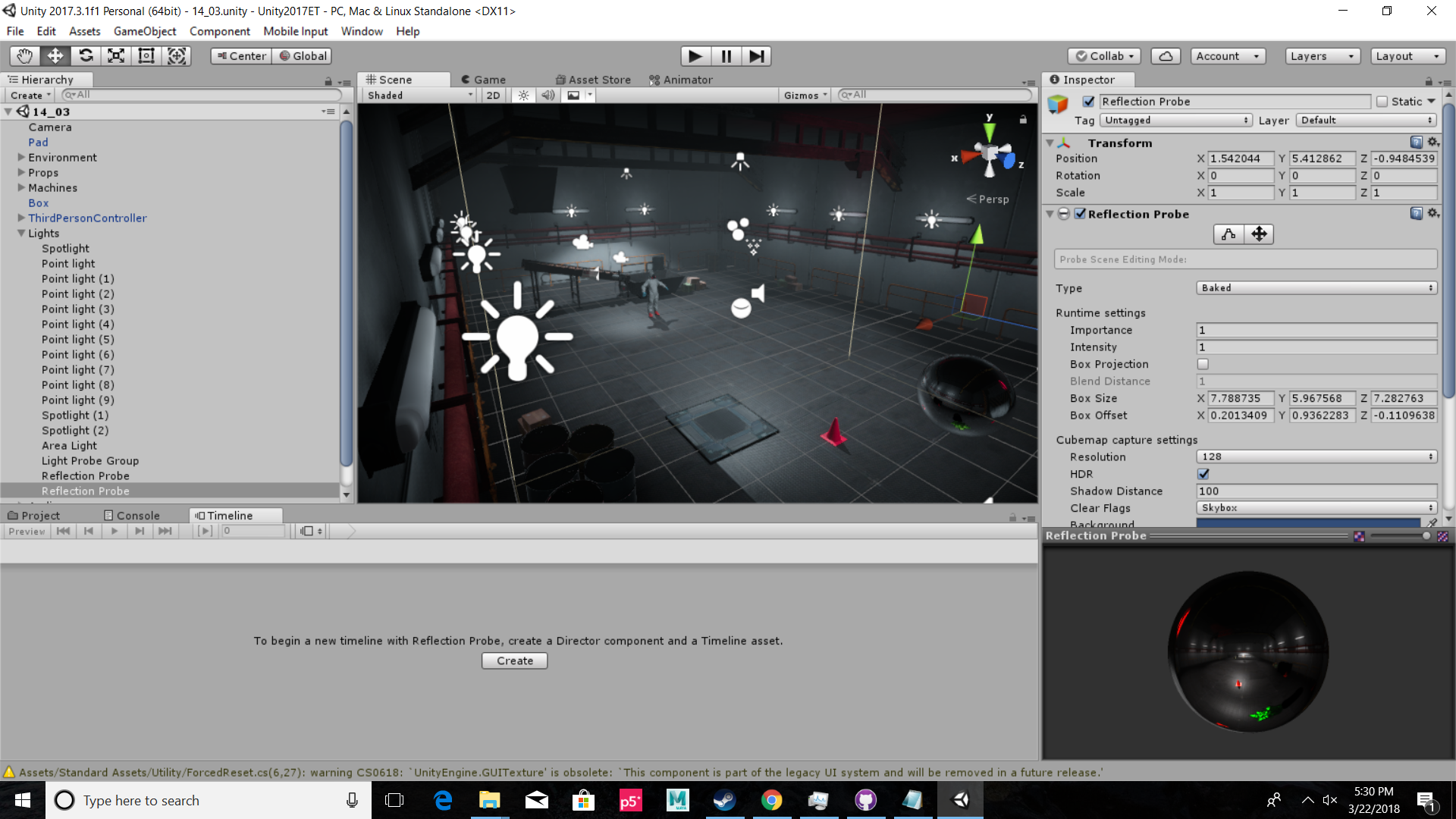

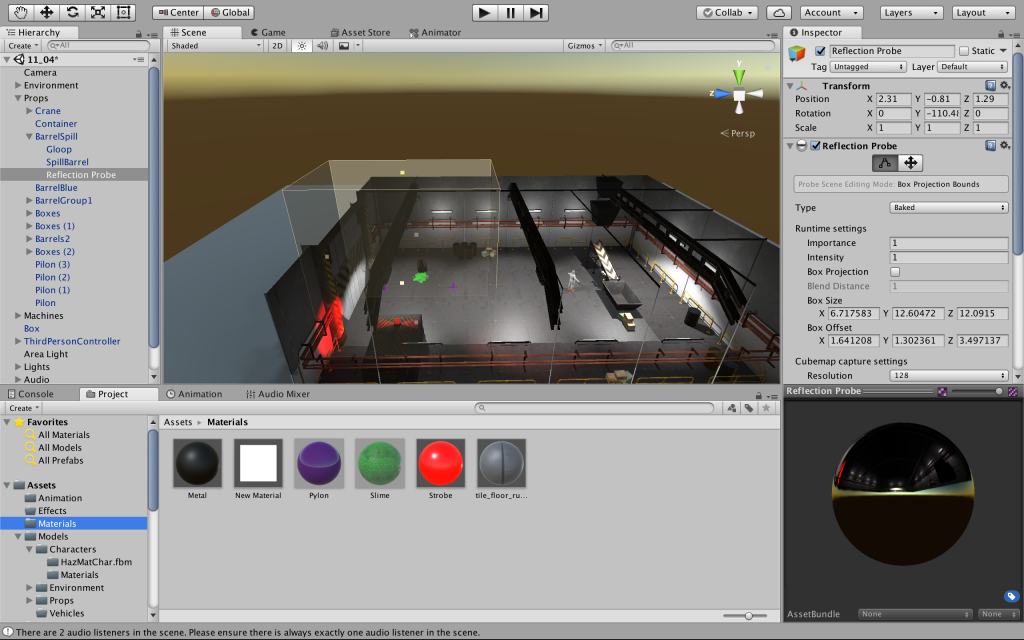

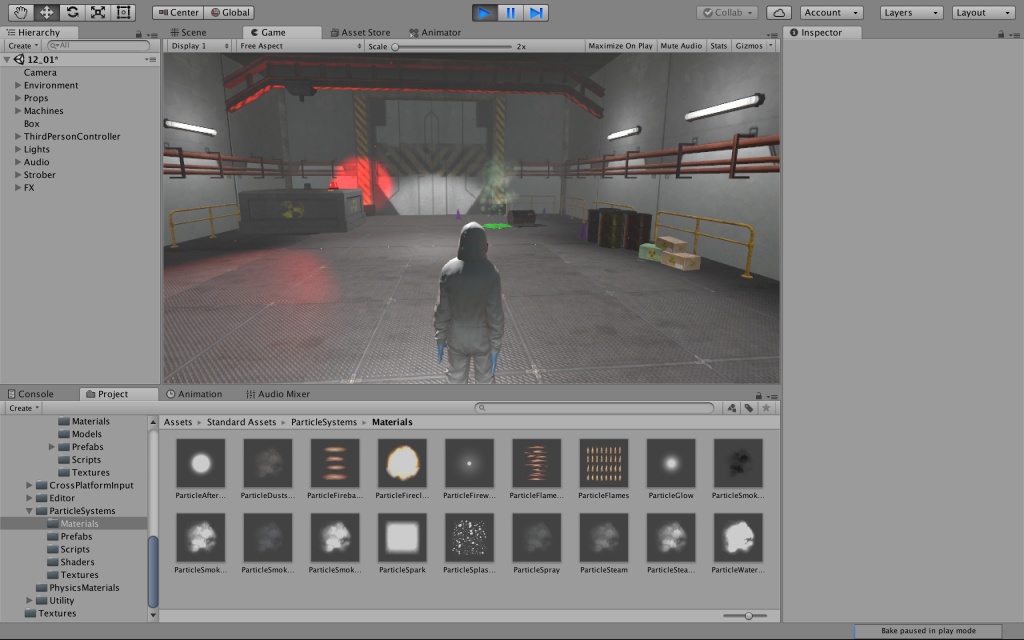

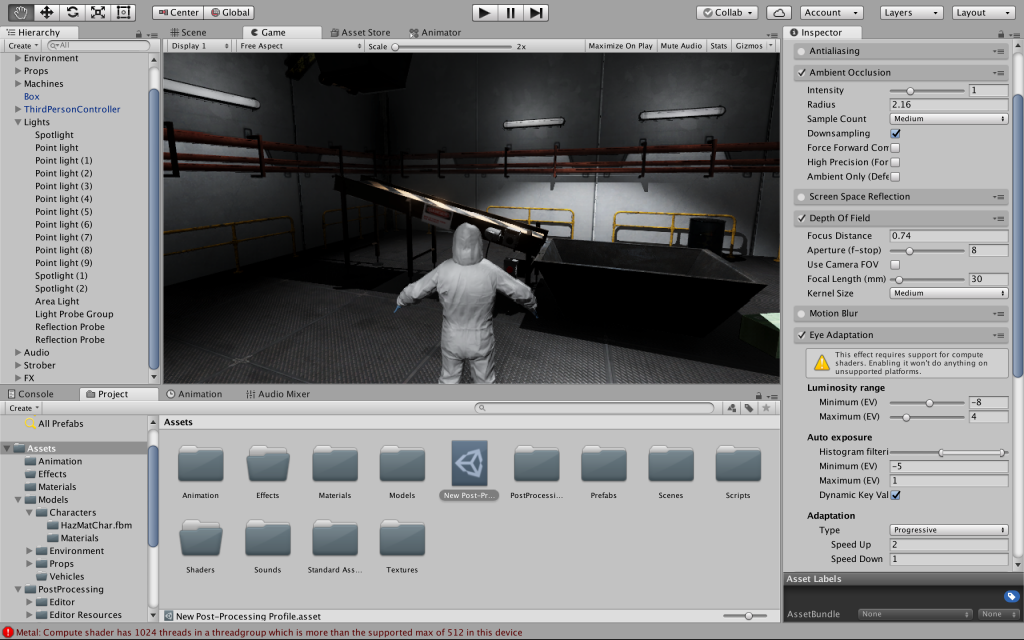

Notes for myself:

- Before turning an object into a prefab, I should place it in the desired position relative to the origin (i.e., the prefab’s transform should give the desired position when the prefab is placed at [0, 0, 0]).

- light probe group not working on my machine?

- Use Q, W, E, R, T to toggle between the Hand, Move, Rotate, Scale, and Rect tools in the Scene view

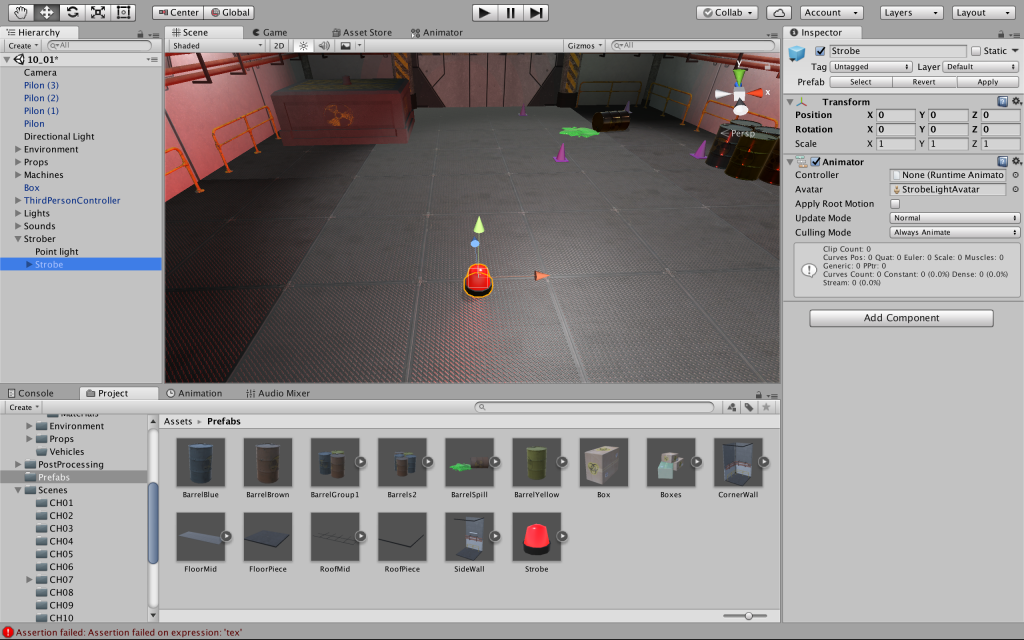

Tutorial_1

Tutorial_2

Tutorial_3

Tutorial_4

Tutorial_5

Tutorial_6

Tutorial_7

Tutorial_8

Tutorial_9

Tutorial_10

Tutorial_11

Tutorial_12

Tutorial_13

Tutorial_14

Tutorial_15

conye – LookingOutwards07

There’s this new VR headset in Japan with an arm attached to it, so the wearer can experience a cute Japanese girl feeding them candy in 4D.

I can’t handle the fact that this exists. I really want to try it, not because I want to experience a cute Asian girl feeding me candy, but because I want to experience how realistic it is. It’s kind of amazing that they managed to sync an arm to a VR headset and package it as a commercial product, and it’s even more amazing that they did it because there was (I think?) an apparently large demographic that would want this product. It’s such a one-dimensional product. I mean, imagine all the things they could’ve done with an arm and a VR headset, and they came up with this? I’ve been in a long-distance relationship for a few years, so the idea of intimacy through digital means is super appealing to me. How many levels of removal are we willing to accept for the feeling of intimacy with someone? This video makes me want to build a bunch of creepy fake intimacy machines for a capstone project.

LookingOutwards07

This AR interpretation of manga-style illustration

I like it because… well, I admit I haven’t seen that many AR projects, let alone experienced AR myself; yes, I haven’t even played Pokemon Go. Nevertheless, this is the first AR piece I’ve seen that plays its trick on something we almost always take for granted as 2D (manga-style, graphic-design-like, black-and-white illustration), and the effect is like magically blending the 2D graphic-design world with our 3D space. It also reminds me of the Harry Potter films, in which pictures in newspapers can move. With AR I can totally see that being achievable in our Muggle world as well 🙂