New Capstone Idea

After working on my original idea and building a working prototype, I realized it was a little too simple and boring. Changing people's clothes was a challenging computer vision problem, but once it was done there was no real element of surprise.

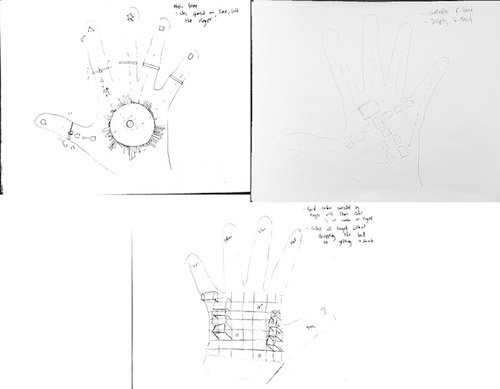

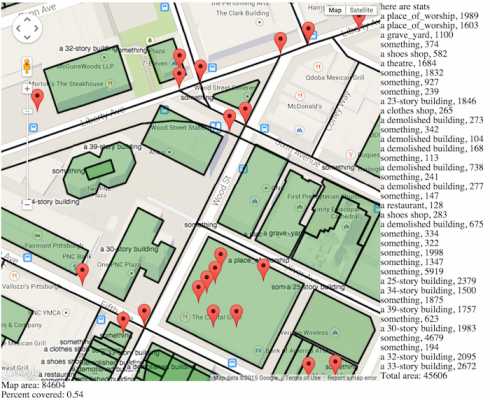

With this in mind, I changed my project to a more interesting one: a game built with the Leap Motion that lives in your hand. The game will be projected onto your hand, and your hand will serve as both the main display and the input device.

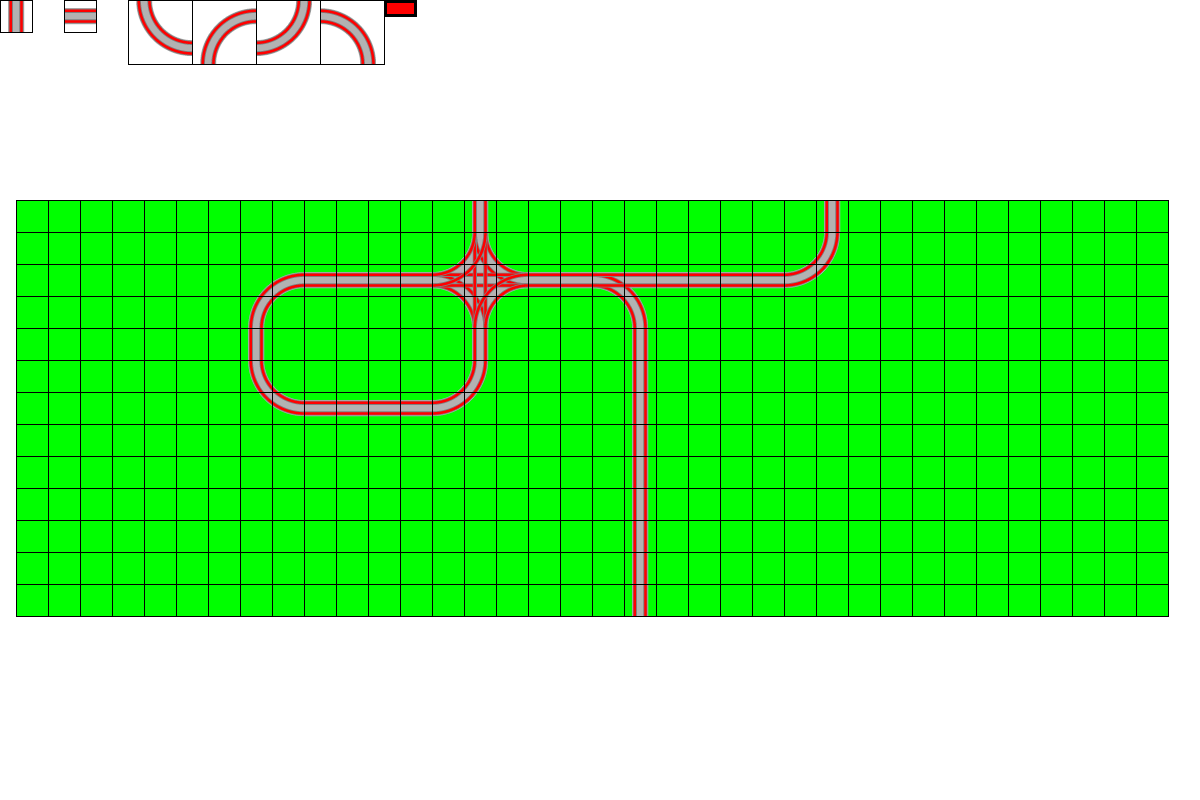

I will use openFrameworks for calibration and Unity3D for the main game mechanics.

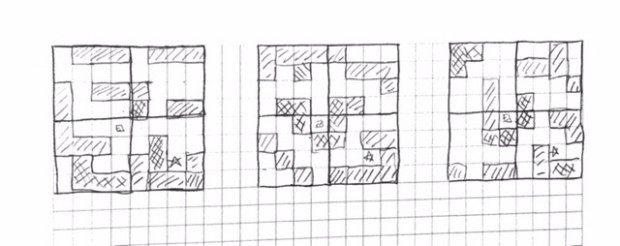

These are some of my initial game ideas. I will start with one and, if I have time, make a second.

For the first game, I am designing the levels. It is a maze in which the user moves their hand and fingers to get the ball to the final destination. As the user moves their fingers, some walls disappear or appear to help them guide the ball to the destination.
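To make the finger-controlled walls a little more concrete, here is a minimal sketch of one way the rule could work on a grid maze. It is not the actual game code (which will live in Unity3D). Everything here is a hypothetical assumption: the `Wall` type, the `blocked_cells` and `step_ball` helpers, the five-boolean "finger extended" list (standing in for whatever the Leap Motion tracking reports), and the idea that a hand tilt becomes a one-cell move direction.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical finger indices (placeholders, not Leap Motion SDK identifiers).
THUMB, INDEX, MIDDLE, RING, PINKY = range(5)


@dataclass(frozen=True)
class Wall:
    cell: tuple[int, int]              # (row, col) the wall occupies
    finger: int                        # finger that controls this wall
    hidden_when_extended: bool = True  # True: extending the finger removes the wall;
                                       # False: extending the finger makes it appear


def blocked_cells(walls: list[Wall], extended: list[bool]) -> set[tuple[int, int]]:
    """Return the cells currently blocked, given which fingers are extended."""
    blocked = set()
    for wall in walls:
        # A wall is present when the finger state does NOT match its "hide" condition.
        if extended[wall.finger] != wall.hidden_when_extended:
            blocked.add(wall.cell)
    return blocked


def step_ball(ball: tuple[int, int], tilt: tuple[int, int], walls: list[Wall],
              extended: list[bool], rows: int, cols: int) -> tuple[int, int]:
    """Move the ball one cell in the tilt direction unless it is blocked or off the grid."""
    dr, dc = tilt
    target = (ball[0] + dr, ball[1] + dc)
    in_bounds = 0 <= target[0] < rows and 0 <= target[1] < cols
    if in_bounds and target not in blocked_cells(walls, extended):
        return target
    return ball  # blocked: the ball stays where it is


if __name__ == "__main__":
    walls = [Wall((0, 1), INDEX), Wall((1, 2), MIDDLE, hidden_when_extended=False)]
    closed_hand = [False] * 5
    index_out = [False, True, False, False, False]
    print(step_ball((0, 0), (0, 1), walls, closed_hand, 3, 3))  # wall at (0, 1) blocks the move
    print(step_ball((0, 0), (0, 1), walls, index_out, 3, 3))    # extending the index finger opens it
```

Giving each wall a controlling finger and a "hide or show" flag keeps level design data-driven: a level is just a list of walls, so puzzles can mix walls that open and walls that close when the same finger moves.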