setbacks

Machine Learning – In the days leading up to the final critique, most of my frustration came from trying to get the GRT machine learning library working with openFrameworks. Even though there are tutorials such as this one, there is apparently a still-unresolved issue with C++11.

Pure Data – Since moving to OS X, I haven’t been able to process OSC data and run audio DSP at the same time. This was a very strange glitch, but I think it has been solved. With Dan Wilcox’s help, we found that sending all of the Leap data over OSC is too much and clogs the port. The immediate solution is to send only the data I need, but this is not ideal, since I also want to release this app as a tool to publish Leap data over OSC.

successes

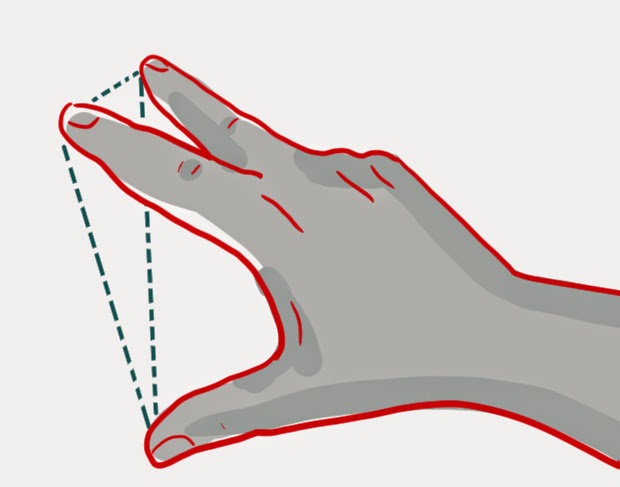

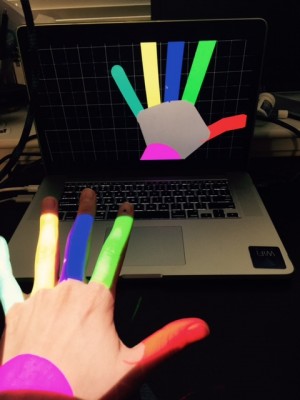

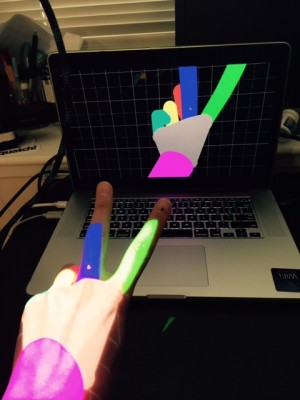

The selection gesture works relatively well and has a good feel. Even with the GRT problem, it should be relatively easy to fall back on a standard gesture, such as a grab, to keep the selected area.

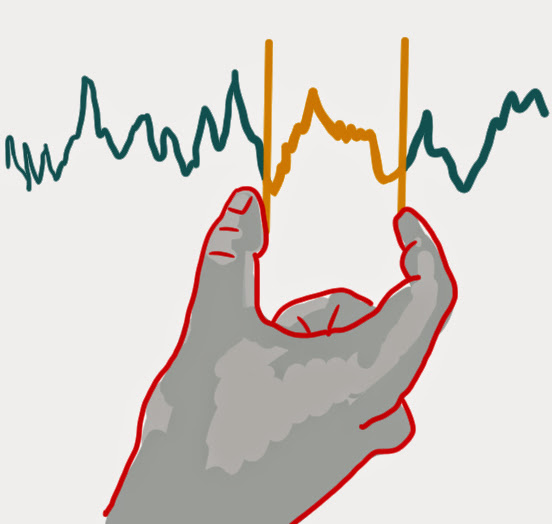

Triangulation gesture – This gesture seemed very intriguing, and now that it’s implemented, I can confirm that it is. Although the question of which parameter to map it to is still very much up in the air, the basic interaction itself is quite satisfying.

$$
\left(\frac{x_1+x_2+x_3}{3},\ \frac{y_1+y_2+y_3}{3},\ \frac{z_1+z_2+z_3}{3}\right)
$$

Interface

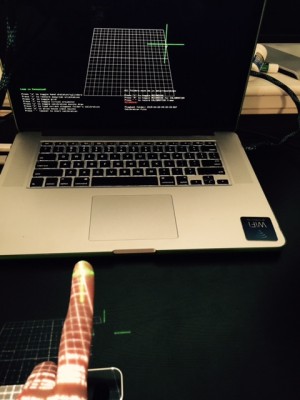

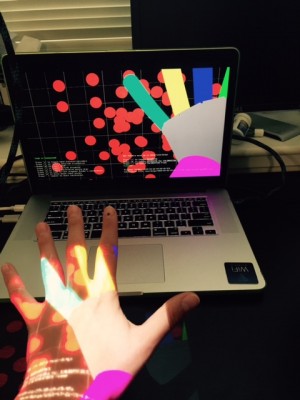

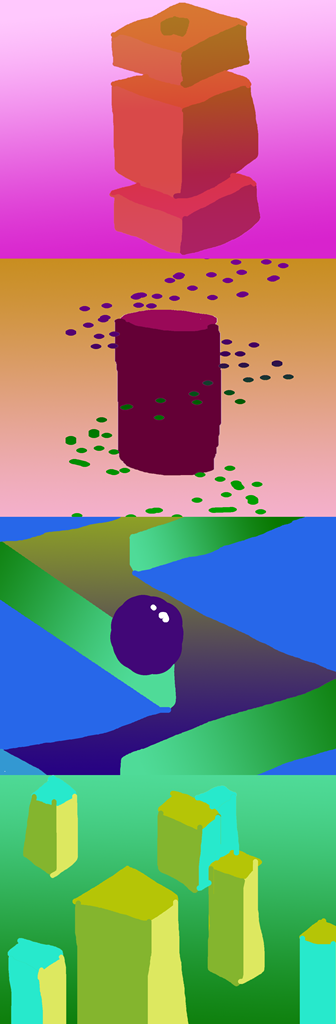

It’s clear that I’m working on two distinct features here: an editing tool and a performance instrument, so they should live on separate pages. For the most part, the selection interface looks like the right direction, but I have some ideas about the performance interface. Here are some sketches of what it could look like: