Ideas for final project

For my final project I want to do something fun with projectors, for several reasons:

1. I have a projector at home and I immensely enjoy it.
2. Among all forms of display, projectors probably have the least defined shape and scale. A projector can magnify something that occupies only a few pixels on screen into a wall-sized (even building-sized) image. I think that is really magical.
3. I found some really good inspiration in the projects Golan showed in class. One of them is Light Leaks by Kyle McDonald and Jonas Jongejan:

This project reminds me of a thing that almost everyone used to do: using a mirror to reflect light spots onto something / someone. I still do that with the reflective Apple logo on the back of my cellphone. I think the charm of this project comes from the exact calculation they did, which resulted in the beautiful light patterns. Though reflecting light spots with disco balls alone is a cool enough idea, the project would not be as stunning as it is if the light had simply been scattered onto the walls in random directions.

A little experiment

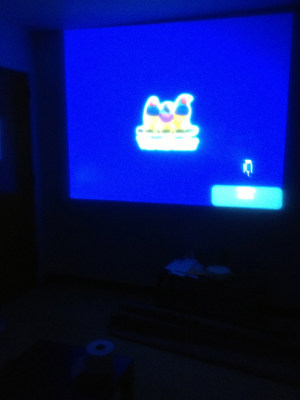

I was really amazed by the concept that ‘projection can be reflected’, so much so that I carried out this little experiment at home:

This is the projector I have at home. We normally just put it on a shelf and point it at a blank wall.

The other night I put a mirror in front of the light beam it projected. The mirror reflected a partial view of the projected image onto a completely different wall (the one that’s facing my bedroom, perpendicular to the blank wall). It’s hard to tell from the photo, but the reflected image was actually quite clear. As I rotated the mirror around its holder, the partial image started to move around the room in a predictable elliptical trajectory. That meant I could sort of control the location of the image in 3D space by setting the orientation of the mirror. If I could mount a mirror on a servo and control the orientation of the servo, then with some careful computation (that I don’t know how to do yet) I’d be able to cast the projection anywhere in the room. Furthermore, the projected image doesn’t have to be a still image: it could be a video, or be interactively determined by the orientation of the mirror.
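As a first guess at what that "careful computation" might look like, here is a minimal sketch based on the law of reflection: the mirror's normal has to bisect the direction back toward the projector and the direction toward the target spot. Everything here is an assumption for illustration: the function names are made up, the mirror is treated as a single point, the projector beam as a single ray, and the room is given a coordinate frame with z pointing up. A real setup would also need calibration between these angles and the actual servo positions.

```python
import math

def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    if length == 0:
        raise ValueError("zero-length vector")
    return tuple(c / length for c in v)

def mirror_normal(projector, mirror, target):
    """Unit normal the mirror needs so that light travelling from
    `projector` to `mirror` is reflected toward `target`.
    All three arguments are (x, y, z) points in room coordinates."""
    to_projector = normalize(tuple(p - m for p, m in zip(projector, mirror)))
    to_target = normalize(tuple(t - m for t, m in zip(target, mirror)))
    # Law of reflection: the normal bisects the two directions.
    return normalize(tuple(a + b for a, b in zip(to_projector, to_target)))

def reflect(direction, normal):
    """Reflect an incoming direction off a surface with the given unit normal."""
    d = sum(a * b for a, b in zip(direction, normal))
    return tuple(a - 2 * d * b for a, b in zip(direction, normal))

def pan_tilt_degrees(normal):
    """Convert a unit normal to a pan angle (rotation about the vertical
    z axis) and a tilt angle (elevation above the horizontal plane).
    Assumes a hypothetical two-servo pan/tilt mount aligned with the room axes."""
    x, y, z = normal
    pan = math.degrees(math.atan2(y, x))
    tilt = math.degrees(math.asin(max(-1.0, min(1.0, z))))
    return pan, tilt

# Example: projector at the origin, mirror one unit along x,
# target one unit to the side of the mirror along y.
n = mirror_normal((0, 0, 0), (1, 0, 0), (1, 1, 0))
print(pan_tilt_degrees(n))
```

Calling `reflect` with the incoming beam direction and the computed normal is a quick way to check that the reflected ray really points at the target before sending anything to hardware.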

This opens an exciting possibility of using the reflected light spot as a lens into some sort of “hidden image”. For example, the partial image could show the scene behind the wall it’s projected onto. The light spot in a sense becomes a movable window into the scene behind – and it’s interactive, inviting people to move it around to explore more of the total view. Or, the projector + mirror could become a game where the game world is mapped to the full room. Players can only see part of the world at once through the projected light spot, and they move their characters by rotating the mirror / interacting with other handles that manipulate the mirror.
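To make the "movable window" idea a bit more concrete, here is a rough sketch of how the visible part of a hidden image could be chosen from where the light spot lands. It assumes the spot's position on the wall is already known as normalized coordinates between 0 and 1 (how to measure that is an open question), and the function name and parameters are hypothetical.

```python
def window_rect(center_u, center_v, window_w, window_h, image_w, image_h):
    """Return the (left, top, right, bottom) pixel rectangle of the hidden
    image that should be shown when the light spot is centered at the
    normalized wall position (center_u, center_v), each in the range 0..1.
    The rectangle is clamped so it never leaves the hidden image."""
    if window_w > image_w or window_h > image_h:
        raise ValueError("window is larger than the hidden image")
    left = round(center_u * image_w - window_w / 2)
    top = round(center_v * image_h - window_h / 2)
    # Keep the window fully inside the image bounds.
    left = max(0, min(image_w - window_w, left))
    top = max(0, min(image_h - window_h, top))
    return left, top, left + window_w, top + window_h

# Spot in the middle of the wall, 200x100 window into a 1000x500 hidden image.
print(window_rect(0.5, 0.5, 200, 100, 1000, 500))
```

The cropped region would then be drawn in the part of the projector frame that hits the mirror, so that moving the mirror reveals a different part of the scene.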

If all I want is to project an image onto an arbitrary surface, why not just move the projector?

Well, the foremost reason is that moving a mirror is way easier than moving a projector. The shape of the projection can also be based on the shape of the mirror, so that we don’t always get an ugly distorted rectangle. The idea can be set up relatively easily with the tools we have on hand. Another motivation is that with multiple mirrors, each reflecting a certain region of the raw projection, the original image can be broken into parts and reflected in diverging directions. The parts can all move independently while ultimately using light from the same projector. Light Leaks uses this advantage very well to emit light spots in numerous directions.

So that’s what I’ve thought of so far. There are still many undetermined parts, and I’m not sure how challenging it would be implementation-wise. I only played with Arduino in middle school, and it was such a disaster that I completely stayed away from hardware in college. But I’m willing to learn whatever is needed to make the idea come true. I’m still researching other works for inspiration, and also trying to make sure that my idea hasn’t already been done by someone else.

Other inspirations:

Chase No Face – face projection. Also discussed in class.

More to come…

Update:

Taeyoon and Kyle pointed me to this project.