Looking Glass: Little Owl Lost

Young readers bring storybook characters to life through the Looking Glass.

I wanted to explore augmented storytelling, so I created a device that adds content to a book. The reader guides this ‘magnifying glass’ device over the pages of a picture book. Animations appear on the display based on where the magnifying glass is on the page. These animations add to the content of the story and let the reader explore new interactions.

I used the book Little Owl Lost by Chris Haughton.

I used the main character, Owl, as the focal point for the animations.

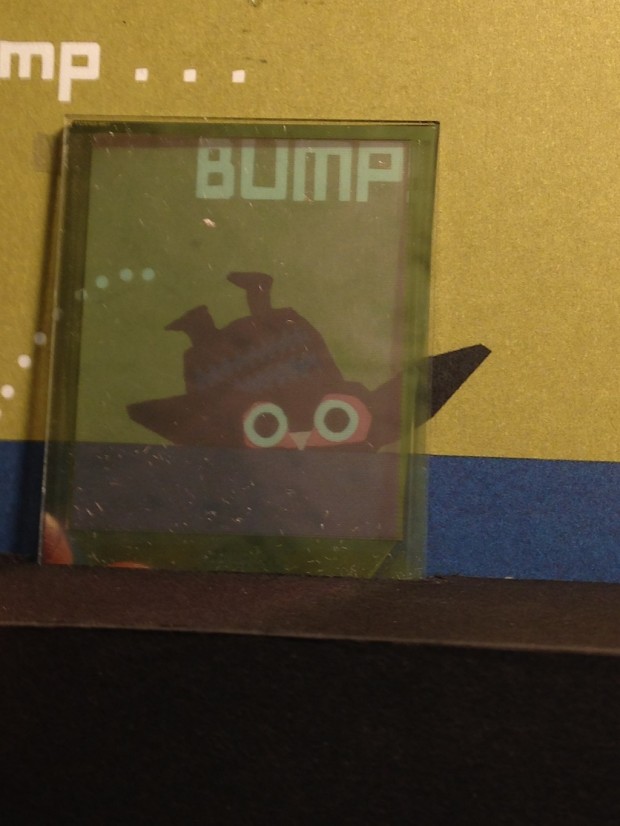

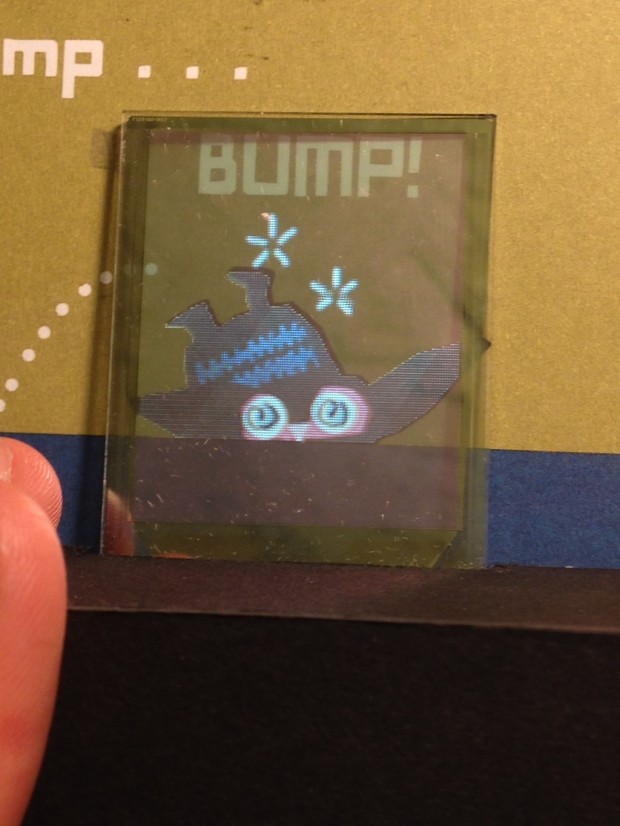

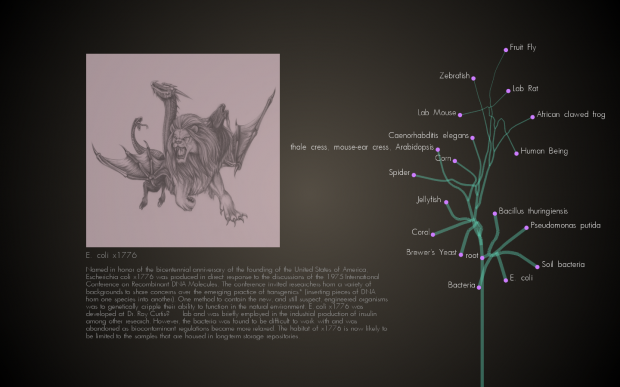

One of the animations is triggered as the magnifying glass device is brought to the correct position:

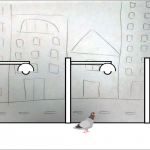

Here is another example with before and after:

These are some of the animations for Little Owl Lost.

How it works

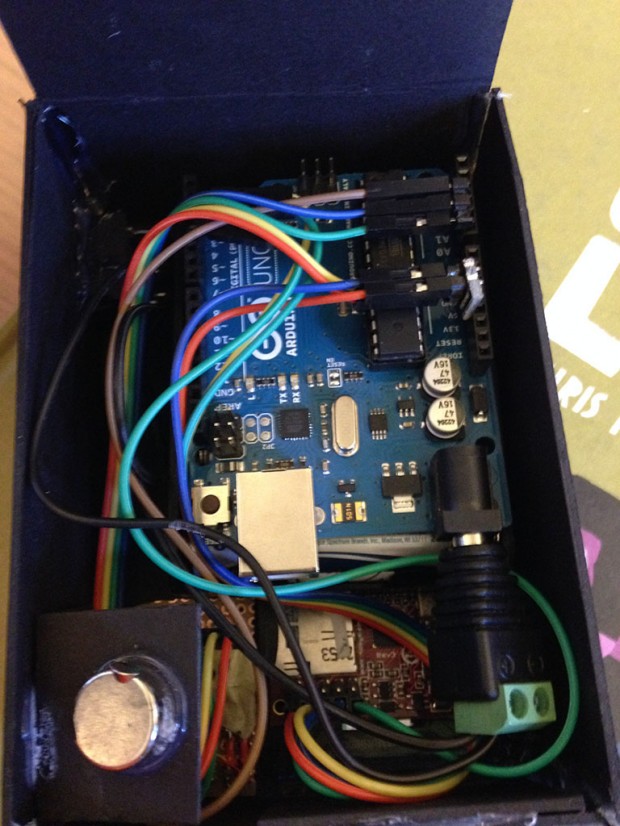

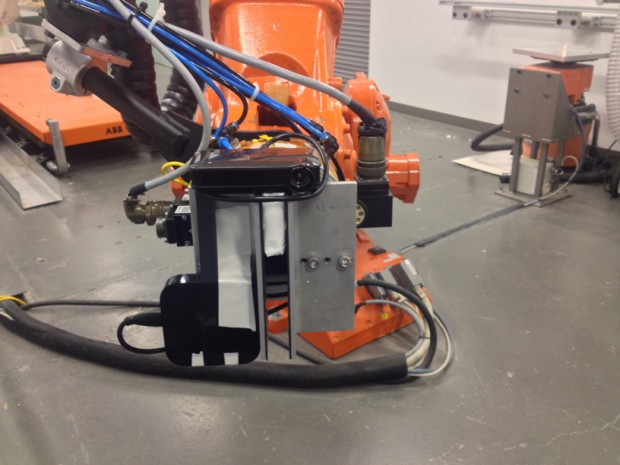

Hardware Specs

This project is composed of only a few parts. The Arduino controls the interface. The OLED screen has its own processor and an SD card that stores all of the animations. The two processors communicate over serial. There are also 3 Hall effect sensors, an on/off switch, and batteries.

Prior tests of the OLED are in this previous post.

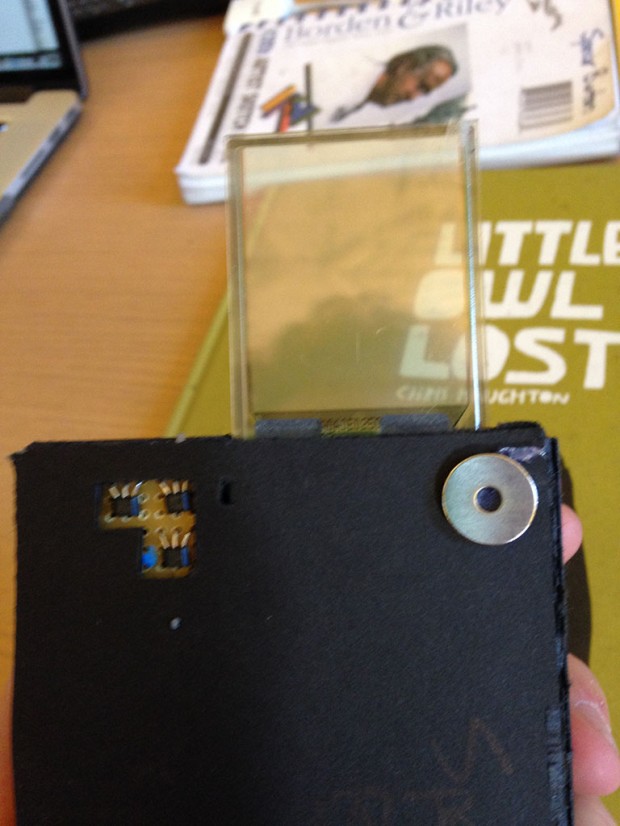

OLED Display

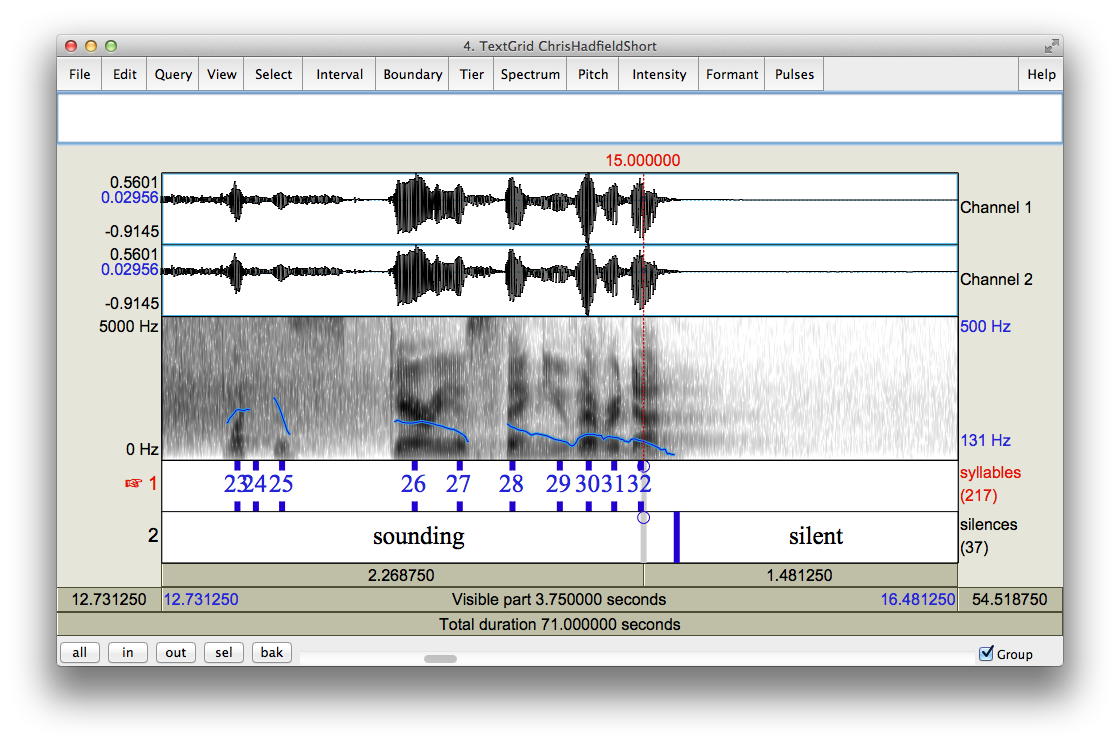

The OLED (organic LED) display comes from 4D Systems. I used their uTOLED_20_G2 display, which is no longer in production. Animations were loaded as GIFs onto an SD card that lived on the display and were then triggered via the display's supported serial interface.

Hall Effect Sensors and Magnet Tags

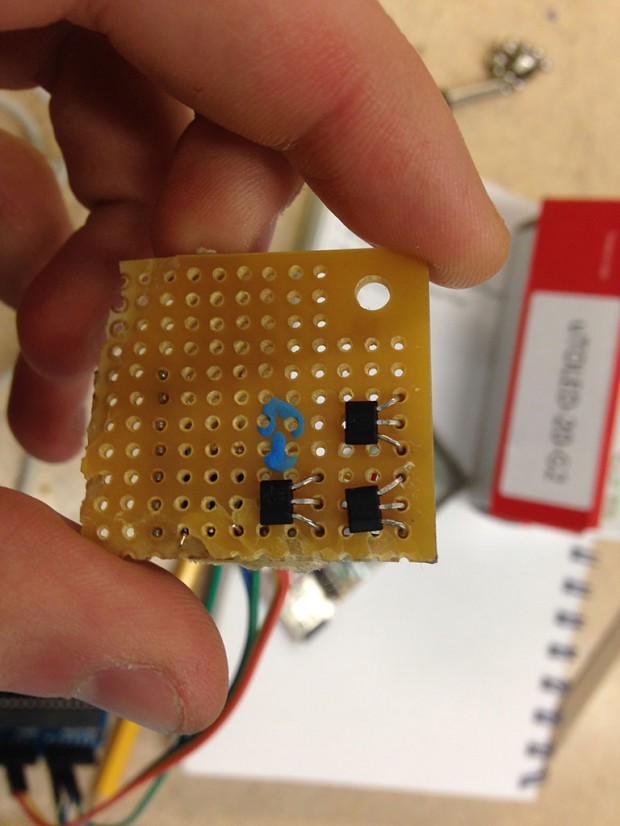

I use Hall effect sensors and magnets to detect where I am in the book. I created a series of tags, each consisting of 3 magnets arranged in an L shape. Each magnet can have either its positive or negative pole facing upwards, which gives 2 × 2 × 2 = 8 unique tag combinations. The L shape of the tag lets me determine orientation.
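The tag encoding described above can be sketched as packing three polarity readings into a 3-bit number. This is a minimal illustration, not code from the project: the names `Pole` and `tagId` and the bit order (corner magnet first) are assumptions made for the example.

```cpp
#include <cstdint>

// Which pole of a magnet faces upwards (hypothetical naming for this sketch).
enum class Pole : std::uint8_t { Negative = 0, Positive = 1 };

// Pack the three magnets of one L-shaped tag into an ID from 0 to 7.
// The bit order (corner, first arm, second arm) is an arbitrary choice here.
std::uint8_t tagId(Pole corner, Pole firstArm, Pole secondArm) {
    return static_cast<std::uint8_t>(
        (static_cast<std::uint8_t>(corner) << 2) |
        (static_cast<std::uint8_t>(firstArm) << 1) |
        static_cast<std::uint8_t>(secondArm));
}
```

With this ordering, a tag with the corner magnet positive, the first arm negative, and the second arm positive maps to ID 5, and the eight possible pole combinations cover IDs 0 through 7 exactly once.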

I then placed the tags inside the front and back cover of a book. The magnetic field can be detected through multiple pages.

I use Hall effect sensors to measure magnetic polarity. There are 3 sensors, one for each of the 3 magnets in a tag. The sensors are highly accurate and detect a magnet only when positioned directly over it.
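One way to turn a sensor reading into a polarity is to compare it against a resting value. The sketch below assumes an analog Hall effect sensor whose output sits near a baseline when no magnet is present and moves up or down depending on the pole beneath it. The source does not state the sensor type, and the names `Field` and `classify`, the baseline, and the threshold are all hypothetical.

```cpp
// Result of looking at one sensor (hypothetical naming for this sketch).
enum class Field { None, Positive, Negative };

// Classify one raw analog sample relative to a zero-field baseline.
// `baseline` and `threshold` are assumed values that would come from calibration.
Field classify(int raw, int baseline, int threshold) {
    const int delta = raw - baseline;
    if (delta > threshold) return Field::Positive;
    if (delta < -threshold) return Field::Negative;
    return Field::None;  // reading is too close to rest to count as a magnet
}

// A tag counts as present only when all three sensors see a magnet.
bool tagPresent(Field a, Field b, Field c) {
    return a != Field::None && b != Field::None && c != Field::None;
}
```

Requiring all three sensors to report a magnet before accepting a tag is one simple way to ignore partial overlaps while the device is still sliding into position.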

Placement Magnet

To help the reader find the animations and trigger them at the correct location, I added larger placement magnets to both the book and the magnifying glass object. These magnets hold the display in place while the animation plays.

Flaws

The most glaring flaw of the current design is that the Looking Glass never actually knows which page it is on. Because the magnetic field passes through all of the pages, the sensors read the same tag no matter which page is open.

A solution would involve additional sensors. For example, color sensors could sample the colors on the current page and take an educated guess as to which page the Looking Glass is over. I did test basic color sensing but did not get far enough with this project to add that feature.
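The color-based guess could be sketched as a nearest-match lookup: store one reference color per page and pick the page whose reference is closest to the sampled color. This was not built in the project. The `Rgb` struct, the `guessPage` function, and the use of squared RGB distance are assumptions for illustration.

```cpp
#include <cstddef>
#include <cstdint>

// One color sample or reference color (hypothetical type for this sketch).
struct Rgb {
    std::uint8_t r, g, b;
};

// Squared Euclidean distance between two colors in RGB space.
long distanceSquared(const Rgb& a, const Rgb& b) {
    const long dr = static_cast<long>(a.r) - static_cast<long>(b.r);
    const long dg = static_cast<long>(a.g) - static_cast<long>(b.g);
    const long db = static_cast<long>(a.b) - static_cast<long>(b.b);
    return dr * dr + dg * dg + db * db;
}

// Return the index of the reference color closest to `sample`.
// `count` must be at least 1; ties resolve to the lowest index.
std::size_t guessPage(const Rgb& sample, const Rgb* references, std::size_t count) {
    std::size_t best = 0;
    for (std::size_t i = 1; i < count; ++i) {
        if (distanceSquared(sample, references[i]) <
            distanceSquared(sample, references[best])) {
            best = i;
        }
    }
    return best;
}
```

This only works when the pages at a given tag location differ clearly in color, so a real version would probably also need a confidence check before committing to a page.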

Software

All of my code lives on GitHub. The main Arduino file is StoryBoard.ino. Connecting to the Arduino via USB enables a calibration mode for the sensors.
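The post does not describe what the calibration mode does. One plausible step, shown here purely as an assumption and not taken from StoryBoard.ino, is to average a batch of readings taken with no magnet nearby to estimate each sensor's resting value. The function name `baselineFrom` is hypothetical.

```cpp
#include <cstddef>

// Average a batch of zero-field samples to estimate a sensor's resting value.
// Returns 0 when no samples are supplied.
int baselineFrom(const int* samples, std::size_t count) {
    if (count == 0) return 0;
    long sum = 0;
    for (std::size_t i = 0; i < count; ++i) {
        sum += samples[i];
    }
    return static_cast<int>(sum / static_cast<long>(count));
}
```

A baseline estimated this way could then serve as the reference point for deciding whether a later reading indicates a positive or negative pole.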

Feedback

- Comments

- Form factor

- Ideally the box would be smaller

- Hallmark cards may be a good model

- Leah Buechley at MIT has good examples of similar work

- Jie Qi – http://technolojie.com/circuit_sketchbook/

- Story Clip – http://highlowtech.org/?p=2923

- Living Wall – http://highlowtech.org/?p=27

- Alternate idea

- Choosing where you go?

- What about board and card games?

- What if it had a wireless transmitter so you don’t know what it will show?

- What about surprise?

Based on this feedback, the project has many possible future directions. It works as a first prototype, but further iterations will need to be smaller. This is well within the realm of possibility, especially if I create a custom circuit board. I would also like to add audio. If further prototypes can be made more robust, I hope to make the Looking Glass available to the Carnegie Library of Pittsburgh.