The Digital Prophet is a rendition of Gibran Khalil Gibran’s “The Prophet” as told by the Internet, composed of tweets, images, wiki snippets and Mechanical Turk input.

The PDF is available at: http://secure-tundra-7963.herokuapp.com/

A new version can be generated at: http://secure-tundra-7963.herokuapp.com/generate

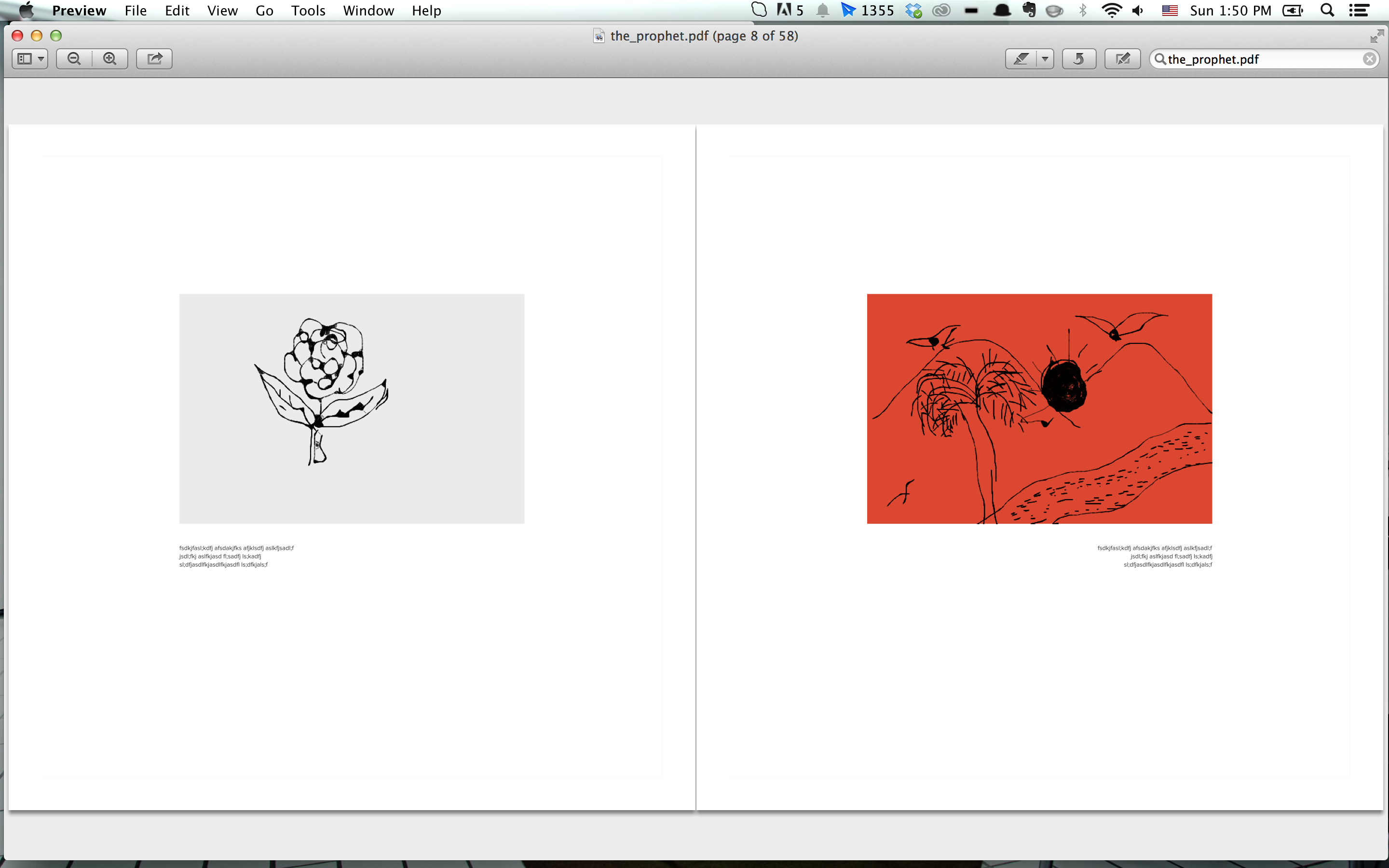

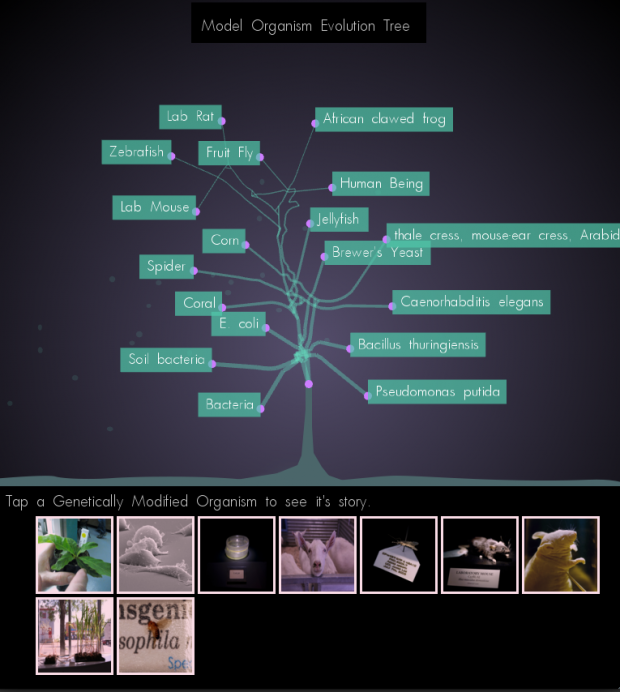

The Digital Prophet is a generative essay collection and a study of internet anthropology: the relationship between humans and the Internet. Is it possible to gain a sufficient understanding of a body of knowledge through random access to various parts of the Internet? To explore this question, random blurbs of data related to facets of life such as love, death and children are collected via stochastic processes from various corners of the Internet. The book is augmented with images and drawings collected from internet communities. In a way, the internet communities, and by extension the Internet itself, become the book’s autobiographical author. The process and content of this work are a tribute to philosopher Gibran Khalil Gibran’s The Prophet.

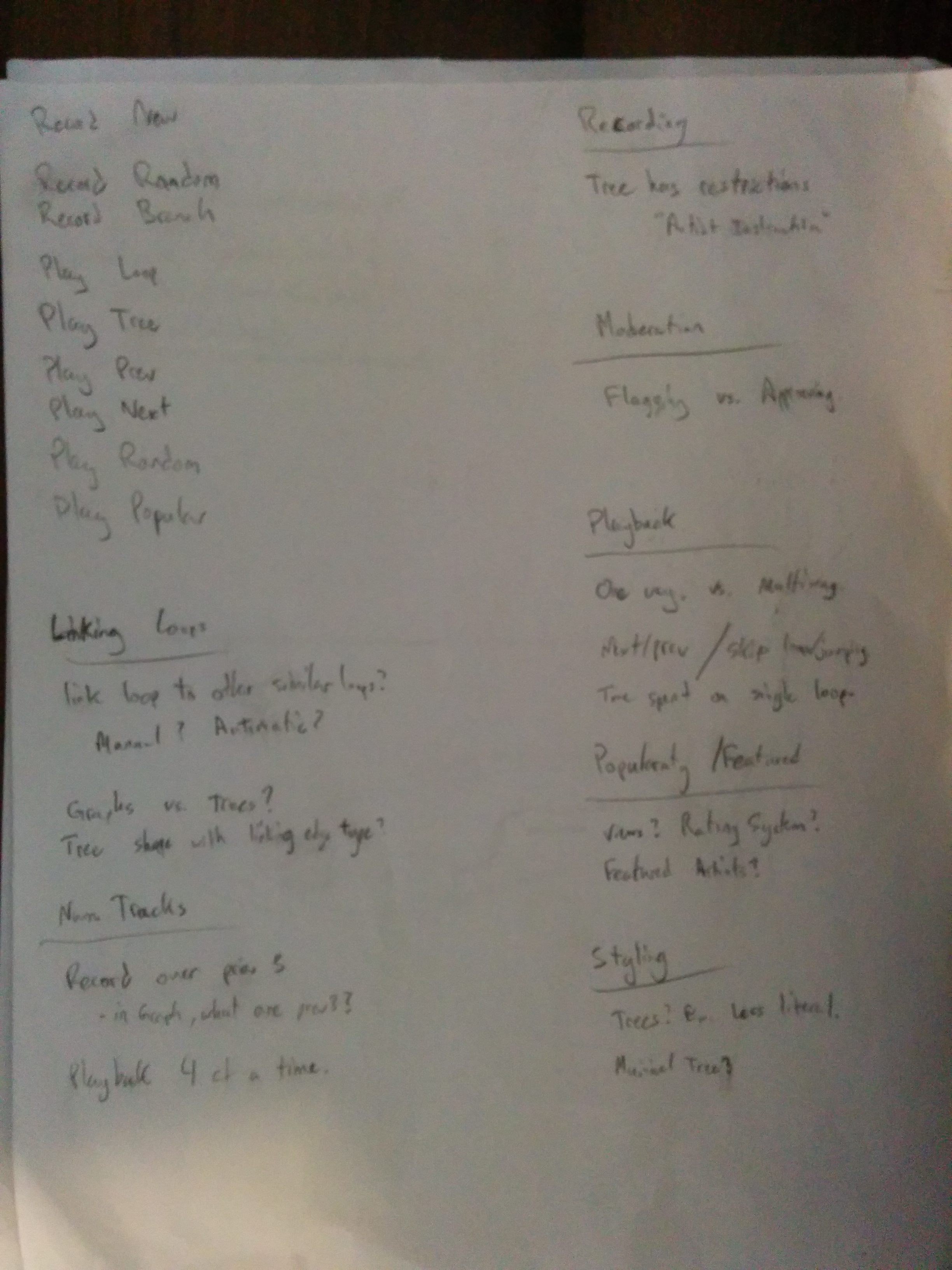

Generative processes

The Digital Prophet is a story told by The Internet, an autobiographical author composed of various generative stochastic processes that pull data from different parts of the Internet. The author is an amalgam of content from digital communities such as Twitter, Wikipedia, Flickr and Mechanical Turk.

As it is not (yet) possible to put a question directly to The Internet, each community plays two roles: first as a fountain of new data, and second as a filter on the raw form of the Internet through its categorization and annotation of that data. In effect, by peering through the lens of an internet community, we can extract answers to questions about all facets of life.

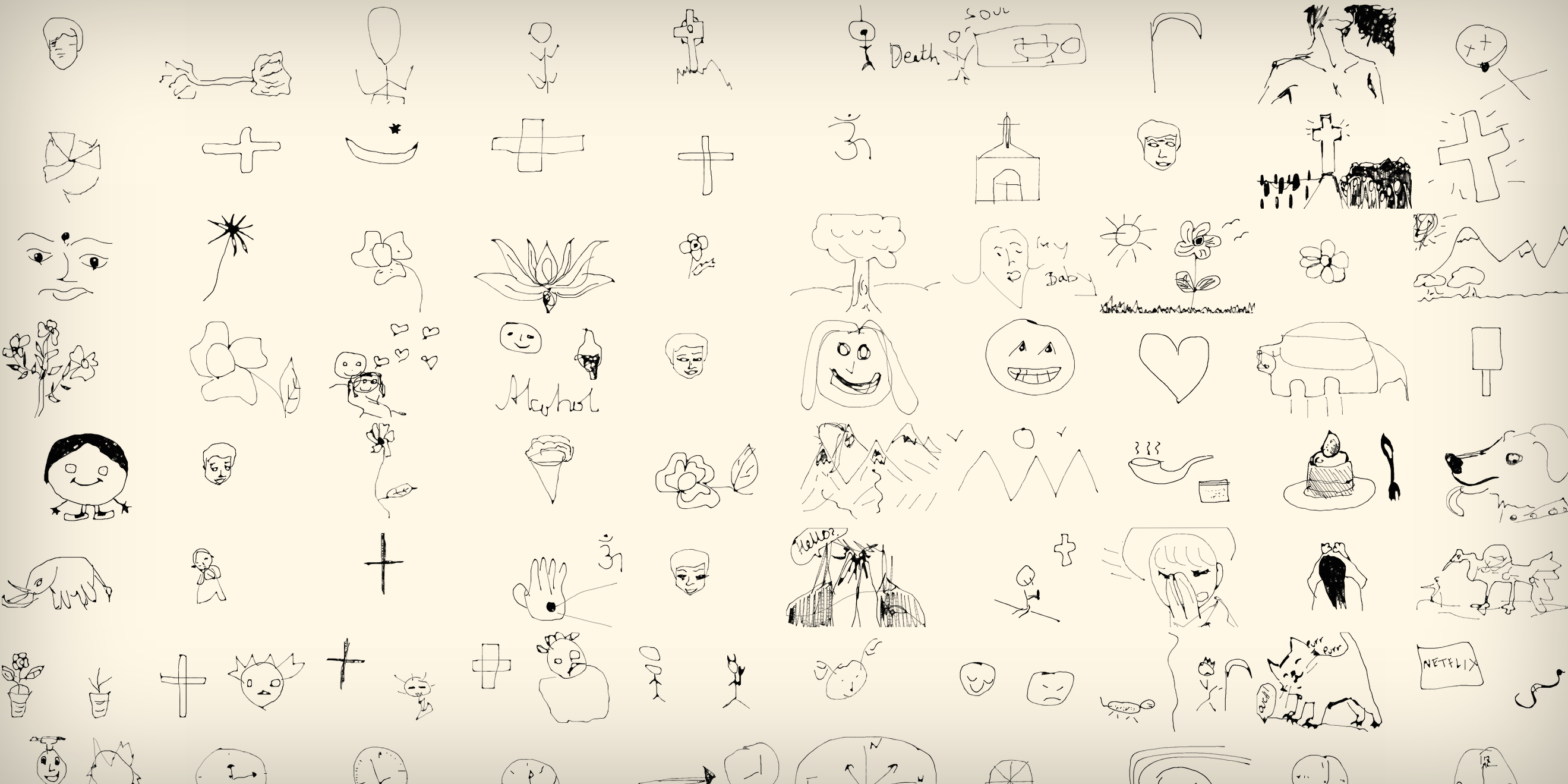

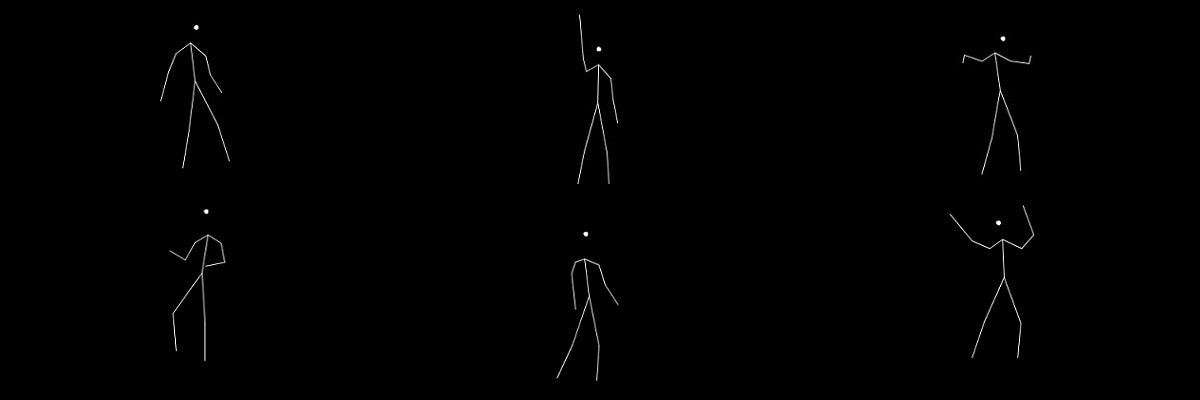

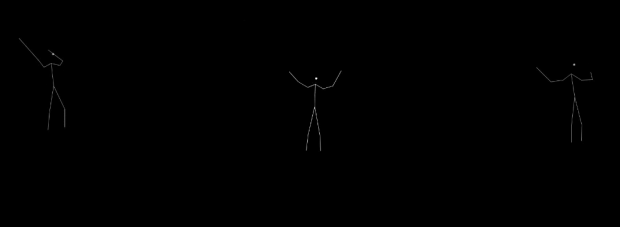

By asking personal questions of Mechanical Turk, a community of ephemeral worker processes, we obtain deep and meaningful answers. Prompts such as `What do you think death is?`, `Write a letter to your ex-lover` and `Draw what you think pain is` bring out stories of love, cancer, family, religion, molestation and myriad other topics.

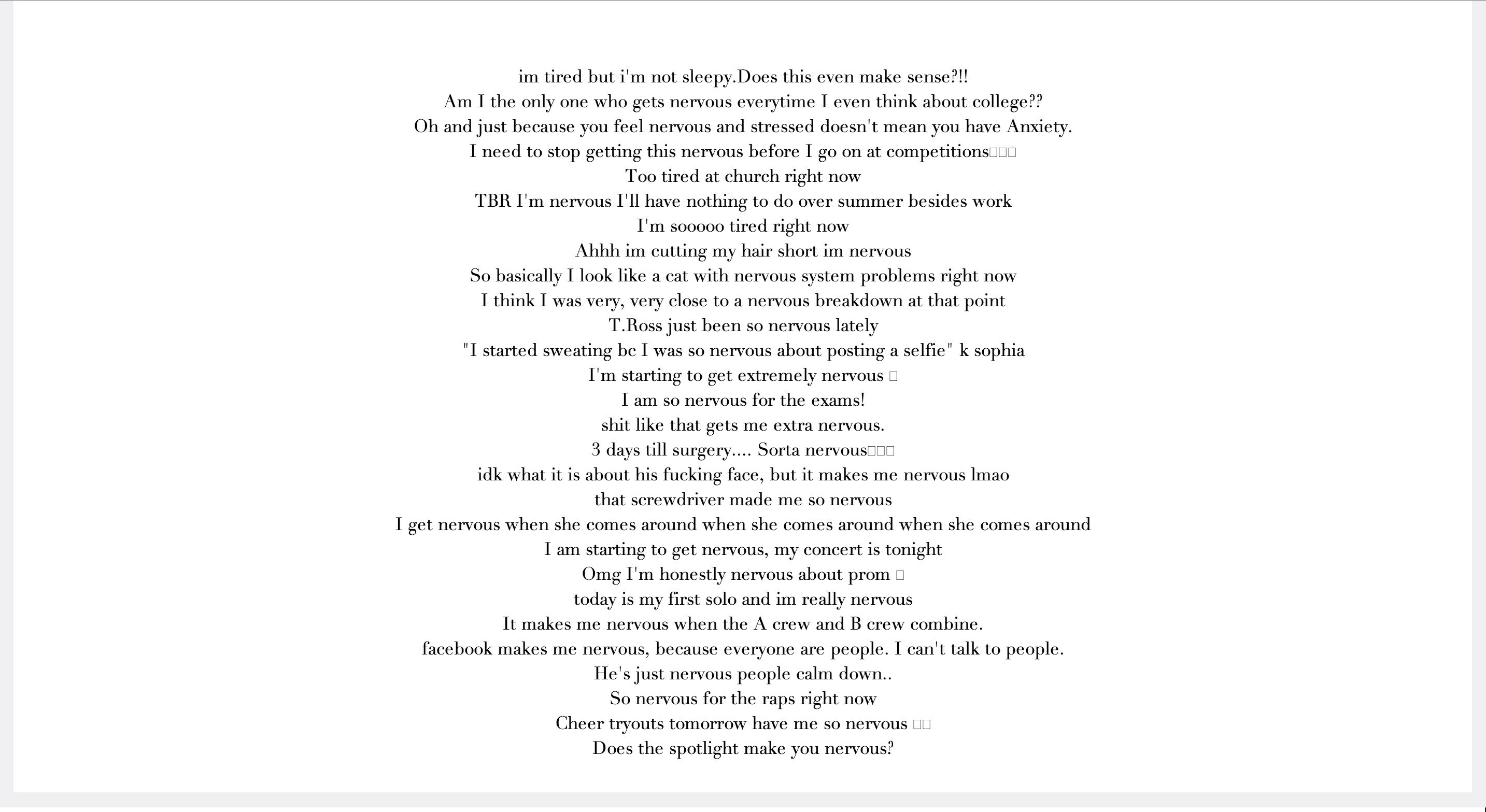

Twitter, on the other hand, is a firehose of raw information. Posts had to be parsed and filtered using natural language processing to find the tweets with the most meaningful content. Sentiment analysis was used to gather the most emotionally charged tweets.
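As an illustration of that filtering step, here is a minimal sketch of ranking tweets by emotional charge. It is not the project's actual code: the tiny word list, the scoring rule (average absolute valence per token) and the function names `emotionalCharge` and `mostCharged` are all assumptions made for the example, and no particular NLP library is used.

```javascript
// Hypothetical mini-lexicon of word valences (an assumption for this sketch;
// a real sentiment lexicon would hold thousands of entries).
const LEXICON = new Map([
  ["love", 3], ["joy", 2], ["hope", 2], ["happy", 2],
  ["pain", -2], ["death", -2], ["grief", -3], ["hate", -3],
]);

// Emotional charge = mean absolute valence over all tokens, so strongly
// positive and strongly negative tweets both score high.
function emotionalCharge(text) {
  const tokens = text.toLowerCase().match(/[a-z']+/g) || [];
  if (tokens.length === 0) return 0;
  const total = tokens.reduce(
    (sum, token) => sum + Math.abs(LEXICON.get(token) ?? 0),
    0
  );
  return total / tokens.length;
}

// Keep the n most charged tweets, dropping any with no emotional words.
function mostCharged(tweets, n) {
  return tweets
    .map((text) => ({ text, charge: emotionalCharge(text) }))
    .filter((entry) => entry.charge > 0)
    .sort((a, b) => b.charge - a.charge)
    .slice(0, n)
    .map((entry) => entry.text);
}
```

Taking the absolute value is a deliberate choice in this sketch: the aim is to surface tweets that are charged in either direction, not to separate positive from negative ones.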

Flickr data corresponds to a random stream of user-contributed images. In many cases, drawing a random image related to a certain topic and juxtaposing it with other content serendipitously creates entirely different stories.

Wikipedia articles have an air of authority: they are narrated by thousands of different authors and voices, converging into a single (although temporary) opinion. Compared and mixed with the other content, they provide a cohesive, descriptive tone to the narrative.

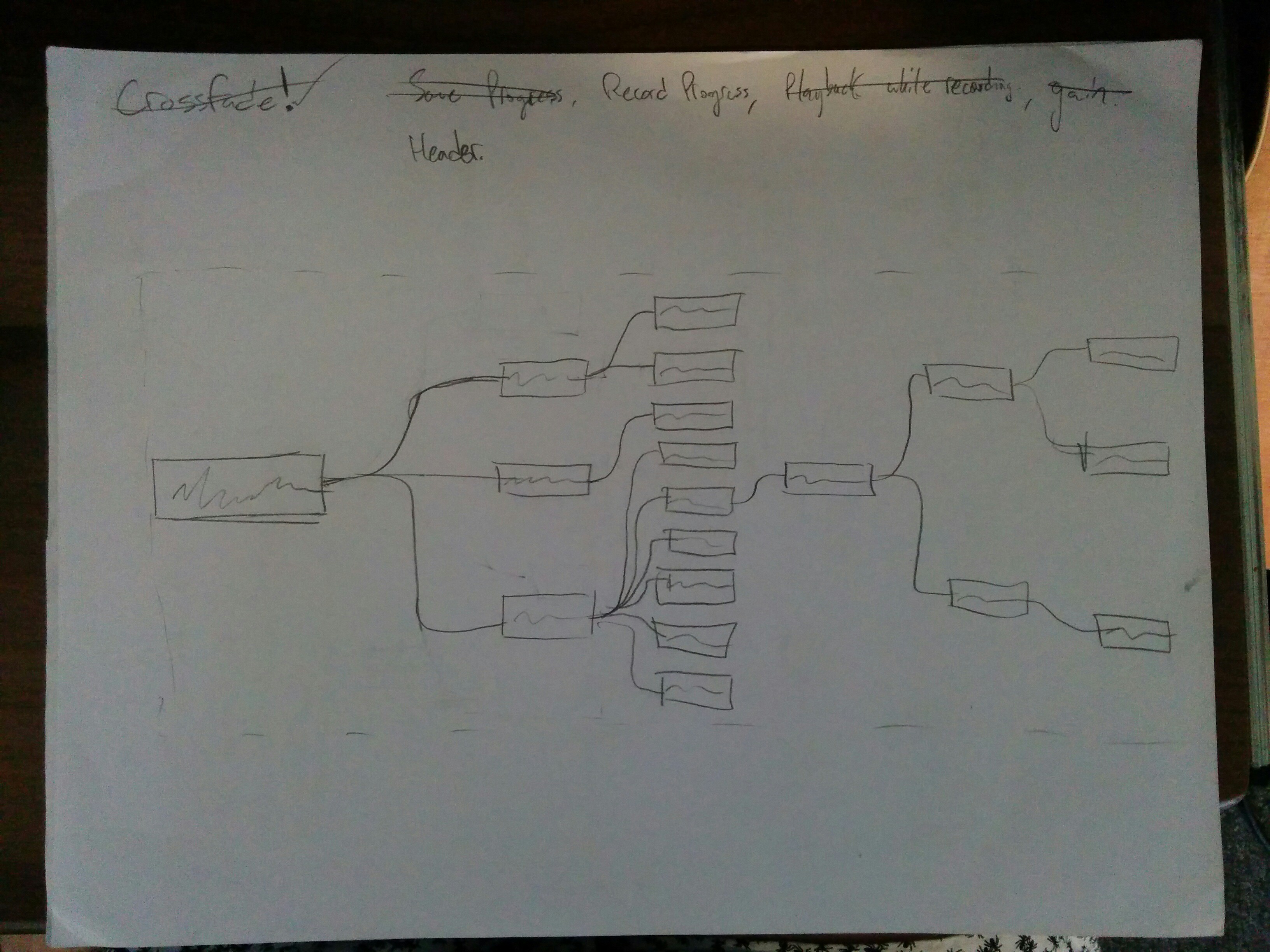

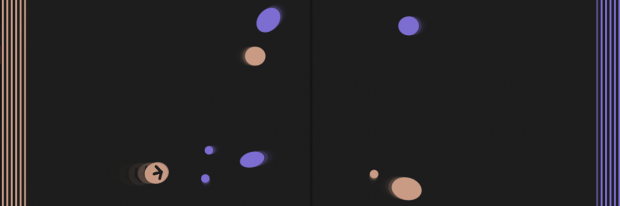

The system

The author is generated through API calls that gather, sort and sift through the Internet in order to get the newest, most complete answer to the question `What is life?`. By accessing the project’s website, a user sets this process in motion and generates both a unique author and a unique book each time. The book is timestamped with the date it was generated and dedicated to a random user (a random part) of The Internet. Hitting refresh makes this author disappear, as no book can be generated in the same way again.
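The assembly step described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the project's implementation: the names `pick` and `generateBook`, the shape of the `pool` object (topic mapped to already-collected passages) and the injectable `rng` and `now` parameters are hypothetical, and the API calls that would fill the pool are left out.

```javascript
// Pick a uniformly random element. `rng` is injectable (an assumption of
// this sketch) so that a run can be reproduced in tests.
function pick(items, rng = Math.random) {
  return items[Math.floor(rng() * items.length)];
}

// Build one book: a random passage per topic, a generation timestamp,
// and a dedication to a randomly chosen user.
function generateBook(pool, users, rng = Math.random, now = new Date()) {
  const chapters = Object.keys(pool).map((topic) => ({
    topic,
    passage: pick(pool[topic], rng),
  }));
  return {
    generatedAt: now.toISOString(),
    dedicatedTo: pick(users, rng),
    chapters,
  };
}
```

Because every call draws fresh random choices and a fresh timestamp, two requests are very unlikely to yield the same book, which is the property the text relies on.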

Technology used

- Flickr API

- Twitter Search API

- Mechanical Turk (Java command line tools)

- Wikipedia REST API

- Node.js

- npm ‘natural’ (NLP) module

- wkhtmltopdf

- Hosted on Heroku