Summary

This is a game about protecting your “Little”, a small car that follows you around, while running into your opponent’s “Little”. At your disposal is the ability to change the terrain at will: you can form hills and valleys in real time to aid yourself and thwart your opponent.

To download the game, contact me or Golan Levin. The file is too large for this server.

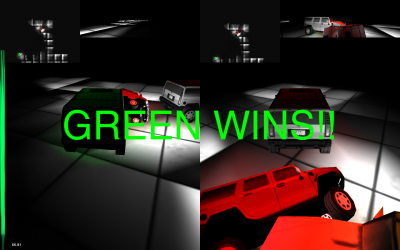

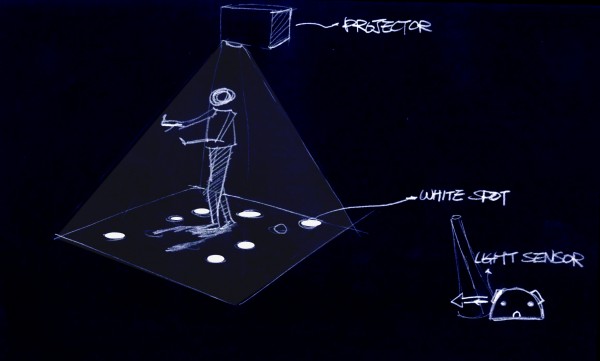

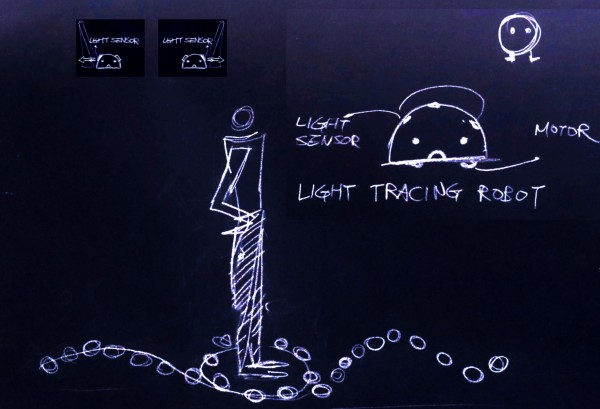

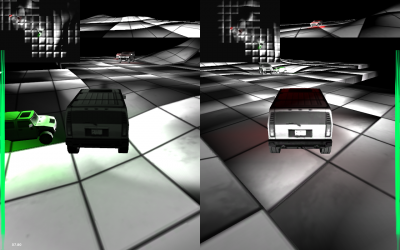

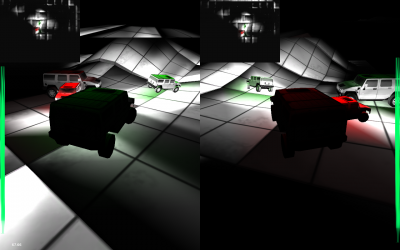

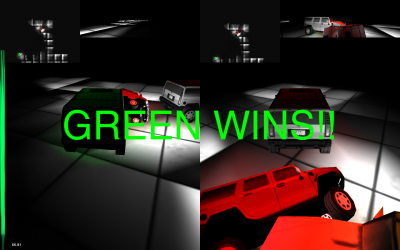

Some screenshots:

The players face off.

The battle ensues!

Green wins!

Concept

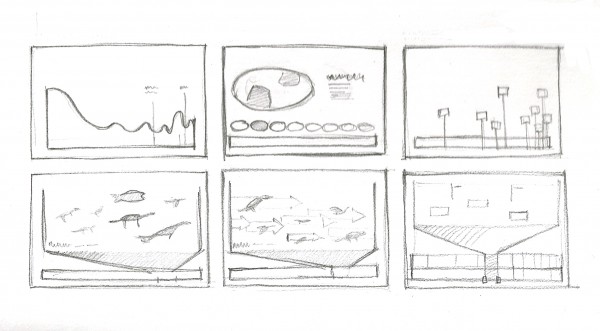

My idea went through several iterations, but I was satisfied with what I ended up with. I wanted to do something involving dynamic terrain generation. Initially, my idea was to write algorithms that simulated Earthlike terrain formation, creating an effect similar to watching a few seconds of time-lapse footage of a mountain forming over thousands of years. However, I was also interested in making this a game somehow, so I decided to add an element of driving through these mountains as they formed.

I decided the gameplay element would be to make as much progress as possible as the terrain gradually became harder and harder to cross. This idea was scrapped because I felt it would be frustrating to struggle through ever-growing terrain when the only possible outcome was losing by getting stuck. I also saw my original idea (forming terrain) getting lost as I attempted to answer the question of how to create a lifelike environment in only a few weeks.

After many more ideas passed through my mind, I settled on creating a game where the player controlled the formation of hills and valleys, an idea I had from the beginning. The game would involve two players driving around, each in charge of protecting a “baby car” while simultaneously crashing into the enemy’s baby car. This idea is only one of several that I could have implemented; listed here are some of the others and why I chose not to implement them:

A game where two players cooperate to ward off zombie-like little things that attack your castle in waves. Defense would consist of forming hills and valleys to lure them away from your castle to a hole, where they would fall to their doom. This idea was scrapped because I felt the game would be too difficult to implement quickly and well.

A game where one player attempts to escape the other player. The hunter can make hills and valleys to hinder and trap the quarry. I scrapped this idea because I wanted both players to be able to create hills, and I felt being the quarry would become boring.

A game where one player protects a treasure while the other player attempts to capture it. The game that I eventually created is, in essence, similar to this game, except that each player has something to protect, and ‘capturing’ is changed to ‘crashing into’. I made sure to add an element of collision and mild violence after I observed Xiaoyuan’s glee at running into my car during one of the checkpoint presentations.

Implementation

At the suggestion of one of my classmates, I started to use Unity, a game engine designed for making three-dimensional games, especially first-person shooters. Using Unity as a development environment has been a very smooth experience, and I would recommend it to anyone looking to rapidly prototype computer games that require advanced physics, good graphics, or simply a 3D environment.

I took a public-domain vehicle demo from Unity and began to familiarize myself with heightmaps and meshes, because I would be constructing all of the terrain procedurally from code. I also looked into terrain deformation and found a demo of mesh deformation, which proved to be somewhat similar to what I needed.

I decided the terrain would be an infinite expanse of grid that formed as you approached it – this would serve to give the world more of an otherworldly feel as well as reduce rendering costs. Also, if necessary, I could delete parts of the grid.
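As a rough illustration of a grid that forms as you approach it, the sketch below keeps a dictionary of tiles around a player position. It is written in plain Python rather than for Unity, and `TILE_SIZE`, `VIEW_RADIUS`, `tile_of`, `update_tiles`, and `make_tile` are all hypothetical names chosen for this example, not taken from the game's code.

```python
import math

TILE_SIZE = 10.0   # world units per tile edge (assumed value)
VIEW_RADIUS = 2    # tiles kept around the player in each direction (assumed value)

def tile_of(x, z):
    """Return the integer grid coordinates of the tile containing (x, z)."""
    return (math.floor(x / TILE_SIZE), math.floor(z / TILE_SIZE))

def update_tiles(tiles, player_x, player_z, make_tile):
    """Create missing tiles near the player and delete tiles that are far away.

    `tiles` maps (i, j) grid coordinates to whatever `make_tile` returns.
    """
    ci, cj = tile_of(player_x, player_z)
    wanted = {
        (i, j)
        for i in range(ci - VIEW_RADIUS, ci + VIEW_RADIUS + 1)
        for j in range(cj - VIEW_RADIUS, cj + VIEW_RADIUS + 1)
    }
    # Form new parts of the grid as the player approaches them.
    for key in wanted - set(tiles):
        tiles[key] = make_tile(key)
    # Optional step: drop parts of the grid that are no longer nearby.
    for key in set(tiles) - wanted:
        del tiles[key]
    return tiles
```

Calling `update_tiles` once per frame with the current vehicle position keeps the number of live tiles constant no matter how far the player drives.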

Then, I learned how meshes behave, and I began to create my own rectangular prism meshes from code, as these would be the basis for my terrain deformation. Once I had satisfactorily created these prisms, I looked into deforming them to make hills and valleys. The mesh deformation algorithm was essentially a function that added to the height of the mesh at the point of interest and changed the height of the surrounding points by progressively smaller amounts, so that it looked as if someone had stuck a ball under a rug.
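The "ball under a rug" effect can be sketched on a plain heightmap. This is only an approximation of the idea in Python, not the game's actual code: the cosine falloff, the list-of-lists heightmap, and the names `make_heightmap` and `deform` are assumptions made for illustration.

```python
import math

def make_heightmap(rows, cols):
    """Return a flat rows x cols grid of heights."""
    return [[0.0] * cols for _ in range(rows)]

def deform(heights, center_row, center_col, amount, radius):
    """Raise (or lower, if amount is negative) the grid around one point.

    The center moves by `amount`; neighbours move by less the farther away
    they are, reaching zero at `radius` grid cells (radius must be > 0).
    The cosine falloff used here is an assumed choice.
    """
    rows, cols = len(heights), len(heights[0])
    # Only visit the bounding box of the affected circle, not the whole grid.
    r0, r1 = max(0, center_row - radius), min(rows - 1, center_row + radius)
    c0, c1 = max(0, center_col - radius), min(cols - 1, center_col + radius)
    for r in range(r0, r1 + 1):
        for c in range(c0, c1 + 1):
            d = math.hypot(r - center_row, c - center_col)
            if d > radius:
                continue
            # Weight is 1 at the center and falls smoothly to 0 at the radius.
            weight = 0.5 * (1.0 + math.cos(math.pi * d / radius))
            heights[r][c] += amount * weight
```

A positive `amount` makes a hill and a negative one makes a valley; repeated calls at the same point accumulate, so holding a button down would keep growing the bump.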

I would then redraw the mesh and recalculate the collision detection. I am glad that I chose to use a grid from the outset, because I was able to apply a few optimizations that allowed the game to run at an acceptable rate on my computer. These include: only calculating the collision detection near the vehicles, updating the collision detection at a slower rate than the rendering of the meshes, and only calculating the deformation on a select part of the grid, rather than the entire playing surface.
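Two of these optimizations, refreshing collision data less often than rendering and only near the vehicles, might be organized along the following lines. This is a hypothetical Python sketch: the `CollisionScheduler` class, its parameters, and the `rebuild` callback (which stands in for whatever engine call refreshes a tile's collider) are assumptions, not the game's implementation.

```python
class CollisionScheduler:
    """Throttle collision rebuilds and restrict them to tiles near vehicles."""

    def __init__(self, interval_frames=5, near_radius=2):
        self.interval_frames = interval_frames  # rebuild every N rendered frames
        self.near_radius = near_radius          # in tiles, measured per axis
        self.dirty = set()                      # tiles deformed since last rebuild
        self.frame = 0

    def mark_dirty(self, tile):
        """Record that a tile's mesh changed and its collider is out of date."""
        self.dirty.add(tile)

    def _is_near(self, tile, vehicle_tiles):
        return any(
            max(abs(tile[0] - v[0]), abs(tile[1] - v[1])) <= self.near_radius
            for v in vehicle_tiles
        )

    def tick(self, vehicle_tiles, rebuild):
        """Call once per rendered frame; returns the tiles rebuilt this frame."""
        self.frame += 1
        if self.frame % self.interval_frames != 0:
            return []  # meshes still render every frame; colliders wait
        near = [t for t in sorted(self.dirty) if self._is_near(t, vehicle_tiles)]
        for tile in near:
            rebuild(tile)
            self.dirty.discard(tile)
        return near
```

Tiles that are dirty but far from every vehicle simply stay in the `dirty` set until a vehicle comes close, so no collision work is spent on terrain that nothing can touch yet.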

Future

I think the game could do with more polish. I also think there are many new possibilities for using terrain deformation as a gameplay mechanic – only one of which I have explored. Furthermore, even within the concept of terrain generation itself I have barely scratched the surface. I could create tunneling algorithms, or the ability to create crevasses.