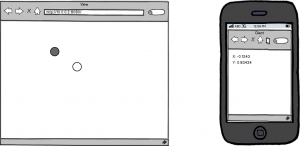

Abstract: Browser and iPhone Interaction Experiment

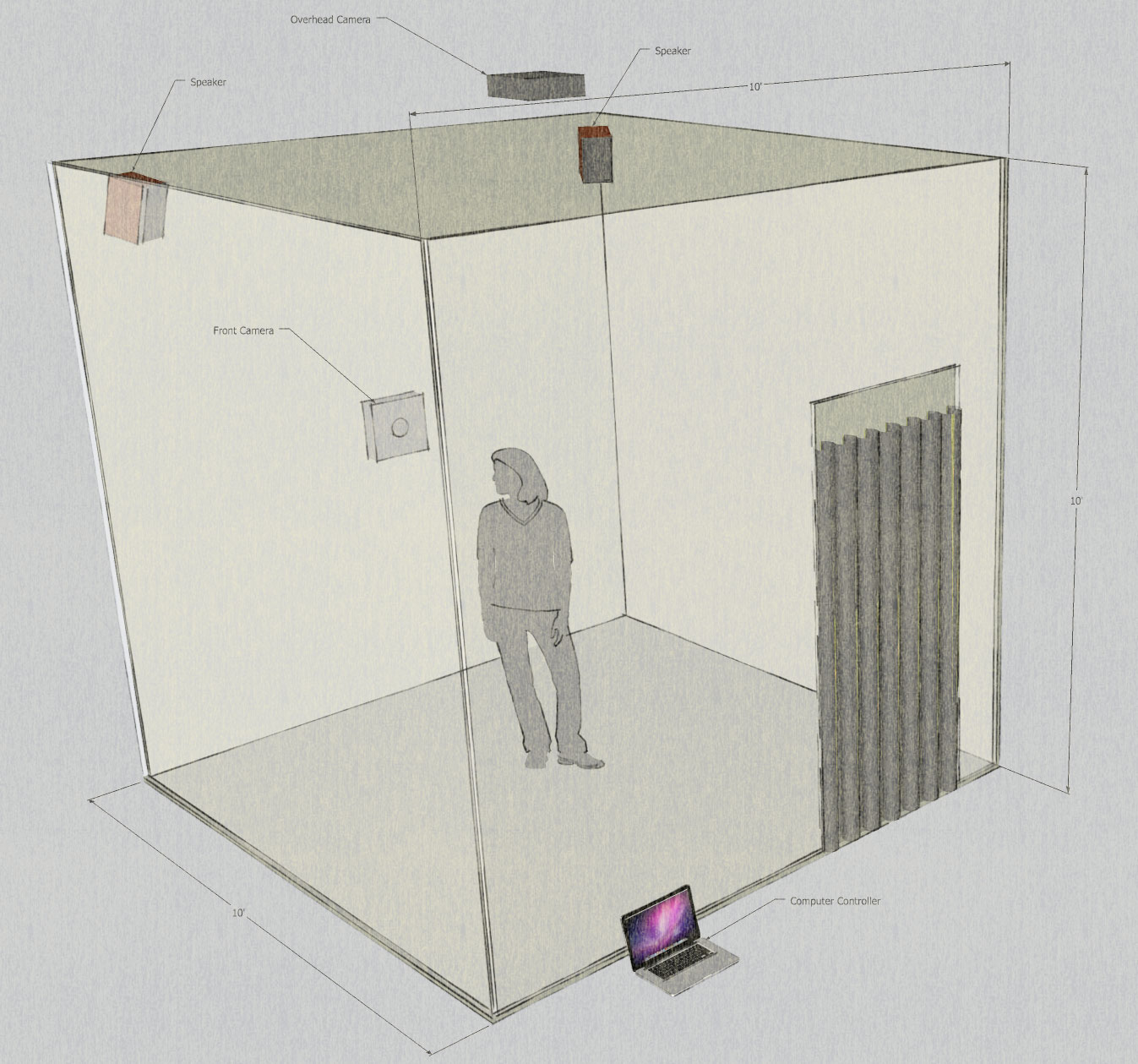

Background: Many interactive systems rely on dedicated sensors such as the Kinect, Leap Motion, or Wii. These sensors add cost and limit the context in which the system can be used: setups that scale to large spaces are expensive, while cheap devices only cover a small interaction area. Using ubiquitous devices such as smartphones or iPads as sensors is therefore a promising direction. Using the browser as the interaction medium also frees the system from the differences between mobile operating systems.

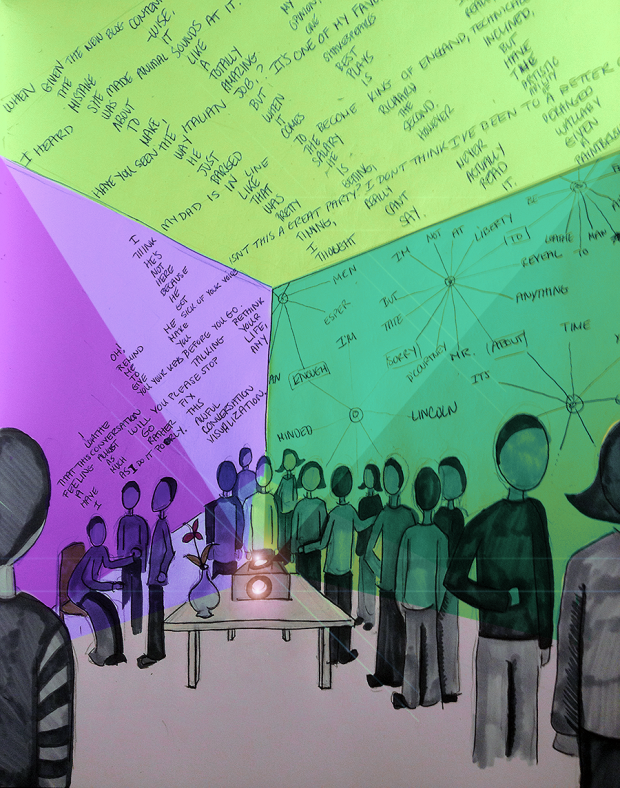

This project is inspired by Boundary Functions (1998) by Scott Snibbe, which explores the idea that every person in the real world has a physical relationship with others. I wanted to explore what the relationships between people look like in virtual space. The installation is intended for public spaces such as airports and train stations. People reach the Internet through their devices, so a person's identity is represented by the identity of their digital device. By interacting through their mobile devices, people gain a sense of their relationship to others (whether anonymous or not) in the same public space.

Verification & Communication

Verification is not implemented yet. A user accesses the system by visiting the server's IP address, and the page connects to the server over a WebSocket. When a device connects, the server creates a new ball for that user and identifies the user by the device ID.
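A minimal sketch of the "one ball per connected device" bookkeeping described above. It assumes each device is identified by a unique string ID (for example a socket.io `socket.id`); the `BallRegistry` class, its methods, and the ball fields are hypothetical names, not taken from the xBall repository.

```javascript
// Hypothetical registry: one ball per connected device.
// Device IDs are assumed to be unique strings (e.g. a socket.io socket.id).
class BallRegistry {
  constructor(width, height) {
    this.width = width;     // page width in pixels (assumed)
    this.height = height;   // page height in pixels (assumed)
    this.balls = new Map(); // deviceId -> ball state
  }

  // On connect: create a ball at the centre of the page.
  // Reconnecting with the same ID returns the existing ball.
  addDevice(deviceId) {
    if (!this.balls.has(deviceId)) {
      this.balls.set(deviceId, {
        id: deviceId,
        x: this.width / 2,
        y: this.height / 2,
        vx: 0,
        vy: 0,
      });
    }
    return this.balls.get(deviceId);
  }

  // On disconnect: drop the ball. Returns true if a ball was removed.
  removeDevice(deviceId) {
    return this.balls.delete(deviceId);
  }

  get(deviceId) {
    return this.balls.get(deviceId);
  }

  get size() {
    return this.balls.size;
  }
}

// Possible wiring with a socket.io server (not executed here):
// io.on('connection', (socket) => {
//   registry.addDevice(socket.id);
//   socket.on('disconnect', () => registry.removeDevice(socket.id));
// });
```

Keeping the ball state in a `Map` keyed by device ID makes connect and disconnect constant-time and keeps the registry independent of the transport, so it would work the same behind socket.io or now.js.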

Interaction

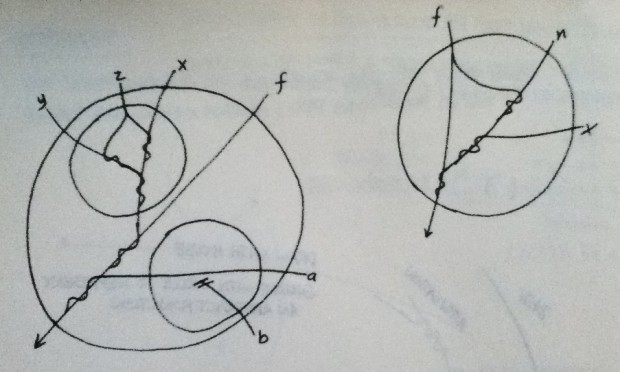

The browser on each mobile device reads the device's gravity and accelerometer data and sends it to the server. The server normalizes the x-axis and y-axis values for velocity and orientation and maps them to a position on the browser page.
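One way the server-side mapping could look, as a sketch rather than the project's actual code. It assumes readings arrive in m/s² (as `accelerationIncludingGravity` reports them), treats ±1 g as the useful tilt range, and uses a simple velocity-plus-friction model; `normalize`, `step`, `gain`, `friction`, `maxSpeed`, and `dt` are all hypothetical names and tuning values.

```javascript
const G = 9.81; // standard gravity in m/s^2

// Clamp a value into [min, max].
function clamp(value, min, max) {
  return Math.min(max, Math.max(min, value));
}

// Normalise a raw accelerometer reading to [-1, 1], treating +/- 1 g as full tilt.
function normalize(raw) {
  return clamp(raw / G, -1, 1);
}

// Advance one ball by one time step using the latest x/y readings.
// Assumption: screen y grows downward while the device y axis points up,
// so the y reading is negated. Axis signs can differ between browsers.
function step(ball, ax, ay, bounds, opts = {}) {
  const { gain = 600, friction = 0.9, maxSpeed = 400, dt = 1 / 30 } = opts;
  // Integrate tilt into velocity, damp it, and cap the speed (px/s).
  ball.vx = clamp((ball.vx + normalize(ax) * gain * dt) * friction, -maxSpeed, maxSpeed);
  ball.vy = clamp((ball.vy - normalize(ay) * gain * dt) * friction, -maxSpeed, maxSpeed);
  // Integrate velocity into position and keep the ball on the page.
  ball.x = clamp(ball.x + ball.vx * dt, 0, bounds.width);
  ball.y = clamp(ball.y + ball.vy * dt, 0, bounds.height);
  return ball;
}
```

Mapping tilt to acceleration rather than directly to position makes the ball roll as if on a tilted table, and the friction and speed cap keep noisy sensor readings from making it jitter or fly off.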

Tech

I use Node.js for the server, with now.js and socket.io for real-time interaction. The built-in accelerometer of the Apple client device serves as the sensor.
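A sketch of the client side, assuming the page loads the socket.io client so that a global `io` exists. It reads `accelerationIncludingGravity` from the standard `devicemotion` event and emits it over the socket. The event name `'motion'`, the message shape `{ x, y, t }`, and the helpers `toMotionMessage` and `throttle` are hypothetical; now.js is not used here.

```javascript
// Build the message a phone sends to the server from a DeviceMotionEvent-like object.
// accelerationIncludingGravity includes gravity, so it also encodes device tilt.
function toMotionMessage(event) {
  const a = event.accelerationIncludingGravity || {};
  return {
    x: a.x ?? 0, // can be null when the sensor is unavailable
    y: a.y ?? 0,
    t: Date.now(), // client timestamp in ms
  };
}

// Let at most roughly `hz` calls per second through, so the socket is not flooded.
function throttle(fn, hz) {
  const interval = 1000 / hz;
  let last = -Infinity;
  return (...args) => {
    const now = Date.now();
    if (now - last >= interval) {
      last = now;
      fn(...args);
    }
  };
}

// Browser-only wiring; skipped when this file runs under Node.
if (typeof window !== 'undefined' && typeof io !== 'undefined') {
  const socket = io(); // connects back to the server that served the page
  const send = throttle((e) => socket.emit('motion', toMotionMessage(e)), 30);
  window.addEventListener('devicemotion', send);
}
```

On iOS 13 and later, Safari only delivers `devicemotion` events after the page calls `DeviceMotionEvent.requestPermission()` from a user gesture such as a button tap, so a real deployment would likely need a "start" button.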

GitHub repo: https://github.com/hhua/xBall