For the love of Samsung

Background

Samsung is a multinational conglomerate with its headquarters in Seoul, South Korea. Samsung holds financial, political and cultural supremacy in South Korea and is rapidly expanding globally. It comprises industrial subsidiaries as well as businesses in entertainment, commodities, food markets and much more. It is the archetypal Chaebol: a single family in control of a matrix of corporations and enterprises.

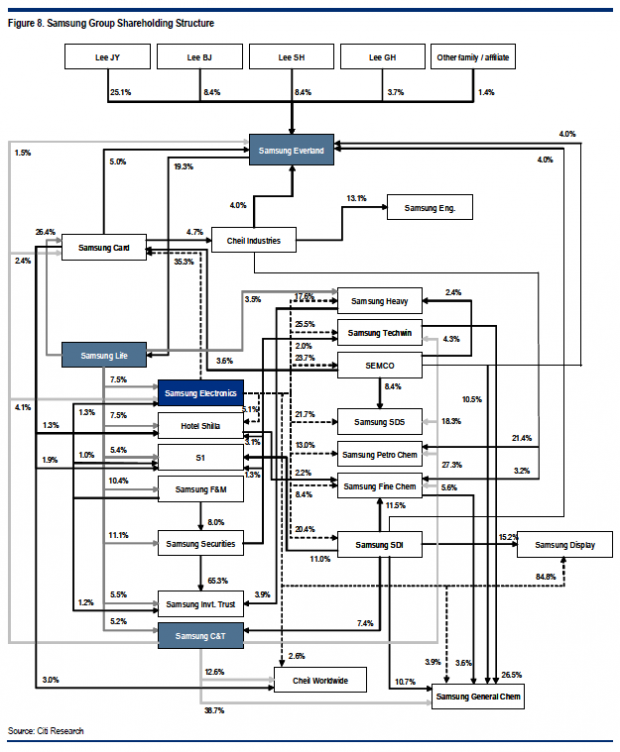

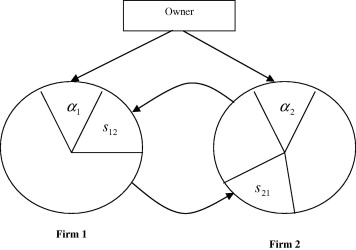

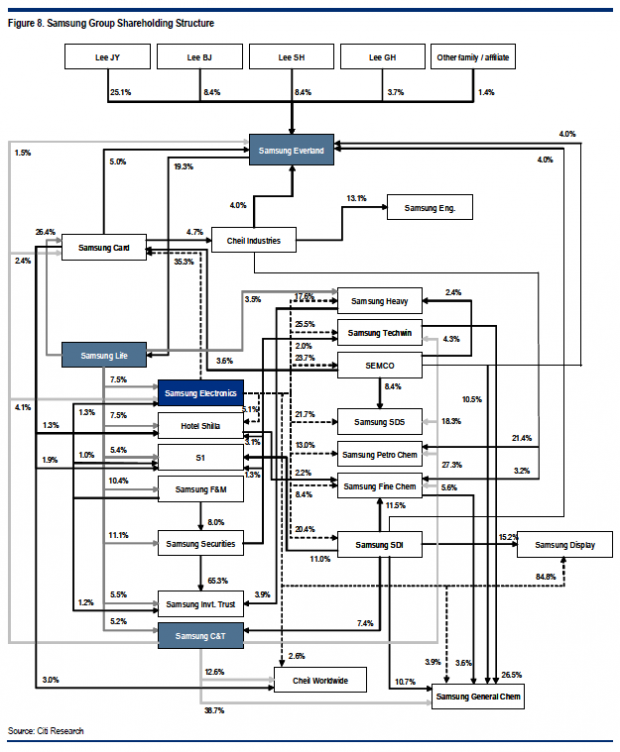

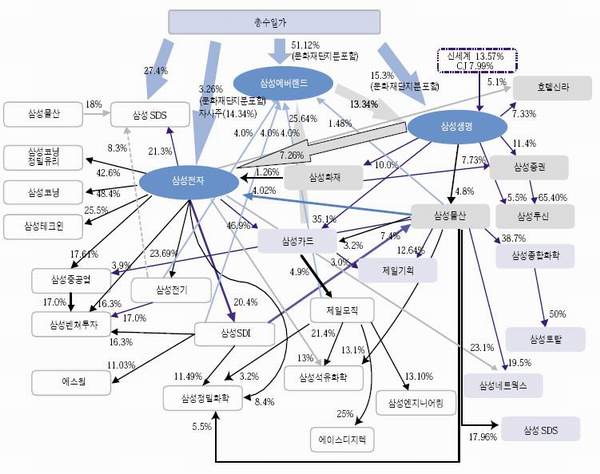

Corporate group shareholding structure.

[Image 1] This diagram, created by Citi Research, shows much of the assets and profit flowing into Samsung Everland, a company that manages a Samsung theme park on the outskirts of Seoul, among other services. It was at the heart of a controversy over the illegal inheritance of wealth within the family, which resulted in a nine-year trial between 2000 and 2009.

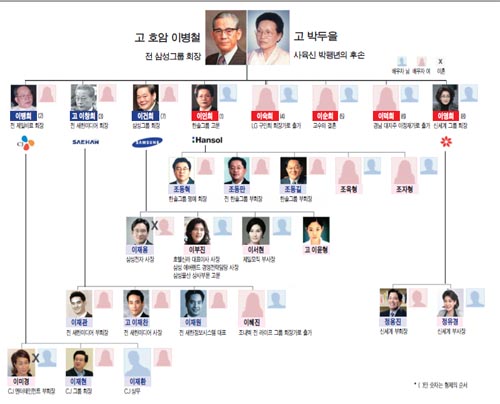

An illustrated family tree of Samsung owners.

While Samsung is cherished as the economic backbone and pride of the nation, it also attracts criticism for its market monopoly and the resulting inflation, for the way wealth and power are inherited, and for its ties to politics through arranged marriages. The family members attract celebrity-like attention, as well as notoriety for their lavish lifestyle and their significant role in arts, culture and sports. [Image 2] They are also a subject of popular imagination, often romanticized in K-pop drama series about arranged marriages and scandals, and of course, they are the epitome of Gangnam Style.

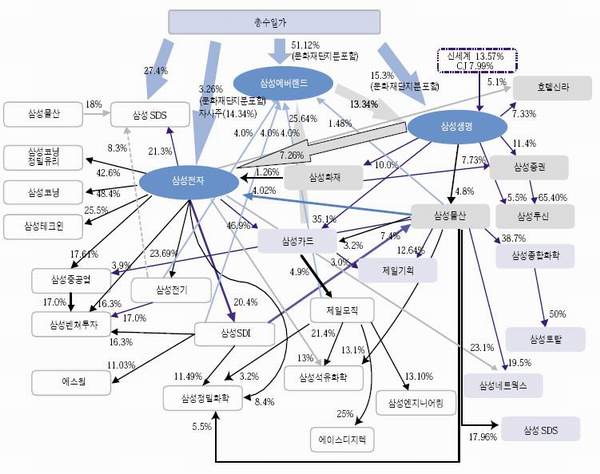

Illustration of C.E.O family and subsidiaries.

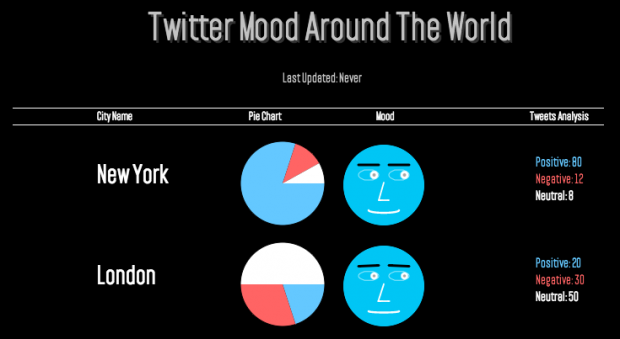

[Image 3] shows relations similar to those in Image 1, but with a focus on the relationship between Everland, Samsung Life (insurance), and Samsung Electronics. It was found in an online newspaper article reporting on the connections between the various subsidiaries and the Chaebol family.

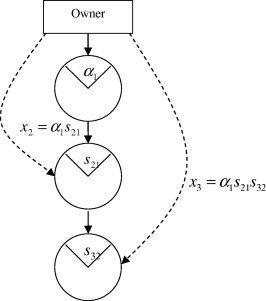

[Image 4] shows the corporate shareholding structure with subsidiaries in different colors. Download this PDF samsung

One recent issue with the company is the passing of power from Lee Gunhee to his son, Lee Jae-yong, as vice-chairman, and to his daughter, Lee Boo-jin, as head of Everland and Hotel Shilla. Lee Gunhee and the Samsung heirs were accused of stealing from Lee’s father’s trust, and a series of controversies followed when he was “found guilty of embezzlement and tax evasion in Samsung’s infamous slush funds scandal.”

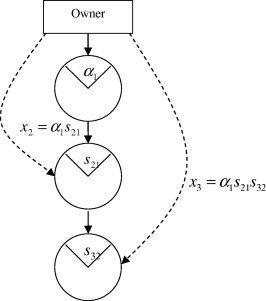

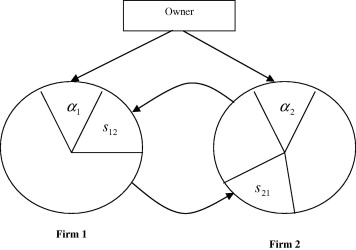

[Image 4/5] The two images above illustrate Chaebol shareholding structures: pyramidal on the left and circular on the right. Source. These tricks and strategies have been a political and social controversy in South Korea, both because of their tremendous scale and because politicians continually forgive the misbehavior. This helps explain South Koreans’ shared ‘affective’ relation to Samsung.
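The difference between the two structures can be stated in graph terms: in a pyramidal structure ownership only flows downward, while in a circular structure some chain of holdings loops back to a company that appears earlier in the chain. The following is a minimal sketch of that distinction, not a model of any real company. The names "Family", "A", "B" and "C" and the function `find_cycle` are hypothetical placeholders.

```python
from typing import Dict, List

WHITE, GRAY, BLACK = 0, 1, 2  # unvisited, on the current path, finished


def find_cycle(holdings: Dict[str, List[str]]) -> List[str]:
    """Return one ownership loop as a list of names, or [] if there is none.

    `holdings` maps each owner to the companies it holds shares in.
    """
    color: Dict[str, int] = {}
    path: List[str] = []

    def visit(node: str) -> List[str]:
        color[node] = GRAY
        path.append(node)
        for child in holdings.get(node, []):
            state = color.get(child, WHITE)
            if state == GRAY:
                # child is already on the current path: a circular holding
                return path[path.index(child):] + [child]
            if state == WHITE:
                found = visit(child)
                if found:
                    return found
        path.pop()
        color[node] = BLACK
        return []

    for start in holdings:
        if color.get(start, WHITE) == WHITE:
            found = visit(start)
            if found:
                return found
    return []


# Placeholder structures (hypothetical, not real shareholding data).
pyramidal = {"Family": ["A"], "A": ["B", "C"], "B": [], "C": []}
circular = {"Family": ["A"], "A": ["B"], "B": ["C"], "C": ["A"]}

print(find_cycle(pyramidal))
print(find_cycle(circular))
```

A depth-first search is used here only because it is the simplest way to report the loop itself, which is the part worth drawing.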

[Image 5] This image illustrates the familial relationships between different Chaebols, the media and press, and politics. Samsung is the blue terrain that intersects with most of the other conglomerates.

When the power shift is complete, Lee junior will be the third in the Lee family to become ‘the emperor’ of Samsung. There is no doubt that this inheritance of power resembles that of the family north of the border.

+ + +

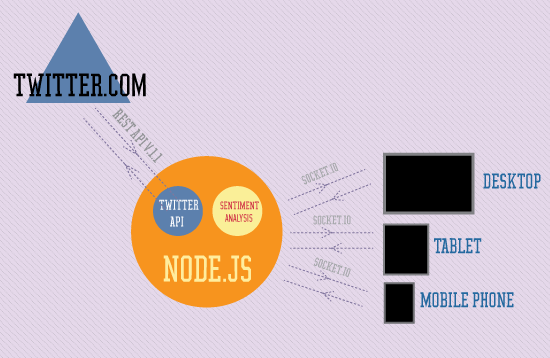

Project Idea

Inspired by Golan’s comment (from the lecture) to work with data that means something to me, I’d like to search for public data on Samsung, as well as other private and speculative data on its shareholding structure. The goal is to create a simple and elegant visualization of the network between the subsidiaries and the Chaebol family. I appreciate the aesthetics of Mark Lombardi’s simple drawings and research, and the technical achievement of They Rule. While the illustrations I collected for the proposal are interesting, they are largely based on subjective decisions and have been influenced by their makers’ political intentions. I’d like to create an objective view of the network that reflects the complexities of the corporate structure.

Technical tasks

1. Identify data. Collect and parse data on the corporate shareholding structure, translate the necessary information and create a set of CSV files that can be used for visualization. I did a brief search online and I fear there might not be public data available as an Excel or txt file. I might need to handcraft the data from annual reports, or work with other available data on Samsung.

2. Create a two-dimensional visualization of the selected data with Processing or openFrameworks. I will need to learn to use various techniques and libraries. The priority is to create systems that can process various kinds of data, because each subsidiary and sister company may have a different relation to the parent company and the family. The goal is not to create an aesthetically pleasing interface, but to become familiar with working with complex and incomplete sets of data. This is the technically challenging part of the project, so I will make a separate post as I make progress.

3. Translate the visualized data into another form. This is where aesthetic interest plays an important role. I’m thinking of using the data to generate a three-dimensional form to 3D print, via OpenSCAD or Grasshopper with Rhino. I’m new to both platforms, so it will take some time to develop. I might call for collaborators on phase 3 if that makes it more interesting.
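As a rough sketch of how tasks 1 and 2 might fit together, the snippet below reads a small CSV of shareholding links and builds a graph that a drawing program could consume. Everything specific here is an assumption: the column names `owner`, `owned` and `percent`, the helper names `load_edges` and `build_graph`, and the placeholder rows, which are made up and are not real shareholding figures. A blank `percent` stands in for the incomplete data mentioned in task 2.

```python
import csv
import io
from collections import defaultdict

# Placeholder rows only (hypothetical names and numbers).
SAMPLE_CSV = """owner,owned,percent
Family,Company A,25.0
Company A,Company B,19.3
Company B,Company C,
Company C,Company A,7.5
"""


def load_edges(text):
    """Parse CSV text into (owner, owned, percent) tuples.

    A missing percentage is kept as None rather than guessed.
    """
    edges = []
    for row in csv.DictReader(io.StringIO(text)):
        raw = (row.get("percent") or "").strip()
        percent = float(raw) if raw else None
        edges.append((row["owner"].strip(), row["owned"].strip(), percent))
    return edges


def build_graph(edges):
    """Group edges by owner: {owner: [(owned, percent), ...]}."""
    graph = defaultdict(list)
    for owner, owned, percent in edges:
        graph[owner].append((owned, percent))
    return dict(graph)


edges = load_edges(SAMPLE_CSV)
graph = build_graph(edges)

# Links whose stake is unknown, so they can be drawn differently later.
unknown = [(owner, owned) for owner, owned, percent in edges if percent is None]

for owner, links in graph.items():
    print(owner, "->", links)
print("unknown stakes:", unknown)
```

Keeping unknown stakes as `None` instead of dropping the row means the link still appears in the network, which matters when the data has to be handcrafted from reports.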

+ + +

Pardon me, this assignment is a month late. I’ve been super busy organizing workshops and exhibitions in Seoul. Check out some hackers and media artists we met for Demo-Day Seoul. Now I’m back in NY, and will continue to follow this class. I’m impressed and encouraged by the other students’ work! The time frame for this assignment will be about three weeks from now, so I hope to make visible progress by March 23rd, though I may continue to work on it afterwards.

thanks!

ty