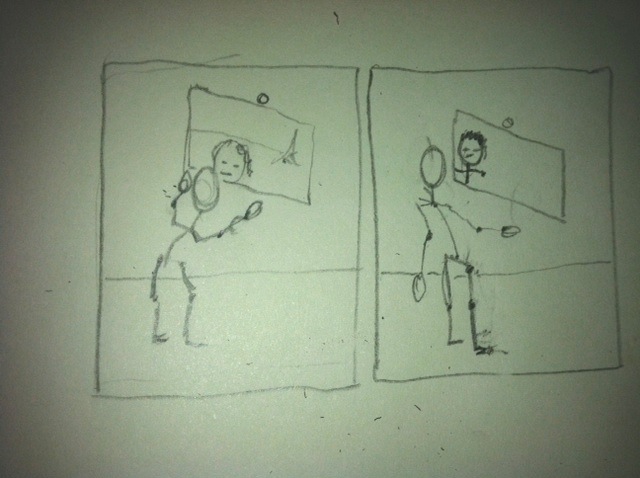

For our Capstone Project, Kyna and I are continuing to collaborate on our mobile game Small Bones. As such, for this Looking Outwards I tried out some currently popular mobile runner games:

1) Jetpack Joyride by Halfbrick

As the name implies, this game is an infinite runner in which the character you control wears a jetpack. The player makes the jetpack hover in mid-air by tapping and holding a finger on the screen. The purpose of hovering is three-fold: 1) to avoid obstacles, 2) to collect coins, and 3) to gain power-ups. Each power-up is a different vehicle with different capabilities, though each is still controlled by tapping or by tapping and holding. On the plus side, this game has a simple but clear premise built on a single mechanic that is intuitive and cohesive with the theme. The game is very easy to learn, even without a tutorial. I think this is due to the simplicity of the mechanic and the player’s intuitive notion that to make a jetpack fly you press and hold a button. I also think that the graphics are very well done, though I’m not as into the “cutesy” style that seems to dominate mobile games, in particular when it comes to depicting humans. In terms of negatives, the storyline is very unclear. If I had not seen the above video, I would not understand that the character is a typical 9-to-5 American worker who is unhappy with his life and decides to steal a jetpack and go on a joyride. In addition, although the different power-up vehicles are creative and give the gameplay more character, they seem to take away from the storyline and the main premise of stealing a jetpack. Also, like a lot of mobile runner games, there’s this idea of collecting coins to buy items outside of the actual gameplay to be used in gameplay. That breaks the player’s suspension of disbelief, which I’m not such a fan of.

2) MegaRun by getset

MegaRun is also a runner, but it is broken up into levels (the direction Small Bones is currently heading). Again, there is a simple jump mechanic: tap to jump, and hold to jump higher. This time, if you collect a power-up it is activated automatically and stays active until another power-up is collected, an enemy is run into, or the power-up’s timer runs out. This game too uses “cutesy” graphics, but I think it works better here because the characters and the world are meant to be cartoonish and not resemble the “real” world. Furthermore, the power-ups in this game make more sense than those in Jetpack Joyride because they actually make finishing the level easier. As I said, in Jetpack Joyride the main purpose of the power-ups seems to be just making the gameplay more interesting. Also, the cohesiveness of the game’s narrative extends to those coins I hate so much. For one, the storyline is based on the character trying to regain his riches, as seen in the above trailer (though, again, without the trailer this would not be immediately obvious). Secondly, collecting different types of coins helps the character run faster, thereby helping the player complete the level. On the negative side, the levels are used purely to separate out difficulty; I wish there were more storyline reveals across the different levels.

3) Temple Run and Temple Run 2 by Imangi Studios

(sorry for such long videos)

This is actually one of my favorite runner games. This game uses a few variations on one mechanic, the swipe, to do a few different movements. Sliding your finger up makes the character jump, sliding down makes the character slide, and sliding from one side to the other makes the character turn. It also uses the accelerometer to let the character lean his run to one side of the path or the other. All of these mechanics are simple yet intuitive and add to the sense of depth in the 3D world. Although I am partial to 2D games, I happen to really like the aesthetics of Temple Run, and even more so of Temple Run 2, and I think they really enhance the storyline of the game. As with the first two games, the storyline is somewhat vague and implicit, but here it is less bothersome. The opening sequence of scary gorilla-like monsters chasing you, along with the graphics, implies that you need to run as fast as you can to safety, and that gives the player enough agency to feel engaged with the premise. One of the best features of this game is the tutorial. It does a good job of teaching you the mechanics of the game one at a time with a combination of world obstacles and text overlay. It shows you different obstacles that call for different mechanics and lets you die if you make a mistake, resetting you to the part of the tutorial at which you died. I also like that Temple Run incorporates coin collection more into gameplay. Although there is no reason to collect coins in terms of storyline, the placement of the trails of coins suggests to the player the path and mechanics they may want to use at that particular time.
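To make the swipe mapping concrete, here is a rough sketch of how one mechanic could drive several movements. This is only my guess at the logic, not how Temple Run actually implements it: the function name `swipe_action`, the action names, and the 30-pixel threshold are all made up for illustration, and I am assuming screen coordinates where y grows downward.

```python
def swipe_action(start, end, min_distance=30.0):
    """Map one swipe to an action name, or None if the finger barely moved.

    start and end are (x, y) screen positions. Assumes y grows downward,
    as on most mobile screens. min_distance is a hypothetical threshold.
    """
    dx = end[0] - start[0]
    dy = end[1] - start[1]

    # Ignore tiny movements so an accidental touch is not read as a swipe.
    if max(abs(dx), abs(dy)) < min_distance:
        return None

    # The dominant axis decides between turning and jumping/sliding.
    if abs(dx) > abs(dy):
        return "turn_right" if dx > 0 else "turn_left"
    return "slide" if dy > 0 else "jump"


if __name__ == "__main__":
    print(swipe_action((100, 300), (100, 200)))  # finger moved up the screen
    print(swipe_action((100, 100), (200, 110)))  # finger moved mostly right
```

Picking the dominant axis means a slightly diagonal swipe still resolves to a single action, which is probably part of why the controls feel forgiving.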

The two versions of the game are pretty similar but have a couple of key differences. Firstly, the first version is at a constant height and the world has a purely orthogonal layout. The second version allows for variation in height and in curvature of the path. Although I like the variation in height, the curvature distracts from the mechanics, in my opinion, because it makes it less clear when you need to swipe to turn. The second difference is that in the second version, there is a double-tap to enable a power-up. One potential issue we were running into with Small Bones was differentiating between drawing a path and enabling a power-up, the first of which was to be a tap and drag and the second a tap and release. We could use a double-tap to better distinguish the two.
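As a rough sketch of that idea, the snippet below treats any touch that moves far enough as a path drawing, and two quick stationary taps in a row as a power-up. Everything here is an assumption for illustration: the `Touch` record, the gesture names, and both thresholds are hypothetical and would need tuning on a real device, and none of it is taken from the actual Small Bones code.

```python
from dataclasses import dataclass
from math import hypot
from typing import List, Tuple

# Hypothetical thresholds; real values would need tuning on a device.
DRAG_MIN_DISTANCE = 20.0  # pixels a finger must travel to count as a drag
DOUBLE_TAP_MAX_GAP = 0.3  # seconds allowed between the two taps


@dataclass
class Touch:
    """One finger-down to finger-up contact (illustrative structure)."""
    down_time: float
    up_time: float
    down_pos: Tuple[float, float]
    up_pos: Tuple[float, float]


def is_drag(touch: Touch) -> bool:
    dx = touch.up_pos[0] - touch.down_pos[0]
    dy = touch.up_pos[1] - touch.down_pos[1]
    return hypot(dx, dy) >= DRAG_MIN_DISTANCE


def classify(touches: List[Touch]) -> List[str]:
    """Turn a time-ordered list of touches into gesture names."""
    gestures = []
    i = 0
    while i < len(touches):
        current = touches[i]
        if is_drag(current):
            gestures.append("draw_path")
            i += 1
            continue
        nxt = touches[i + 1] if i + 1 < len(touches) else None
        quick_second_tap = (
            nxt is not None
            and not is_drag(nxt)
            and nxt.down_time - current.up_time <= DOUBLE_TAP_MAX_GAP
        )
        if quick_second_tap:
            gestures.append("power_up")  # two taps consumed as one gesture
            i += 2
        else:
            gestures.append("tap")  # a lone tap does nothing special here
            i += 1
    return gestures
```

One trade-off with this approach is that a single tap can only be confirmed after the double-tap window has passed, so anything bound to a lone tap would feel slightly delayed.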