‘Imisphyx IV’ is a cursory exploration of how we might make novels and other fictional stories interactive, non-chronological, and perspective-dependent.

This is the fourth iteration of ‘Imisphyx’: an invented word that, in Greek, would mean something close to ‘fake pulse’. The first version is the original manuscript, which is also a work in progress. ‘Imisphyx II’ is a choose-your-own-adventure vignette set in the same universe, written in Twine (an HTML-based platform for branching stories). If you’re interested, you can read it right here. ‘Imisphyx III’ was an object-oriented telling of the tale, based on a set of Polaroids of pieces of police evidence and the associated evidence sheets filled out by one of the novel’s antagonists. You can read more about that version by heading over here and scrolling down a bit.

At its core, ‘Imisphyx’ tells a story about ~ten characters living in a universe where a chemical extract has been developed that is capable of amplifying ‘belief’: it can cause irrational zealotry or utter despondency in an otherwise stable person in a matter of hours. The weird thing, though, is that a story is only ever what somebody believes happens. So, depending on which characters you choose to believe as a reader, the story changes radically.

(If I were to try to explain the premise of the ‘Imisphyx’ story in its entirety, suffice it to say I would be writing this blog post for the next 11 years, because that’s how long the concept behind ‘Imisphyx’ has been percolating in my head. Yeah… it’s that convoluted… er… I mean… detailed.)

Turns out it’s really difficult to write a novel that morphs as it’s told, particularly when you’re limited to pen and paper. So that’s why I’ve turned to other methods of storytelling.

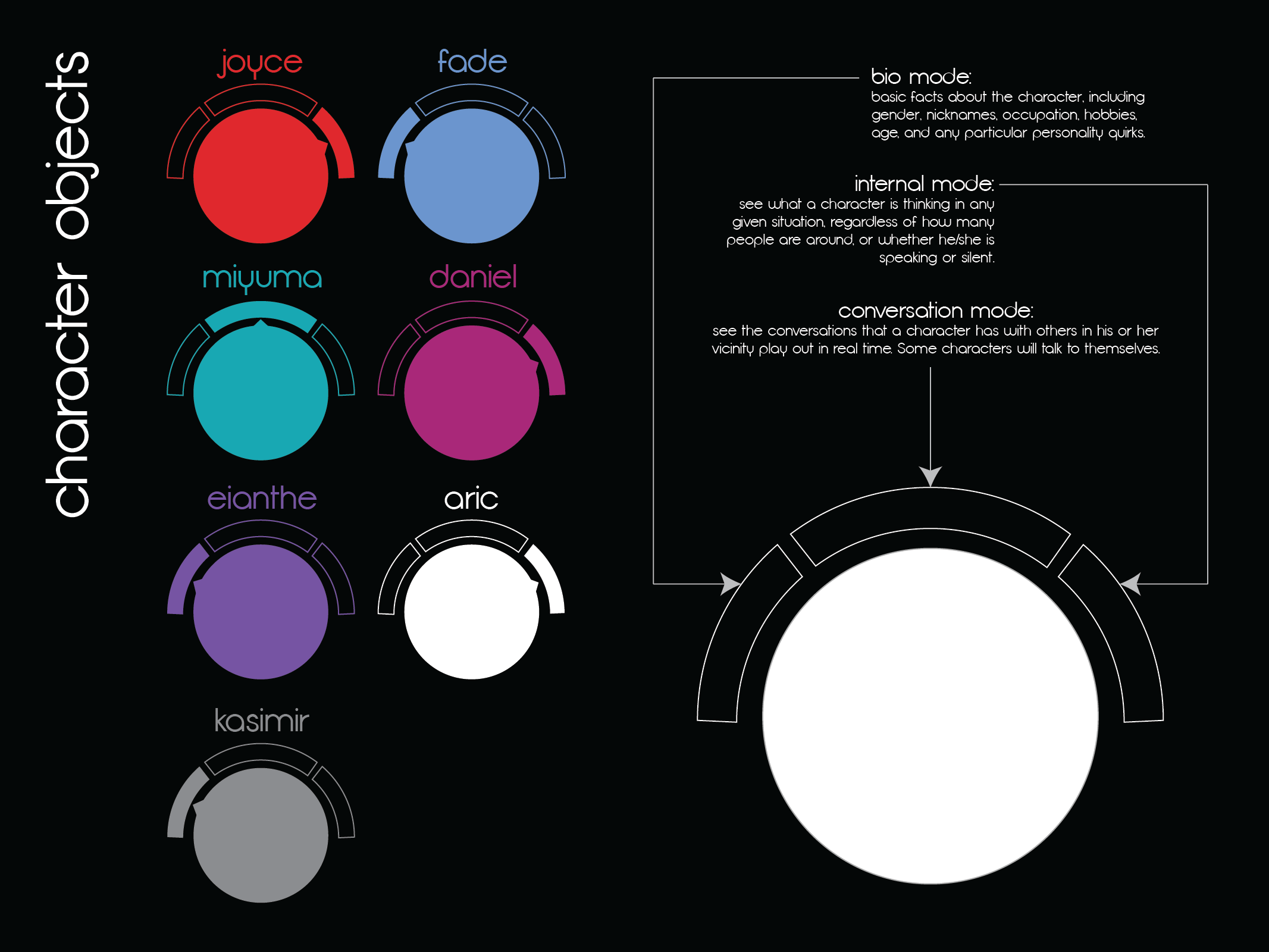

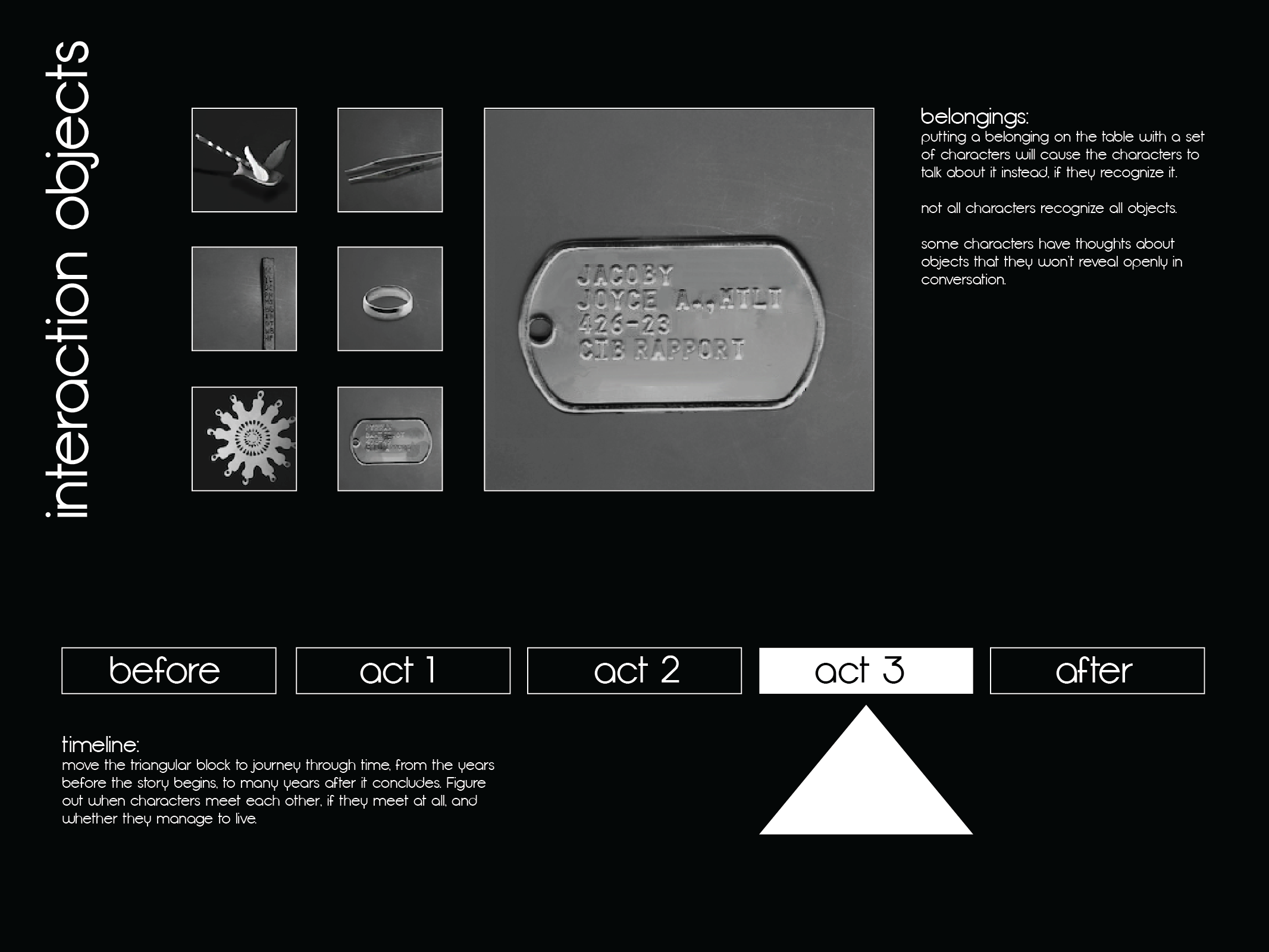

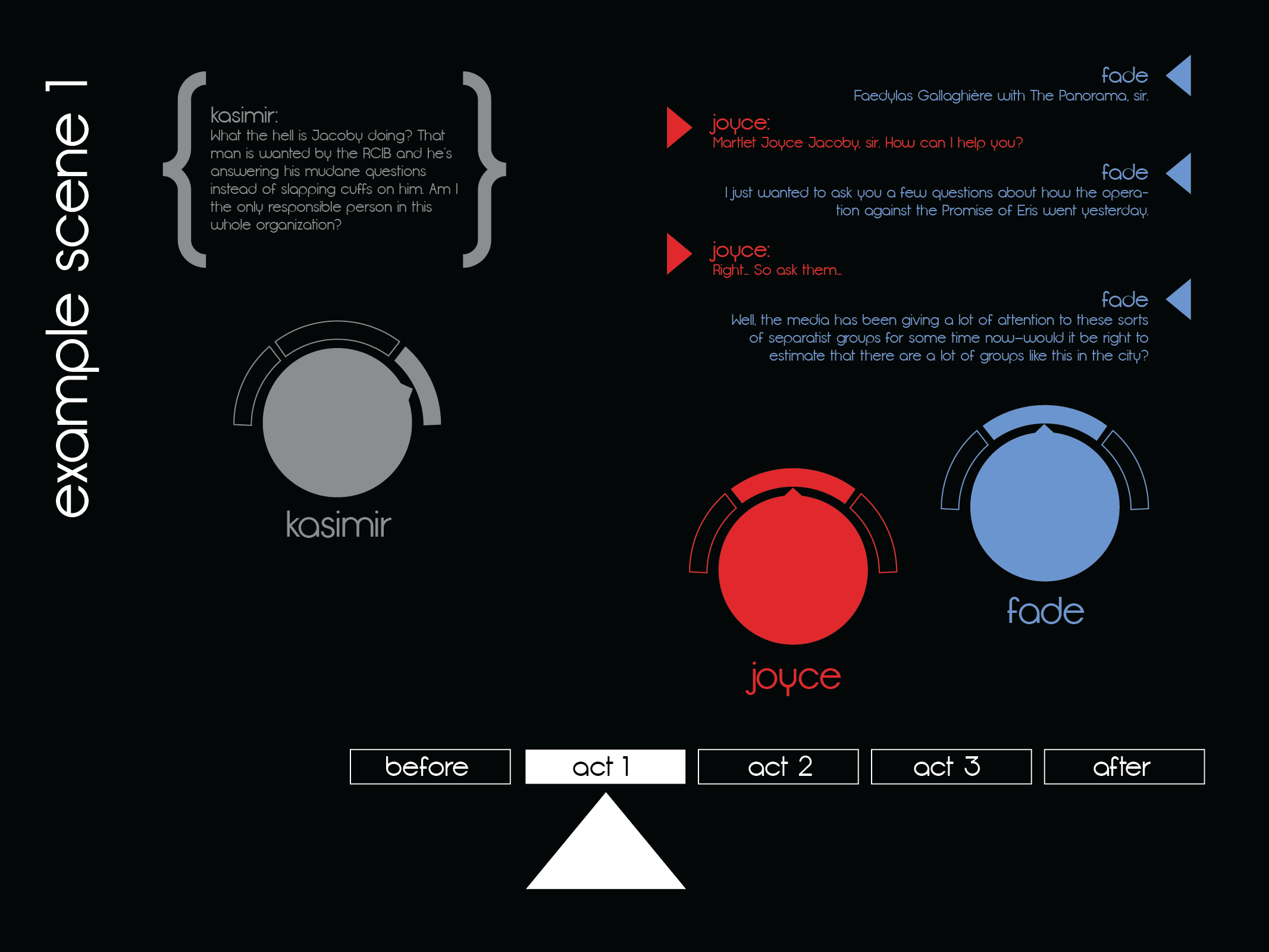

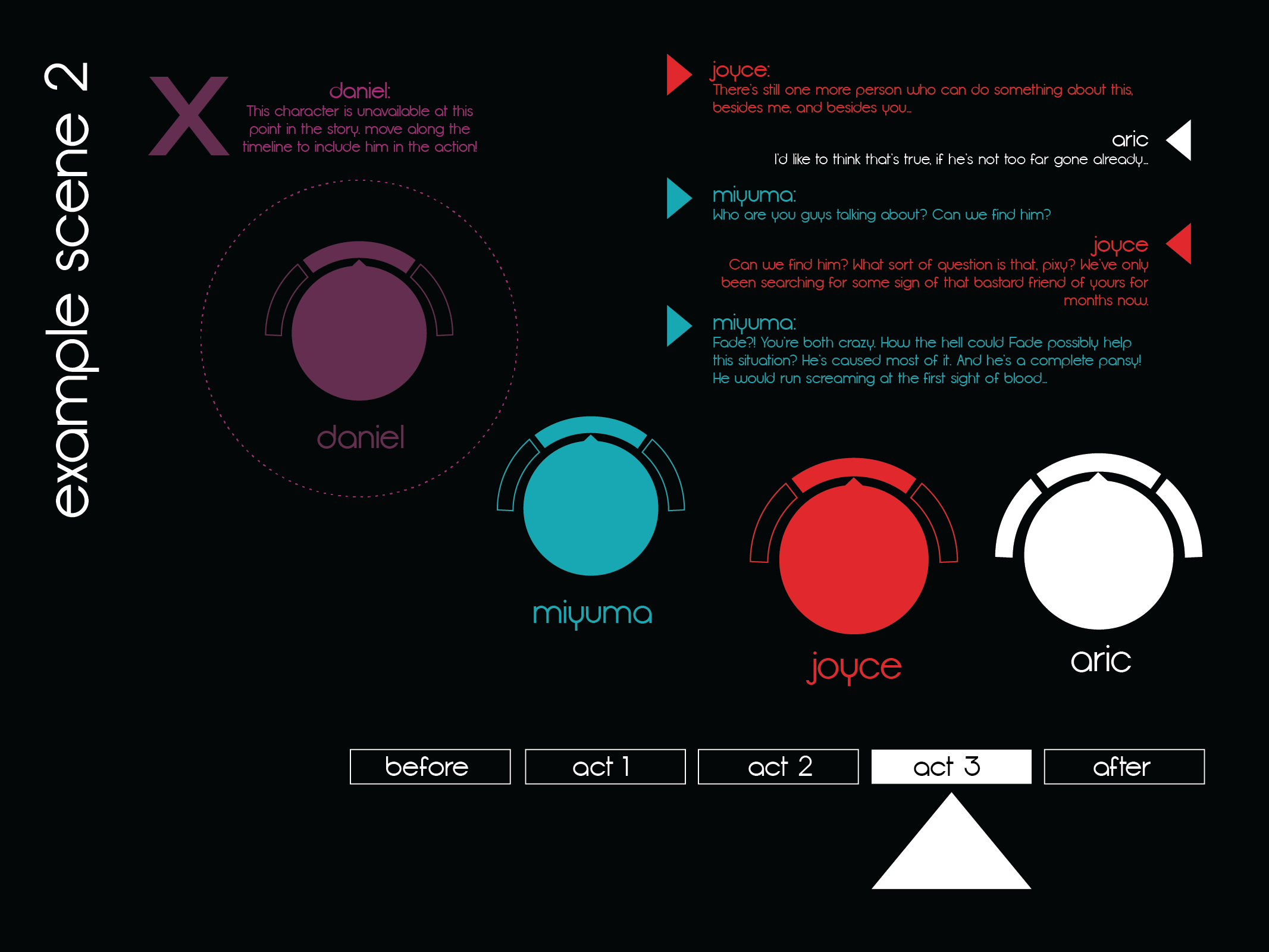

My initial concept used the reacTIVision library and the Reactable to allow people to move characters and objects in and out of the interaction. Imagine a play where the audience votes on which actors appear on stage in any given scene, and what props they can use. In this version, the player/reader/audience-member could also move through time, experiencing the story out of chronological order, or character by character. Each character has multiple states: an internal (first-person PoV) state, where the reader can see thoughts; a conversational (third-person PoV) state, where one only sees what a character says out loud; and a bio state that displays basic facts. See the sketches below.
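The three character states described above can be modelled in a few lines. What follows is a minimal sketch in Python rather than the project's Processing code. Every name in it (`View`, `Beat`, `Character`, `render`) is hypothetical, and it assumes that the internal state shows thoughts alongside spoken lines, which the description does not spell out.

```python
from dataclasses import dataclass, field
from enum import Enum


class View(Enum):
    INTERNAL = "internal"              # first-person PoV: thoughts are visible
    CONVERSATIONAL = "conversational"  # third-person PoV: spoken lines only
    BIO = "bio"                        # basic facts about the character


@dataclass
class Beat:
    """One authored moment for a character in a given scene (hypothetical)."""
    scene: int
    spoken: str = ""
    thought: str = ""


@dataclass
class Character:
    name: str
    bio: str
    beats: list = field(default_factory=list)

    def render(self, view, scene):
        """Return the lines a reader would see for this character and scene."""
        if view is View.BIO:
            return [f"{self.name}: {self.bio}"]
        lines = []
        for beat in self.beats:
            if beat.scene != scene:
                continue
            # Assumption: the internal view adds thoughts on top of speech.
            if view is View.INTERNAL and beat.thought:
                lines.append(f"({self.name} thinks) {beat.thought}")
            if beat.spoken:
                lines.append(f"{self.name}: {beat.spoken}")
        return lines


# Usage with placeholder text (not taken from the manuscript).
a = Character("A", "placeholder bio", [Beat(1, spoken="hello", thought="hmm")])
print(a.render(View.CONVERSATIONAL, 1))
print(a.render(View.INTERNAL, 1))
```

Because every beat is authored in advance, switching the view only changes which of the pre-written lines are revealed; nothing is generated.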

I still think this version of the experience is far more elegant, but since the Reactable was unavailable for the duration of this project, and since I’m still barely managing to hack together functional Processing code sometimes, I settled for a screen-based prototype of the most basic function: dialogue display.

So, at the end of the day, my project 3 can be distilled down to ‘fun with the ControlP5 library, 7-factorial button combinations, and lots and lots of tears.’ Enjoy!

EDIT:

I was asked to give a brief analysis of what I felt were the major shortcomings and successes of my current working prototype, and if I’m honest, the former greatly outweigh the latter.

The root of everything unsavory about the Processing prototype can be traced to the fact that it is screen-based and relies on buttons instead of moveable objects. There is very little satisfaction to be gained by simply clicking on combinations of buttons that all look and behave the same way. Part of the joy of a Reactable interface would be to pick up pieces as one would in a board game, arrange them exactly how you might imagine the characters standing while they converse, and watch the interaction take place in the surrounding space. Admittedly, the same general concept could be implemented on screen (in fact, that’s more or less what the reacTIVision TUIO simulator is), but I opted to spend more time searching for and pruning the content.

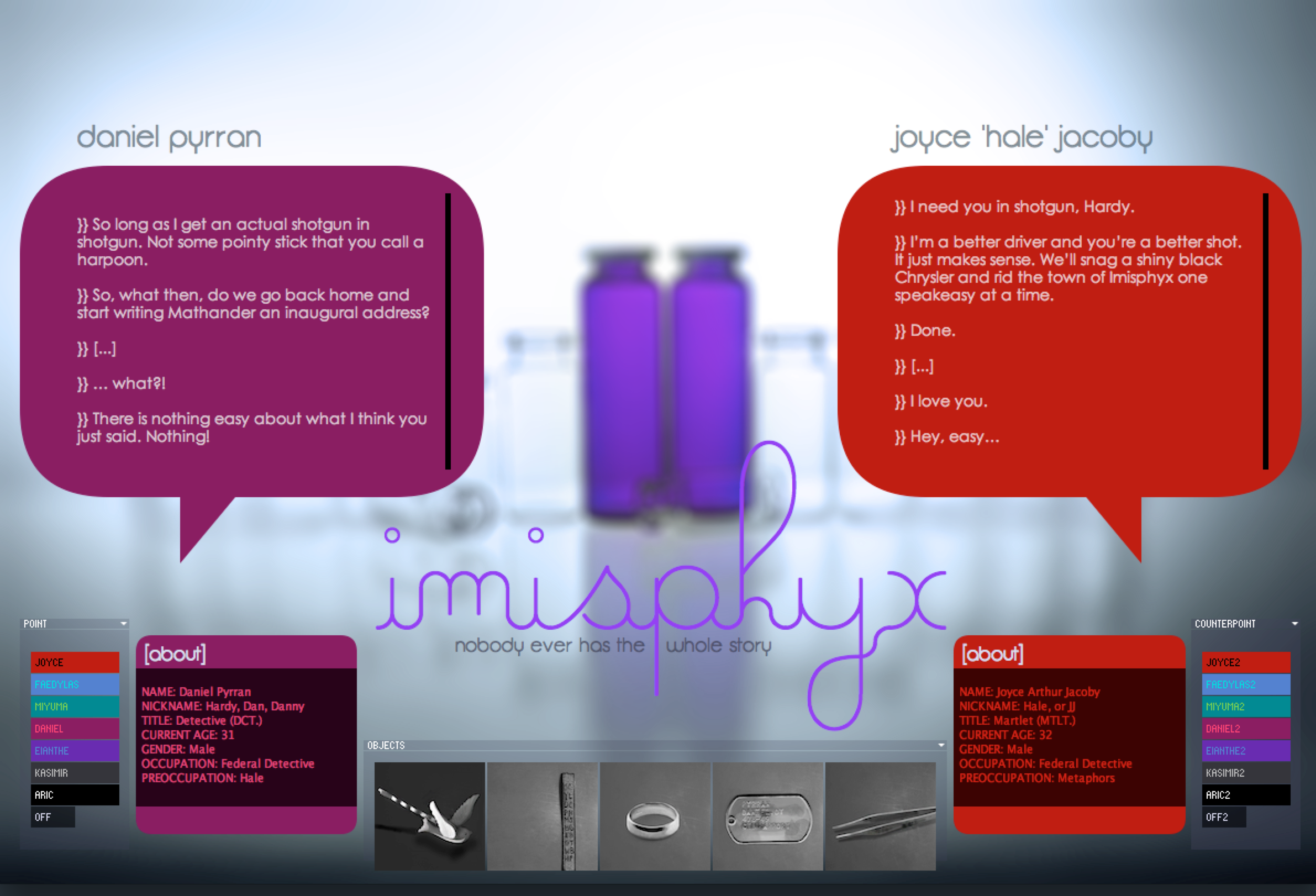

On that note, not only are the characters and objects pinned in space, but the dialogue is confined to the space of pre-drawn speech bubbles, and two separate speech bubbles at that. On one hand, this gives the prototype a cute comic-book aesthetic, but on the other, it’s incredibly impractical for long stretches of dialogue like the ones I’m trying to present. It would be far easier to follow if the dialogue were merged into a single stream, color-coded by character like a chat window, or as shown in the concept diagrams above. This is definitely something I would implement immediately in another iteration of the project.
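As a rough illustration of the single color-coded stream, here is a minimal Python sketch, not the prototype's actual Processing code. The names `Line`, `PALETTE`, `assign_colors`, and `merged_stream` are all hypothetical, as are the hex colors. It assumes each pre-written line carries an explicit ordering index and that only characters currently "on stage" are shown.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Line:
    order: int    # position in the authored conversation
    speaker: str
    text: str


# Hypothetical palette; any set of distinguishable colors would do.
PALETTE = ["#c0392b", "#2980b9", "#27ae60", "#8e44ad"]


def assign_colors(speakers):
    """Give each speaker a stable color, cycling if the palette runs out."""
    return {name: PALETTE[i % len(PALETTE)]
            for i, name in enumerate(sorted(speakers))}


def merged_stream(lines, on_stage):
    """Merge every on-stage character's lines into one ordered, colored list."""
    colors = assign_colors(on_stage)
    visible = sorted((l for l in lines if l.speaker in on_stage),
                     key=lambda l: l.order)
    return [(colors[l.speaker], f"{l.speaker}: {l.text}") for l in visible]


# Usage with placeholder dialogue (not taken from the manuscript).
script = [Line(2, "B", "second"), Line(1, "A", "first"), Line(3, "C", "third")]
for color, text in merged_stream(script, {"A", "B"}):
    print(color, text)
```

Sorting the speaker names before assigning colors keeps each character's color stable within a given cast, so the stream reads the same way each time that combination is selected.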

The last major pitfall of the prototype isn’t limited to the Processing version, but is inherent in the concept itself: it currently requires an enormous investment from the author or storyteller. All interactions need to be selected, formatted, and evaluated. Some probably have to be created specifically for this installation, if they don’t exist in the original manuscript.

In response to this, some people have suggested limiting the work that the author needs to do by producing dialogue generatively based on set character traits, but I deeply dislike this idea. My aim is not to create a choose-your-own-adventure story, nor an ensemble of AIs. I tend to fall into the camp that thinks that an author needs to retain a certain amount of authority over a story, or else it quickly crumbles into non-compelling drivel (not that authors aren’t totally capable of falling into that trap on their own :D)! Here, the plot and the conversations are very much pre-determined. I’m only interested in widening and narrowing the lens through which the audience gets to explore that predestined story.

At the end of it all, what I think I’ve managed to achieve here (perhaps unsurprisingly, given my background) is an aesthetic gist of what I hope this project could be. And that is not without value. Actually, one of my major fears about using an interface like the Reactable is that I risk losing the atmosphere that the current prototype evokes. Most of the reacTIVision installations I’ve seen have relied on a visualization library that is shiny, but generic and very ‘digital’. The concept sketches reflect that barren look and feel, even though it’s not really what I want. A large amount of effort would have to go into making the table interface match the tone of the fictional work being presented. One size does not fit all.