Overview

In this assignment, you are asked to locate an interesting online source of data, and scrape or acquire a hefty pile of it. (A few weeks from now, in a subsequent assignment, you will be asked to visualize it.)

There is plenty of data out there, and much of it is boring. The interestingness of the data you collect is one of the key factors by which your work will be evaluated. You are going to ask the world a question, and the world will cough up some data in response. Often a small change in the way you pose a question will produce very different results.

Learning Objectives

- (Per Ben Fry’s formulation) Upon completion of this assignment, you will be able to acquire, and potentially parse and filter, data using a powerful meta-API for web scraping.

- Students will be able to use data scraping techniques to support inquiry-driven and curiosity-driven investigations of the world.

Acquiring Data

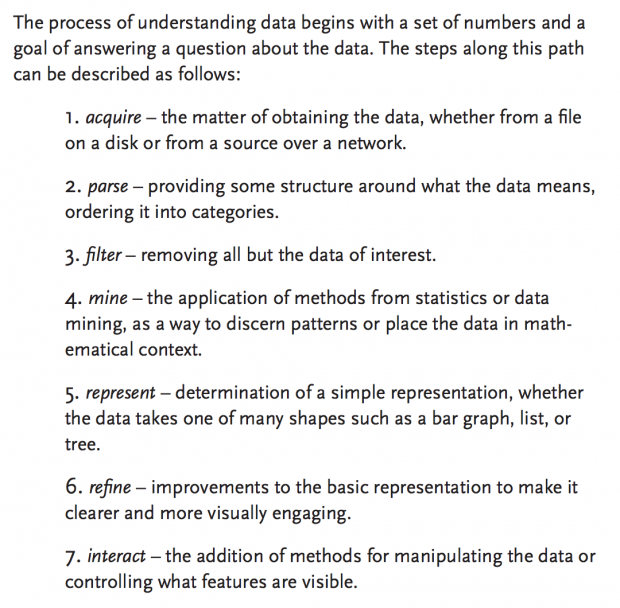

The objective of this assignment is to introduce basic tools and techniques for automatic content extraction and parsing, such as are commonly encountered in data-intensive research projects. We first consider Ben Fry’s overview of the process of data science, from his doctoral thesis:

.

.

Scraping, Ethics, and Law

A gray area exists. Can web scraping be illegal? It is no exaggeration to say that people have certainly lost their liberty and even their lives for scraping data. In class we will discuss the ethics and tactics of data scraping. Before we begin, please read about the brilliant and creative Aaron Swartz, who was arrested for downloading too many publicly-funded academic journal articles, with tragic consequences. Read:

- The Guerilla Open Access Manifesto by Aaron Swartz.

- Also please read about Swartz’s activism related to PACER and JSTOR.

Carl Malamud, “rogue archivist”, presented this eulogy for Aaron Swartz:

Another challenging example was that of the hacker Weev, who faced 5 years in jail for scraping improperly secured personal email addresses from AT&T. (He was eventually acquitted after much legal trouble.) To avoid this sort of situation in our class, we will establish the policy that you may not scrape personal data (e.g. contact, personal or financial data about customers, credit card information, etc.) for anyone other than yourself.

Instructions & Deliverables.

For this assignment, we anticipate you will use the “front door.” For this reason, you have been given a one-month “Power” account generously donated by Temboo.com, which will allow you to make up to 100,000 queries. Temboo provides access to more than 100 public API’s through a dozen programming languages, with working documentation examples (in each language) for more than 2000 different API calls. Your favorite language is almost certainly supported by Temboo:

You are asked to do the following:

- Create an account on Temboo.com, immediately. Complete this survey to give the professor the email address associated with your Temboo account so that it can be blessed with special powers.

- Read the three articles:

- Read the Taxonomy of Data Science tasks, by Hilary Mason and Chris Wiggins, with special attention to their sections on obtain and scrub.

- Read the Guerilla Open Access Manifesto by Aaron Swartz.

- Read Art and the API by Jer Thorp.

- Identify an interesting online website, public data API, or database that manifests in scrape-able HTML. This can be anything you wish — for example, you might fetch public civic data Or you might fetch something weird and funny, such as the real names of hip-hop artists.

- Create a scraper to automate the extraction and downloading of this data.

- Scrape some data. Our target is something on the order of 1000 or more records, preferably ~10,000: more than you could conveniently copy-paste by hand, and more than you could visualize without some sort of computational approach. Please: don’t forget to put in a couple seconds’ delay between queries — otherwise, you may get banned from some network or service.

- In a blog post, discuss your project in 100-200 words. What did you learn? What challenges did you overcome?

- In the blog post, present a sample of some of the data you collected. The format of this is up to you. Present enough records (50?) that one can get a feel for what you’ve collected.

- Draw a rough sketch of how you might later visualize this data; in include a scan or photo of the sketch in your blog.

- Link to the Github repository that contains all of your code for this project. Be sure not to post your private API keys in your Github code.

- Categorize your blog post with the category, 12-datascraping.

Advanced and/or Optional Approaches:

We strongly recommend the use of Temboo! However, there are other ‘unsupported’ options. The enthusiastic recommendation of a wide consensus of data scientists is that the Beautiful Soup Python library is the premier tool for creating a custom data scraper entirely from scratch. You may also consider using the new KimonoLabs tool for automated data scraping, which provides an API for website with structured data but no public API. Here are some additional resources:

- Web Scraping with Beautiful Soup (Python for Beginners)

- Web Scraping 101 with Python, by Greg Reda

- Web Scraping Workshop, by Brad Montgomery

- Scraping: Beyond the Basics, by Tim McNamara

- Scrapy at a Glance, by the Scrapy developers