G’d’eve, folks. Here’s a nifty trio of interactive things I’ve discovered while perusing the internet this week.

Daily Dose of Shakespeare: Stubbornness

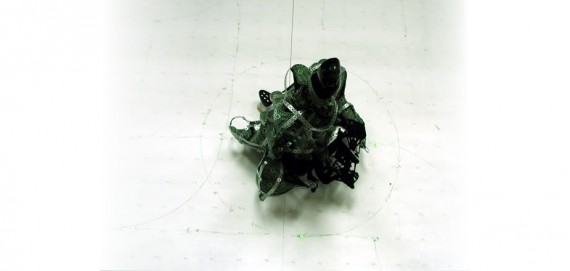

[C]aliban Robot Artificial Shakespearean Stubbornness… aka “CRASS”

This ugly little abomination locates humans, targets them, and delivers caustic Shakespearean insults originally attributed to the wicked Caliban from The Tempest (you may recall me moaning in a previous blog post about people forgetting this play, so I’m pretty excited that somebody used it)! Usually, I don’t like ugly stuff, but the choice to make this little dude as wretched-looking as possible is frankly hilarious to me, given the character of Caliban. The robot doesn’t let people respond to its insults, which in a certain light could be viewed as a shortcoming of the interaction, but the artists provide a pretty adorable rationale for why two-way dialogue isn’t possible with their monster. They invent the concept of “artificial stubbornness”, explaining that in normal conversation between two humans, stubbornness occurs when one human isn’t capable of modifying their position or opinion based on feedback from another. The robot, they say, is merely exhibiting the same behavior, but “artificially”… because it simply can’t listen. A good example of a clever narrative compensating for technical limitations, or, maybe, a piece of interactive art created specifically to fit a clever narrative.

Not for the insect-phobic, I guess…

Delicate Boundaries

This is an older piece which I just happened to stumble across. Little glowing bug-like creatures swarm out of a screen and onto participants, crawling across them in much the same way a parade of ants might crawl across your shoe. It seems pretty simple, but I really like the clean execution and the message it’s trying to convey about the boundaries between virtual and ‘actual’. The artist seems to want to make a point about how uncomfortably and unexpectedly invasive digital technology is becoming in our lives, and the use of creatures that resemble bugs or bacteria of some sort really drives home the metaphor for me. I’d like this piece more if the bugs somehow had a bit more substance when they left the screen, so that it wasn’t so obvious that they are just light projected onto clothing. I feel like advancements have been made since 2007 that would allow for 3D hologram-like creatures that would prove much more startling.

Everything is better with watercolors…

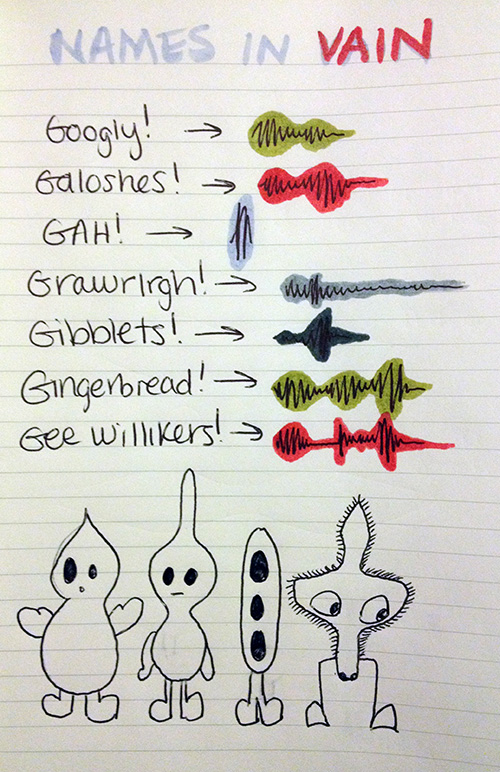

Starlay

This interactive comic for the iPad has been all over blogs this week, and although I’m not utterly blown away by the interactivity (it seems like very standard, game-like, touch-and-discover mechanics), I really do appreciate the art style. The hand-drawn lines and broad watercolor splashes really make this experience something lovely.