Imisphyx V from Anna von Reden on Vimeo.

Well, folks, it’s about time to wrap up the show. I have had a blast over the course of this semester, expanding my coding horizons and creating some pretty nifty art, and now it has all come down to this. Imisphyx V is my capstone project for the course, and is a continuation of my interactivity project from March. The avid reader may recall Imisphyx IV, a prototype built in Processing that allows you to pick a pair of characters from a menu and read the conversations that occur between them. I’d said at the time that I really wanted to implement this concept using an interactive tabletop, to pull it off the screen and allow people to manipulate the characters with their hands rather than just clicking buttons. With Imisphyx V, I have succeeded in doing exactly that. While there is still a lot of work to be done to implement all the details of my concept, what is shown here is a basic platform for interactive text that I feel has a lot of potential to change the way we interpret stories.

For those of you who missed my discussion of Imisphyx IV, the concept here is pretty straightforward. I am fascinated with the notion of perspective in literature. Authors have been playing for centuries with myriad ways of showing and hiding what goes on in a person’s mind as they move through the physical action of a book. Sometimes these details are written plainly; other times the author hides a character’s true feelings behind actions and dialogue (or lack thereof). By using an interactive table, I wanted to enable a reader to turn these details on and off character by character, delving deep into the minds of some and shying away from others. I feel like this is an interesting experiment in how we form relationships with characters, and in how we judge the truth or validity of a narrative based on how much we trust the narrator we choose to tell us the tale.

I won’t dwell long on the technical intricacies of the project. If you’d like an in-depth discussion of all of that, please check out Imisphyx X: A Formal Write-up (PDF), for eight whole pages of joy and pain. I will say that the installation relies on the reacTIVision framework and the TUIO library in Processing to track fiducial markers that are inscribed on the bottom of five glass disks. The table itself was constructed from steel beams, with a plexiglass top covered with a large sheet of tissue paper to enable projection and marker tracking. The camera is a Sony PlayStation 3 Eye, and the projector is a wide-angle Casio model, which allows the entire system to be housed within the table proper.
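For the curious, here is a minimal sketch of the kind of bookkeeping that sits behind the disks, written in plain Java rather than lifted from my actual Processing source. Everything named here is made up for illustration: the `DiskRegistry` class, its methods, and whatever marker IDs and character names you feed it. The assumption is that the tracking layer tells the sketch when a marker appears on or leaves the table, and that those notifications would be forwarded to `markerAdded` and `markerRemoved`.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical bookkeeping for the glass disks: which characters are on the table.
class DiskRegistry {
    // Assumed mapping from fiducial marker ID to character name.
    private final Map<Integer, String> characters = new HashMap<>();
    // Marker IDs currently seen by the camera, kept sorted for stable output.
    private final Set<Integer> onTable = new TreeSet<>();

    // Associate a marker ID with a character before tracking starts.
    void register(int markerId, String name) {
        characters.put(markerId, name);
    }

    // Meant to be called when the tracker reports a new marker; unknown IDs are ignored.
    void markerAdded(int markerId) {
        if (characters.containsKey(markerId)) {
            onTable.add(markerId);
        }
    }

    // Meant to be called when the tracker reports that a marker has left the table.
    void markerRemoved(int markerId) {
        onTable.remove(markerId);
    }

    // Names of the characters currently on the table, in marker-ID order.
    List<String> present() {
        List<String> names = new ArrayList<>();
        for (int id : onTable) {
            names.add(characters.get(id));
        }
        return names;
    }

    // A conversation needs at least two characters on the table.
    boolean canConverse() {
        return onTable.size() >= 2;
    }
}
```

Keeping this state separate from the drawing code means the display only has to ask who is present on each frame, rather than caring about how the camera found them.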

The whole project was a joy to build, but posed a lot of challenges for somebody with my somewhat limited coding (and hardware) experience. I now know about twenty times as much as I did about cameras and projection, and still have much more to learn. As a communication designer, this project has also presented me with an as-yet-unsolved conundrum: how do you display vast quantities of textual information with limited screen real estate in a way that is easily understood and also compelling? It was hard enough to implement the raw dialogue and biographical information, particularly as the resolution of the projector mandated the use of much larger font sizes than I’d been relying on. As the aim is to eventually include even more text (five or six different layers of emotions, thoughts, and actions), a new paradigm will have to be established to avoid total chaos. People have asked me why I don’t use audio or cinematics to get around this issue, but I am adamant that this project be about innovative uses of text. The entire point is to push the limits of the written word and to craft a new creative writing platform. Using audio, film, and animation abandons the heart of the project.

My hope is that one day, a table similar to this one will replace the coffee tables in our living rooms, and instead of picking up a paperback or a gardening magazine, we can put our coffee down on a coaster, move it around, and watch a story unfold before our eyes. The book isn’t dead! Long live the book.

Thanks for following my work this Spring. Again, if you’d like to know more about this project, please follow the hyperlinks, and make sure to watch the video above and look at the images below. Here’s to making more quirky techno-art abominations in the future!

Okidokiloki!

-AvR

Image 1: a set of three characters on the table and ready to converse! Will it be a cage-match or a love-fest?

Image 2: a zoomed out view of the table, also showing my laptop and the projection mirror.

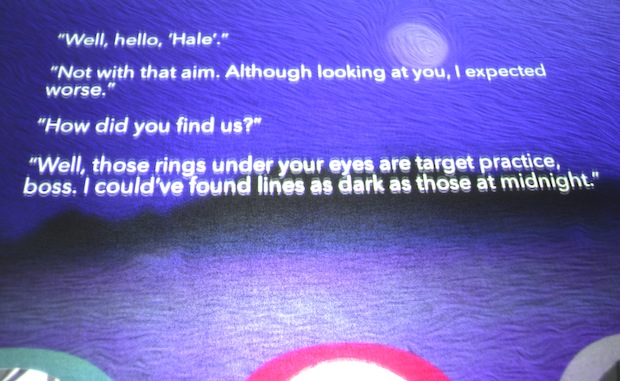

Image 3: an example conversation. Unfortunately this is a little bit buggy, because the previous conversation isn’t currently erased before a new one starts. That might explain the unexpected non sequitur between the first and second lines of dialogue… Oops.
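
One likely shape for the fix, as a sketch in plain Java rather than my actual Processing code: clear whatever is on display before loading the next conversation. The `ConversationView` class and its method names are hypothetical, and this assumes the dialogue for a pair of characters arrives as a simple list of lines.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical display buffer for the dialogue projected onto the table.
class ConversationView {
    private final List<String> visibleLines = new ArrayList<>();

    // Start a new conversation, wiping the previous one first so that
    // old lines cannot bleed into the new exchange.
    void startConversation(List<String> dialogue) {
        visibleLines.clear();
        visibleLines.addAll(dialogue);
    }

    // Copy of the lines that should currently be drawn.
    List<String> visibleLines() {
        return new ArrayList<>(visibleLines);
    }
}
```

Calling `startConversation` whenever the pair of characters on the table changes would keep each exchange self-contained.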