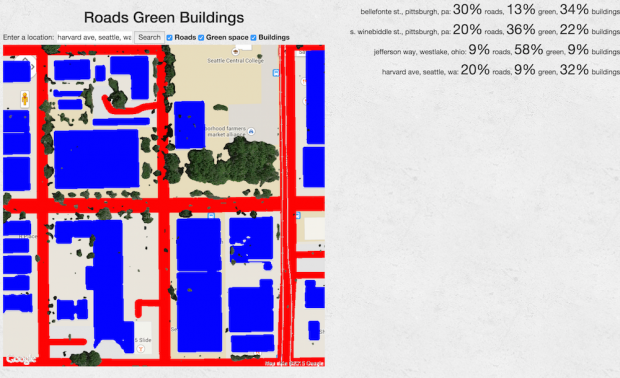

OK: pick an address, and this app will show you all the roads, green space, and buildings in a box around it, along with what percent of that area each one covers.
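The post doesn't say how the percentages are calculated. One simple approach counts cells, assuming the box has been rasterized into a grid where each cell carries exactly one land-use label. The labels and the grid representation are hypothetical and not necessarily how this app works:

```python
from collections import Counter

# Hypothetical land-use labels; any other label (e.g. "other") still counts
# toward the total area but not toward any category.
CATEGORIES = ("road", "green", "building")


def area_percentages(grid):
    """Return the percent of cells in `grid` covered by each category.

    `grid` is a list of rows, and every cell is a single string label.
    """
    counts = Counter(label for row in grid for label in row)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("grid is empty")
    return {cat: 100.0 * counts[cat] / total for cat in CATEGORIES}


# A tiny 4x5 example box (20 cells).
example = [
    ["road", "road", "road", "road", "road"],
    ["building", "building", "green", "green", "other"],
    ["building", "building", "green", "green", "other"],
    ["road", "building", "green", "other", "other"],
]
print(area_percentages(example))  # → {'road': 30.0, 'green': 25.0, 'building': 25.0}
```

With vector map data (polygons for parks and building footprints, buffered lines for roads) you would sum clipped polygon areas instead of counting cells; the grid version just makes the "percent of the box" idea concrete.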

What’s neat: I’ve lived in two places in Pittsburgh, and I had no idea that my current place in Shadyside has way more buildings per square foot than my old place in Bloomfield. By this measure, my place in Seattle was just as dense as Shadyside. And my parents’ house in Cleveland is, now quantifiably, very green.

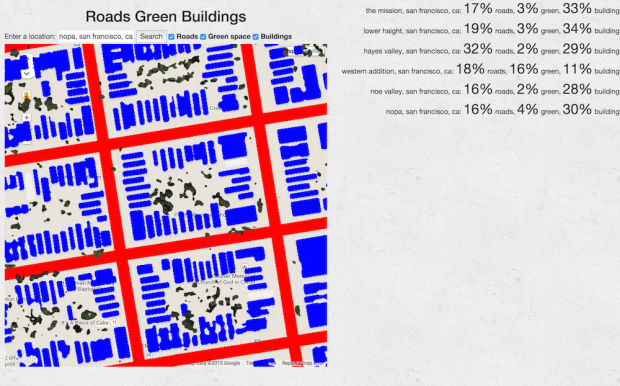

Hey, I’m moving to San Francisco for the summer. Where should I live?

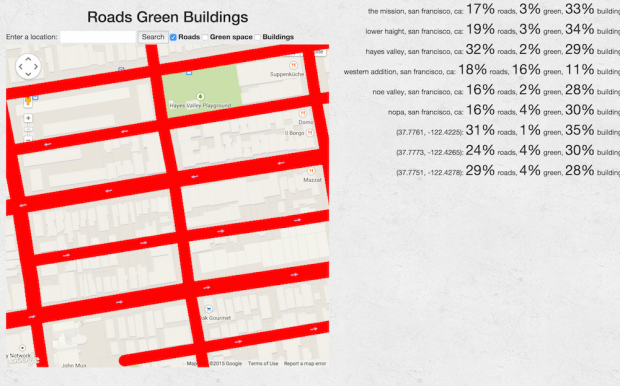

Neat. All the neighborhoods I’m looking at are pretty similar… except it looks like the random square I picked in the Western Addition hit a park, and the one in Hayes Valley caught some big roads. That’s something to look into: is the Western Addition a little less dense than the others? Is Hayes Valley plagued by roads?

Huh. Panning around Hayes Valley, I keep hitting road coverage in the high 20s or low 30s (percent), while other neighborhoods come in about 10 points lower. It seems there are just more big roads there. That’s a good thing to keep in mind.

Anyway, there’s a ton of stuff I’d like to do with this in the future:

- let you share and compare different places

- pre-populate with a lot of places from around the world

- show you where the closest place that has the same proportions as yours is

- let you draw an arbitrary polygon instead of just a box

- tweak the green/road/building detection algorithms so they work better, especially in other countries

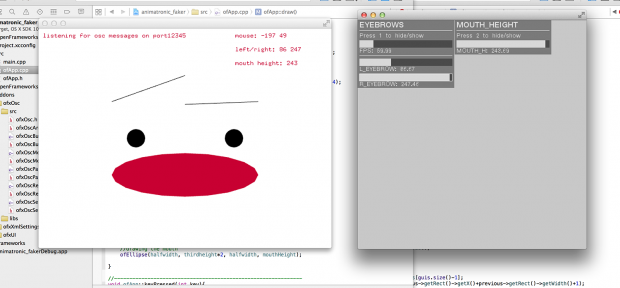

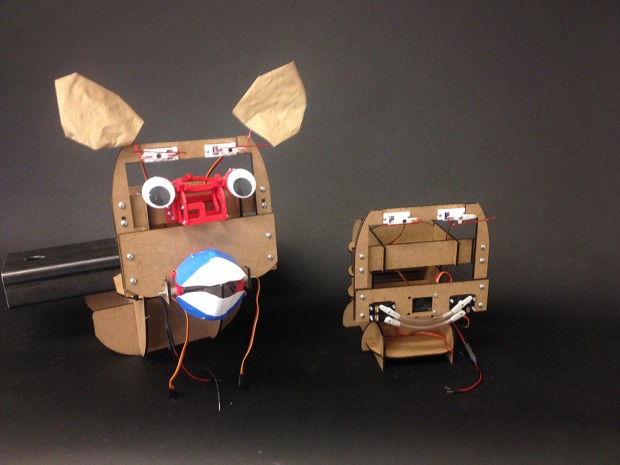

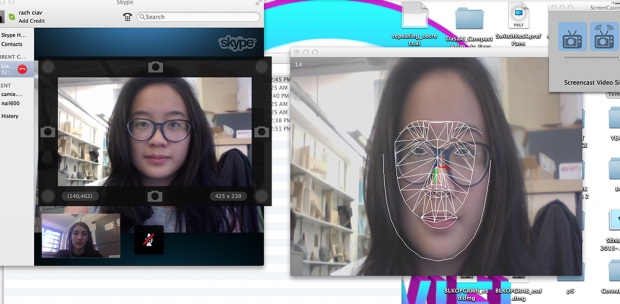

To help debug the OSC communication, I’ve created two OF (openFrameworks) apps: one simulates the FaceTracker output (the OSC sender) using GUI sliders, and the other simulates the Arduino’s response (the OSC receiver) by moving a little face I drew. So far everything works well.
