I love the idea of computer vision: it’s an excellent sensing system that lets people interact with machines in a much more natural and intuitive way than typing or other standard mechanical inputs.

Of course, the most ubiquitous form of consumer computer vision so far is the Kinect.

There are plenty of games, both those approved by Microsoft and those made by developers and enthusiasts all over the web [see http://www.xbox.com/en-US/kinect/games for some examples], but there are also plenty of cool applications in tools, robotics, and augmented reality.

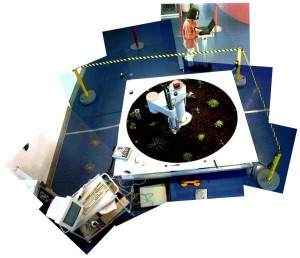

Here’s a great example of an augmented reality application that uses the Kinect: it tracks the placement of differently sized blocks on a table to build a city. What makes the project neat is how it translates real objects into a continuous digital model.

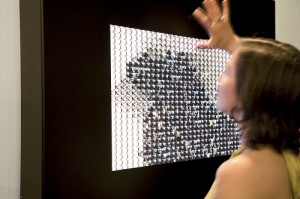

Similarly, there is a Kinect hack that allows the user to manipulate Grasshopper files using gestures [see http://www.grasshopper3d.com/video/kinect-grasshopper]. It is a great prototype for what is probably the next level of interaction: direct tactile feedback between user and device. This particular example lacks a little polish; its feedback isn’t immediate, and there are other minor details of the experience that could be improved. For an early tactile interface, though, it does a pretty good job. There are plenty of other good projects at http://www.kinecthacks.com/top-10-best-kinect-hacks/.

Computer vision is also incredibly important to many forms of semi-autonomous and fully autonomous navigation. For example, the Cobot project at CMU uses a combination of mapping and computer vision to navigate the Gates-Hillman Center [see http://www.cs.cmu.edu/~coral/projects/cobot/]. There are a lot of cool things that can be done with autonomous motion, but it is difficult to implement because of the amount of prediction needed to navigate a busy area.

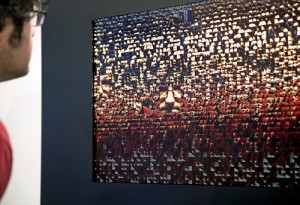

Another great application of computer vision is augmented reality. The projects at http://www.t-immersion.com/projects give a good sense of how many augmented reality projects exist: ideas ranging from face manipulation to driving tiny virtual cars to overlaying an interface on a blank wall have all been implemented in some form. Unfortunately, it is difficult to make augmented reality feel like a completely immersive experience, because there is always a disconnect between the screen and the surrounding environment. A good challenge to undertake, then, might be making the transition from screen to environment smooth enough that it doesn’t break the illusion for the user. Food for thought.