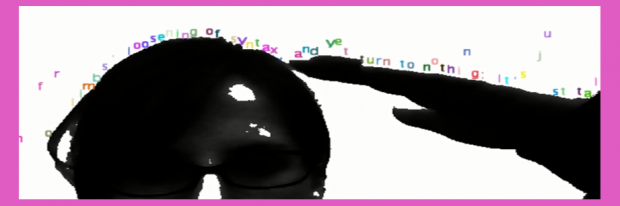

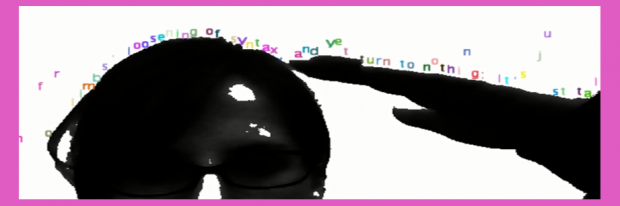

To implement Text Rain in Processing, I created a few helper classes. First, I create a Character class that draws a character at its current x and y location and detects whether or not the character is free falling or if it landed on a dark spot. It does so by checking the brightness of the pixel directly below it and setting a boolean within the class. I also created a camera class so that I could test the application out with different types of cameras, namely black -and-white and grayscale. I had some issues with thresholding background brightness so I tried mapping each pixel’s brightness to an exponential function to create a bigger differentiation between light and dark values but I still find that I need to adjust the threshold based on the location in which I am running the project.

To implement Text Rain in Processing, I created a few helper classes. First, I create a Character class that draws a character at its current x and y location and detects whether or not the character is free falling or if it landed on a dark spot. It does so by checking the brightness of the pixel directly below it and setting a boolean within the class. I also created a camera class so that I could test the application out with different types of cameras, namely black -and-white and grayscale. I had some issues with thresholding background brightness so I tried mapping each pixel’s brightness to an exponential function to create a bigger differentiation between light and dark values but I still find that I need to adjust the threshold based on the location in which I am running the project.

Text Rain from Erica Lazrus on Vimeo.

Below is my code which can also be downloaded here:

Main text rain class:

import processing.video.*;

Capture camera;

CameraBlackWhite bwCamera;

CameraGrayscale gsCamera;

color bgColor;

String text;

Character[] characterSet1;

Character[] characterSet2;

Character[] characterSet3;

public void setup() {

size(640, 480, P2D);

smooth();

int threshold = 50;

gsCamera = new CameraGrayscale(this, threshold);

gsCamera.startVideo();

bgColor = color(#ffffff);

text = "We are synonyms for limbs' loosening of syntax, and yet turn to nothing: It's just talk.";

characterSet1 = new Character[text.length()];

characterSet2 = new Character[text.length()];

characterSet3 = new Character[text.length()];

for (int i=0; i < text.length(); i++) {

char c = text.charAt(i);

color col = color(random(255), random(255), random(255));

float speed = random(1, 6);

characterSet1[i] = new Character(c, col, 14, 5 + i*7.25, speed, threshold);

characterSet2[i] = new Character(c, col, 14, 5 + i*7.25, speed, threshold);

characterSet3[i] = new Character(c, col, 14, 5 + i*7.25, speed, threshold);

characterSet1[i].start();

}

}

public void draw() {

background(bgColor);

update();

render();

}

public void update() {

gsCamera.update();

for (int i=0; i < text.length(); i++) {

characterSet1[i].update();

if (characterSet1[i].getCurYPos() > height/2) {

characterSet2[i].start();

}

else if (characterSet2[i].getCurYPos() - textAscent() >= height || characterSet2[i].getCurYPos() < 0) {

characterSet2[i].setCurYPos(0-(textAscent() + textDescent()));

characterSet2[i].stop();

}

characterSet2[i].update();

}

}

public void render() {

for (int i=0; i < text.length(); i++) {

characterSet1[i].render();

characterSet2[i].render();

characterSet3[i].render();

}

}

Abstract camera class:

public abstract class Camera {

private Capture video;

private int numPixels;

private int threshold;

public void startVideo() {

getVideo().start();

}

public abstract void update();

public abstract void render();

public Capture getVideo() {

return this.video;

}

public void setVideo(Capture video) {

this.video = video;

}

public int getNumPixels() {

return this.numPixels;

}

public void setNumPixels(int numPixels) {

this.numPixels = numPixels;

}

public int getThreshold() {

return this.threshold;

}

public void setThreshold(int threshold) {

this.threshold = threshold;

}

}

Black and white camera class:

public class CameraBlackWhite extends Camera {

private color BLACK = color(#000000);

private color WHITE = color(#ffffff);

public CameraBlackWhite(Text_Rain_2_0 applet, int threshold) {

setVideo(new Capture(applet, width, height));

setNumPixels(getVideo().width * getVideo().height);

setThreshold(threshold);

}

public void update() {

if (getVideo().available()) {

getVideo().read();

getVideo().loadPixels();

loadPixels();

float pixelBrightness;

for (int i=0; i < getNumPixels(); i++) {

int pixelX = i % width;

int pixelY = i / width;

pixelBrightness = brightness(getVideo().pixels[i]);

pixelBrightness = pow(pixelBrightness, 3);

pixelBrightness = map(pixelBrightness, 0, 16581375, 0, 255);

if (pixelBrightness > getThreshold()) {

pixels[(width-1-pixelX) + pixelY*getVideo().width] = WHITE;

}

else {

pixels[(width-1-pixelX) + pixelY*getVideo().width] = BLACK;

}

}

updatePixels();

}

}

public void render() {

}

}

Grayscale camera class:

public class CameraGrayscale extends Camera {

private color BLACK = color(#000000);

private color WHITE = color(#ffffff);

public CameraGrayscale(Text_Rain_2_0 applet, int threshold) {

setVideo(new Capture(applet, width, height));

setNumPixels(getVideo().width * getVideo().height);

setThreshold(threshold);

}

public void update() {

if (getVideo().available()) {

getVideo().read();

getVideo().loadPixels();

loadPixels();

float pixelBrightness;

for (int i=0; i < getNumPixels(); i++) {

int pixelX = i % width;

int pixelY = i / width;

pixelBrightness = brightness(getVideo().pixels[i]);

pixelBrightness = pow(pixelBrightness, 3);

pixelBrightness = map(pixelBrightness, 0, 16581375, 0, 255);

if (pixelBrightness > getThreshold()) {

pixels[(width-1-pixelX) + pixelY*getVideo().width] = WHITE;

}

else {

pixels[(width-1-pixelX) + pixelY*getVideo().width] = color(pixelBrightness);

}

}

updatePixels();

}

}

public void render() {

}

}

Character class:

public class Character {

private char c;

private color col;

private int sz;

private float xPos;

private float curYPos;

private float ySpeed;

private int threshold;

private boolean falling;

public Character(char c, color col, int sz, int threshold) {

setC(c);

setCol(col);

setSz(sz);

setXPos(0);

setCurYPos(0-(textAscent() + textDescent()));

setYSpeed(0);

setThreshold(threshold);

setFalling(false);

}

public void start() {

setFalling(true);

}

public void stop() {

setFalling(false);

}

public Character(char c, color col, int sz, float xPos, float ySpeed, int threshold) {

setC(c);

setCol(col);

setSz(sz);

setXPos(xPos);

setCurYPos(textAscent() + textDescent());

setYSpeed(ySpeed);

setThreshold(threshold);

setFalling(true);

}

public void update() {

if (getCurYPos() < 0 || ceil(getCurYPos() + 1) >= height || isLocationBright((int)getXPos(), (int)getCurYPos() + 1)) {

setFalling(true);

}

else {

setFalling(false);

}

if (isFalling()) {

textSize(getSz());

float newYPos = getCurYPos() + getYSpeed();

setCurYPos(newYPos);

if ((newYPos - textAscent())> height) {

setCurYPos(0-(textAscent() + textDescent()));

}

}

else {

setCurYPos(findFirstBrightSpot((int)getXPos(), (int)getCurYPos()));

}

}

public void render() {

fill(getCol());

textSize(getSz());

textAlign(CENTER, BOTTOM);

text(getC(), getXPos(), getCurYPos());

}

public boolean isLocationBright(int x, int y) {

int testValue = get(x, y);

float testBrightness = brightness(testValue);

return (testBrightness > getThreshold());

}

public int findFirstBrightSpot(int x, int y) {

int yPos;

for (yPos = y; yPos > 0; yPos--) {

if (isLocationBright(x, yPos)) break;

}

return yPos;

}

public char getC() {

return this.c;

}

public void setC(char c) {

this.c = c;

}

public color getCol() {

return this.col;

}

public void setCol(color col) {

this.col = col;

}

public int getSz() {

return this.sz;

}

public void setSz(int sz) {

this.sz = sz;

}

public float getXPos() {

return xPos;

}

public void setXPos(float xPos) {

this.xPos = xPos;

}

public float getCurYPos() {

return this.curYPos;

}

public void setCurYPos(float curYPos) {

this.curYPos = curYPos;

}

public float getYSpeed() {

return this.ySpeed;

}

public void setYSpeed(float ySpeed) {

this.ySpeed = ySpeed;

}

public int getThreshold() {

return this.threshold;

}

public void setThreshold(int threshold) {

this.threshold = threshold;

}

public boolean isFalling() {

return this.falling;

}

public void setFalling(boolean falling) {

this.falling = falling;

}

}