Mldraw from aman tiwari on Vimeo.

origin

Mldraw grew out of seeing the potential of the body of research that uses pix2pix to turn drawings into other images, and the severe lack of a usable, “useful” and accessible tool for working with this technology.

interface

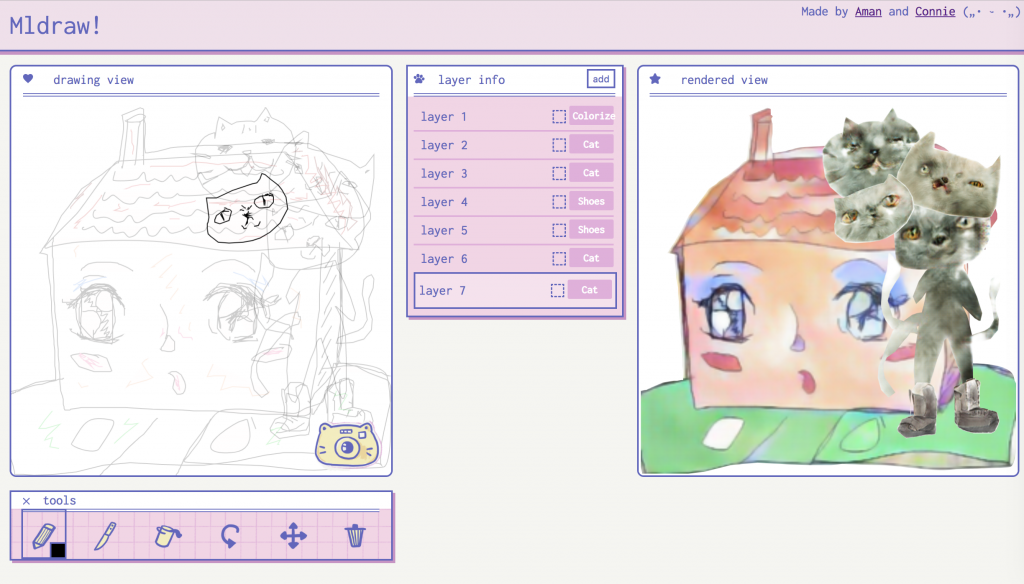

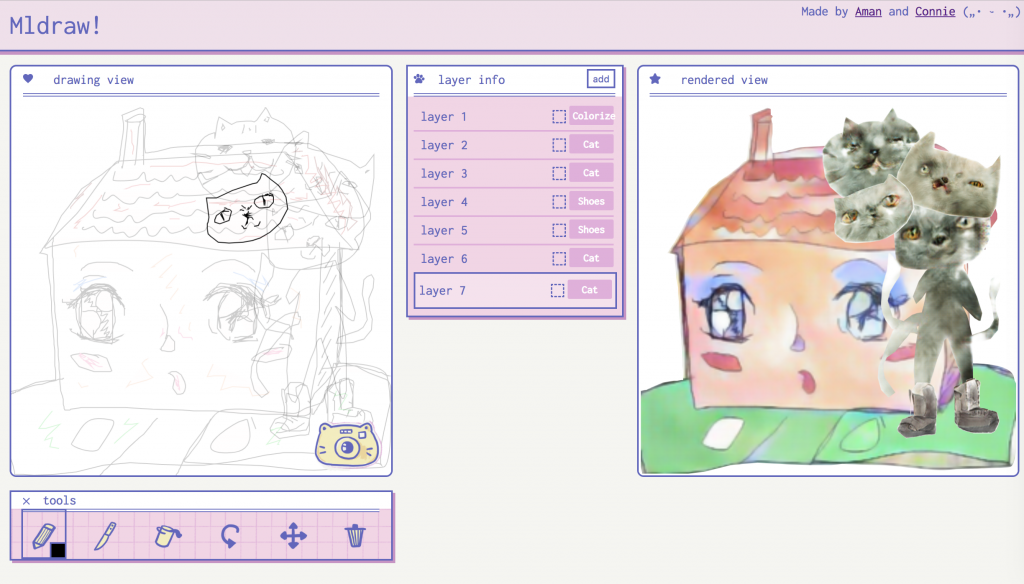

Mldraw’s interface is inspired by cute, techy/anti-techy retro aesthetics, such as the work of Sailor Mercury and the Bubblesort Zines. We wanted it to be fun, novel, exciting and deeply differentiated from the world of arXiv papers and programmer art. We felt we were building the tool for an audience that would appreciate this aesthetic, and we hoped it would scare away people who are not open to it.

dream

Our dream is for Mldraw to be the easiest tool for a researcher to integrate their work into. We would love to see more models put into Mldraw.

future

We want to deploy Mldraw to a publicly accessible website as soon as possible, potentially on http://glitch.me or http://mldraw.com. We would like to add a mascot-based tutorial (see below for a sketch of the mascot). In addition, it would be useful to split the part of the TypeScript frontend that communicates with the backend server into its own package, since it is already independent of the UI implementation. This would allow, for instance, p5 sketches to be mldrawn.

process & implementation

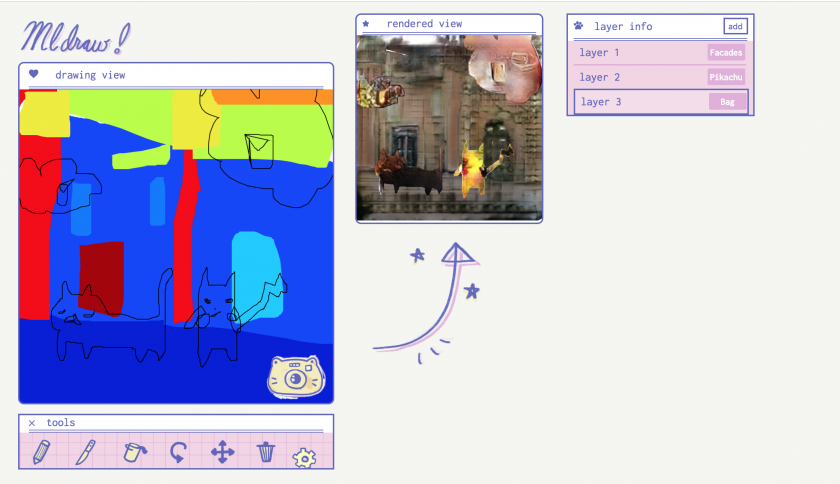

Mldraw is implemented as a TypeScript frontend that uses choo.js as its UI framework, a Python registry server, a Python adapter library, and a number of instantiations of the adapter library for specific models.

The frontend connects to the registry server using socket.io, and the registry server sends the frontend a list of models and their URLs. The frontend then communicates directly with the models. This lets us, for example, host a registry server for Mldraw without having to pay the cost of hosting every model it supports.
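The registry's role can be illustrated with a minimal in-memory sketch. Everything in it is hypothetical: the `Registry` class, its method names, the shape of the model list and the example URLs are assumptions for illustration, not Mldraw's actual code or protocol, and the socket.io transport is left out entirely. What it shows is that the registry stores only model names and URLs, so the frontend can contact each model directly.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Registry:
    """Hypothetical in-memory model registry.

    It only records where each model lives; image data never passes
    through it, so its cost does not grow with the size of the models.
    """

    models: dict[str, str] = field(default_factory=dict)

    def register(self, name: str, url: str) -> None:
        # Record (or update) where a model can be reached.
        self.models[name] = url

    def unregister(self, name: str) -> None:
        # Drop a model that has gone offline; unknown names are ignored.
        self.models.pop(name, None)

    def model_list(self) -> list[dict[str, str]]:
        # The payload a frontend would receive: names plus URLs to contact directly.
        return [{"name": name, "url": url} for name, url in sorted(self.models.items())]


# Hypothetical models and placeholder URLs.
registry = Registry()
registry.register("shoe", "https://shoe.example.com")
registry.register("facade", "https://facade.example.com")
```

Because the registry never handles image data, supporting another model costs it one dictionary entry; the expensive inference runs wherever the model itself is hosted.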

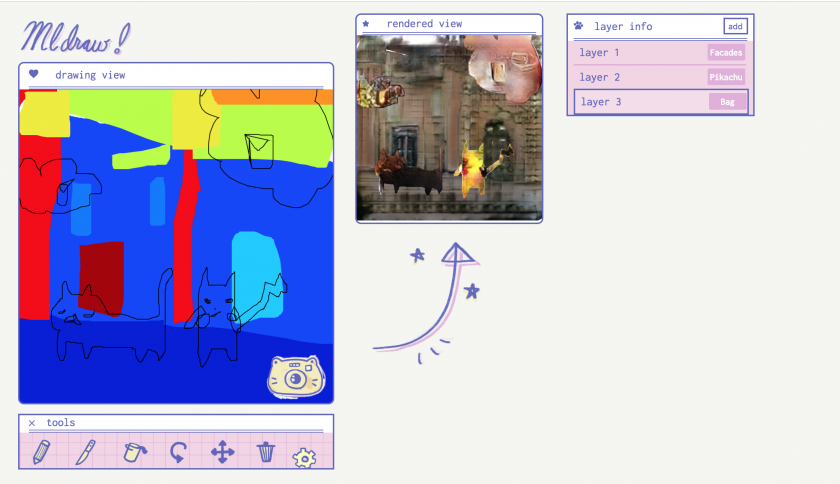

Mldraw also supports models that run locally on the client (in the above video, the cat, Pikachu and bag models run locally, whilst the other models are hosted on remote servers).
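Supporting both kinds of model means the client has to decide, per request, whether to run a model in-process or send the image over the network. The sketch below shows one way to express that routing. `ModelEntry`, `run_model` and the `send_remote` callback are hypothetical names, not taken from Mldraw; the real frontend is TypeScript, and Python is used here only to keep the examples in one language. The network call is passed in as a function so the routing can be shown without a socket.io dependency.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Optional

ImageFn = Callable[[bytes], bytes]


@dataclass
class ModelEntry:
    """Hypothetical entry in a client's model list."""

    name: str
    url: Optional[str] = None  # set for models hosted on a remote server
    local_fn: Optional[ImageFn] = None  # set for models that run on the client


def run_model(
    entry: ModelEntry,
    image: bytes,
    send_remote: Callable[[str, bytes], bytes],
) -> bytes:
    """Run a model in-process if it is local, otherwise send the image to its URL."""
    if entry.local_fn is not None:
        return entry.local_fn(image)
    if entry.url is not None:
        return send_remote(entry.url, image)
    raise ValueError(f"model {entry.name!r} has neither a local function nor a URL")


def fake_send(url: str, image: bytes) -> bytes:
    # Stand-in for the real network round trip to a hosted model.
    return b"remote:" + image


# Hypothetical entries: a local stand-in model that reverses the bytes, and a remote one.
local = ModelEntry("cat", local_fn=lambda img: img[::-1])
remote = ModelEntry("shoe", url="https://shoe.example.com")
```

Keeping the transport behind a callback is also what would let the same routing logic be reused outside the UI.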

In service of the above goal of making Mldraw extensible, we have made it easy to add a new model: all that is required is a Python interface* to the model and a function that takes in an image and returns an image. Our model adapter handles the rest, including registering the model with the server hosting an Mldraw interface.
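A minimal sketch of the adapter idea, assuming images travel as raw encoded bytes (for example PNG) inside JSON messages with base64 payloads. `ModelAdapter`, `registration_message`, `handle`, the message fields and the `invert` stand-in model are all hypothetical; they are not the names or wire format of Mldraw's actual adapter library. What it illustrates is the division of labour: the model author writes only an image-to-image function, and the adapter does the decoding, encoding and registration.

```python
from __future__ import annotations

import base64
import json
from typing import Callable

ImageFn = Callable[[bytes], bytes]


class ModelAdapter:
    """Hypothetical adapter: the model author supplies only an image -> image function."""

    def __init__(self, name: str, url: str, fn: ImageFn) -> None:
        self.name = name
        self.url = url
        self.fn = fn

    def registration_message(self) -> str:
        # What the adapter might send to the registry so the model shows up in the frontend.
        return json.dumps({"type": "register", "name": self.name, "url": self.url})

    def handle(self, message: str) -> str:
        # Decode the request, run the wrapped function, and encode the reply.
        request = json.loads(message)
        image_in = base64.b64decode(request["image"])
        image_out = self.fn(image_in)
        return json.dumps(
            {"id": request["id"], "image": base64.b64encode(image_out).decode("ascii")}
        )


# The only model-specific code: a stand-in "model" that inverts every byte.
def invert(image: bytes) -> bytes:
    return bytes(255 - b for b in image)


adapter = ModelAdapter("invert", "https://invert.example.com", invert)
```

Echoing the request `id` in the reply lets a client match responses to requests when several drawings are in flight at once.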

*This is not strictly necessary. A model written in any language that has a socket.io library can be Mldrawn, but its author would have to write the code that talks to the registry server and parses its messages themselves.

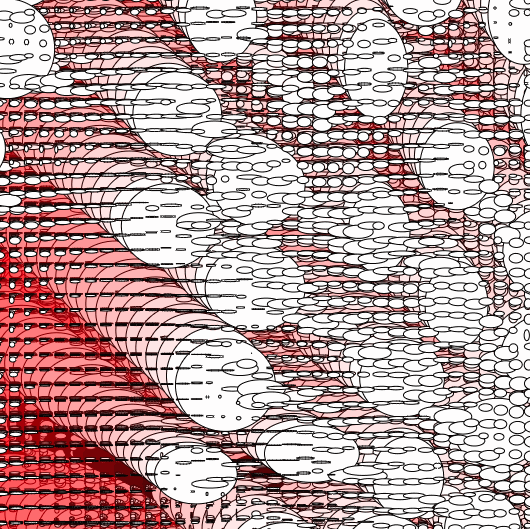

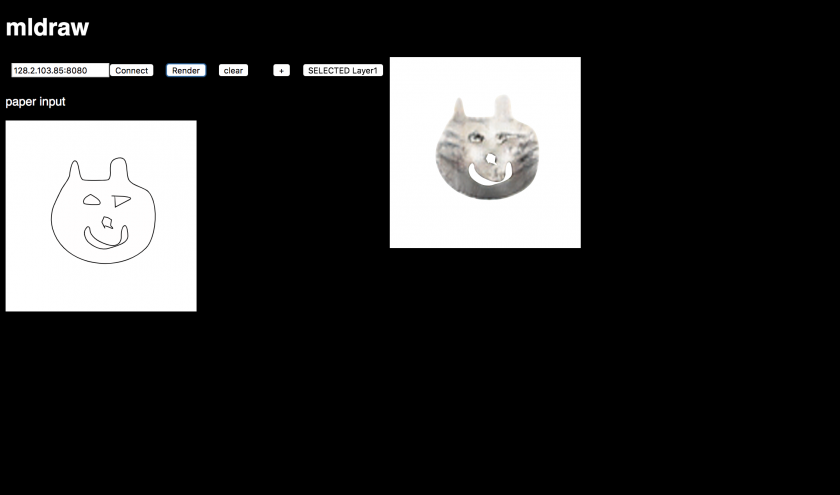

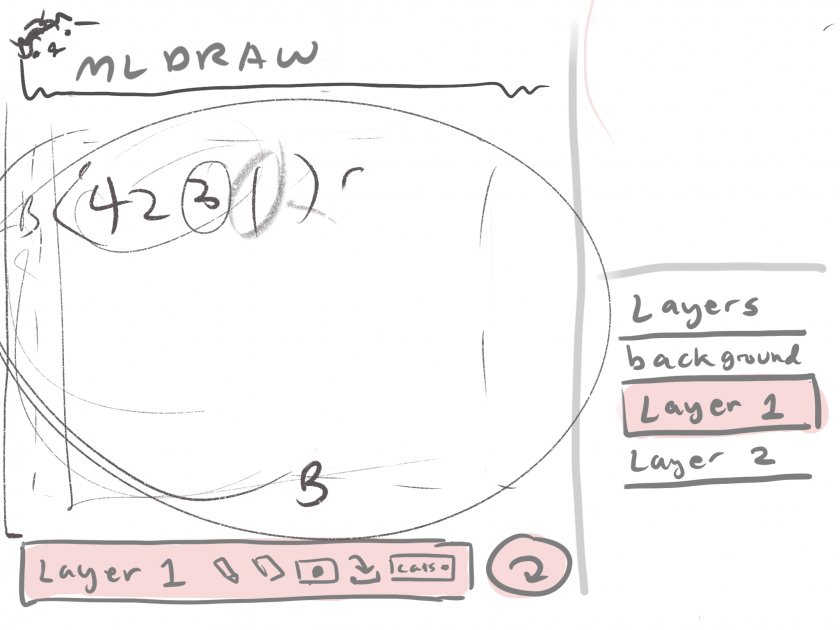

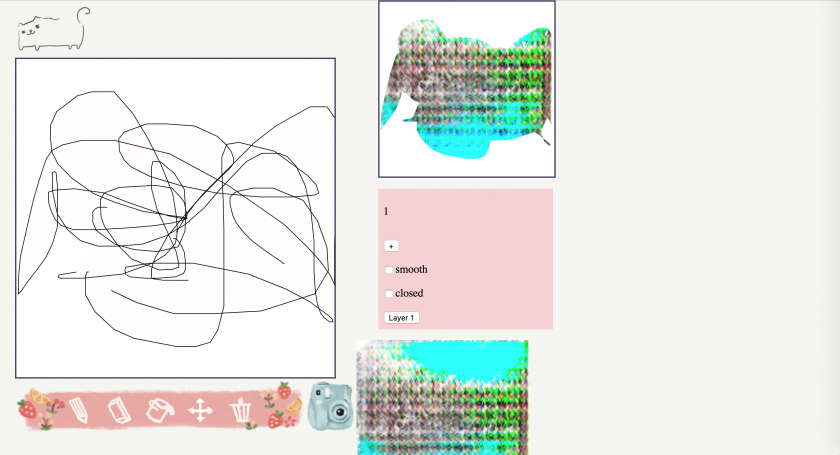

process images

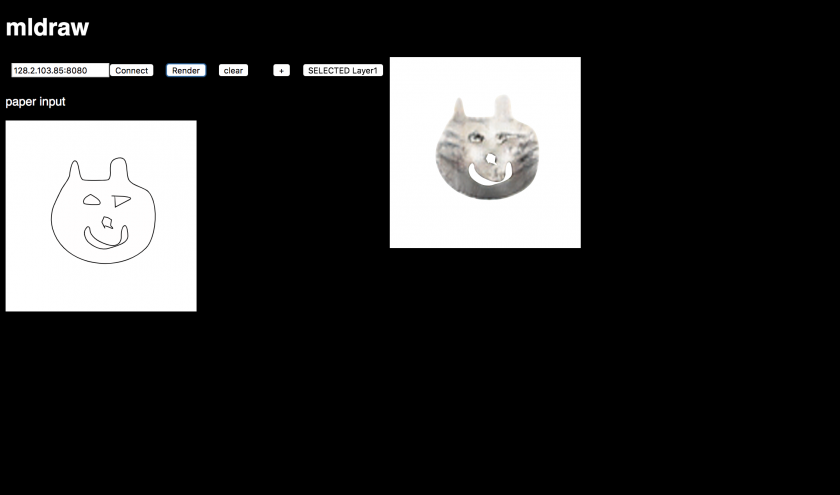

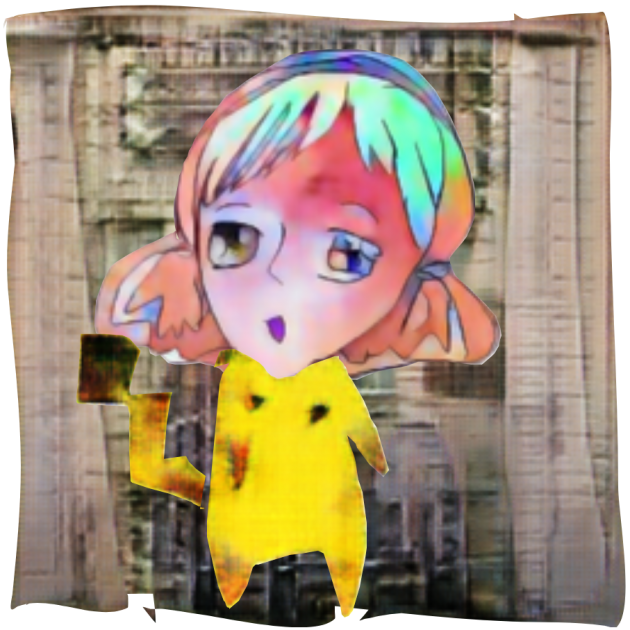

The first image made with Mldraw. Note that this was made with the “shoe” model.

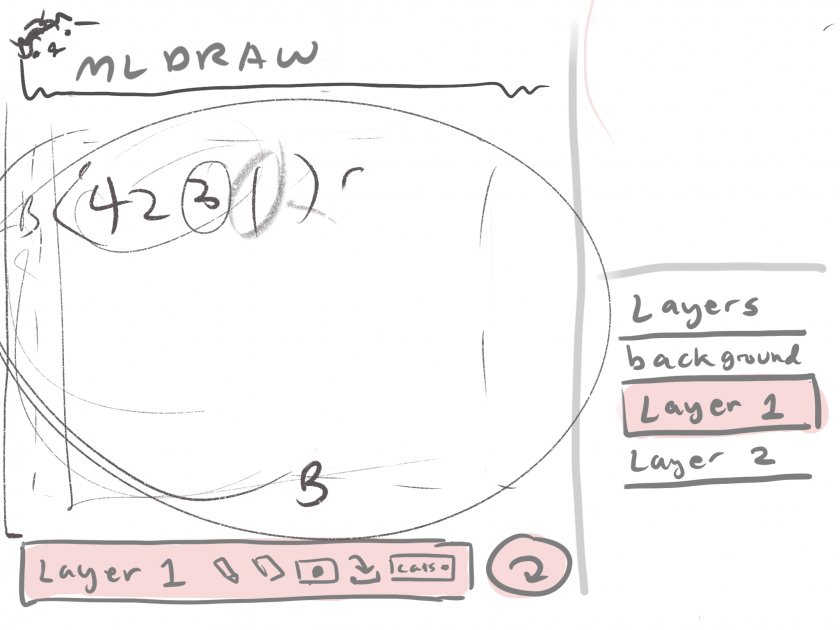

The first sketch of the desired interface.

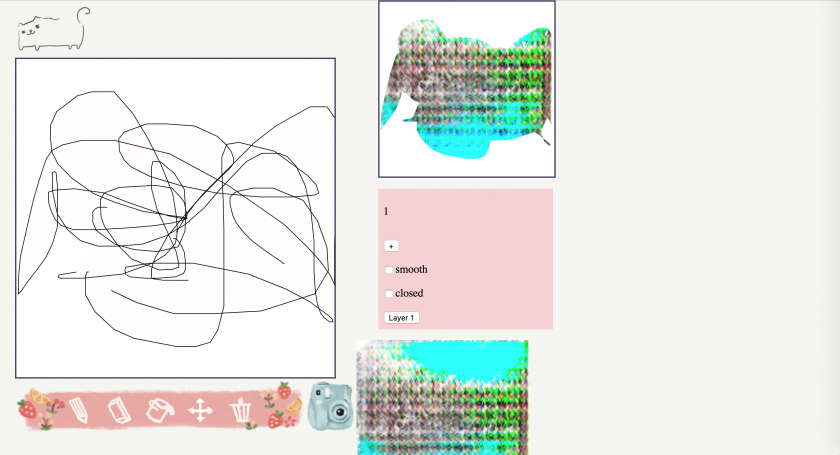

The first implementation of the real interface, with some debug views.

The first implementation of a better interface.

The first test with multiple models, after the new UI had been implemented (we had to wait for the model selector to be implemented first).

The current Mldraw interface.

Our future tutorial mascot, with an IK’d (inverse-kinematics) arm.

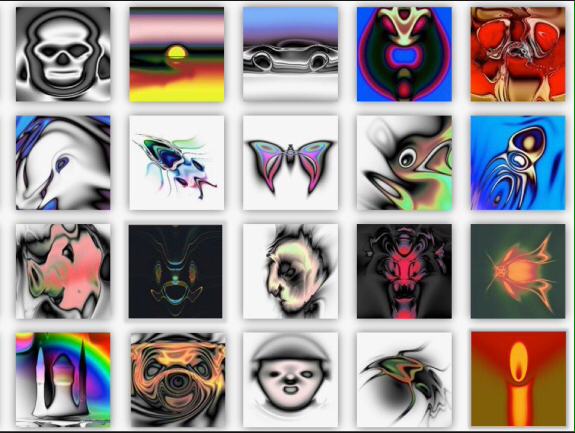

Some creations from Mldraw, in chronological order.