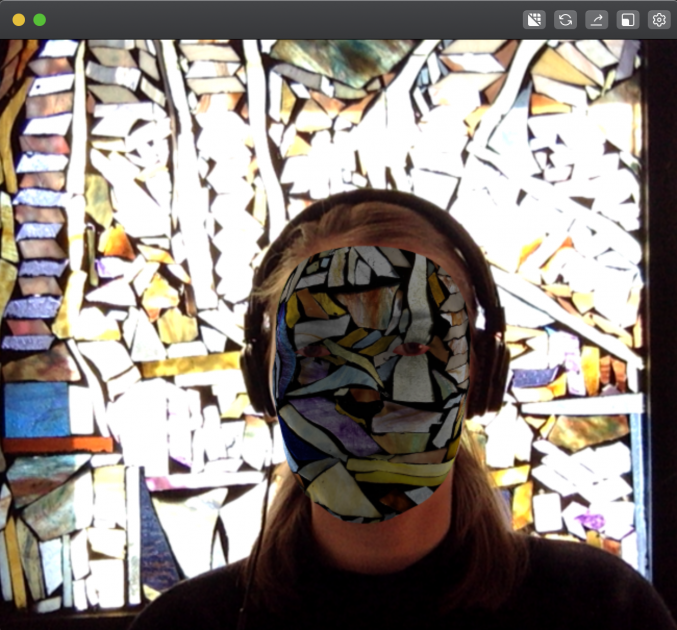

Performative Mask

Overview. From the start, I was interested in doing some work with music videos, and adapted this assignment to my own constraints. I’ve been working with two bands from Montréal on a potential music video and album cover collaboration — this assignment felt like an opportunity to prototype some visual ideas. For the sake of this warm-up exercise, I worked with the song A Stone is a Stone by Helena Deland. She sings about goodbyes and core issues in either a person’s character or in a relationship.

The lyrics made me think of permanence. When you go through life with a specific idea, character trait or person for a long time, you sometimes lose track of their presence. I was interested in working with the idea of fading into one’s environment, having been somewhere or someone for so long that you become one with it. After a while, you can barely be distinguished from the space, metaphorically or physically, that you evolve in.

Ideation Process. My process was hectic, as I had a very hard time thinking of an idea that got me excited. I started by wanting to make an app which erased your face any time you smiled awkwardly in an uncomfortable situation, but decided this kind of work had been overdone in the wearables world. Then I decided to make a language grapher using mouth movements to have a visual representation of texts which had a “Chloe” as the protagonist (as a way to study the word, how other people perceive it in literature, and how that influences personal character). I went through with this idea, and hated it by the end, so I decided to start over from scratch. Hence, the current idea described in the overview.

The hoodies that allow you to hide from uncomfortable situations.

A visualization of the introduction of the character “Chloé” in the novel ‘L’Ecume Des Jours’ by Boris Vian.

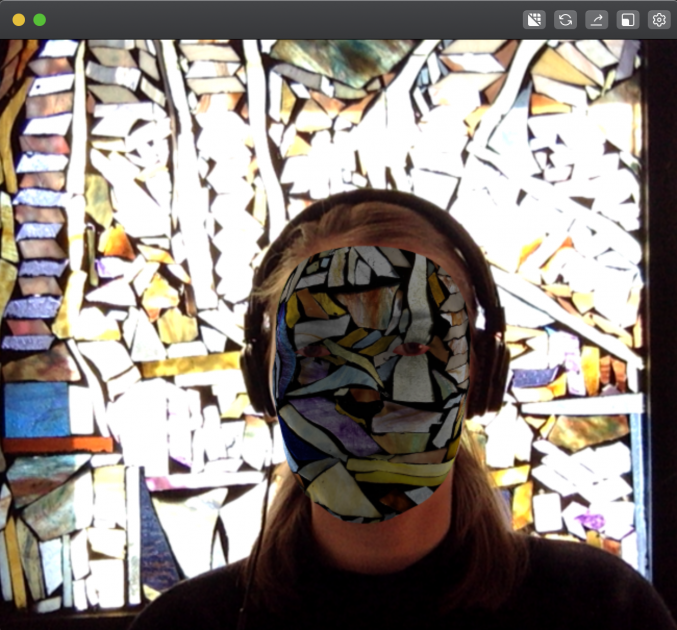

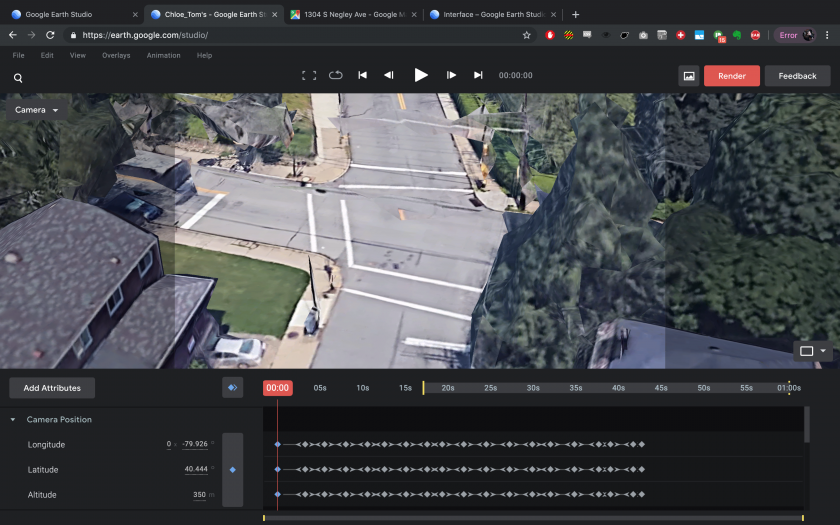

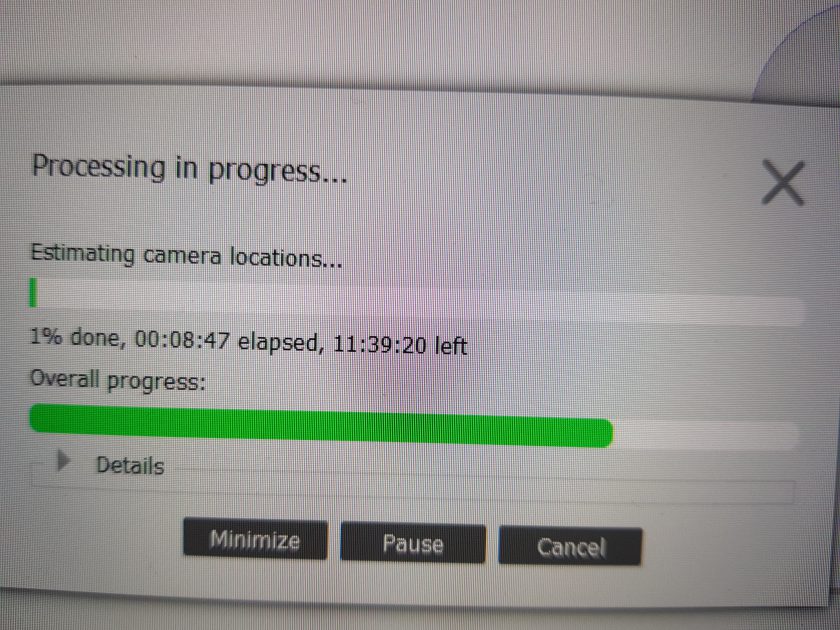

Physical Process. I essentially let the environment inform the content, so the work went in both directions. I researched a couple of backgrounds, photographed them and performed in front of them with my nice camera. I then applied a simple mapping filter, using the aforementioned image, to the prerecorded video. This project didn’t actually require any scripting, since I chose to do it with Spark AR. I originally started doing it in Processing but wasn’t happy with the way it looked. Spark AR had a more advanced mesh tracking option, which allowed for warping around facial features, so I stuck with it.

Testing patterns and functionality.

I proceeded to film a total of 6 scenes, selected by texture and color. I wore clothes that fit the background scheme, and photographed each material before filming myself lip-syncing with a DSLR. I then converted the videos to a smaller format (which was the only import option, sorry for the loss of quality) and layered the mask in Spark AR. The final edit produced the video below, which only features a small chunk of the song.

(I removed the video from YouTube, sorry Golan. I left you a gif though.)

Improvements. With more time, I probably would have:

1) found a way to also include the ears and the neck, which look silly without the mask layer

2) reconsidered my idea, because I felt like the overall effect was uncomfortable and funny, while the song really isn’t

3) found a way to change the scale of my map image, because it didn’t fade as much into the background as I had wanted
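For the third point, one common way to change the apparent scale of a mapped image is to tile it by multiplying the mesh's UV coordinates before sampling. The snippet below is only a rough sketch of that idea in plain Python. It is not Spark AR code, and the names `tile_uv`, `sample` and `tiles` are hypothetical, made up for illustration.

```python
def tile_uv(u, v, tiles):
    """Scale a UV coordinate so the texture repeats `tiles` times across the mesh.

    u and v are assumed to lie in [0, 1). A larger `tiles` value makes the
    pattern look smaller on the face.
    """
    if tiles <= 0:
        raise ValueError("tiles must be positive")
    # Wrap back into [0, 1) so the texture repeats instead of running off the edge.
    return (u * tiles) % 1.0, (v * tiles) % 1.0


def sample(texture, u, v, tiles=1.0):
    """Nearest-neighbour lookup into a 2D grid of pixels (a list of rows)."""
    tu, tv = tile_uv(u, v, tiles)
    rows, cols = len(texture), len(texture[0])
    row = min(int(tv * rows), rows - 1)
    col = min(int(tu * cols), cols - 1)
    return texture[row][col]


# A toy 2x2 "photograph" of the background material.
background = [["a", "b"],
              ["c", "d"]]

print(sample(background, 0.3, 0.3))           # unscaled lookup
print(sample(background, 0.3, 0.3, tiles=2))  # same point, pattern repeated twice
```

With `tiles` greater than 1, the same point on the face lands on a different part of the photograph, so the pattern repeats more often and reads as finer-grained, which might help it sit closer to the scale of the real background.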

