Daito Manabe

Category: LookingOutwards02

Greecus Looking Outwards-2

For this project, I’ve been interested in playing with the idea of creating a lightweight VJ tool. Making a fully functioning VJ tool would be too complex an endeavour for the short time I have to work on this project, but I would like to toy around with the core concepts of transitions, looping, and beat detection.

The first piece of inspiration came from boopy, a unique GIF drawing tool developed by Andrew Benson in collaboration with Giphy Arts. The tool lets users commit drawings to a canvas that records the strokes over time so they can be replayed in GIF format.

The second piece of inspiration came from a piece by Corey Walsh, in which he used Processing to make a visualizer that classified different kinds of drum hits (kicks, snares and flams).

Beat Detection in Processing

My hope is to combine these aspects to create a rather simple but enjoyable VJ application.

dorsek – Looking Outwards – 2

This project, though not technically an art piece, certainly influenced my vision for the DrawingSoftware project.

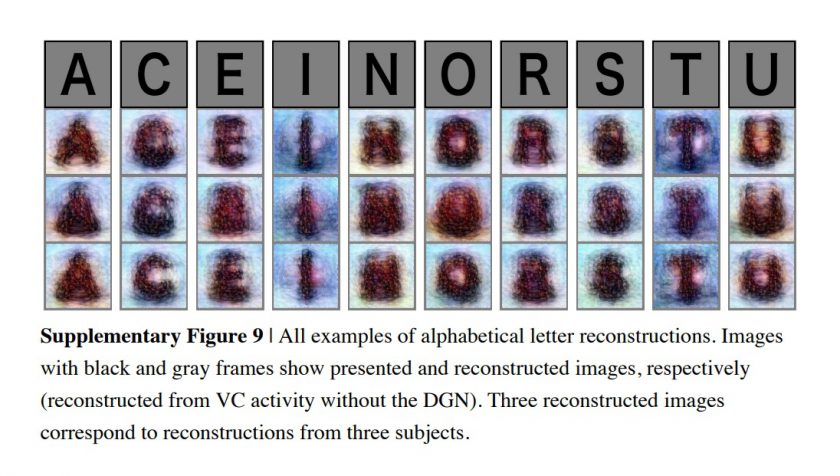

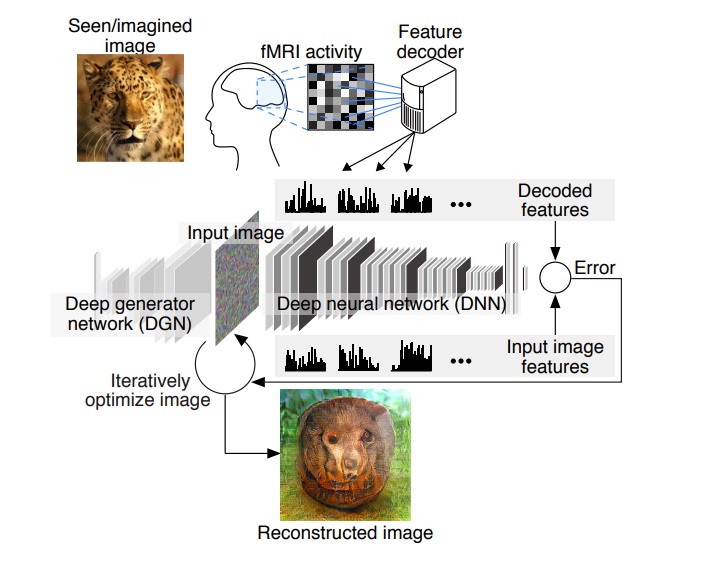

To set the stage a bit: about a year ago, a group of four scientists from Kyoto University’s Kamitani Lab released the results of a research study on using artificial intelligence to decode people’s brain scans. Specifically, they used “deep neural networks” rather than the earlier machine-learning methods that had been applied to this type of recording with some success. The researchers showed their participants natural images, artificial and geometric shapes, and letters of the alphabet for varying lengths of time, and recorded their brain scans at those times. They also recorded scans when participants were told to think of a specific image, or even while looking at several of the images together. According to the researchers, once the brain activity was scanned, they would use a computer program to “de-code” the image, or, as they like to say, “reverse-engineer” it.

What most intrigued me about this project was the fact that brain scans were being used to re-generate imagery, along with the technology they used to accomplish this (which is undoubtedly beyond my understanding and my own capabilities at the moment). Reading about it is partially what inspired me to try to pursue a project that would render your dreams out for you as you slept.

Now – what’s wrong with this project? Well, as a research piece, I can’t point out anything specific, but in general my biggest critique is that this isn’t an art piece. The technology isn’t being used in a way that might challenge how we think about the world, and there’s no opportunity for revelation or new perspectives on the concept behind the project, which seems to detract from its interesting nature. (This could obviously be because they are still developing this new way of processing and re-generating imagery via brain scans.) I also don’t believe it will age well for that reason: once you get past the initial “woah” of the technology, there’s not really anything else there that they’ve provided as brain food (on purpose at least…).

sheep – Looking Outwards # 2

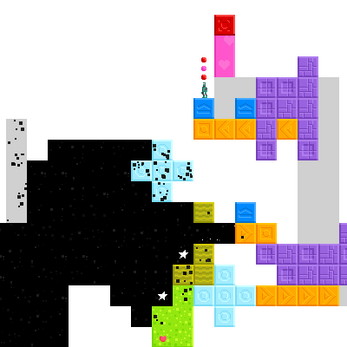

Starseed Pilgrim is a game made by Alexander Martin, Ryan Roth, Mert Bartirbaygil, and Allan Offal, which I just started playing this week. It’s a game that dissects video game literacy, attempting to capture the feeling of not knowing how video games work and to introduce outsiders to new and unfamiliar terrain. Not only does it invite outsiders in, it asks them to change its world through experimentation with abstract tools. Martin says: “Create systems that are interesting to explore, and people will get more out of their own learning than any tutorial would ever give them.” The game has no tutorial and no instructions besides vague poems strewn across its surface. You must pay attention to its interactions; you’ll probably need notes to understand the elements you are playing with. In the end, however, the game asks the player to construct its world, to plan and ultimately make the chain of building blocks that will let their goals be realized. In a sense, there is no single way to solve its puzzles. The game is completely emergent, assigning you the role of gardener, refugee, and builder. Abstract, cellular-automaton-like blocks and corruption are your only real visual guides on the journey.

In the designer’s words: “I really don’t like describing Starseed Pilgrim! But if I don’t, I’m pretty much asking you to buy it based on… images, and that’s worse. It’s a game about discovery and learning, and eventually about mastery of a strange set of tools. It’s been said, and echoed, that it’s a game you have to experience for yourself.”

Martin built Starseed Pilgrim in Flash. It took a year to do: “Starseed Pilgrim had been 90% done for a year and a half before I finally finished it. This wasn’t even a case of ‘the last 10% takes 90% of the work,’ there was honestly almost nothing left to do but to make some tough tiny decisions, write some super easy code, and get sound. Sound ended up being the dealbreaker, though; it was worth the wait.”

I would say it is certainly time-consuming, and I have only just started it. If you are willing to feel like you did the first time you played a videogame, unsure how it worked or how to interact with it, then Starseed Pilgrim should be of interest to you.

Looking Outwards 02 – conye

Atlas – Guided, generative and conversational music experience for iOS

above: documentation video

Atlas is an ‘anti game environment’ that creates music and includes ‘automatically generated tasks that are solved by machine intelligence.’ This app aims to question presence, cognition, and ‘corporate driven automatisms and advanced listening practices.’ The user generates music through their interaction with the app, which asks the user questions from John Cage. According to its description, these questions are ‘focusing on communication between humans’, and the work ‘concentrates on the marginalized aspects of presence…’ The game looks visually stunning, and I appreciate how it attempts to be a different type of game (‘anti game’). I can’t judge how successful it is at being ‘anti-game’ without playing it, but I like how the questions are worked into the gameplay mechanics, and I am a big fan of how clean the visual shapes are.

It was created with JavaScript, p5.js in Swift iOS, and Pure Data. It has an example template using libPd and is available in the App Store for $1.99.

jamodei-lookingOutwards02

Elegy: GTA USA Gun Homicides

CW: animated graphic violence

Link to Actipedia documentation

This project is a self-playing version of Grand Theft Auto V that performs as a data visualization for “a daily reenactment of the total number of USA gun homicides since January 1st, 2018.” One interacts with this work by watching the 24/7 live stream on Twitch. As the camera slowly pans backwards, one sees characters in the video game killing each other every few minutes (or more often, I guess, depending on the day) as a way of marking something that can feel invisible. Elegy is challenging to watch (even though I generally do not find first-person shooters to be that triggering). The mix of mediums, i.e. real gun violence vs. video game gun violence vs. statistics on gun violence, presented in a never-ending slow scroll to chill-but-patriotic music, creates a performance with the viewer that forces into being a complex and unanswerable dialectic around the reality of the large number of gun homicides in the USA and the apparent impossibility of change. This complication of data is, for me, the most fruitful aspect of the work. I find the work’s attempt to repurpose material observations about our reality, and to communicate them in familiar cultural forms in order to visualize the political nature of data, helpful and inspiring.

tli-lookingoutwards02

Matthias Dörfelt’s Face Trade is a work that asks viewers to trade a mugshot for a computer-generated portrait, but the transaction is recorded on a blockchain semi-permanently.

What interests me about this work is how much the artist gets away with asking from the audience. The trade-off is unnervingly real; not only does the work promise to record your mugshot permanently, you can also see the records for yourself at this website. The tension that the work induces is very real and, for me, very hard to separate from the artistic intention of the work. I can’t help but recoil instinctively and accuse the work of being malicious in itself. I think the audaciousness of this piece makes it successful, and I would like to take away some of Dörfelt’s nerve in my future work.

Looking into Dörfelt’s past work reveals a deep practice in generative art and a recent exploration of blockchain as an artistic tool. This helps me contextualize the choice of using computer-generated portraits as the commodity of the transaction. Face Trade’s generated portraits seem like an arbitrary trinket that just fills the description of “commodity which the user trades for” (which may very well be the point), but I can see how these portraits build on top of Dörfelt’s previous generative sketches. It’s interesting that in some ways the viewer is paying their identity to view Dörfelt’s next iteration as a maker.

lumar-lookingoutwards2

I saw this recent work. I thought it was fun to see the Google Draw experiments made tangible and interactive, but… I actually included this piece because I wanted to bring up a potential critique: beyond the physical form just making it easier to exhibit, what does the tangible nature of the machine do for this experience? Does it fundamentally change or enhance the interaction? What the machine is doing is something the digital form can do just as easily. The way the user inputs things here is more or less the same as on the web version (mouse on screen instead of a stiff, vertical pen on paper), where they begin to draw a doodle. The machine tries to guess what it is and ‘autocomplete’ it. When it doesn’t line up or guess correctly, your drawing ends up recreated as a strange hybrid mixed with something visually similar. Do I want to keep the end product? Not really. Do I really cherish the experience? I don’t know; it doesn’t bring much to the table that Google’s web version didn’t already, in terms of getting a sense of how good or bad the AI system behind it has gotten at image/object classification and computer vision.

So what is it that it brings? Is it the experience of seeing a machine collaborate intelligently with you in real time?

Kind of like Sougwen’s work (see below)?

Sougwen Chung, Drawing Operations Unit: Generation 2 (Memory), Collaboration, 2017 from sougwen on Vimeo.

sjang-lookingoutwards02

Sougwen Chung, Drawing Operations Unit (Generation 1 & 2, 2017-8)

Sougwen Chung’s Drawing Operations Unit is the artist’s ongoing exploration of how a human and robotic arm could collaborate to create drawings together. In Drawing Operations Unit: Generation 1 (D.O.U.G._1), the robotic arm mimics the artist’s gestural strokes by analyzing the process in real-time through computer vision software and moving synchronously/interpretatively to complement the artist’s mark-making. In D.O.U.G._2, the robotic arm’s movement is generated from neural nets trained on the artist’s previous drawing gestures. The machine’s behavior exhibits its interpretation of what it has learned about the artist’s style and process from before, which functions like a working memory.

I love how the drawing comes into being through a complex dynamic between the human and machine – the act of drawing becomes a performance, a beautiful duet. There is this constant dance of interpretation and negotiation happening between the two, both always vigilant and aware of each other’s motions, trying to strike a balance. The work challenges the idea of agency and creative process. The drawing becomes an artifact that captures their history of interaction.

There are caveats to the work as to what and how much the machine can learn, and whether it could contribute something more than just learned behavior. As I am painfully aware of the limitations of computer vision, I cannot help but wonder how much the machine is capable of ‘seeing’. To what extent could it capture all the subtleties involved in the gestural movements of the artist’s hand creating those marks? What qualities of the gesture and mark-making does it focus on learning? Does it capture the velocity of the gestural mark, the pressure of the pencil against the paper through conjecture? Are there only certain types of drawings one can create through this process?

It would also be wonderful to see people other than the artist draw with the machine, to see the diversity of output this process is capable of creating. The ways people draw are highly individualistic and idiosyncratic, so it would be interesting to see how the machine reacts and interprets these differences. I would also like to see if the machine could exhibit an element of unpredictability that goes beyond data-driven learned behavior, and somehow provoke and inspire the artist to push the drawing in unexpected creative directions.

Project Links: D.O.U.G._1 | D.O.U.G._2

ngdon-LookingOutwards-2

NORAA (Machinic Doodles)

A human/machine collaborative drawing on Creative Applications:

- Explain the project in a sentence or two (what it is, how it operates, etc.);

NORAA (Machinic Doodles) is a plotter that first duplicates the user’s doodle and then, based on its understanding of what it is, finishes the drawing.

- Explain what inspires you about the project (i.e. what you find interesting or admirable);

I find the doodles, which are from Google’s QuickDraw dataset, very interesting and expressive. They also reveal how ordinary people think about and draw common objects. They’re very refreshing to look at, especially after spending too much time with fine art. However, I always wondered whether they would look even better if they were physically drawn instead of being stored digitally.

I think this project brings out these qualities very well with pen and paper drawings.

I’m also drawn to the machinery, which is elegant visually, and well documented in their video.

- Critique the project: describe how it might have been more effective; discuss some of the intriguing possibilities that it suggests, or opportunities that it missed; explain what you think the creator(s) got right, and how they got it right.

I think the interaction could be more complicated. The current idea of how it collaborates with users is too easy to come up with, and is basically just like a SketchRNN demo. I wonder whether other kinds of fun experiences could be achieved, given that they already have excellent software and hardware, especially since the installation was shown in September 2018, at which point I think SketchRNN and QuickDraw had already been around for a while.

- Research the project’s chain of influences. Dig up the ‘deep background’, and compare the project with related work or prior art, if appropriate. What sources inspired the creator of this project? What was “their” Looking Outwards?

I think they’re mainly inspired by SketchRNN, which is a sequential model trained on line drawings that also have temporal information.

I think creative collaboration with machines has been explored a lot recently. Google’s Magenta creates music collaboratively with users, and there are also all those pix2pix demos that turn your doodles into complex-looking art.

- Embedding a YouTube or Vimeo video is great, but you should also prepare and upload an animated GIF to this WordPress.