Waterline

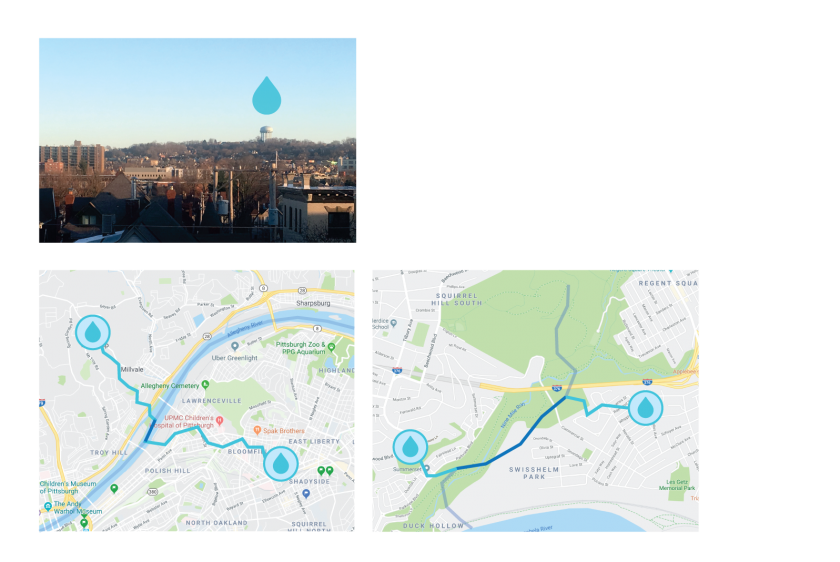

Waterline encourages responsible water use by drawing a line showing where water would flow if you kept pouring it on the ground in front of you.

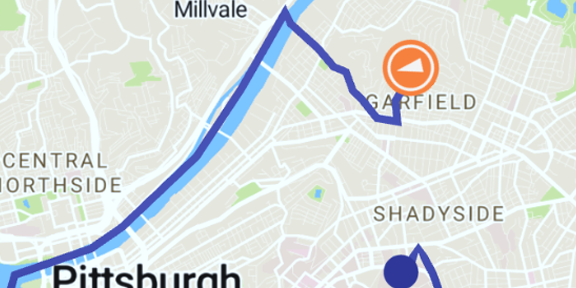

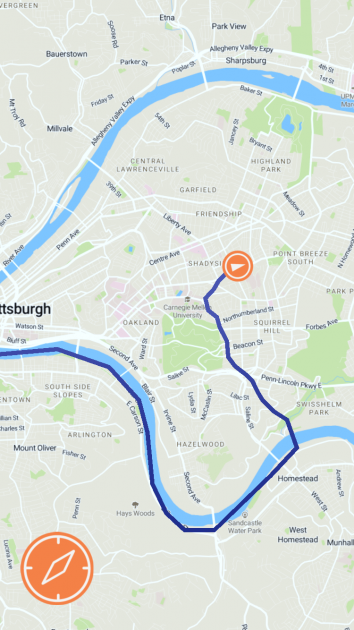

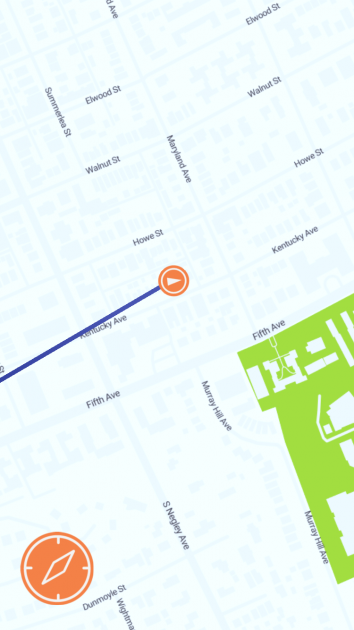

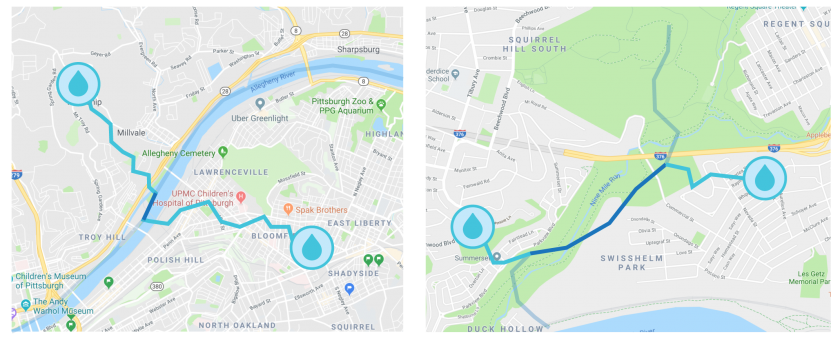

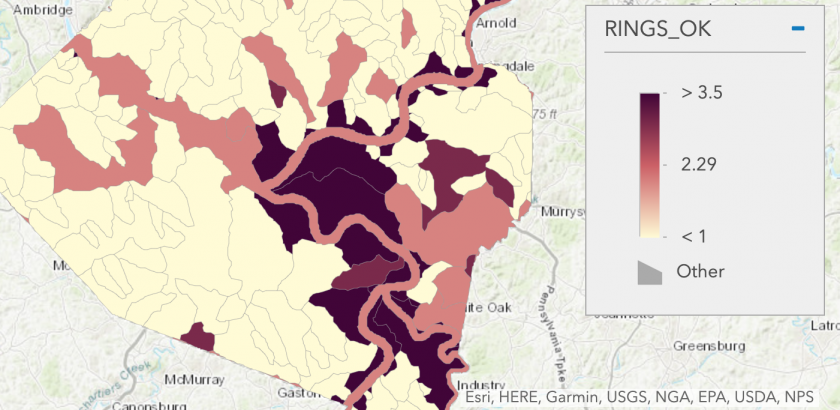

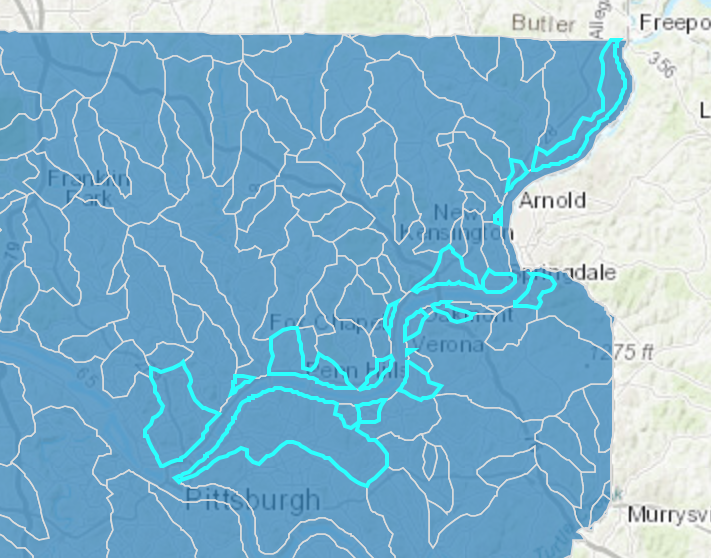

Waterline is an app that shows you where water would go if you kept pouring it on the ground in front of you. Its goal is to reveal all the areas you affect through your daily use of water, and so to encourage habits that protect the natural environment and the public health of every place downstream of you. In Pittsburgh, for example, the app maps the flow of water downhill from your current location to the nearest of the city’s three rivers, and from there continues the line downstream. The app also provides a compass that always points downstream, turning your device into a navigation tool for following the flow of water in the real world. By familiarizing you with the places your wastewater passes through, Waterline encourages you to adjust your use of potential pollutants and your consumption of water to better protect them.
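A compass that "always points downstream" needs two numbers: the bearing from your position to the next point on the flow line, and the direction the device is currently facing. The sketch below is a minimal illustration under those assumptions, not Waterline's actual Unity code. It uses the standard great-circle initial-bearing formula; the function names `initial_bearing` and `needle_angle` are hypothetical, and the device heading is assumed to come from the phone's compass (in Unity, for example, `Input.compass.trueHeading`).

```python
import math


def initial_bearing(lat1, lon1, lat2, lon2):
    """Great-circle initial bearing from point 1 to point 2.

    Inputs are in decimal degrees; the result is degrees clockwise
    from true north, normalized to the range [0, 360).
    """
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    x = math.sin(dlon) * math.cos(phi2)
    y = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlon)
    return (math.degrees(math.atan2(x, y)) + 360.0) % 360.0


def needle_angle(bearing_to_target, device_heading):
    """Angle (degrees, clockwise) to rotate an on-screen needle so it points
    at the target, given the device heading measured from true north."""
    return (bearing_to_target - device_heading) % 360.0
```

Each frame, the app would compute `needle_angle(initial_bearing(lat, lon, next_lat, next_lon), heading)` for the next downstream point and rotate the needle by that amount. Subtracting the heading is what keeps the needle fixed on the target as you turn the device.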

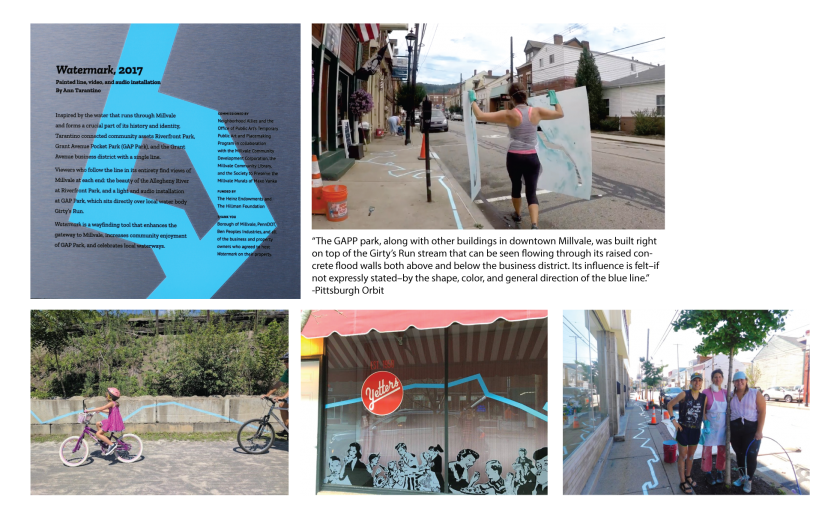

Waterline was inspired by the Water Walks events organized by Zachary Rapaport, which began this spring, and by Ann Tarantino’s Watermark in Millvale this past year. Both projects aim to raise awareness of the role water plays in our lives, with a focus on critical issues that affect entire towns in Pittsburgh.

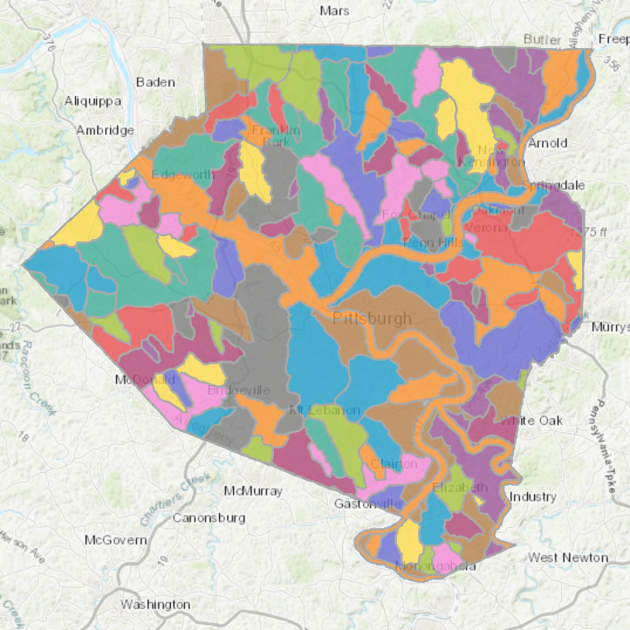

I developed Waterline in Unity using the Mapbox SDK. Most of the work went into loading GeoJSON data on the Pittsburgh watersheds and using that data to determine the line along which water would flow from your current location to the nearest river. The method Waterline currently uses to determine this line is inaccurate, so for now the line works mostly as an abstract path to the river.
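To make the "abstract path to the river" concrete, here is a deliberately rough sketch, not the author's method: it parses river geometries from a GeoJSON FeatureCollection, jumps in a straight line to the nearest river vertex, and then follows that river's remaining vertices. It assumes (hypothetically) that each river's coordinates are stored in upstream-to-downstream order, and, like the app's current approach, it ignores terrain, so the first leg is not a true downhill flow path. The names `haversine_m`, `river_lines`, and `path_to_river` are illustrative. Note that GeoJSON (RFC 7946) stores positions as `[longitude, latitude]`, so this block uses `(lon, lat)` order throughout.

```python
import json
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


def haversine_m(lon1, lat1, lon2, lat2):
    """Great-circle distance in meters between two (lon, lat) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def river_lines(geojson_text):
    """Yield each river line as a list of (lon, lat) tuples.

    Handles LineString and MultiLineString features; other geometry types are skipped.
    """
    data = json.loads(geojson_text)
    for feature in data.get("features", []):
        geom = feature.get("geometry") or {}
        if geom.get("type") == "LineString":
            yield [tuple(c[:2]) for c in geom["coordinates"]]
        elif geom.get("type") == "MultiLineString":
            for part in geom["coordinates"]:
                yield [tuple(c[:2]) for c in part]


def path_to_river(lon, lat, geojson_text):
    """Straight hop to the nearest river vertex, then follow that river downstream.

    Assumes each river's vertices run from upstream to downstream.
    Returns a list of (lon, lat) points starting at the user's position.
    """
    best = None  # (distance in meters, river line, vertex index)
    for line in river_lines(geojson_text):
        for i, (vlon, vlat) in enumerate(line):
            d = haversine_m(lon, lat, vlon, vlat)
            if best is None or d < best[0]:
                best = (d, line, i)
    if best is None:  # no river geometry found
        return [(lon, lat)]
    _, line, i = best
    return [(lon, lat)] + line[i:]
```

Snapping to the nearest vertex rather than the nearest point on a segment keeps the sketch short; a more faithful version would project onto segments and, more importantly, trace the flow downhill using elevation or watershed-boundary data instead of a straight line.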

Waterline’s success, I believe, should be measured by its ability to meaningfully connect its users to the water they use in their daily lives and to encourage more responsibility for their use of water. Ideally, this app would serve as a tool for organizations like the Living Waters of Larimer to quickly educate Pittsburgh residents about their impact, through water, on the environment and public health, so that the organization can have a stronger base for accomplishing its policy changes and sustainable urban development projects. I think this project is a successful first step toward quickly visualizing your impact on water, but it still needs to connect people more specifically to their watershed and to answer the question: what can I do now to protect the people downstream of me?

Thank you, Zachary Rapaport and Abigail Owen, for teaching me about the importance of water and watersheds in my life.

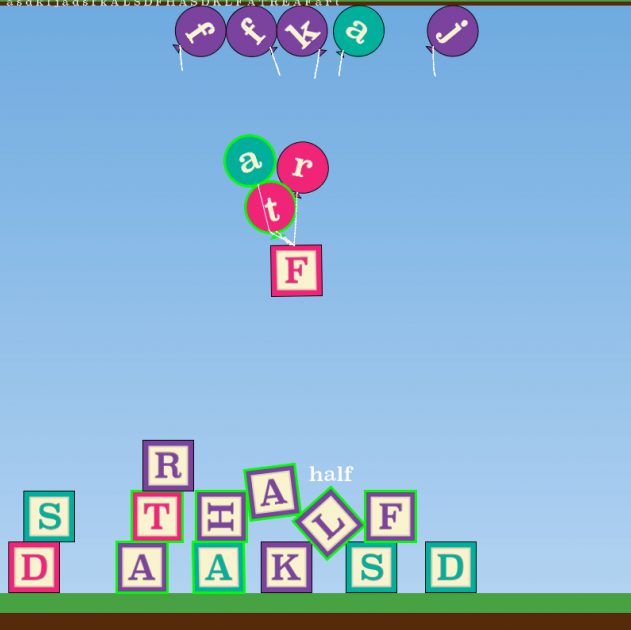

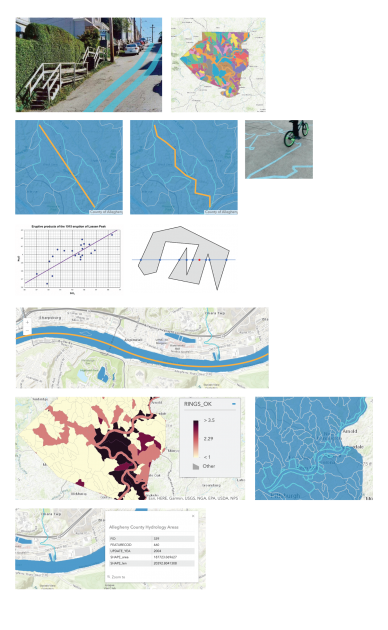

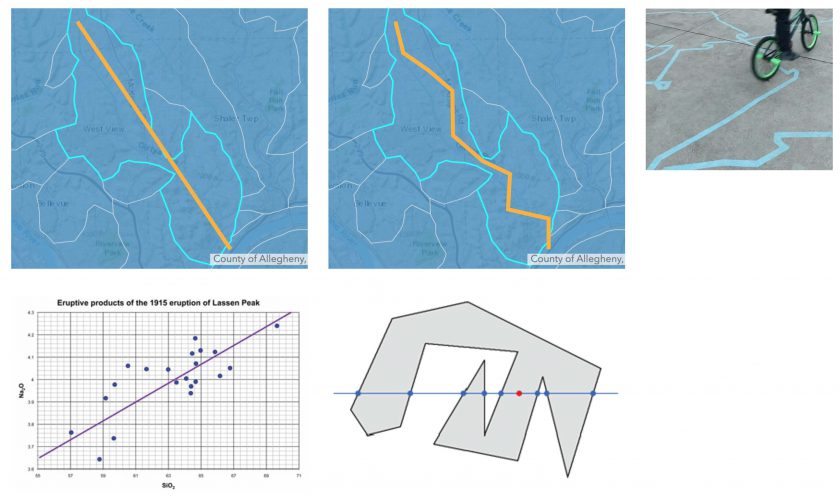

From my sketchbook, visuals and notes identifying technical problems to solve:

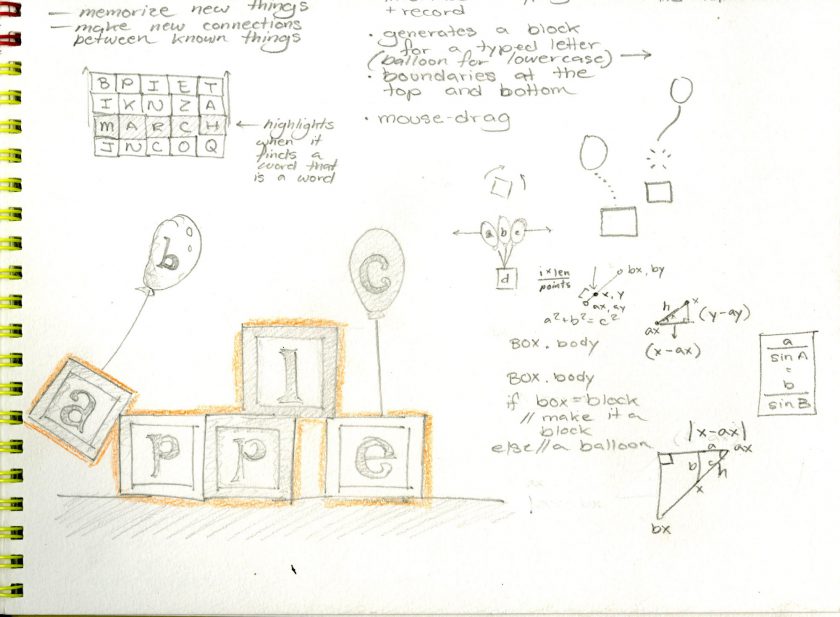

From my sketchbook, preliminary visual ideas for the app:

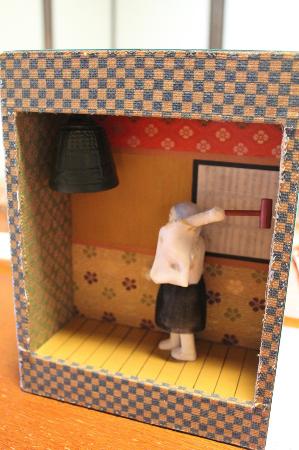

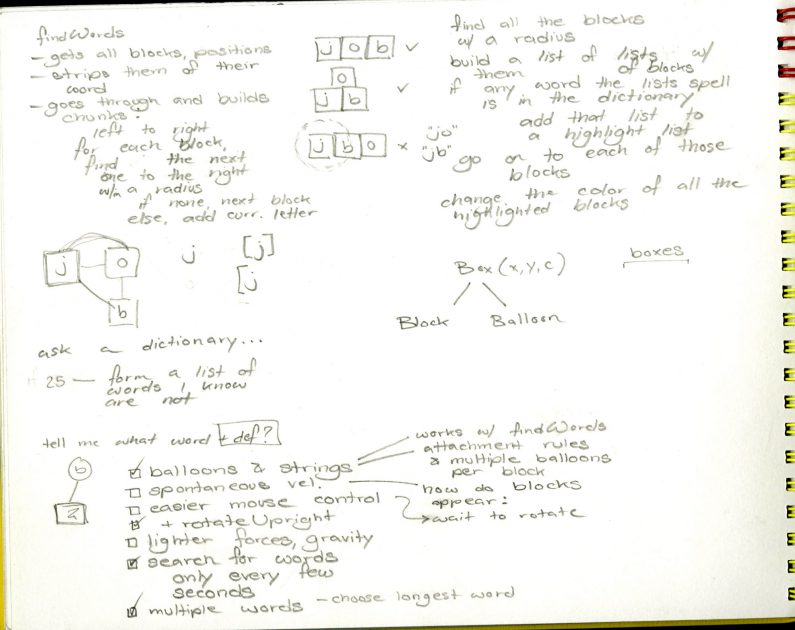

From my sketchbook, notes on Ann Tarantino’s Watermark:

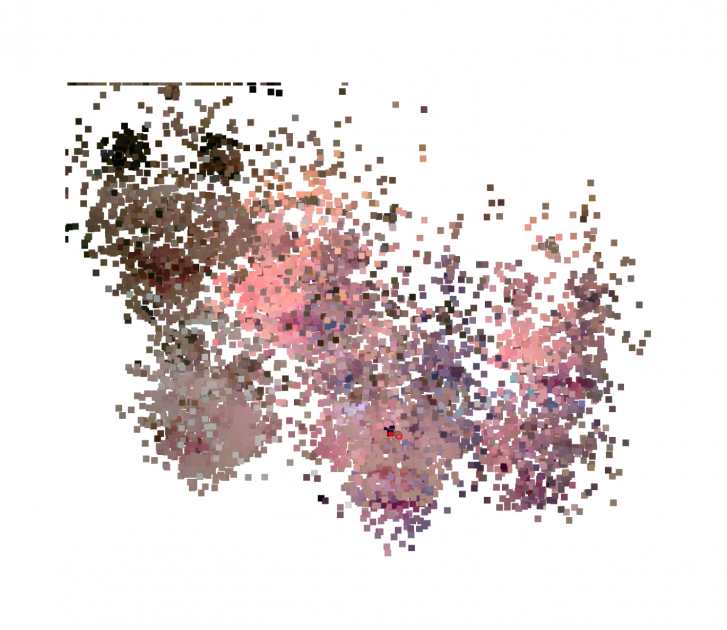

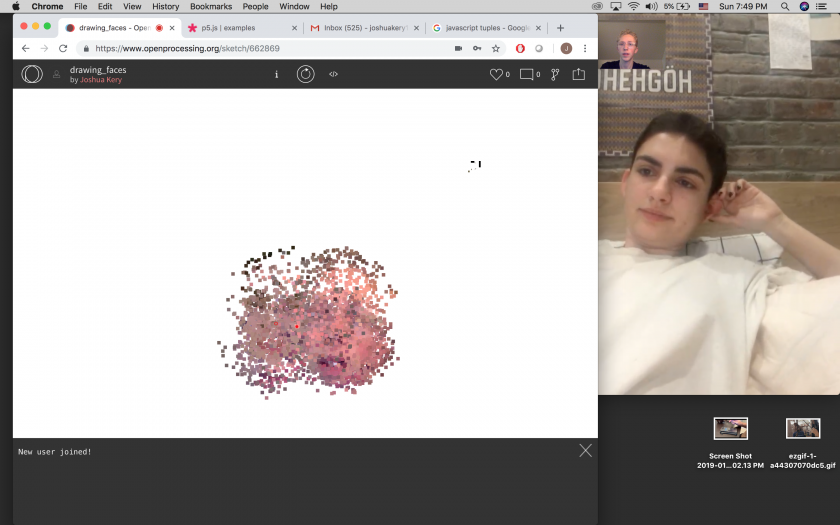

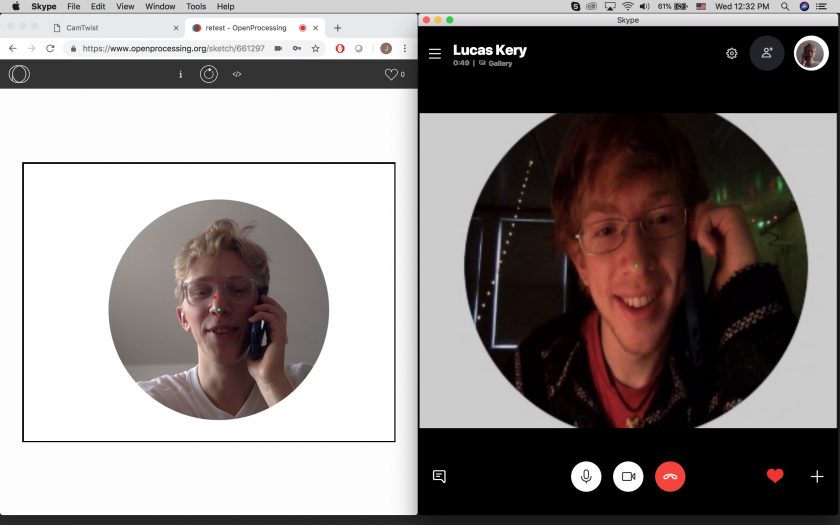

This project exchanges pixel information with your friend (in place of or in conjunction with video chat) and slowly draws your faces to the screen.