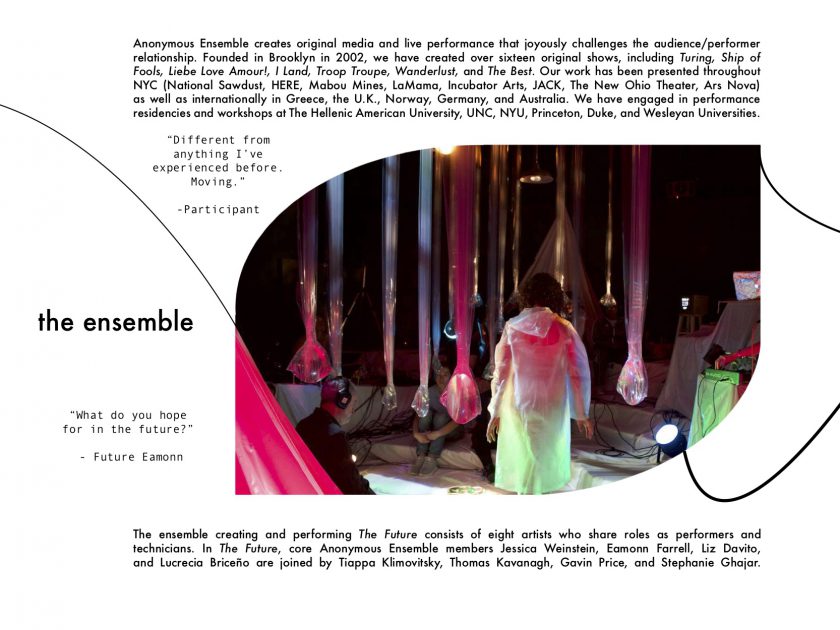

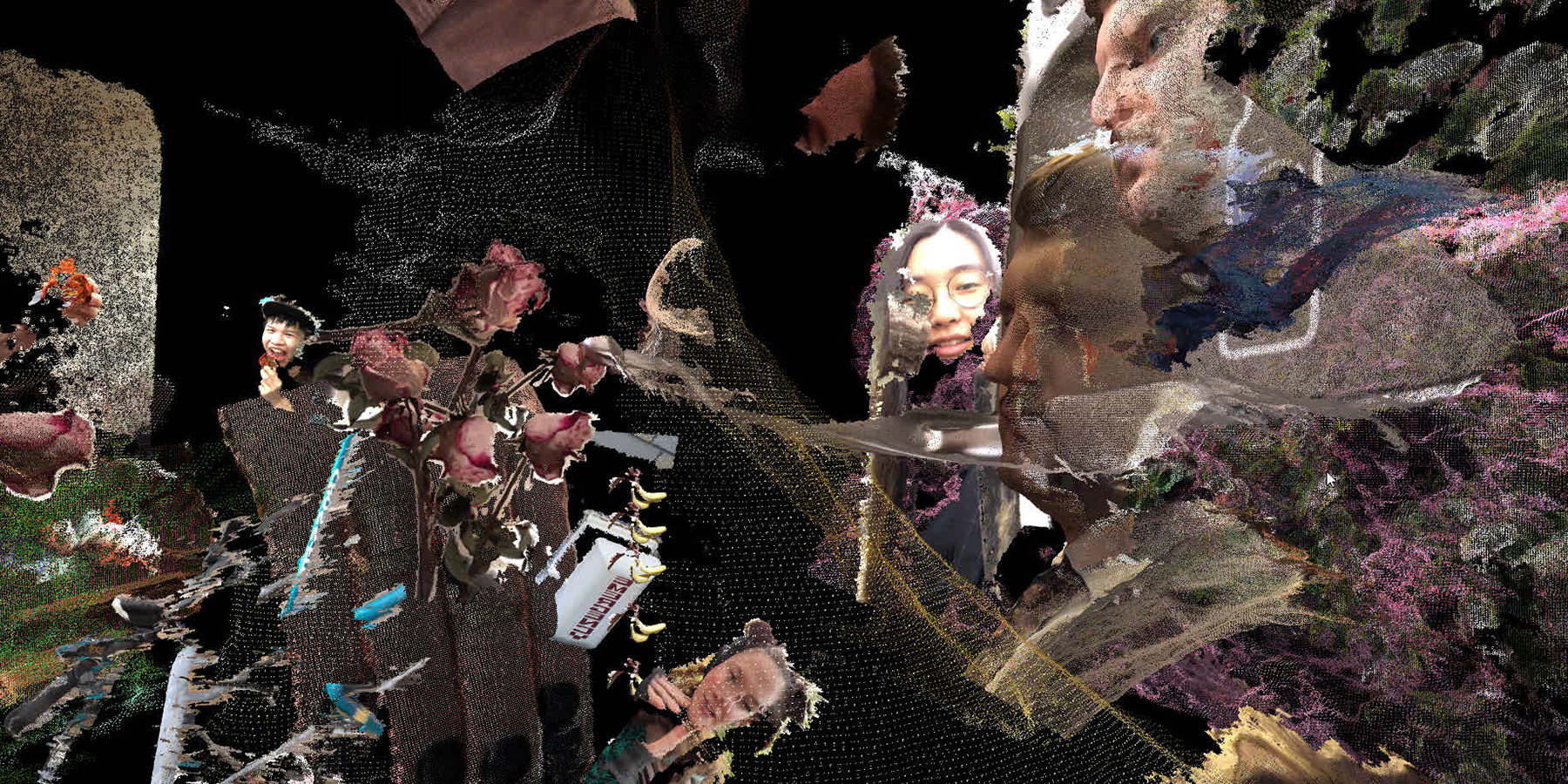

Point Cloud Gardens

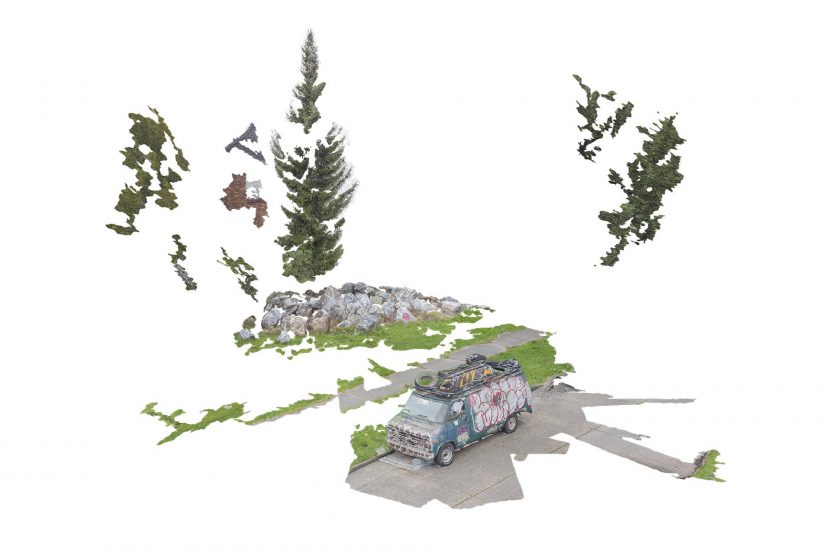

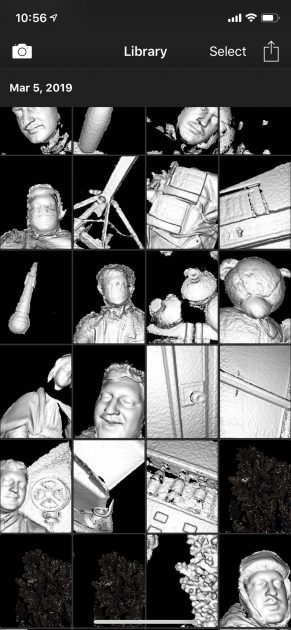

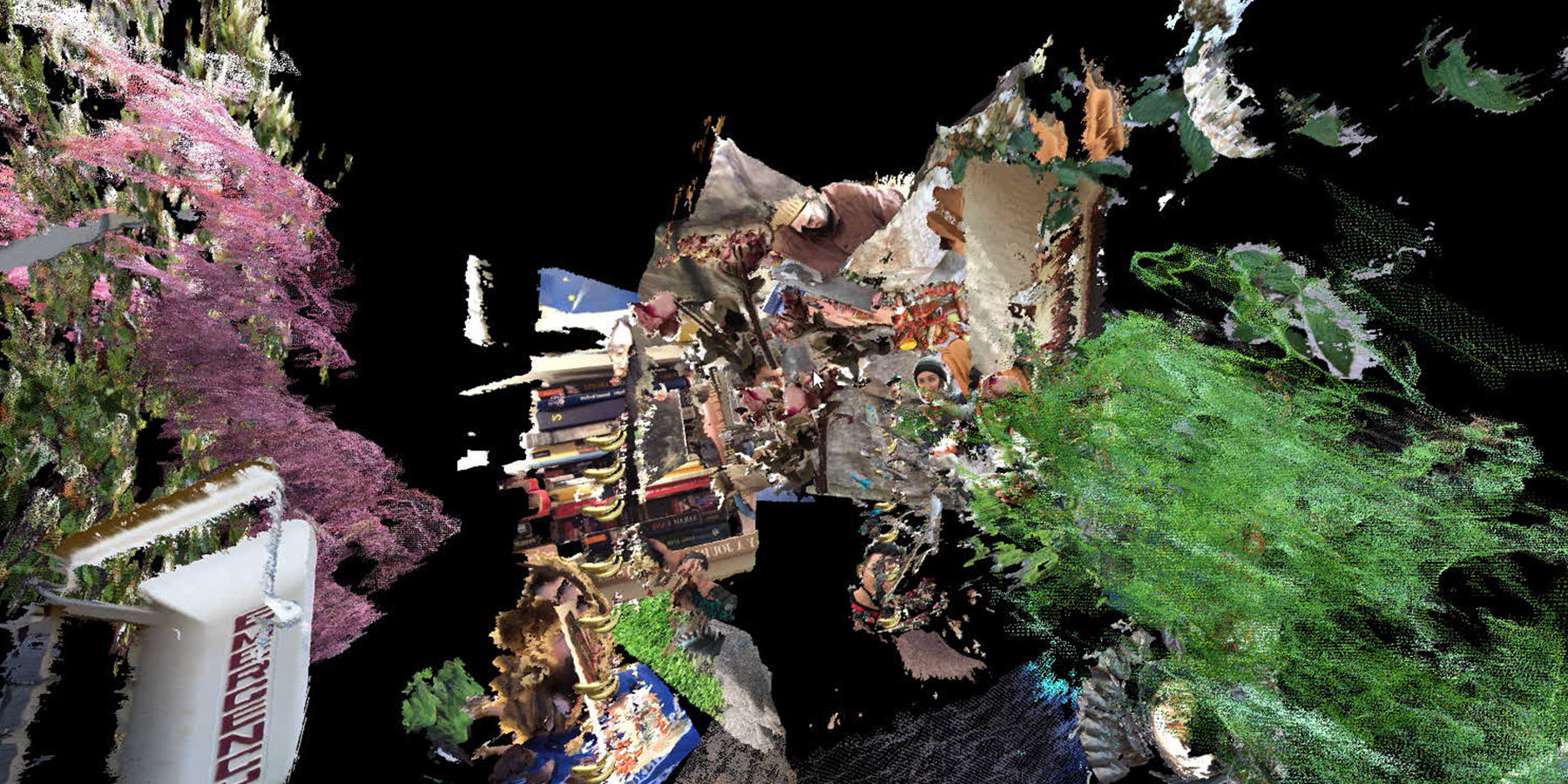

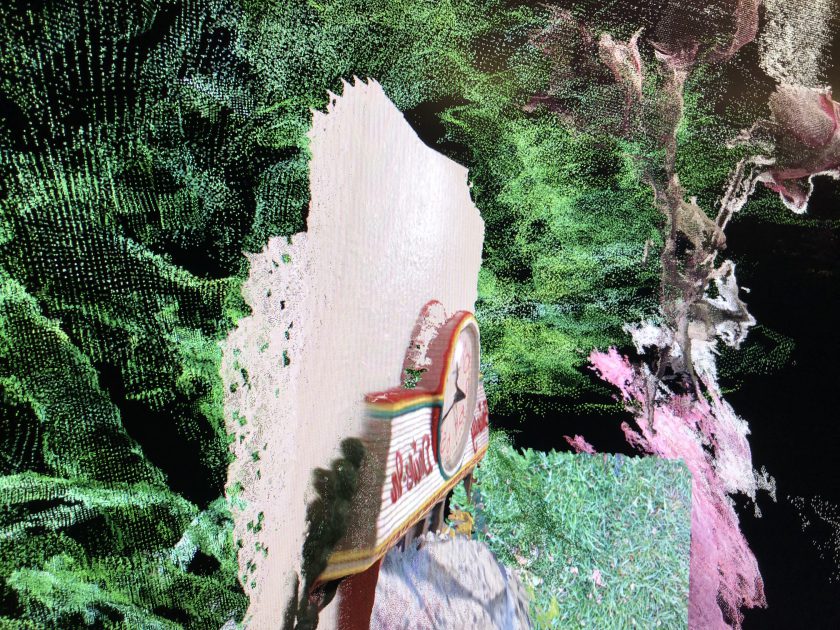

A collection of depth-capture collages from daily life arranged into an altar for personal reflection and recombination.

Overview:

This project set out to take the drippy tools of depth capture and turn them into a means of supporting a daily drawing practice with point clouds. As I worked on it, ideas of rock gardens and altars came to me from a desire to create personal, intimate spaces for archive, arrangement, and rest. How can I have the experience of my crystal garden in my pocket? A fully functional mobile application is the next step of this project. What are the objects in one’s everyday depth garden? For me, they were mostly my friends and interesting textures. What would you like to venerate in your everyday?

Narrative:

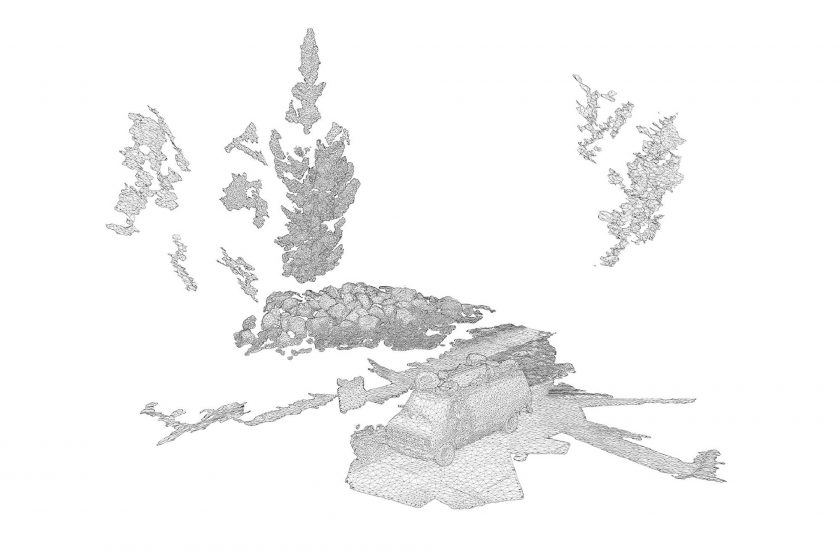

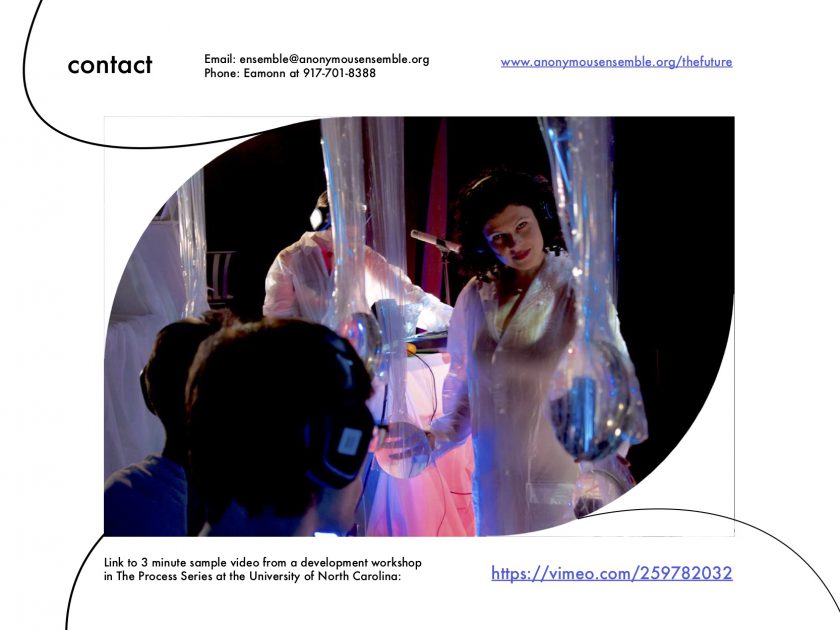

This project was a continuation of my drawing software, with the aim of activating the depth camera now included in new iPhones as a tool to collage everyday life: to draw a circle around a segment of reality and capture the drippy point clouds for later recombination in a 3D/AR-type space.

In this version, I narrowed the scope to creating a space that could be navigated in 3D, one that would allow viewers to ‘fly’ through the moments captured from life. In a world with so much noise and image overload, I wanted to create a garden space that would allow people to collect, arrange, and take care of reflections from their everyday, and to traverse senses of scale and arranged amalgamations outside of our material reality.

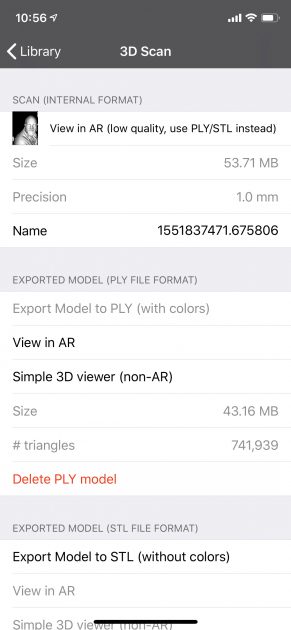

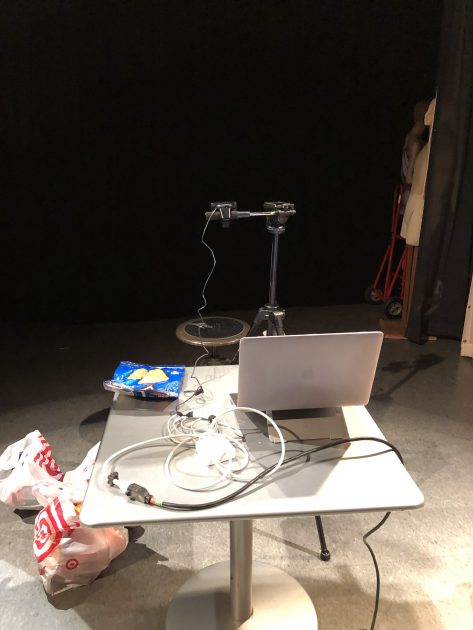

In developing this project, the main challenge was getting the scans in .PLY format from the app Heges into my Unity-based driving space. The final pipeline was to use Heges to capture the scan in an unknown PLY format, then to bring it into MeshLab to reduce the amount of data and convert it to the binary little-endian PLY format that Keijiro Takahashi’s PCX Unity package needs in order to work. From there I could start to collage and build space in Unity that could then be explored during gameplay mode.
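To make the conversion step concrete, here is a minimal sketch of what MeshLab was doing for me, written in Python. It is not the pipeline I actually used, and it rests on several assumptions: that the input is an ASCII PLY whose vertex rows begin with `x y z red green blue` in that order, that keeping every n-th point is an acceptable stand-in for MeshLab's decimation, and that float positions with 8-bit colors are what the Unity importer wants. The function name `ascii_ply_to_binary` and the `stride` parameter are made up for illustration.

```python
import struct


def ascii_ply_to_binary(src_text, stride=1):
    """Convert an ASCII PLY point cloud to binary little-endian PLY bytes.

    Assumes each vertex row starts with: x y z red green blue.
    Keeps every `stride`-th point as a crude form of decimation.
    """
    lines = src_text.splitlines()
    if not lines or lines[0].strip() != "ply":
        raise ValueError("input does not start with a PLY header")

    # Locate the end of the header and read the declared vertex count.
    end = next(i for i, line in enumerate(lines) if line.strip() == "end_header")
    header = [line.strip() for line in lines[:end]]
    if "format ascii 1.0" not in header:
        raise ValueError("this sketch only reads ASCII PLY input")
    count = next(int(h.split()[2]) for h in header if h.startswith("element vertex"))

    # Read the vertex rows, then thin them out.
    rows = [line.split() for line in lines[end + 1:end + 1 + count]]
    kept = rows[::stride]

    out_header = "\n".join([
        "ply",
        "format binary_little_endian 1.0",
        f"element vertex {len(kept)}",
        "property float x",
        "property float y",
        "property float z",
        "property uchar red",
        "property uchar green",
        "property uchar blue",
        "end_header",
    ]) + "\n"

    # "<" means little-endian with no padding: 3 floats + 3 bytes = 15 bytes per point.
    body = b"".join(
        struct.pack("<fffBBB", *map(float, r[:3]), *map(int, r[3:6]))
        for r in kept
    )
    return out_header.encode("ascii") + body
```

Used on a file, this would look something like `open("garden.ply", "wb").write(ascii_ply_to_binary(open("scan.ply").read(), stride=4))`, where both file names are placeholders. A real scan may carry extra properties such as normals or alpha, or may already be binary, in which case the header would need to be parsed property by property rather than assumed.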

While I was happy with the aesthetics of the result, I wanted to take this project much further in the following ways. I wanted the process described above to happen ‘offscreen’ in one click. I also wanted the garden space to be editable as well as traversable. Lastly, in the stretch-goal version, I wanted it to be a mobile application that could be carried around in one’s pocket. As I continue to explore depth capture techniques and their everyday applications, these are the steps I will strive towards.

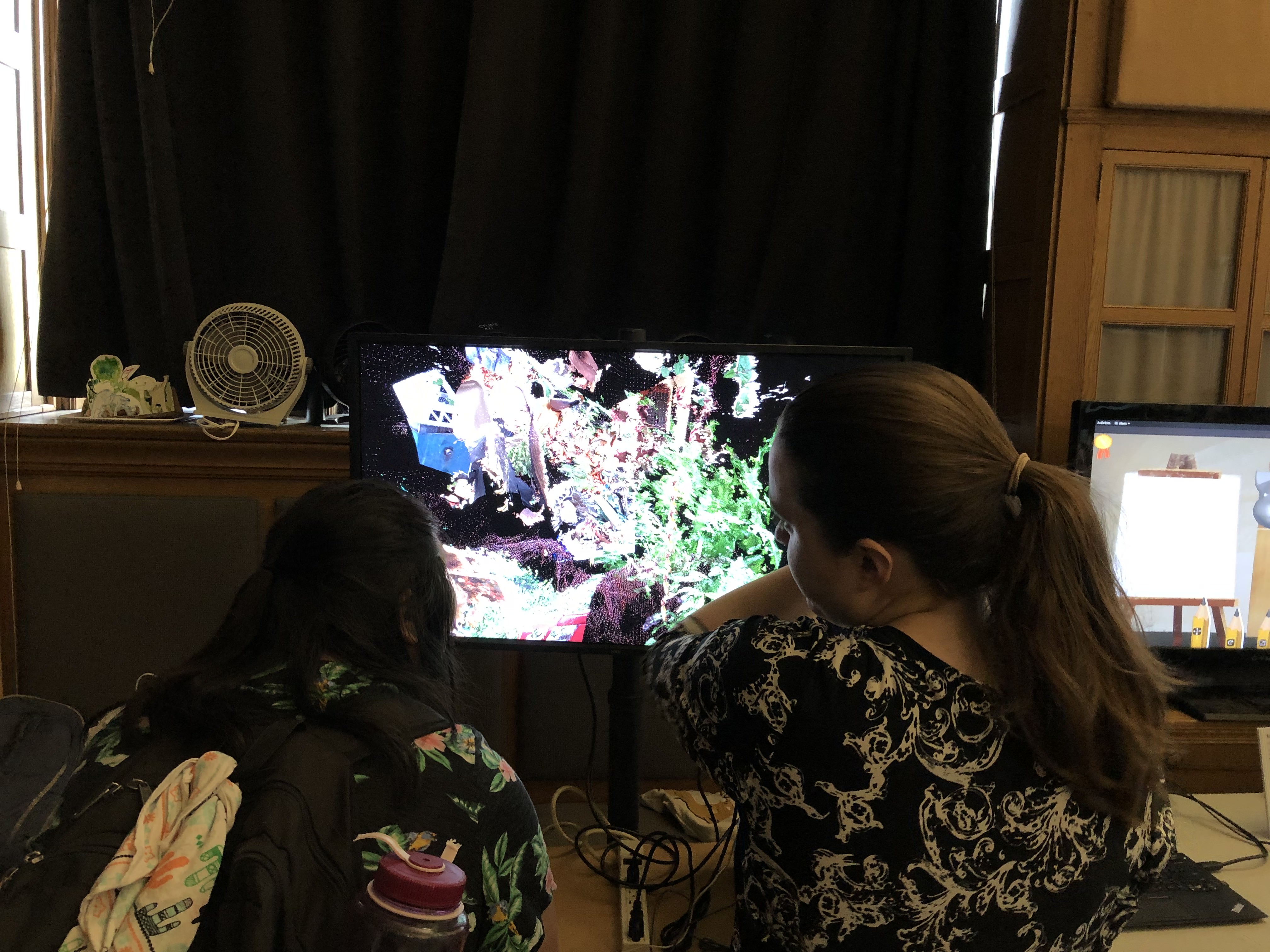

Above is video documentation of a fly-through of the point cloud garden.