Facial mapping to song stem control

Category: Mask

a & conye — DrawingSoftware

For this project, I collaborated with a, and our documentation can be found here: http://golancourses.net/2019/a/03/06/conye-a-drawingsoftware/

Greecus – Mask

For this project I thought a good deal about what a mask is and the different ways that masks are used. At its core, I found, a mask can have two purposes. A mask can obscure the wearer, keeping his or her features and emotions a secret (e.g. ski masks). I began to think of these as utilitarian masks. A mask can also allow the wearer to embody someone’s presence or take on a persona. At first when I thought about this kind of mask I considered masks that are used in ceremonies and performances (e.g. Kabuki theater and traditional African masks), where a performer puts on the mask and loses himself or herself in someone else. After thinking some more about it, I realized that many people put on masks every day not to be like someone else, but rather to feel more like themselves.

After thinking about that for some time I began to draw inspiration from these different kinds of masks. I began to think about how deeply cultural masks are. The way that a culture’s masks are designed and created reflects the aesthetic standards of the culture from which they stem. Therefore it felt wrong to create a mask based on a culture to which I did not belong. That became the seed for my assignment, because after I came to that realization I began looking for a way that I could use the common visual language of these different masks to create one that belonged to me.

One common aspect of the visual language that I found in masks was exaggerated features, and so I used deep reds and yellows to convey emotion while also maintaining a distance from fully understanding the emotion by not using a traditional representation of a face on the mask.

In my mask, I used a design showing a QR code as the most prominent element because I liked how it alluded to this idea that in our data-driven culture a computer-readable symbol linking to my social media could be just as representative of my identity as my face is.

For the project I used Kyle McDonald’s FaceOSC implementation and Processing.

sheep – mask

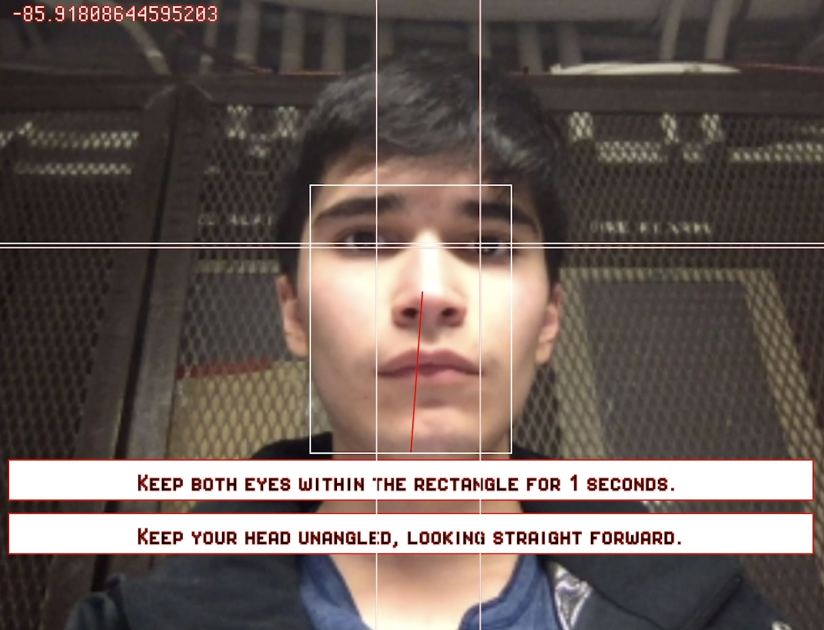

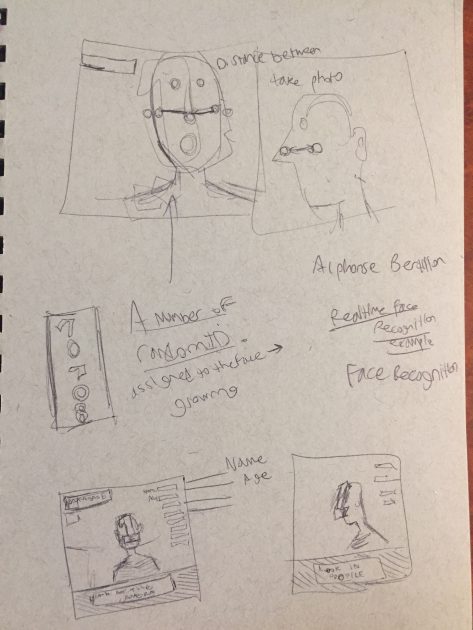

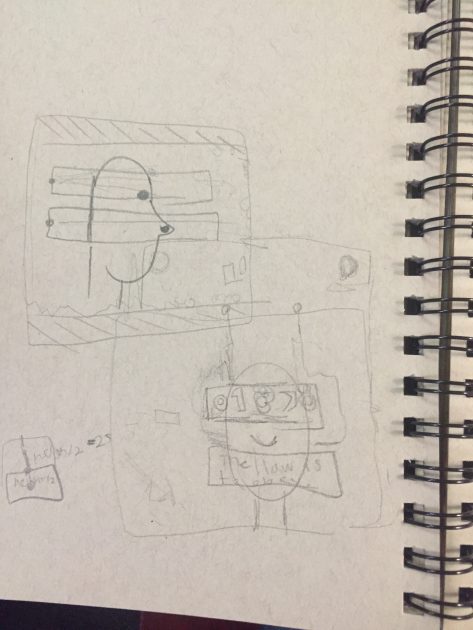

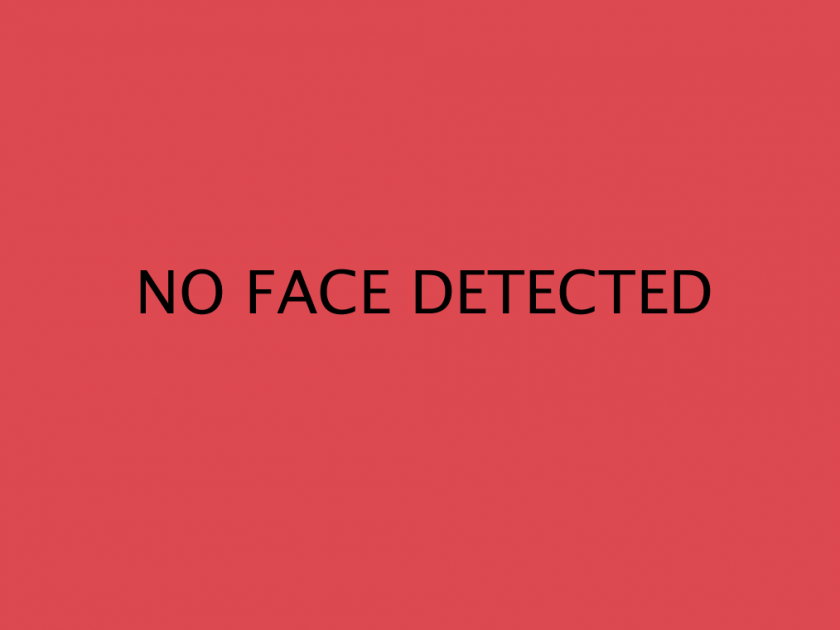

This is called Bertillon’s Dungeon. It’s designed to be a small interactive piece about surveillance and facial recognition. I was thinking about glut and the importance to business of sourcing faces as often as possible. I was thinking of mugshots and how they were used to profile criminals by their inventor, Bertillon, until DNA testing outmoded them. Yet we still use mugshots as a shorthand for being in the system. Though this was a small project, simple in nature, I reason that it could be expanded into a smaller part of a larger story about facial recognition software. I also think it was important in my performance to try to hide my face when the pictures started getting taken, but to be sort of dazed and confused before the realization. I wanted to be someone who understood the motive behind the taking of the pictures (most likely to be used in court for incrimination), but was still conditioned to respect authority (even if they are trying to log into something they shouldn’t be).

The sound wasn’t done in the p5.js sketch. It was added for the documentation in Logic. I would want to improve this by having the sound play in the browser.

Link to interaction: perebite.itch.io/

GIFs:

Video:

Screenshot:

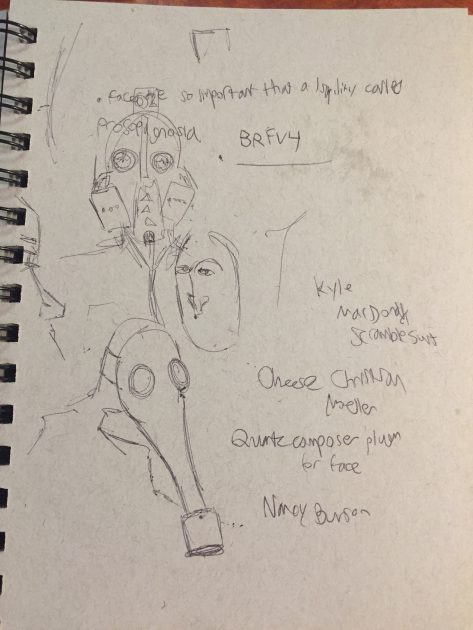

Process:

I started by thinking about a gas mask in which obvious breathing was needed to stay alive: a melding between human and machine. This eventually became a number emblazoned on the head, which eventually became the assigning of an ID. I was initially thinking of also having real-time facial recognition (or approximations), but this was going to take too much time. The idea of a multi roll of cameras came from watching Dan Shiffman’s Muybridge tutorial and thinking about an interesting transition.

takos-mask

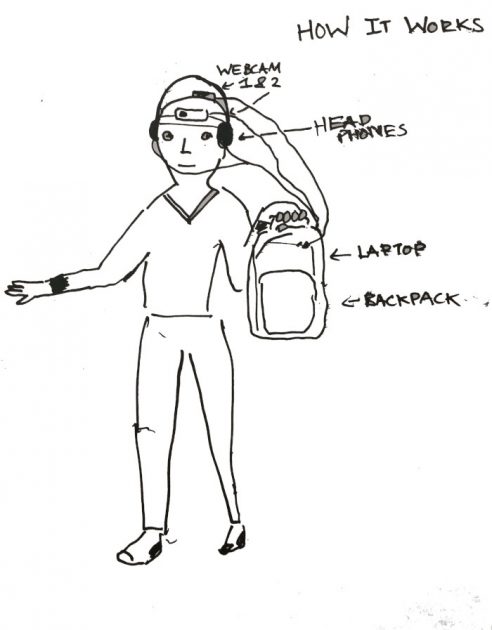

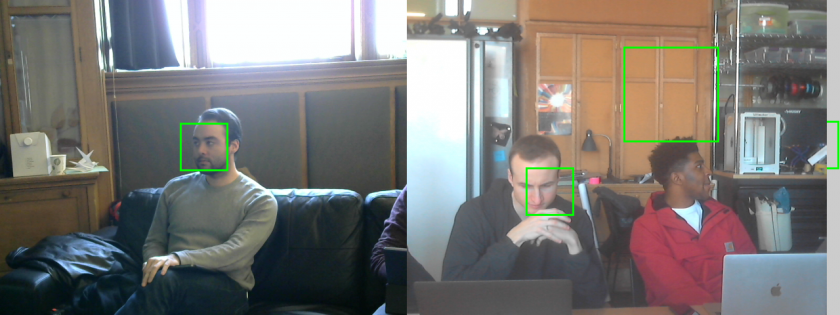

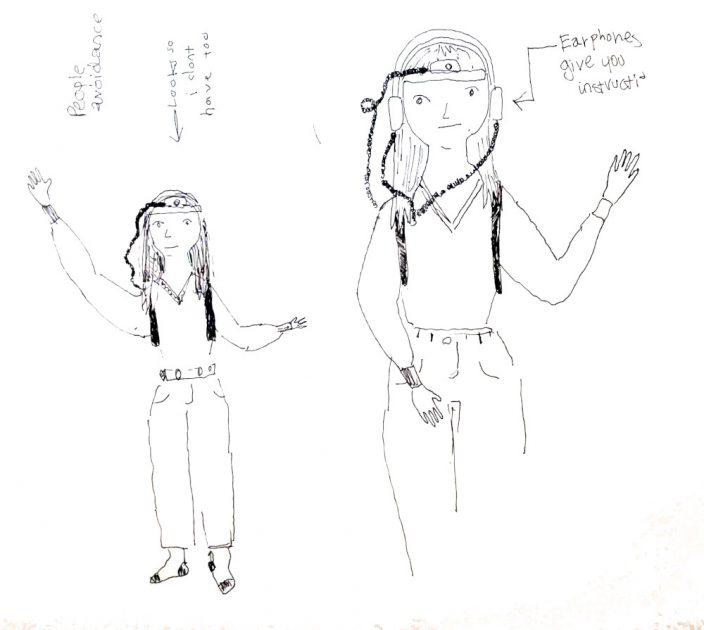

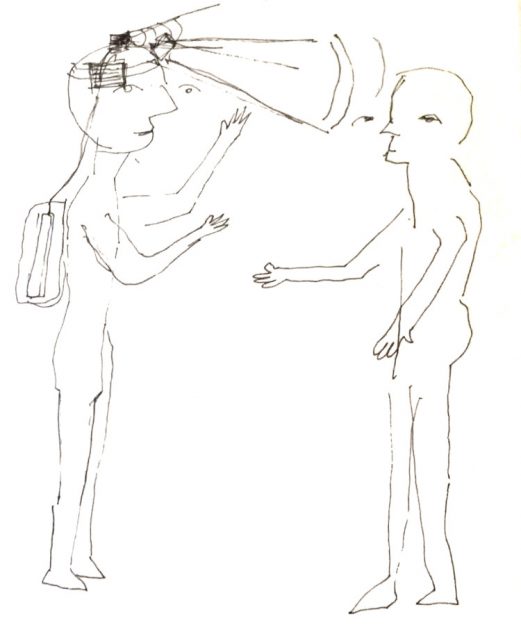

My mask takes the form of a wearable array of devices that notifies you of the presence of other people either in front of or behind you, and forces you to be constantly aware of your environment.

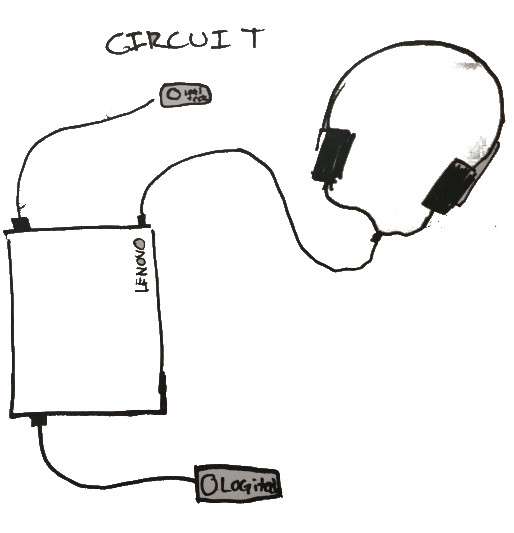

I hooked up two webcams and a set of earphones to my laptop, which the user carries around in my backpack. The user must wear a band around their forehead to support the webcams, with one placed above their face and the other on the back of their head, as well as a set of headphones, all of which are attached to the laptop secured on the user’s back.

Both webcams are Logitech webcams: one is from STUDIO and one is mine. The band is a re-purposed HRT sleep mask acquired at a job-fair-like event. The laptop is mine. The backpack is mine.

Proof of function at very low framerate:

“there is someone behind you” “there is someone in front of you”

Credits!

For my voices, I used NaturalReader’s Premium English (US) Susan voice and Chrome Audio Capture.

there is someone in front of you:

there is someone behind you:

there is no one here (unused):

Thanks to Connie and Lukas for being my actors and helping me with documentation.

ideation sketches

dechoes – mask

Performative Mask

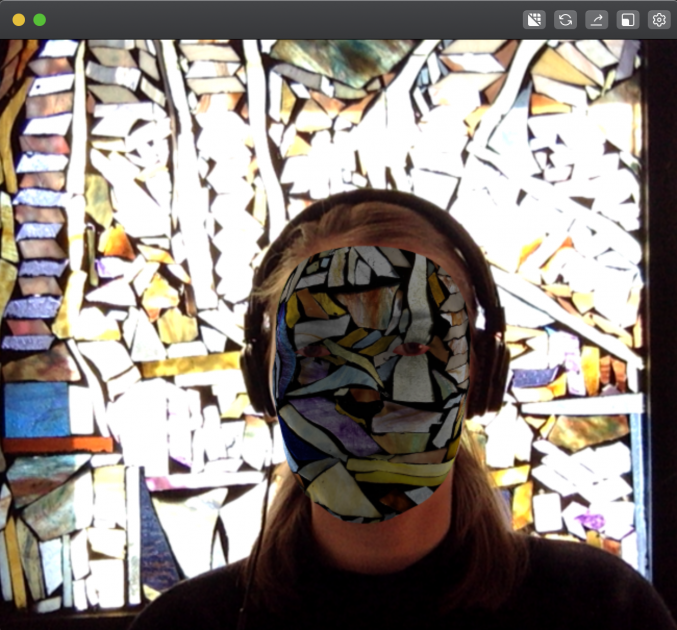

Overview. From the start, I was interested in doing some work with music videos, and adapted this assignment to my own constraints. I’ve been working with two bands from Montréal on a potential music video and album cover collaboration — this assignment felt like an opportunity to prototype some visual ideas. For the sake of this warm-up exercise, I worked with the song A Stone is a Stone by Helena Deland. She sings about goodbyes and core issues in either a person’s character or in a relationship.

The lyrics made me think of permanence. When you go through life with a specific idea, character trait or person for a long time, you sometimes lose track of their presence. I was interested in working with the idea of fading into one’s environment, having been somewhere or someone for so long that you become one with it. After a while, you can barely be distinguished from the space, metaphorically or physically, that you evolve in.

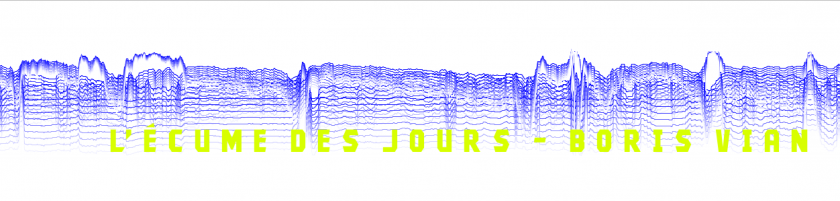

Ideation Process. My process was hectic, as I had a very hard time thinking of an idea that got me excited. I started by wanting to make an app which erased your face any time you smiled awkwardly in an uncomfortable situation, but decided this kind of work had been overdone in the wearables world. Then I decided to make a language grapher using mouth movements to have a visual representation of texts which had a “Chloe” as the protagonist (as a way to study the word, how other people perceive it in literature, and how that influences personal character). I went through with this idea, and hated it by the end, so I decided to start over from scratch. Hence, the current idea described in the overview.

The hoodies that allow you to hide from uncomfortable situations.

A visualization of the introduction of the character “Chloé” in the novel ‘L’Ecume Des Jours’ by Boris Vian.

Physical Process. I essentially worked using the environment as a way to inform the content, creating bidirectionally. I researched a couple of backgrounds, photographed them and performed in front of them with my nice camera. I then applied a simple mapping filter of the aforementioned image to the prerecorded video. This project didn’t actually require any scripting, since I chose to do it with Spark AR. I originally started doing it in Processing but wasn’t happy with the way it looked. Spark AR had a more advanced mesh tracking option, which allowed for warping around face features, so I stuck with it.

Testing patterns and functionality.

I proceeded to film a total of 6 scenes, selected by texture and color. I wore clothes that fit the background scheme, and photographed each material before filming myself lip-synching with a DSLR. I then converted the videos to a smaller format (which was the only import option, sorry for the loss of quality) and layered the mask in Spark AR. The final edit produced the video below, which only features a small chunk of the song.

(I removed the video from youtube, sorry Golan. I left you a gif though)

Improvements. With more time, I probably would have:

1) found a way to also include the ears and the neck, which look silly without the mask layer

2) reconsidered my idea, because I felt like the overall effect was uncomfortable and funny, while the song really isn’t

3) found a way to change the scale of my map image, because it didn’t fade as much into the background as I had wanted

tli-mask

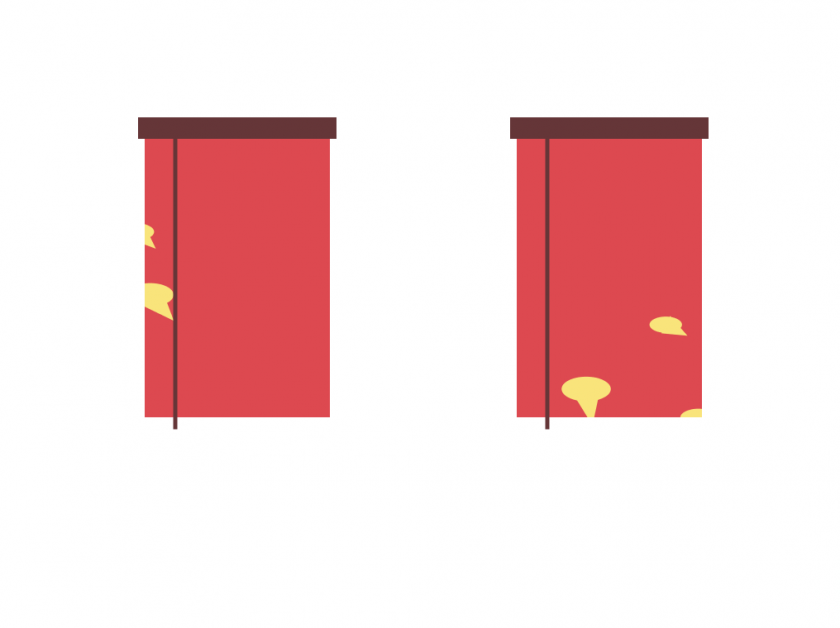

Windows is a (mostly) cozy view of two windows whose blinds open and close based on your blinks. Outside, you can see the birds flying by.

A couple of things prompted this idea: a desire to explore Processing’s API in conjunction with FaceOSC, a play on the word “mask” as the object that covers a face and “mask” as the image processing tool, and a take on that cheesy saying “eyes are the windows to the soul”. I set out to create something minimalistic and cozy like all those pleasant app store games, but as I worked through implementation details I discovered an interesting tension between the demands that the software makes of the viewer and its desire to be cozy. I decided to emphasize this inconsistency by introducing sudden and uncomfortable changes when the viewer can no longer be detected by the camera. I didn’t plan for an involved concept behind this work, but perhaps there’s a reading in there about how we perceive our digital infrastructure and how we can become desensitized (or shall I say blinded ( ͡° ͜ʖ ͡°)) to the odd demands it may make of us.

Besides the conceptual reading, I’m pleased at how satisfying the interaction with the blinds is. You can see the gaps between the blinds as they fall down, and the cord to the side rises and falls opposite the movement of the blinds. For this reason, my performance is mostly just me having fun blinking at the program when it works and bobbing my head at the camera when it doesn’t.
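The blink-to-blinds mapping described above can be sketched roughly as follows. This is not the sketch's actual code: it assumes the face tracker reports a single eye-openness number per frame, that closed eyes lower the blinds, and the threshold and easing values are made up for illustration.

```python
# Hypothetical mapping from an eye-openness reading to blind and cord positions.
# The thresholds (closed_at, open_at) and the easing factor are assumptions.

def blind_target(eye_openness, closed_at=2.5, open_at=3.5):
    """Return blind coverage in [0, 1]; 1.0 means fully lowered (eyes closed)."""
    t = (eye_openness - closed_at) / (open_at - closed_at)
    t = max(0.0, min(1.0, t))  # clamp readings outside the calibrated range
    return 1.0 - t


def step(current, target, ease=0.2):
    """Move a fraction of the way toward the target each frame, so the blinds
    glide instead of snapping."""
    return current + (target - current) * ease


def cord_position(blind):
    """The cord travels opposite to the blinds: it is up when they are down."""
    return 1.0 - blind
```

Calling `step` once per frame with the latest `blind_target` gives the falling motion, and drawing the cord at `cord_position` keeps it moving in the opposite direction.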

arialy-mask

This project uses p5.js and ClmTracker, and builds off of Kyle McDonald’s p5.js + ClmTracker starter code (thank you!). The colors of the masks are generated with color-scheme.js.

Instability comes along with much of today’s open source face tracking software. What if that was a key feature, rather than a fundamental error? I made a mask that changes every time it loses track of the face.
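One way to express "change the mask whenever tracking is lost" is a small state machine that watches for the moment tracking drops. The sketch below is an illustration only; `MaskChanger` and `make_mask` are hypothetical names, and the project's real logic may differ.

```python
# Hypothetical sketch: swap in a new mask each time face tracking is lost.

class MaskChanger:
    def __init__(self, make_mask):
        # make_mask is any zero-argument function that returns a fresh mask.
        self.make_mask = make_mask
        self.mask = make_mask()
        self.was_tracking = False

    def update(self, tracking):
        """Call once per frame with True if a face is currently tracked.

        Returns the mask to draw, or None while no face is visible.
        """
        if self.was_tracking and not tracking:
            # Tracking just dropped: prepare a new mask for the next appearance.
            self.mask = self.make_mask()
        self.was_tracking = tracking
        return self.mask if tracking else None
```

Because the new mask is generated at the moment of loss, covering the face with a hand and uncovering it again is enough to trigger a change.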

My original inspiration for this project came from the Chinese Opera mask changers.

The mask changing here is playful and very entertaining! Though the magic partially comes from the performance taking place in the physical world, I wanted to bring this sort of energy into the digital space with my mask.

I first set to generating masks. I used the color-scheme.js library to get triadic color palettes, and then made a base mask with accent shapes on top.
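For readers unfamiliar with triadic palettes, the textbook definition is three hues spaced evenly around the color wheel. The sketch below shows that definition using only the Python standard library; it is not the color-scheme.js API, and that library may space its hues differently.

```python
import colorsys


def triadic(hue_deg, saturation=0.8, value=0.9):
    """Return three RGB colors (0-255 tuples) whose hues sit 120 degrees apart.

    The saturation and value defaults are arbitrary choices for illustration.
    """
    colors = []
    for offset in (0, 120, 240):
        h = ((hue_deg + offset) % 360) / 360.0  # colorsys expects hue in [0, 1)
        r, g, b = colorsys.hsv_to_rgb(h, saturation, value)
        colors.append((round(r * 255), round(g * 255), round(b * 255)))
    return colors
```

One color could serve as the base mask and the other two as accent shapes, which matches the base-plus-accents structure described above.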

Somewhat similar to the Chinese Opera mask changers, I can hide my face and reappear with a new mask (while trying to channel at least a fraction of the energy of those professionals!). Unique to this digital space are the more peculiar methods of intentionally changing masks and the manipulation of them in the process.

jamodei-mask

On Being: Dorito Dreaming

This project is an attempt to pay a sublime, transubstantiative homage to a product with which I have a deep connection. In this case, for me, it was Doritos. I was inspired to consider the concept of a mask in a more relational sense. We are asked to embody the values of many products many times per day – and instead of letting that subtextual, marketing interpellation happen in the background, I wanted to embrace it, to own it, to become it. To sync up with my material reality in hopes that I can find space to make choices among the many, many, many consumption-based asks that hit me in invisible waves. To create a visually realized version of the masks we are asked to wear every time we look at the bag of Doritos, or the Starbucks coffee, the Crest toothpaste, or the Instagram logo (etc.).

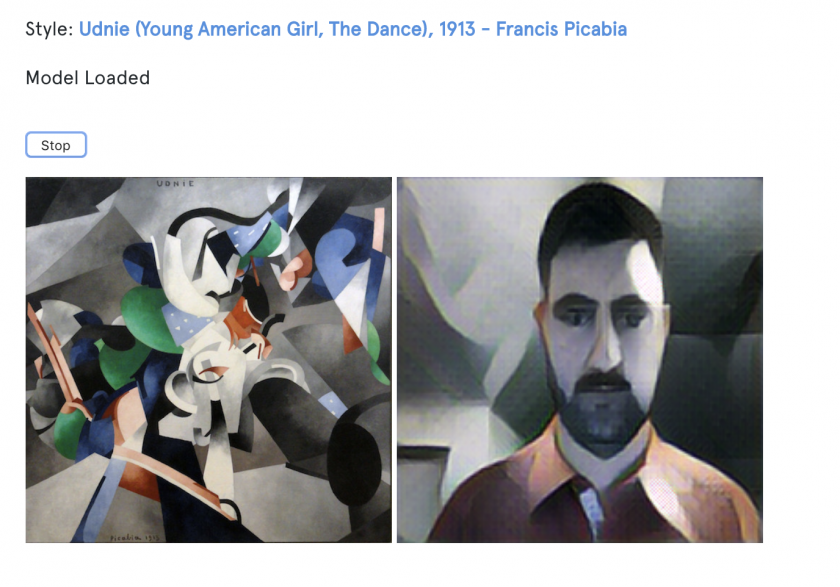

Research-wise, I was initially interested in face-mapping or face-swapping technologies. A deep dive eventually led me to find out about machine learning-created ‘style transfers,’ which essentially train a neural network to repurpose an image into the style of another input image. I found an excellent tutorial that demonstrated how to do style transfer for web cameras. I trained the model on GPUs in the cloud via Paperspace’s Gradient. Then, the model ran in my browser with ml5.js and p5.js. I was interested in using this technique to achieve the homage described above, as I wanted to use a live camera to capture reality as a Dorito (bag) might.

This is the image I trained the model on for the ‘style transfer.’

Once I had the style-transfer camera complete, I began to look for a performative starting point. I was inspired in thinking about re-concatenating the world with a performance style similar to Dadaist Hugo Ball’s ‘Elefantenkarawane’ in simplicity, costume and direct confrontation with reality. I was using my software to re-configure reality capture, and I wanted to work in a performative tradition that also tried to configure reality for some sort of meaningful understanding (even if in the absurd). In On Being: Dorito Dreaming, to take the breakdown of language a step further and to remain focused on material embodiment, I created an ASMR (Autonomous sensory meridian response)-inspired soundtrack for the performance composed of sounds created by consuming Doritos and carefully observing the package next to a microphone.

I am interested in continuing this process with other people, and creating a series of vignettes of people trying to embody a product of their choice.

Below are some stills from the film studio and the costume/makeup construction:

Hugo Ball performing Elefantenkarawane, 1916

In my research, it seemed that a lot of the live-camera style transferring had been done with famous painting styles. Example below:

As far as getting the model trained, then up and running, I want to thank Jeena and Aman for helping me get through some technical issues/questions I was having in this stage of the project!

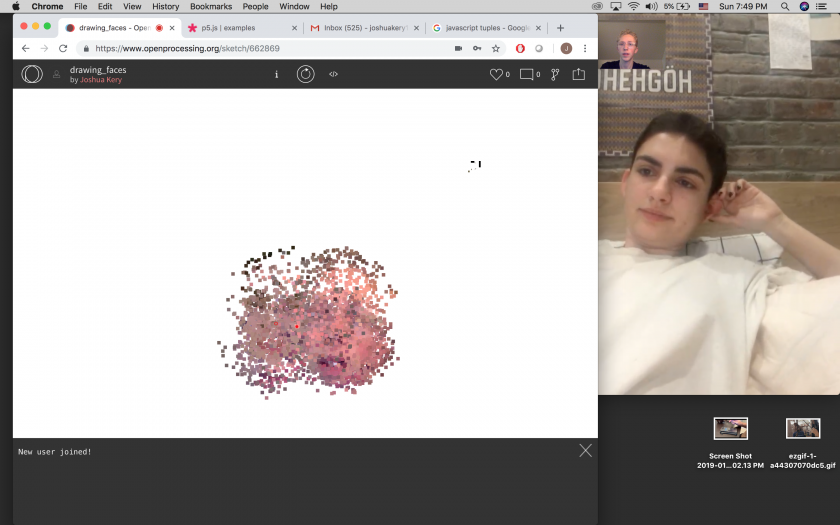

kerjos-mask

This project exchanges pixel information with your friend (in place of or in conjunction with video chat) and slowly draws your faces to the screen.

I’ve been thinking about different ways of intervening in the traditional videochat setup, and how the screen, camera, and computer as mediators can improve and complicate our connection with one another.

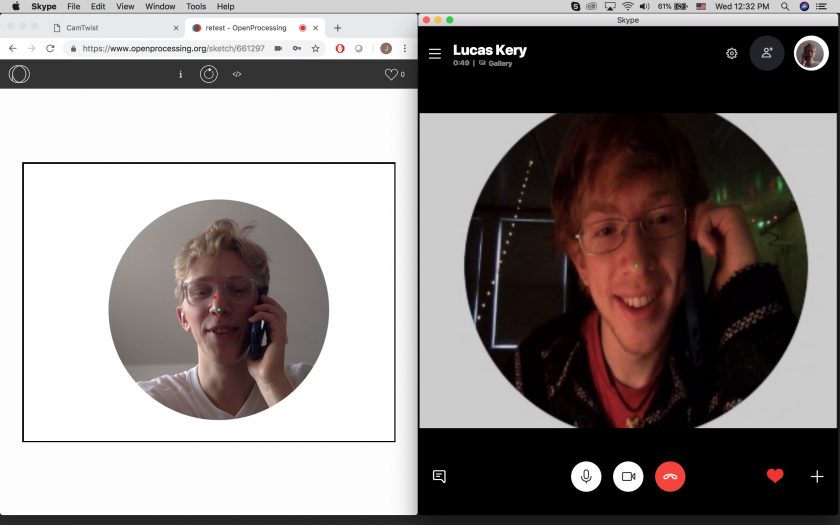

Initially, I was developing a mask — the Photoshop kind — that hid your friend from you during a Skype call until you aligned noses with respect to your screen.

The setup for this exchange involved asking my brother to download CamTwist and Skype and configure them for the OpenProcessing sketch. Even after all that, the results were very laggy. In a way, it was a success, because I certainly had made our communication more complicated.

I moved on to this drawing project because I thought it was a bit more performative. In the current state, the program runs very slowly as it draws you and your friend’s faces to the screen. For this reason, it requires both you and your friend to hold still for a few minutes each time it renders your face. Again the communication is complicated, this time for the sake of a sort of drawing.

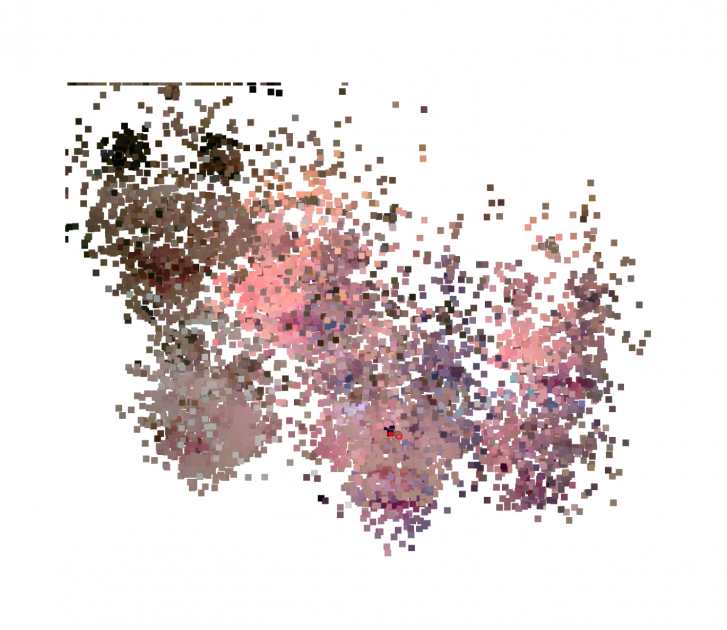

This program uses ml5.js to find the position of your eyes and nose, and approximates other facial features from them. It prioritizes selecting pixel values from the areas around these features when drawing.
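Prioritizing pixels near facial features can be approximated with weighted random sampling. The sketch below is only a guess at the general idea: the function name, the 80% focus probability, and the Gaussian spread are all assumptions, not values from the project.

```python
import random


def pick_pixel(features, width, height, rng, focus=0.8, spread=20.0):
    """Choose the next pixel to draw, favoring areas around feature points.

    features: list of (x, y) feature positions (eyes, nose, estimated others).
    rng: a random.Random instance, passed in so results can be reproduced.
    focus: assumed probability of sampling near a feature instead of anywhere.
    spread: assumed standard deviation, in pixels, of the cluster per feature.
    """
    if features and rng.random() < focus:
        fx, fy = rng.choice(features)
        x = rng.gauss(fx, spread)
        y = rng.gauss(fy, spread)
    else:
        # Occasionally sample anywhere so the rest of the frame still fills in.
        x = rng.uniform(0, width - 1)
        y = rng.uniform(0, height - 1)
    # Clamp to the image so samples near the border stay valid.
    x = min(max(int(round(x)), 0), width - 1)
    y = min(max(int(round(y)), 0), height - 1)
    return x, y
```

With this kind of sampling the eyes and nose resolve first while the background fills in slowly, which fits the slow, feature-first drawing described above.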